From the Desk of Gus Mueller


As you can tell by the title, this month’s 20Q is about language development. As I was thinking about what I might write in my introductory comments, I remembered something I had read recently about the language of earthworms (yes, earthworms). So I quickly set about telling you all how earthworms have a language and how they use it to communicate. And of course, armed with all this information about earthworm language, I couldn’t resist tossing out a few comments that one earthworm might make to another. All in all, when it was finished, I thought it was a pretty clever intro piece.


When our Editor-in-Chief Carolyn Smaka reviewed my little blurb, she kindly pointed out that language development in children, and the role of the audiologist, is a pretty big deal. Something that needs to be taken seriously. Probably not something that should be even indirectly trivialized with earthworm stories. She’s right of course. It is a big deal. So if you want to learn about the language of earthworms, you’ll have to go elsewhere. If human language development is your primary interest, read on.

We know that hearing loss can significantly impact language development, and that audiologists play a huge role in ensuring that the auditory stage is set for language development to occur. This begins with early identification of hearing loss and continues with the fitting of appropriate hearing aids or cochlear implants when hearing loss is detected. Fitting also includes careful programming of the instruments and verification of the aided signals, to ensure that audibility of speech has been maximized for a given child.

Given the close tie between hearing and language development, you might think that this general area is a common research track for audiologists. It isn’t. But we did find an audiologist whose professional interests have followed this path. Derek Stiles, PhD, is an assistant professor at Rush University in Chicago, and Director of the Rush Child Hearing Lab. Dr. Stiles’ research today is a continuation of his work at the University of Iowa, where he studied the relationships between speech audibility, working memory, and vocabulary in children with hearing loss.

In addition to his numerous research projects, Derek continues to hone his pediatric clinical skills with an active patient caseload, and he has plenty of opportunities to observe language development firsthand at home each evening with his twin toddlers. He is also involved with the Rush AuD program, teaching classes and directing student research projects. We thank him for providing us with some key insights here at 20Q into the critical role of the audiologist in language development.

Gus Mueller, PhD

Contributing Editor

February 2014

To browse the complete collection of 20Q with Gus Mueller articles, please visit www.audiologyonline.com/20Q

20Q: The Audiologist as Gatekeeper for Language and Cognition

Derek Stiles

1. I know you’re an audiologist, and I’ve heard that you also know a few things about language development?

Well, I think any audiologist who works frequently with children also deals with language. One of the most important jobs of the pediatric audiologist is to reduce the impact of a child’s hearing loss on their language development. Children with hearing loss are less equipped to ‘fill in the gaps’ of speech and cannot compensate for decreased speech audibility the way that adults might be able to. Fortunately, there are many tools available to audiologists to measure and improve aided speech audibility. We are still learning more about the effects of limited audibility on other aspects of child development. I’ve been looking at vocabulary development in children with hearing loss. This includes the number of words a child knows as well as the other skills and processes that a child with hearing loss might uniquely use to support learning new words.

2. Sounds interesting. I would guess that vocabulary development differs between children with normal hearing and children with hearing loss?

It certainly does. The first thing we see is that the vocabulary sizes of children with hearing loss are typically smaller (Moeller, 2011; Stiles, McGregor, & Bentler, 2012). We also see that the depth of understanding is not as strong as in children with normal hearing. So the mental dictionary of a child with hearing loss is less robust than that of a matched peer with normal hearing. The other thing we see is that children with hearing loss are slower to learn new words incidentally than children with normal hearing (Pittman, 2008).

3. Why do we see these differences? Is it simply an audibility thing?

That’s an area we’re trying to understand better. It is more than just audibility. Hearing loss reduces the number of meaningful opportunities to learn new words. For example, let’s say a student is in a classroom doing a group activity on nature. As the children are working, the teacher introduces some new vocabulary. A child with normal hearing may be able to pick up the new vocabulary despite the noisy activity, whereas a child with hearing loss may not be able to effectively extract the teacher’s voice from the background noise. That is one less meaningful learning opportunity for the child with hearing loss. These lost opportunities add up over time, resulting in a smaller vocabulary. In the scenario I just described, we would expect the teacher to be using an FM system to protect the word-learning opportunity. However, many word-learning opportunities occur outside of school in unstructured environments and may be inaccessible to the child with hearing loss.

4. You mentioned that there are other skills and processes that might support word learning in children with hearing loss. Such as?

One area I am interested in is working memory. One can describe working memory as that part of cognition that is active during mental manipulations. For example, reciting your grocery list while driving to the market involves working memory. Comprehension of spoken language itself depends on memory (Frankish, 1996). Speech is essentially sequential: a string of phonemes forms a word, and a string of words forms a sentence. Speech comprehension requires maintenance of words heard earlier as more words are still arriving. If you’ve forgotten the beginning of a sentence by the time the end of the sentence comes around, then the meaning of the sentence is easily lost.

Working memory has also been implicated in word learning. When a child is exposed to a new word, he must integrate information from the visual field – the possible referents of the new word – and the auditory input – the phonological sequence of the new word. Keeping the phonological information active while the referent is selected and matched to the new word may involve working memory (Gathercole & Baddeley, 1993).

5. I think I’m following you, but could you give me an example of how this would work?

Sure. Let’s say a child hears the utterance, “Look at that yellow budgie”. If she’s never heard the word budgie before, she needs to link that new word to the object in the environment that is the right match. If she’s forgotten “budgie” by the time she’s figured out the person is talking about a yellow bird, then she was not able to profit from that word learning opportunity.

There is research coming out of Indiana University showing that hearing influences the development of working memory. Working memory capacity of profoundly deaf individuals and later-implanted children is smaller than that of hearing children (Burkholder & Pisoni, 2003; Pisoni & Cleary, 2003; Pisoni & Geers, 2000). There appears to be a critical period in early childhood where exposure to spoken language supports development of working memory. In my research, children with mild to moderate hearing loss did not have the same deficits in working memory, so perhaps even some residual audibility in early childhood is enough to support development of working memory (Stiles, McGregor, & Bentler, 2012).

6. As audiologists, do we really need to concern ourselves with these broader cognitive processes?

Children with hearing loss may have to do more mental work than hearing children when it comes to processing auditory information. The amount of resources being used at any moment is called the cognitive load. Tasks that we perform automatically demand a small cognitive load. Tasks that are complicated, requiring more mental processing, demand a high cognitive load. In general, decoding speech is low demand – we do it automatically. However, if we are trying to have a conversation with someone who has a thick Armenian accent in the front row of a Phish concert while multicolored glow sticks are flying through the air, the demand on our auditory and cognitive systems to understand our conversation partner increases substantially. There was recently a 20Q with Ben Hornsby that discussed this very topic. Listening fatigue is common, but what we are concerned about is whether that fatigue is hitting children with hearing loss harder or sooner. Decoding speech in the presence of hearing loss increases cognitive load, so speech understanding may not be as automatic. This would make learning more effortful for children with hearing loss, whether in an everyday environment, or in a classroom setting. So what strategies are children using to compensate for it?

7. Sounds good, but you didn’t really answer my question.

Okay—the answer is “yes.” Something I frequently hear from parents of children with hearing loss is confusion over whether the behaviors their children exhibit are a direct effect of limited auditory perception, or a sign of a separate developmental problem. Audiologists are not equipped to answer that question unless they are familiar with these processes. Experienced pediatric audiologists may develop a gut sense of what is typical and atypical behavior for a child with hearing loss over time, but to be rigorous, we need scientific evidence to back up these intuitions.

8. Are there ways children with hearing loss might be compensating?

Definitely. Some of these are behavioral strategies. Children might use withdrawal or domination of the conversation to avoid embarrassment from communication breakdowns (Kluwin, Blennerhassett, & Sweet, 1990).

I’m particularly interested in how children might be using vision to compensate. It’s been shown that speechreading develops earlier in children with hearing loss than in children with normal hearing. This tells me that children with hearing loss are making speechreading a part of their communication toolbox from an early age. Children with normal hearing eventually get to the point where they can obtain the benefit from visual cues, but children with hearing loss get there first. The exact mechanism for this, however, is still not known.

9. But you have some ideas?

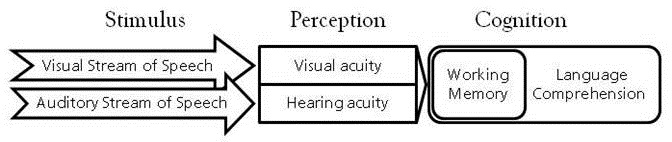

Some. My lab has found a relationship between working memory and audiovisual integration occurring in children with hearing loss that doesn’t occur in children with normal hearing. I think this is because children with hearing loss are using the visual stream of speech more actively for comprehension and so it engages with executive processes like working memory differently than for children with normal hearing. We are only beginning to look at this, but it may have implications for the education and habilitation of children with hearing loss. Figure 1 illustrates how visual and auditory information, as well as working memory, contribute to language comprehension.

Figure 1. Both visual and auditory information are used for language comprehension. The fidelity of this information is affected by the child’s vision and hearing status. As perceptual acuity declines, language comprehension becomes more difficult.

Our next project is to investigate how speechreading supports learning new words.

10. Is the goal of your work to determine how children with hearing loss are different and then try to make them “more typical?”

Certainly it is important to understand the developmental differences between children with hearing loss and their peers with normal hearing. I don’t think it’s realistic to say we can make a child with hearing loss ‘typical’. I think when the strengths and weaknesses of a child with hearing loss are identified, then we should teach the child how to take advantage of their strengths to compensate. Eventually I would like to find out what strategies the higher performing children are using and then teach them to the lower performing children to see if it helps. For instance, does the visual looking pattern (e.g., how much they are focusing on the speaker’s face versus the learning material) vary between higher and lower performing children with hearing loss? If so, then habilitation could include teaching children strategies for quickly shifting their attention to the most important visual cues in the environment.

11. Does technology play a role in this?

That’s an interesting question. In general, we’ve had two distinct groups of kids with separate auditory experiences: those using hearing aids and those using cochlear implants. The spectral and temporal information that children receive from each type of technology is different. That is changing for a group of children receiving hybrid systems, who will be able to benefit from both acoustic and electric hearing. I am hoping that these combined benefits will yield smaller deficits in language development.

Thinking even further ahead, I wonder what language development will be like for children with regenerated hair cells? Maybe one day I’ll have an answer to that question!

12. Are there other factors that we should consider regarding these children?

It helps to get to know about the child’s family and daily environments. Certainly a rich learning environment is important for children with hearing loss, just as it is for children with normal hearing. Socioeconomic status plays a part in this as well. Children with hearing loss living in poverty are particularly at risk for delays in language development. Also, the level of residual hearing is an important factor. Children with milder losses tend to fare better than children with severe losses.

13. You’re referring to their pure-tone average?

Not necessarily. I think the pure-tone average is useful for indicating the quietest levels a child can hear, but it doesn’t directly tell us about the child’s access to speech. A measure like the Speech Intelligibility Index (SII) is a better indicator of a child’s access to speech. For example, consider a child with a precipitously sloping hearing loss above 2000 Hz. A standard three-frequency PTA (500, 1000, and 2000 Hz) would look as good as that of a child with normal hearing, because the thresholds above 2000 Hz are not included in the average. Yet this child’s SII would be only 0.63, a value that we know can be handicapping.
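The PTA arithmetic behind this example is simple enough to show directly. In the minimal Python sketch below, the audiogram values are hypothetical (normal through 2000 Hz, then dropping steeply), and the helper name `three_frequency_pta` is illustrative rather than taken from any clinical software. The point is only that the 4000 and 8000 Hz thresholds never enter the average.

```python
# Hypothetical audiogram: thresholds in dB HL, near-normal through 2000 Hz,
# then sloping precipitously (values invented for illustration only).
audiogram = {250: 10, 500: 10, 1000: 15, 2000: 20, 4000: 70, 8000: 80}

# The standard three-frequency PTA uses only these frequencies.
PTA_FREQUENCIES = (500, 1000, 2000)

def three_frequency_pta(thresholds):
    """Mean threshold (dB HL) at 500, 1000, and 2000 Hz."""
    return sum(thresholds[f] for f in PTA_FREQUENCIES) / len(PTA_FREQUENCIES)

pta = three_frequency_pta(audiogram)

# For contrast, the mean of the two high-frequency thresholds the PTA ignores.
high_freq_mean = (audiogram[4000] + audiogram[8000]) / 2

print(f"3-frequency PTA: {pta:.1f} dB HL")
print(f"Mean of 4000/8000 Hz thresholds: {high_freq_mean:.1f} dB HL")
```

A PTA of 15 dB HL sits alongside a 75 dB HL average in the two highest bands, which is why a band-weighted audibility measure such as the SII tells a different story.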

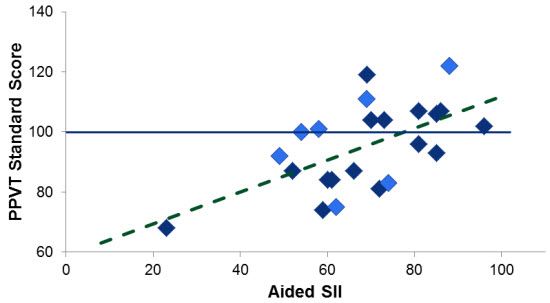

An additional benefit of the SII is that it can take into account the effects of a child’s hearing aid on conversational speech. In my research, I found a significant relationship between a child’s aided speech audibility and their vocabulary size. Children whose aided SII was below approximately 0.60 were likely to have vocabulary scores roughly one standard deviation below the normative mean (see Figure 2).
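At its core, the SII is a weighted sum: each frequency band contributes its importance weight multiplied by the proportion of the speech dynamic range in that band that is audible. The Python sketch below implements only that simplified core, assuming a 30 dB speech range with peaks 15 dB above each band's average speech level. It omits the masking and level-distortion corrections in ANSI S3.5-1997, and the band-importance weights, thresholds, speech levels, and hearing aid gains are all hypothetical placeholders, not the standard's tables or data from this article. It compares unaided and aided speech against the same thresholds and flags results below the roughly 0.60 value mentioned above.

```python
# Simplified SII sketch. All weights and levels are hypothetical placeholders,
# NOT values from ANSI S3.5-1997 or from the article.

# Hypothetical octave-band importance weights (they sum to 1.0).
BAND_IMPORTANCE = {250: 0.10, 500: 0.15, 1000: 0.25, 2000: 0.25, 4000: 0.20, 8000: 0.05}

def band_audibility(speech_db, threshold_db):
    """Proportion of a 30 dB speech range (peaks assumed 15 dB above the
    band's average speech level) lying above threshold, clipped to 0..1.
    Both inputs are assumed to be in the same units (dB SPL in the band)."""
    proportion = (speech_db + 15 - threshold_db) / 30
    return min(1.0, max(0.0, proportion))

def simplified_sii(speech, thresholds):
    """Importance-weighted sum of band audibilities
    (no masking or level-distortion corrections)."""
    return sum(weight * band_audibility(speech[f], thresholds[f])
               for f, weight in BAND_IMPORTANCE.items())

# Hypothetical child thresholds and average speech levels, dB SPL per band.
thresholds = {250: 30, 500: 35, 1000: 45, 2000: 55, 4000: 80, 8000: 85}
unaided = {250: 55, 500: 55, 1000: 50, 2000: 45, 4000: 40, 8000: 35}
# Hypothetical hearing aid gain per band, dB.
gain = {250: 0, 500: 5, 1000: 10, 2000: 20, 4000: 35, 8000: 20}
aided = {f: unaided[f] + gain[f] for f in unaided}

for label, speech in (("unaided", unaided), ("aided", aided)):
    sii = simplified_sii(speech, thresholds)
    note = " (below ~0.60)" if sii < 0.60 else ""
    print(f"{label}: SII = {sii:.2f}{note}")
```

With these made-up numbers, the unaided value comes out near 0.46 and the aided value near 0.78. The 8000 Hz band stays inaudible even with amplification, a small illustration of how a well-fit hearing aid can still leave part of the speech signal out of reach.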

Figure 2. Receptive vocabulary score (PPVT) as a function of aided speech intelligibility index (SII). Data compiled from separate studies of children in Iowa and Illinois. A standard score on the PPVT between 85 and 115 is considered normal.

14. I see you’re now talking about the aided SII. That means it’s pretty critical that we program the hearing aid correctly?

It’s extremely critical. Providing the appropriate aided audibility has the potential to change the child’s life. Unfortunately, this critical component of hearing aid fitting doesn’t receive the attention it deserves. As pointed out in last month’s 20Q with Gus Mueller, the only way to ensure appropriate audibility is to conduct probe-microphone measures. Relying on manufacturers’ default fittings or computer simulations is not a reasonable alternative; in fact, these approaches usually result in insufficient audibility.

15. How can I relate the big picture of all this to colleagues, students and parents?

I like to go back to a social cognition framework (Frith, 2008). It’s fascinating. If you consider that the essence of sensory input can be reduced to the level of photons hitting a retina, molecules bouncing against an eardrum, etc., then the depth of meaning humans learn to ascribe to combinations of fundamentally simple stimuli is amazing. An acoustic example would be how a change in modulation rate and formant frequencies of the sounds emanating from a person’s mouth affects how you understand his or her meaning. A parent can say their child’s name affectionately, or in a way that means ‘stop what you are doing now’. The phonological sequence is the same, but the suprasegmental information is not. The meaning of spoken speech is carried at many levels. Because so much sociolinguistic information is carried acoustically, hearing loss affects not only language development but also access to social information.

It’s really a matter of how these different channels of information (auditory, visual, etc.) combine to form a complete picture of the world, and how this picture gets formed by someone who doesn’t get all the channels. I think the impact is most clearly exemplified in the case of someone who experiences a sudden hearing loss. An individual is used to interacting with the world – this combination of sensory stimuli – in a certain way. Suddenly, there is a loss of hearing and one of the routes the brain uses to access information about the world is no longer available. That individual now has to adapt to a new distribution of incoming stimuli. In other words, when you can’t trust your ears consistently, do you learn to trust your eyes more? This is why I think that early development of speechreading in children with hearing loss is so interesting. It’s evidence that children with hearing loss trust their eyes more.

16. So you’re saying that the distribution of reliable sensory input is different for children with hearing loss and this can affect their social development?

That’s what I believe. Let’s take theory of mind, an early milestone in social development. Theory of mind refers to a child’s understanding that other people do not see the world the same way as they do and may not have the same information that they have. For example, if Susie is shown a box of cookies and asked what is in the box, Susie will answer, “cookies.” The examiner then opens the box and shows Susie that the box is filled with crayons rather than cookies. The examiner then asks Susie what Leo (who has not seen the inside of the box) will think is in there. If Susie has not yet developed theory of mind, she will say that Leo thinks that crayons are in there because, to her, everyone knows what she knows. In contrast, a child with theory of mind would indicate that Leo will think that there are cookies in the box. Theory of mind usually develops around 4 years of age.

Deaf children who have deaf parents who sign to them have reliable sensory input – both the sensory organ used for communication and the fluency of the language model are reliable. These children perform like children with normal hearing on theory of mind tasks (Schick, de Villiers, de Villiers, & Hoffmeister, 2007). Kids seem to do best when the language modality is matched to the intact sensory organ. Deaf children of hearing parents raised either orally or using sign language do not perform as well on theory of mind tasks. These children’s exposure to language is not as intact, either because of poor audibility of spoken speech or poor language modeling by nonfluent parents. Thus we are seeing that hearing loss affects social development even before children start school. Because the cultural demands for spoken language are incredibly strong, we have to do our best to give children with hearing loss as much audibility as possible.

17. Interesting. Can’t say I’ve thought much about theory of mind until now. So do you have some clinical tips regarding what I should be doing when I work with children with hearing loss?

I’ll go back to what I mentioned earlier. As audiologists, one of the first things we can do is make sure our hearing aid fittings are the best they can be. Work by Andrea Pittman at ASU showed that the more bandwidth children are able to hear, the faster they can learn new words (Pittman, 2008). One of the most important jobs of a pediatric audiologist is to optimize speech audibility for a child with hearing loss. In early childhood, there needs to be frequent monitoring. Children’s ear canals grow quickly, and as the canal gets larger, the same hearing aid output produces less sound pressure at the tympanic membrane. Audiologists should plan to frequently evaluate the child’s real-ear response using probe-microphone measures to ensure optimal amplification throughout early childhood.

I think it is well understood in our field that even following intervention, the child is not hearing the same as a child with normal hearing. Audiologists need to consider the ramifications of that. How will any residual loss in speech audibility and fidelity following intervention affect language development, and in a broader sense, cognitive and socioemotional development? What additional interventions can help support this child to meet his or her full potential? What can parents do to help support their child’s language development? I am hoping my research contributes answers to these questions.

18. You keep mentioning audibility—what are the best ways to determine this?

Fortunately, there are many tools available to provide audiologists with audibility information for their patients with hearing aids. Anyone can download software that calculates the SII from https://sii.to. A more user-friendly tool is the SHARP software available from Boys Town (https://audres.org/rc/sharp/). Also certain manufacturers of hearing aid analyzers and audiometers provide a calculation of the SII. For aided audibility, probe-microphone measurements must be made, either directly, or through the application of real-ear to coupler difference (RECD) calculations. Because the sound pressure level of the output in the child’s ear canal is directly affected by the size and shape of their ear canal, these measurements need to be taken often as the child’s growth affects the ear canal acoustics.
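The RECD route mentioned above amounts to a per-frequency addition: the hearing aid's output measured in a 2-cc coupler, plus the child's real-ear to coupler difference, estimates the sound pressure level in that child's ear canal. The Python sketch below uses hypothetical coupler outputs and two hypothetical RECD sets (an earlier and a later visit) to illustrate why repeated measurement matters as the ear canal grows; none of these numbers come from the article, and the function name `predicted_real_ear_spl` is illustrative only.

```python
# Hypothetical 2-cc coupler output of a hearing aid, dB SPL, by frequency (Hz).
coupler_spl = {500: 95, 1000: 100, 2000: 105, 4000: 100}

# Hypothetical RECDs in dB. Smaller ear canals typically have larger RECDs,
# so these values shrink between visits as the canal grows.
recd_visit_1 = {500: 8, 1000: 10, 2000: 12, 4000: 16}
recd_visit_2 = {500: 5, 1000: 7, 2000: 8, 4000: 10}

def predicted_real_ear_spl(coupler, recd):
    """Estimated ear-canal SPL = coupler SPL + RECD, per frequency."""
    return {f: coupler[f] + recd[f] for f in coupler}

first = predicted_real_ear_spl(coupler_spl, recd_visit_1)
second = predicted_real_ear_spl(coupler_spl, recd_visit_2)

# Same hearing aid settings, less sound pressure at the later visit.
for f in coupler_spl:
    drop = first[f] - second[f]
    print(f"{f} Hz: {first[f]} -> {second[f]} dB SPL ({drop} dB less)")
```

Because the coupler output is unchanged, the entire drop comes from the change in RECD, which is the growth effect that frequent probe-microphone or RECD measurements are meant to catch.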

19. So you’re saying that I should calculate the aided SII for my patients, but then what?

Calculating the aided SII is a great start. I would like to see the SII become a part of conversations with speech-language pathologists or developmental therapists working with the child. Sometimes our allies in other fields are not aware of the limitations of amplification. It is important to educate them that, even in the best case scenario, the hearing aids may not provide complete access to the speech signal. For example, if I had appropriately fit a one-year-old toddler whose aided SII was less than 0.70, I would be sure to tell the therapists that he was at greater risk for language delays so they could keep close tabs on his development in this area.

20. How else can we support language development in children with hearing loss?

It is essential to understand and identify key language milestones so that you can meaningfully monitor a child’s development and make appropriate referrals when a child is not meeting those milestones. Audiologists working with children should have information about enriching the language environment available to parents. The website www.zerotothree.org is a good referral source for parents seeking downloadable information about early development including language. ASHA also has information on their website. Audiologists should also be aware of or facilitate peer support groups for parents of children with hearing loss.

Perhaps most importantly, I would like audiologists to remind themselves of the impact their service has on the children they treat. We need language to think. Because improved audibility leads to better spoken language outcomes and better language leads to stronger cognition, audiologists are extremely crucial in ensuring that children with hearing loss meet their full potential. If audiologists lose sight of that, they are missing an important part of their craft.

References

Burkholder, R.A., & Pisoni, D.B. (2003). Speech timing and working memory in profoundly deaf children after cochlear implantation. Journal of Experimental Child Psychology, 85(1), 63-88.

Frankish, C. (1996). Auditory short-term memory and the perception of speech. In S.E. Gathercole (Ed.), Models of short-term memory (pp. 179-207). East Sussex: Psychology Press.

Frith, C.D. (2008). Social cognition. Philosophical Transactions of the Royal Society of London, Series B, Biological Sciences, 363(1499), 2033-2039.

Gathercole, S.E., & Baddeley, A.D. (1993). Phonological working memory: A critical building block for reading development and vocabulary acquisition? European Journal of Psychology of Education, 8(3), 259-272.

Kluwin, T., Blennerhassett, L., & Sweet, C. (1990). The revision of an instrument to measure the capacity of hearing-impaired adolescents to cope. The Volta Review, 92(6), 283-291.

Moeller, M.P. (2011). Language development: New insights and persistent puzzles. Seminars in Hearing, 32(2), 172-181.

Pisoni, D.B., & Cleary, M. (2003). Measures of working memory span and verbal rehearsal speed in deaf children after cochlear implantation. Ear and Hearing, 24(1 Suppl.), 106S-120S.

Pisoni, D.B., & Geers, A.E. (2000). Working memory in deaf children with cochlear implants: Correlations between digit span and measures of spoken language processing. In B.J. Gantz, R.S. Tyler, & J.T. Rubinstein (Eds.), Seventh symposium on cochlear implants in children. St. Louis, MO: Annals Publishing Company.

Pittman, A.L. (2008). Short-term word-learning rate in children with normal hearing and children with hearing loss in limited and extended high-frequency bandwidths. Journal of Speech, Language, and Hearing Research, 51(3), 785-797.

Schick, B., de Villiers, P., de Villiers, J., & Hoffmeister, R. (2007). Language and theory of mind: A study of deaf children. Child Development, 78(2), 376-396.

Stiles, D.J., Bentler, R.A., & McGregor, K.K. (2012). The speech intelligibility index and the pure-tone average as predictors of lexical ability in children fit with hearing aids. Journal of Speech, Language, and Hearing Research, 55, 764-778.

Stiles, D.J., McGregor, K.K., & Bentler, R.A. (2012). Vocabulary and working memory in children fit with hearing aids. Journal of Speech, Language, and Hearing Research, 55(1), 154-167.

Cite this content as:

Stiles, D. (2014, February). 20Q: The audiologist as gatekeeper for language and cognition. AudiologyOnline, Article 12453. Retrieved from: https://www.audiologyonline.com