Introduction

We live in a complex world and are constantly bombarded by stimulation. Yet as humans we have a remarkable ability to sort through this onslaught and, automatically and effortlessly, make sense of the constant flow of events around us.

Our sensory systems function in such a way that we automatically and subconsciously use whatever information we can gather to organize the world around us into meaningful objects and events. We are normally not consciously aware of the individual auditory, visual, olfactory or proprioceptive stimuli that constantly bombard us. Rather, we are aware of the things and happenings around us, especially those that are meaningful to us. This organization and analysis, driven by Gestalt principles of organization (Moore, 2001; Neuhoff, 2004), is vital to keep us from being overwhelmed by too much information. Without our ability to organize and focus, massive over-stimulation would paralyze us into inaction. This review explains how the human brain organizes the sounds around us, how hearing loss affects that finely tuned ability, and how the Oticon Epoq uses evidence-based principles to restore more of that function.

Organizing the World of Sound

In the auditory world, the terms Stream Segregation and Scene Analysis (Bregman, 1990) have been coined to describe how we turn massive amounts of individual sounds into usable information. It is important to remember that, except in psychoacoustical labs, sounds are not created without context. Sounds are the result of activity, generated by humans, nature and machines. Importantly, sounds occur over time. Naturally occurring sounds simply do not start and stop instantaneously. Rather, they are the result of something happening. Stream Segregation refers to the cognitive activity of linking individual sounds over time to a common source.

The most common example of Stream Segregation is speech. People do not typically produce isolated phonemes; speech is composed of a series of phonemes that form words, and words that form sentences. Speech does not take on meaning until these individual speech sounds are grouped into meaningful wholes. The listener has to track these sounds over time and assign them to the same source so that the meaning can be uncovered.

Scene Analysis refers to the cognitive activity of deconstructing the totality of sound input into isolated sound sources arising from specific places in space. It is the way we perceptually organize the world of sound around us into meaningful objects and events, with specific spatial relationships.

These two skills combine to allow us to understand and appreciate the world of sound around us. We use these normally automatic abilities to develop an immediate understanding of where we are in relation to the rest of the world, to recognize what is happening around us, and to focus our attention on the sound sources we choose. When we listen to speech in a challenging environment, Stream Segregation and Scene Analysis allow us to find and follow the talker we are interested in while suppressing interference from the other sounds in our surroundings (Cherry, 1953). The shortcomings of current computer-based speech recognition are, in part, related to the difficulty programmers have had in replicating these powerful yet automatic processes of human cognition. Speech understanding is not an event of the auditory system; it is an event of the cognitive system.

Spatial Hearing

The main benefit of having two ears is the ability to resolve the spatial relationships between ourselves and the sounds around us. Two ears do not appreciably enhance either core auditory sensitivity or acuity. However, our ability to tell where a sound is coming from depends almost entirely on the binaural system (Blauert, 1983).

Localization of sound sources is possible because of timing and level differences between the sound arriving at one ear and at the other (Yost, 1994). It has long been recognized that when a sound originates anywhere other than directly in front of or behind us, it reaches the near ear earlier and at a higher level than the far ear. We rely mainly on timing differences below about 1500 Hz and on level differences above about 1500 Hz to determine where a sound came from. The timing differences arise from the finite speed of sound (a sound from 90 degrees to the side reaches the near ear about 0.6 to 0.7 ms before the far ear), and the level differences arise because the head shadows, or attenuates, sounds with shorter wavelengths (by up to 20 dB). We can also judge the location of sounds above or below the level of the ears, using acoustical information in the extreme high frequencies (above 5000 Hz). This skill depends on the convoluted shape of the pinna: changes in source elevation interact with the wrinkles and folds of the external ear to create significant peaks and valleys in the extreme high-frequency response.
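The 0.6 to 0.7 ms figure can be checked with a textbook idealization that the article itself does not use: Woodworth's spherical-head formula, ITD = (r/c)(θ + sin θ), for a distant source at azimuth θ. The sketch below assumes a head radius of 8.75 cm and a speed of sound of 343 m/s; both are typical assumed values, not measurements from this article. It also prints wavelengths, as a rough indication of why level cues (head shadow) become important at higher frequencies.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: speed of sound in air at about 20 degrees C
HEAD_RADIUS_M = 0.0875      # assumed: typical adult head radius (spherical-head idealization)


def woodworth_itd_ms(azimuth_deg: float,
                     head_radius_m: float = HEAD_RADIUS_M,
                     c: float = SPEED_OF_SOUND_M_S) -> float:
    """Interaural time difference in ms, Woodworth spherical-head model.

    ITD = (r / c) * (theta + sin(theta)), for azimuths from 0 degrees
    (straight ahead) to 90 degrees (directly to one side).
    """
    theta = math.radians(azimuth_deg)
    return 1000.0 * (head_radius_m / c) * (theta + math.sin(theta))


def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength of a pure tone, to compare with a head diameter of about 2 * HEAD_RADIUS_M."""
    return c / freq_hz


if __name__ == "__main__":
    for az in (0, 30, 60, 90):
        print(f"{az:>2} deg -> ITD {woodworth_itd_ms(az):.3f} ms")
    for f in (500, 1500, 5000):
        print(f"{f:>4} Hz -> wavelength {wavelength_m(f) * 100:.1f} cm")
```

Under these assumptions, a source at 90 degrees gives an ITD of about 0.66 ms, and the wavelength at 1500 Hz is about 23 cm, on the same order as the assumed 17.5 cm head diameter. A real head is not a sphere, so measured values differ somewhat.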

In terms of speech understanding, spatially separating the talker from the competition can improve the effective signal-to-noise ratio (S/N) by up to 5 dB when the competition is something other than a competing talker. When the competition is one or two other voices, the improvement can be on the order of 5 to 10 dB or more with 90 degrees of spatial separation (Arbogast, Mason, & Kidd, 2005; Hawley, Litovsky, & Culling, 2004; Kidd, Mason, & Gallun, 2005). This extra improvement has a cognitive component, showing the power of identifying different speech streams in space and focusing attention on one rather than another.

Based on the approach of the Articulation Index (Kryter, 1962), it is commonly assumed that only speech energy up to about 5000 Hz is important for speech understanding. However, under natural conditions, and consistent with the notion of Scene Analysis, it is beneficial to locate the talker before gleaning speech information. Kidd, Arbogast, Mason, and Gallun (2005) demonstrated significant improvements in speech understanding in noise when uncertainty about the talker's location was reduced or eliminated. Best, Carlile, Jin, and van Schaik (2005) demonstrated that speech energy above 8000 Hz can be used to localize the talker effectively. These results suggest a two-stage model of speech understanding in spatial contexts: high-frequency speech energy (even above the region where phonemic information is found) is first used to locate the talker in the environment, which then allows attention to be focused on gleaning the speech information.

Sensorineural Hearing Loss and Spatial Resolution

There have been several investigations into the spatial abilities of patients with sensorineural hearing loss (e.g., Byrne & Noble, 1998; Goverts, 2004; Noble, Ter-Horst, & Byrne, 1995). As with many psychoacoustical skills, patients with sensorineural hearing loss differ markedly from one another in spatial resolution. Consistent with studies of other core hearing abilities, some patients perform nearly as well as listeners with normal hearing, whereas others show dramatic decrements in performance. In some cases, even when the audibility of signals is assured, patients show no measurable ability to differentiate sound-source location, as if all sounds come from the same place.

However, when measured using speech in noise, most patients with sensorineural hearing loss seem to retain some residual skills. Although impaired patients on average benefit less from spatial separation of speech and competition, their performance is still above chance (Gelfand, Ross, & Miller, 1988), especially when the competition is the speech of no more than a few talkers (Arbogast, Mason, & Kidd, 2005).

The Effect of Amplification

First and foremost, if a sound cannot be heard, it cannot help the binaural auditory system localize (Dubno, Ahlstrom, & Horwitz, 2002). The simple audibility improvements offered by any hearing aid fitting are therefore assumed to assist spatial hearing under some conditions. Subjective reports from patients consistently indicate that hearing aids help with spatial resolution, although these reports are sometimes inconsistent with performance measured under controlled laboratory conditions. It is important to note that these reports are likely due to improved audibility, especially of the higher-frequency information used to resolve auditory space. Because signals reach hearing aid users at a wide range of levels, improving the audibility of softer sounds may well improve general awareness of the different sounds in the environment. Although pure localization ability may or may not improve, awareness certainly does.

Given the importance of spatial hearing to effective speech understanding in realistic noisy environments, surprisingly few studies have examined the objective effects of amplification on spatial perception. The observations below form our working knowledge. However, because the effects have not been studied extensively, especially as hearing aid technology has advanced, these observations must be treated as tentative.

- As noted above, most subjective surveys indicate that amplification, especially binaural amplification, improves the patient's ability to tell where sound is coming from and to separate the speech of one talker from another (Noble, Ter-Horst, & Byrne, 1995). Again, these reports likely reflect the combined effects of improved audibility.

- Objectively measured localization performance does not consistently seem to be improved through the provision of hearing aids (Koehnke & Besing, 1997), especially when test-signal levels in the unaided condition are above threshold. However, there are both case examples and group data showing positive results (Rakerd, Vander Velde, & Hartmann, 1998).

- Speech understanding in noise, as reflected in S/N improvement, does seem to improve with two hearing aids compared with one (Festen & Plomp, 1986). Part of this effect seems to be due to binaural squelch (a true binaural effect). In other cases, the benefit comes from improved audibility in one ear relative to the other, depending on where the speech and competition come from.

- Localization performance seems to be worse with closed earmold configurations than with more open ones (Byrne, Sinclair, & Noble, 1998): the more direct high-frequency sound that enters the ear canal, the better the localization. Wider bandwidth in hearing aids also helps to improve localization (Byrne & Noble, 1998).

- Advanced signal processing in hearing aids (multi-channel, wide dynamic range compression, adaptive directionality and noise reduction) may disrupt localization ability (Keidser et al., 2006; Van den Bogaert, Klasen, Moonen, Van Deun, & Wouters, 2006). However, the effects are highly dependent on test configuration and procedures. Given that these systems disrupt naturally occurring relationships in sounds, especially when comparing one ear to the other, it is reasonable to suspect some effects. At times, these suspicions have been confirmed.

Oticon Epoq: Targeting Improved Spatial Perception

Epoq is a new advanced-technology hearing aid by Oticon. Along with a variety of other signal processing improvements, there are specific features in Epoq designed to improve spatial resolution for the user (Schum, 2007).

- Wireless communication between hearing aids: A version of digital magnetic wireless communication is implemented in Epoq at the chip level to allow high-speed data transfer between the two hearing aids in a binaural fitting. This feature allows coordination of switch position and program selection, synchronization of noise reduction and adaptive directionality, improved accuracy of feedback and own-voice detection and, most importantly, preservation of naturally occurring interaural spectral differences. As indicated above, these spectral differences in the mid and high frequencies form the basis of localization. The high-speed sharing of data between the hearing aids allows us to implement a feature called Spatial Sound, which adjusts the gain and compression response of the hearing aids to preserve these important cues.

- Extreme Bandwidth: The bandwidth of Epoq is an industry-leading 10 kHz in all models. Extending the response of the device this far preserves localization cues that were inaudible with previous generations of hearing aids.

- RITE Design: The movement away from traditional earmolds towards thin-tube and receiver-in-the-ear (RITE) fittings, in combination with ever-improving feedback cancellation algorithms, has put new emphasis on the advantages of open fittings. Beyond minimizing occlusion, open fittings also allow high-frequency information to pass through, even beyond the bandwidth of the hearing aid. Again, the better the perception of high-frequency information, the greater the likelihood of effective spatial resolution. Further, compared with thin-tube approaches, placing the receiver in the canal provides a smoother response, which improves sound quality, decreases feedback risk, and maximizes the ability to provide complete high-frequency gain.

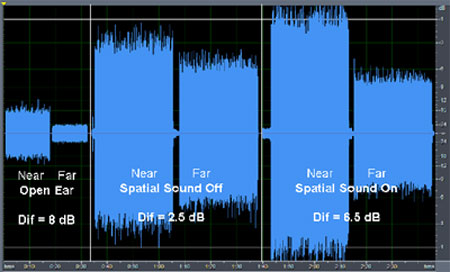

Figure 1 demonstrates the effect of the Spatial Sound feature. Recordings were made in the ear canals of KEMAR with sound presented 90 degrees to the right of midline. The stimulus was white noise presented at 75 dB SPL. Recordings were made under three conditions: (1) open ear canal, (2) binaural Epoq fitting with Spatial Sound feature disabled, and (3) binaural Epoq fitting with the Spatial Sound feature enabled. For these recordings, the directional and noise reduction systems were disabled. The Epoq RITE hearing aids were fit for a moderate, high-frequency hearing loss, and an open dome was used. All of the recordings were then bandpass filtered from 1 to 10 kHz to compare the response within the functional bandwidth of the device.

Figure 1: Recordings of white noise made in the ear canals of KEMAR. The speaker was oriented at +90 degrees to the right of midline and recordings were made in both the near and far ears. Recordings were made with the ear canals open (left panel), with Epoq RITEs in place with the Spatial Sound feature disabled (middle panel) and then enabled (right panel).
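The 1 to 10 kHz comparison in Figure 1 amounts to band-limiting each ear-canal recording and taking the difference between the band levels. The sketch below is a hypothetical illustration of that computation: the ideal FFT-domain band-pass is an assumption (the article does not say how the filtering was done), and the far-ear signal is synthesized as a copy of the near-ear noise attenuated by 8 dB, whereas a real head shadow varies with frequency.

```python
import numpy as np


def band_level_db(signal: np.ndarray, fs: float,
                  f_lo: float = 1000.0, f_hi: float = 10000.0) -> float:
    """Level in dB (arbitrary reference) of the signal energy between f_lo and f_hi.

    Assumption for illustration: an ideal band-pass that keeps only the FFT bins
    inside the band, not the filter used for the KEMAR recordings.
    """
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=1.0 / fs)
    in_band = (freqs >= f_lo) & (freqs <= f_hi)
    energy = np.sum(np.abs(spectrum[in_band]) ** 2)
    return 10.0 * np.log10(energy)


def interaural_level_difference_db(near: np.ndarray, far: np.ndarray, fs: float) -> float:
    """Near-ear band level minus far-ear band level, in dB."""
    return band_level_db(near, fs) - band_level_db(far, fs)


if __name__ == "__main__":
    fs = 44100.0
    rng = np.random.default_rng(0)
    near = rng.standard_normal(int(fs))   # one second of white noise at the near ear
    far = near * 10 ** (-8.0 / 20.0)      # hypothetical far ear: same noise, 8 dB down
    print(f"ILD = {interaural_level_difference_db(near, far, fs):.2f} dB")
```

Because the synthetic far-ear signal is a uniformly scaled copy, the computed difference is exactly the 8 dB that was applied. Real KEMAR recordings would show a frequency-dependent difference, summarized in Figure 1 as a single overall band-level difference.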

In Figure 1, the left-most panel shows the recordings in the near and far ear canals. The overall difference from one side of the head to the other was 8 dB, reflecting the average interaural spectral difference at +90 degrees. The middle panel shows the effect of compression operating independently in each ear: the natural 8 dB interaural difference has been reduced to 2.5 dB. The right-most panel shows the effect with Spatial Sound activated, where the gain and compression response has been adjusted to retain interaural spectral differences. In this case, the difference from the right to the left ear is 6.5 dB. When Spatial Sound is active, the input levels at both ears are shared between the hearing aids, and the gain and compression response is based on a central decision that maintains the naturally occurring interaural spectral differences as far as possible.
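The difference between the middle and right panels can be illustrated with a toy model of single-channel wide dynamic range compression. In the sketch below, the knee point, gain and compression ratio are hypothetical values chosen for illustration, and the linked rule (both aids apply the gain computed from the louder ear's input) is a generic assumption, not Oticon's Spatial Sound algorithm, whose details the article does not give. Above the knee, independent compression divides the interaural level difference by the compression ratio, while a shared gain leaves it intact.

```python
KNEE_DB = 50.0             # hypothetical compression threshold (dB SPL)
GAIN_BELOW_KNEE_DB = 20.0  # hypothetical gain for inputs below the knee (dB)
RATIO = 3.0                # hypothetical compression ratio (3:1)


def wdrc_gain_db(input_db: float) -> float:
    """Static gain of one compressor channel: constant below the knee, ratio:1 above it."""
    if input_db <= KNEE_DB:
        return GAIN_BELOW_KNEE_DB
    return GAIN_BELOW_KNEE_DB - (input_db - KNEE_DB) * (1.0 - 1.0 / RATIO)


def independent_outputs(near_db: float, far_db: float) -> tuple[float, float]:
    """Each aid sets its gain from its own input level (no data exchange)."""
    return near_db + wdrc_gain_db(near_db), far_db + wdrc_gain_db(far_db)


def linked_outputs(near_db: float, far_db: float) -> tuple[float, float]:
    """Both aids apply one gain computed from a shared level (assumed: the louder ear)."""
    shared_gain = wdrc_gain_db(max(near_db, far_db))
    return near_db + shared_gain, far_db + shared_gain


if __name__ == "__main__":
    near_in, far_in = 75.0, 67.0  # 8 dB interaural difference at the input
    for label, fn in (("independent", independent_outputs), ("linked", linked_outputs)):
        near_out, far_out = fn(near_in, far_in)
        print(f"{label:>11}: output ILD = {near_out - far_out:.2f} dB")
```

With an 8 dB input difference (75 versus 67 dB SPL) and a 3:1 ratio, independent compression leaves about 2.67 dB, while the linked rule preserves the full 8 dB. The measured values in Figure 1 (2.5 dB and 6.5 dB) differ because a real device is multichannel and uses its own rules; the toy model only shows the direction of the effect.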

Taken together, Spatial Sound, Extreme Bandwidth and the RITE design place the emphasis on feeding the brain the most complete, best-preserved information that forms the basis of spatial resolution. As discussed above, in an attempt to maximize audibility and minimize the effects of background noise, hearing aids have in practice limited or distorted the natural localization cues.

Measuring Spatial Perception

Localization has never been routinely evaluated in clinical practice. Accurate objective measurement of localization requires speaker arrangements and supporting electronics that simply are not available in typical hearing aid facilities. Further, measuring the ability to localize discrete signals in sterile test environments likely does not capture the user's experience of sound in space, especially in everyday environments.

To assess a user's perception of sound within the environment, Gatehouse and Noble (2004) developed the Speech, Spatial and Qualities of Hearing (SSQ) questionnaire. This scale consists of 53 items across three domains: Speech understanding (mainly in challenging, real-life environments), Spatial Perception (assessing perception of localization, distance, movement, etc.) and Sound Qualities (assessing the ability to separate sounds from each other, familiarity of known voices and other sounds, distinctiveness of common sounds, etc.). The SSQ is designed to assess those aspects of the listening experience that are driven by factors beyond audibility and S/N. It has been used, for example, to demonstrate the advantages of bilateral over unilateral hearing aid fittings (Noble & Gatehouse, 2006).

Epoq and the SSQ

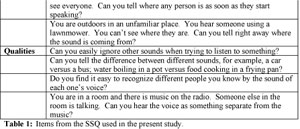

In an attempt to verify the effectiveness of the specific technical features in Epoq designed to improve spatial perception, selected items from the SSQ were used as part of a larger clinical study of Epoq fittings (Hansen, in preparation). The SSQ results from 76 patients from that study are reported here. We selected 17 items from the SSQ that specifically focused on spatial resolution. We selected three items from the Speech subscale, ten items from the Spatial subscale, and four items from the Qualities subscale (see Table 1).

Table 1: Items from the SSQ used in the present study.

Each patient was an experienced bilateral user of advanced-technology, multi-channel nonlinear digital hearing aids, mainly Oticon Syncro (83%). As part of the current study, each patient was fit with one of five styles of Epoq: ITC = 9; ITE = 20; BTE = 21; RITE with a custom earmold = 10; RITE with an open dome = 16. All patients were within the published fitting range of the particular model of Epoq used. In general, patients were fit with the same model of Epoq as their Syncro fitting. Since Syncro is not available in a RITE model, the 26 Epoq RITE users (10 with custom earmolds and 16 with open domes) were drawn from users of other Syncro models. All testing with Syncro occurred before the patients were fit with Epoq. Patients wore the Epoqs for at least one week before subsequent testing began.

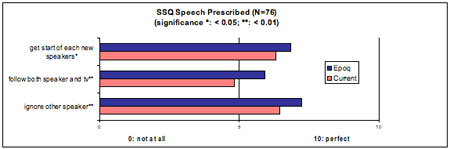

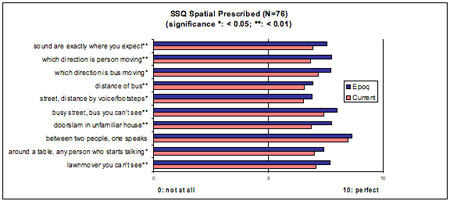

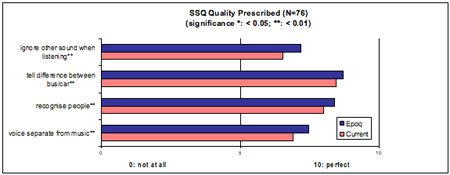

Figures 2, 3, and 4 provide the SSQ results on items from the Speech, Spatial, and Quality subscales, respectively. T-tests were performed on each item separately to determine if the mean differences reflected true differences. The asterisks on the graphs provide the level of significance, with a single asterisk indicating that the mean difference reached the p
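Because the same patients rated both their current aids and Epoq, a per-item comparison of this kind is naturally a paired (within-subject) t-test; the article says only that t-tests were used, so the paired design is an assumption here. The sketch computes the paired t statistic and its degrees of freedom from per-patient ratings of one item; the ratings in the example are invented for illustration and are not data from the study.

```python
import math
import statistics


def paired_t(current: list[float], new: list[float]) -> tuple[float, int]:
    """Return (t, df) for a paired-samples t-test on per-patient ratings of one item."""
    if len(current) != len(new) or len(current) < 2:
        raise ValueError("need two equal-length rating lists with at least two patients")
    diffs = [b - a for a, b in zip(current, new)]  # per-patient change (new minus current)
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)    # standard error of the mean difference
    return statistics.mean(diffs) / se, n - 1


if __name__ == "__main__":
    # Hypothetical ratings on a 0-10 scale for five patients (not study data).
    current_aid = [5.0, 6.0, 4.0, 7.0, 5.0]
    epoq = [6.0, 7.0, 6.0, 7.0, 7.0]
    t, df = paired_t(current_aid, epoq)
    print(f"t = {t:.2f}, df = {df}")
```

For these five hypothetical patients, t is about 3.21 with 4 degrees of freedom. The p-value comes from the t distribution with n - 1 degrees of freedom; SciPy's `scipy.stats.ttest_rel` performs the same paired test and returns the p-value directly.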

Figure 2: The results of 76 Epoq users on 3 selected questions from the SSQ Speech subscale. The "current" device was either Syncro (83%) or some other advanced technology multi-channel nonlinear device.

Figure 3: The results of 76 Epoq users on 10 selected questions from the SSQ Spatial subscale.

Figure 4: The results of 76 Epoq users on 4 selected questions from the SSQ Sound Qualities subscale.

As can be seen, all items drawn from the Speech subscale reached at least the p

These Epoq users consistently reported improvements in spatially related abilities. Such improvements might not have been fully documented with more traditional techniques, such as objective speech-in-noise testing or questionnaires such as the APHAB. However, the ability to ignore one type of sound while paying attention to the talker, or to follow two sound sources at the same time, is meaningful to users. Skills such as these show how listeners actively use their binaural abilities to function in real, meaningful situations.

Above, we isolated three specific features in Epoq designed to improve spatial resolution: Spatial Sound, Extreme Bandwidth, and the RITE design. It is not possible (and not our intention in this study) to isolate the contribution from each feature. However, since only 16 of the 76 patients used a RITE with an open dome, we assume that the majority of the effect was a combination of bandwidth improvements and the Spatial Sound feature.

Conclusion

The hearing aid industry has expended considerable effort over the years developing technologies that minimize the effects of noise on the listening experience of individuals with hearing loss. Normal-hearing individuals can perform quite effectively in difficult environments because of the brain's ability to separate figure from ground, a skill that depends on binaural sound processing. Technical efforts to minimize the effects of noise in the presence of sensorineural hearing loss, although impressive, have fallen short of the performance of the normal-hearing system. We have treated noise as the enemy that must be eliminated. However, persons with normal hearing do not need the noise eliminated, just organized. Once the various sources of sound, both important signals and distractions, have been mapped out, we are effective at focusing our attention on what we find important.

The specific technical characteristics of Epoq (i.e., Spatial Sound, Extreme Bandwidth, and the RITE design) have been implemented to provide the patient's auditory system with a cue set that preserves natural localization information as much as possible. The responses of a large group of patients on the SSQ, a test designed specifically to assess spatial perception, indicate that these design features are providing patients with improved spatial perception. We are giving the best signal processor available, the human brain, access to the information it needs to perform this important function.

References

Arbogast, T., Mason, C., & Kidd, G. (2005). The effect of spatial separation on informational masking of speech in normal-hearing and hearing-impaired listeners. Journal of the Acoustical Society of America, 117(4), 2169-2180.

Best, V., Carlile, S., Jin, C., & van Schaik, A. (2005). The role of high frequencies in speech localization. Journal of the Acoustical Society of America, 118(1), 353-363.

Blauert, J. (1983). Psychoacoustical binaural phenomena. In R. Klinke & R. Hartmann (Eds.), Hearing: Physiological Bases and Psychophysics (pp. 182-199). Berlin: Springer-Verlag.

Bregman, A. (1990). Auditory Scene Analysis: The Perceptual Organization of Sound. Cambridge, MA: MIT Press.

Byrne, D., & Noble, W. (1998). Optimizing sound localization with hearing aids. Trends in Amplification, 3(2), 51-73.

Byrne, D., Sinclair, S., & Noble, W. (1998). Open earmold fittings for improving aided auditory localization for sensorineural hearing losses with good high-frequency hearing. Ear & Hearing, 19, 62-71.

Cherry, E. (1953). Some experiments on the recognition of speech, with one and with two ears. Journal of the Acoustical Society of America, 25, 975-979.

Dubno, J., Ahlstrom, J., & Horwitz. (2002). Spectral contributions to the benefit from spatial separation of speech and noise. Journal of Speech, Language, and Hearing Research, 45, 1297-1310.

Festen, J. M., & Plomp, R. (1986). Speech-reception threshold in noise with one and two hearing aids. Journal of the Acoustical Society of America, 79, 465-471.

Gatehouse, S., & Noble, W. (2004). The speech, spatial and qualities of hearing scale (SSQ). International Journal of Audiology, 43, 85-99.

Gelfand, S. A., Ross, L., & Miller, S. (1988). Sentence reception in noise from one versus two sources: Effects of aging and hearing loss. Journal of the Acoustical Society of America, 83, 248-256.

Goverts, S. T. (2004). Assessment of spatial and binaural hearing in hearing impaired listeners (Doctoral dissertation). Vrije Universiteit, Amsterdam.

Hansen, L.B. (2007). The Epoq Product Test. Oticon A/S, in preparation.

Hawley, M., Litovsky, R., & Culling, J. (2004). The benefit of binaural hearing in a cocktail party: Effect of location and type of interferer. Journal of the Acoustical Society of America, 115, 833-843.

Keidser, G., Rohrseitz, K., Dillon, H., Hamacher, V., Carter, L., Rass, U., & Convery, E. (2006). The effect of multi-channel wide dynamic range compression, noise reduction, and the directional microphone on horizontal localization performance in hearing aid wearers. International Journal of Audiology, 45, 563-579.

Kidd, G., Arbogast, T., Mason, C., & Gallun, F. (2005). The advantage of knowing where to listen. Journal of the Acoustical Society of America, 118, 3804-3815.

Kidd, G., Mason, C., & Gallun, F. (2005). Combining energetic and informational masking for speech identification. Journal of the Acoustical Society of America, 118, 982-992.

Koehnke, J., & Besing, J. (1997). Binaural performance in listeners with impaired hearing: Aided and unaided results. In R. Gilkey & T. Anderson (Eds.), Binaural and Spatial Hearing in Real and Virtual Environments (pp. 701-724). Hillsdale, NJ: Erlbaum.

Kryter, K. (1962). Methods for the calculation and use of the Articulation Index. Journal of the Acoustical Society of America, 34, 1689-1697.

Moore, B.C.J. (2001). An Introduction to the Psychology of Hearing. New York: Academic Press.

Neuhoff, J. (2004). Ecological Psychoacoustics. New York: Elsevier.

Noble, W., & Gatehouse, S. (2006). Effects of bilateral versus unilateral hearing aid fittings on abilities measured by the SSQ. International Journal of Audiology, 45, 172-181.

Noble, W., Ter-Horst, K., & Byrne, D. (1995). Disabilities and handicaps associated with impaired auditory localization. Journal of the American Academy of Audiology, 6, 29-40.

Rakerd, B., Vander Velde, T., & Hartmann, W. (1998). Sound localization in the median sagittal plane by listeners with presbyacusis. Journal of the American Academy of Audiology, 9, 466-479.

Schum, D. J. (2007). Redefining the hearing aid as the user's interface with the world. The Hearing Journal, 60(5), 28-33.

Van den Bogaert, T., Klasen, T., Moonen, M., Van Deun, L., & Wouters, J. (2006). Horizontal localization with bilateral hearing aids: Without is better than with. Journal of the Acoustical Society of America, 119(1), 515-526.

Yost, W. (1994). Fundamentals of Hearing: An Introduction. San Diego: Academic Press.