Introduction

This paper explores the technological advances in front-end processing and sound processing used in Advanced Bionics (AB) cochlear implant (CI) systems. It highlights how Phonak's extensive experience in front-end sound processing and analysis has been integrated with AB's specialized expertise in translating acoustic signals into meaningful electrical stimulation of the auditory nerve. The collaboration reflects a shift toward a seamless, natural, and highly adaptive hearing experience that aims to reduce the cognitive burden and fatigue often associated with listening in complex real-world environments. The goal, "effortless hearing," is not merely a convenience but a critical factor in improving the daily lives and communication abilities of CI recipients.

Figure 1. Front-end and sound processing diagram.

Front-End Processing

Rebecca Lewis, the CI Audiology Director at Pacific Neuroscience Institute, provided insights into the initial stages of capturing sound and translating it into an electrical signal to be delivered to the auditory nerve. Conceptualizing the CI system as an intricate "translator," Dr. Lewis described how acoustic signals from the recipient's environment are meticulously converted into electrical signals, which are then perceived and understood by the brain. This initial phase, front-end processing, is paramount as it dictates the quality and characteristics of the auditory data available for subsequent processing stages.

Microphone System and Calibration

Sound is captured by one of four microphones: the headpiece microphone, the front and rear processor microphones (which work together), and the T-mic. The microphones are calibrated for consistent performance across devices. This is particularly important for bilateral and bimodal recipients, whose two devices should deliver comparably high-quality sound signals.

Omnidirectional Mode

The omnidirectional microphone mode uses the front processor microphone and is designed to capture sound from all directions. This setting provides an expansive auditory field, making it suitable for quiet listening environments where the sound source is directly in front or where environmental awareness from all directions is desired.

T-mic Functionality

The T-mic is an omnidirectional microphone positioned at the opening of the ear canal, where it takes advantage of the natural acoustic effects of the pinna. By capturing these "pinna effects," the T-mic improves speech understanding in both quiet and noisy environments and contributes to a more natural sound. Because of its placement, it receives the same sound localization cues that the pinna naturally provides.

Real Ear Sound

Real Ear Sound utilizes directional microphones to synthetically reproduce the pinna-related directional cues that are naturally captured by the T-mic. This advanced processing aims to further refine sound localization and spatial awareness, particularly for recipients who may not always utilize the T-mic.

Fixed Directional Microphones

For challenging listening situations with significant background noise, the fixed directional microphone mode uses a beamformer. The beamformer combines the front and rear processor microphones to create a focused listening beam with a "null" (a zone of minimal sensitivity) at 180 degrees, directly behind the listener. This mode attenuates sounds from the rear and thereby emphasizes sounds from the front, which makes it valuable when the primary sound source is in front of the listener and distracting noise is behind.
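
The rear-facing null can be illustrated with a textbook first-order differential (delay-and-subtract) beamformer. The sketch below assumes a 12 mm spacing between the front and rear microphones, a speed of sound of 343 m/s, and a single 1 kHz tone; these values and the function name are illustrative, and the code is not AB's beamformer implementation. Setting the internal delay equal to the acoustic travel time between the two microphones produces a cardioid pattern whose null points directly behind the listener.

```python
import cmath
import math

SPEED_OF_SOUND_M_S = 343.0
MIC_SPACING_M = 0.012  # assumed front-to-rear microphone spacing (illustrative)


def cardioid_response(angle_deg: float, freq_hz: float = 1000.0,
                      spacing_m: float = MIC_SPACING_M) -> float:
    """Magnitude response of a delay-and-subtract beamformer.

    angle_deg = 0 means sound arriving from the front, 180 from behind.
    The rear microphone signal is delayed by the inter-microphone travel
    time and subtracted from the front microphone signal.
    """
    omega = 2.0 * math.pi * freq_hz
    internal_delay_s = spacing_m / SPEED_OF_SOUND_M_S
    # Extra time the wavefront needs to reach the rear mic after the front mic
    # (negative when the sound comes from behind).
    acoustic_delay_s = spacing_m * math.cos(math.radians(angle_deg)) / SPEED_OF_SOUND_M_S
    h = 1.0 - cmath.exp(-1j * omega * (acoustic_delay_s + internal_delay_s))
    return abs(h)


if __name__ == "__main__":
    for angle in (0, 90, 180):
        print(f"{angle:3d} deg: {cardioid_response(angle):.4f}")
```

The response is largest for sound from the front and falls to zero at 180 degrees, because the internal delay exactly cancels the acoustic delay for a rear source. A practical differential array also needs equalization, since this simple structure attenuates low frequencies.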

Advanced Directional Features for Complex Environments

Beyond these fundamental microphone modes, AB systems offer advanced directional features, managed automatically by AutoSense OS, that are designed to optimize performance in the most complex and dynamic listening situations.

UltraZoom and SNR Boost

UltraZoom, together with SNR Boost, is engaged automatically in the Speech in Noise program of AutoSense OS. The combination improves speech understanding in noisy environments by further attenuating sounds from behind the listener, so that the listener can focus on the desired speech without being overwhelmed by background sounds. Because the algorithm is adaptive, the noise reduction responds dynamically to changes in the acoustic environment.

StereoZoom

StereoZoom is a bilateral beamformer designed for one-on-one conversation in very loud, challenging environments. It combines four microphones (two on each ear in bilateral and bimodal recipients) to form a narrow beam focused on speech. Activated automatically in the Speech in Loud Noise program, StereoZoom substantially improves the signal-to-noise ratio for the target speaker, enabling clearer conversation even in highly adverse conditions such as bustling restaurants or noisy social gatherings.

Comfort Features

Beyond optimizing speech understanding, AB sound processors include comfort features that make listening more comfortable for the CI recipient across a wide range of soundscapes. These features are important for promoting consistent device use and reducing listening fatigue.

SoundRelax

SoundRelax is an automatic feature designed to attenuate sudden, impulsive loud sounds, such as dishes crashing in a sink or the sudden blare of a fire truck siren. By softening these abrupt noises, SoundRelax prevents discomfort and makes listening in dynamic environments less startling.
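
The document does not describe SoundRelax's detection rule. As a hedged illustration of the general idea only, the sketch below flags a frame as impulsive when its level jumps well above a running average of recent frame levels, and attenuates that frame. The frame levels, the 15 dB jump threshold, the 10 dB attenuation, the averaging weight, and the function name are all hypothetical.

```python
from typing import List


def impulse_gains_db(frame_levels_db: List[float], jump_db: float = 15.0,
                     attenuation_db: float = 10.0,
                     avg_weight: float = 0.9) -> List[float]:
    """Return a per-frame gain (dB) that attenuates sudden level jumps.

    Hypothetical transient suppressor: a frame whose level exceeds the
    running average by more than `jump_db` is attenuated by `attenuation_db`.
    The running average keeps updating, so a sound that stays loud is
    gradually treated as the new background rather than as an impulse.
    """
    if not frame_levels_db:
        return []
    running_db = frame_levels_db[0]
    gains = []
    for level_db in frame_levels_db:
        is_impulse = level_db - running_db > jump_db
        gains.append(-attenuation_db if is_impulse else 0.0)
        # Exponential moving average of the frame level (in dB).
        running_db = avg_weight * running_db + (1.0 - avg_weight) * level_db
    return gains


if __name__ == "__main__":
    # Steady kitchen background, then a dish crash, then background again.
    print(impulse_gains_db([55, 55, 55, 85, 55]))  # [0.0, 0.0, 0.0, -10.0, 0.0]
```

Updating the average on every frame is a deliberate choice in this sketch: a brief crash is attenuated, while a sound that remains loud stops being treated as an impulse after a few frames.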

WindBlock

Wind noise, a common annoyance for individuals using hearing devices, can significantly impact sound quality, particularly in outdoor settings. WindBlock is specifically engineered to reduce the disruptive effects of wind noise, allowing for clearer and more comfortable listening during outdoor activities such as golfing, hiking, or simply strolling in breezy conditions. By suppressing the broadband, turbulent noise generated by wind, this feature significantly improves the audibility of desired sounds.

EchoBlock

Reverberant environments, such as large classrooms, gymnasiums, or auditoriums, can significantly compromise speech understanding due to reflections of sound waves. EchoBlock is designed to improve both comfort and ease of listening in such highly reverberant rooms.

AutoSense OS

AutoSense OS changes the recipient's experience by automating program adjustments. It addresses a common patient wish for a "set it and forget it" approach, removing the cognitive burden and inconvenience of changing programs manually throughout the day. AutoSense OS continually analyzes incoming sound and selects the microphone mode and comfort features best suited to the environment, so that recipients consistently experience their best hearing performance.

Every 400 milliseconds, AutoSense OS analyzes the incoming soundscape and evaluates it against seven predefined sound classes. This continuous analysis lets AutoSense OS calculate the probability that the recipient's current listening environment matches each sound class and then activate the most appropriate program or a blend of settings. As a result, the device's parameters stay matched to the prevailing acoustic conditions.
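
The document does not specify how each 400 ms analysis becomes a probability. One simple way to maintain a stable, continuously updated probability per sound class is to normalize each frame's classifier scores and smooth them with an exponential moving average, as sketched below. The smoothing factor, the frame scores, and the class name `SceneTracker` are hypothetical. Five of the seven class names appear in this document; "Calm Situation" and "Comfort in Echo" are assumed for illustration.

```python
from typing import Dict

FRAME_MS = 400  # analysis interval stated in the text

# Five names appear in the text; "Calm Situation" and "Comfort in Echo" are assumed.
SOUND_CLASSES = (
    "Calm Situation", "Speech in Noise", "Speech in Loud Noise",
    "Speech in the Car", "Comfort in Noise", "Comfort in Echo", "Music",
)


class SceneTracker:
    """Hypothetical smoother for per-frame sound-class scores."""

    def __init__(self, smoothing: float = 0.7) -> None:
        self.smoothing = smoothing  # weight kept from the previous estimate
        uniform = 1.0 / len(SOUND_CLASSES)
        self.probs: Dict[str, float] = {c: uniform for c in SOUND_CLASSES}

    def update(self, frame_scores: Dict[str, float]) -> Dict[str, float]:
        """Fold one 400 ms frame of non-negative class scores into the estimate."""
        total = sum(max(frame_scores.get(c, 0.0), 0.0) for c in SOUND_CLASSES)
        if total == 0.0:
            return dict(self.probs)  # no evidence this frame; keep the estimate
        a = self.smoothing
        self.probs = {
            c: a * self.probs[c] + (1.0 - a) * max(frame_scores.get(c, 0.0), 0.0) / total
            for c in SOUND_CLASSES
        }
        return dict(self.probs)


if __name__ == "__main__":
    tracker = SceneTracker()
    for _ in range(20):  # 20 frames x 400 ms = 8 s of consistent evidence
        probs = tracker.update({"Music": 1.0})
    print(round(probs["Music"], 4))
```

Because each frame's scores are normalized before blending, the probabilities always sum to 1. A larger smoothing factor makes the estimate steadier but slower to follow a change of scene.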

Because AutoSense OS integrates numerous features, the classifier can choose among more than 200 distinct settings. This granularity lets the system fine-tune its response to the recipient's specific listening environment, replacing manual program switching with a less effortful listening experience.

Acoustic vs. Streaming Classification

A distinctive aspect of AutoSense OS is that it classifies streamed audio as well as acoustic environments, a dual classification described as an industry first. Streamed input is categorized as either "Media Speech" or "Media Music," and the system adjusts its parameters to optimize the streaming experience accordingly. For instance, as a recipient moves from listening to ambient sounds in a quiet home, to streaming a podcast in the car, to streaming music during a walk, AutoSense OS classifies each input and automatically optimizes the settings, providing clarity for spoken content and rich sound quality for music.

Exclusive and Non-Exclusive Programs

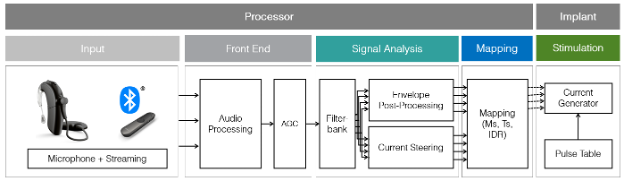

Figure 2. Exclusive and Non-Exclusive Programs.

AutoSense OS is built upon seven fundamental programs, intelligently blended or exclusively selected based on the complexity of the listening environment.

- Exclusive Programs: Three programs are designated as exclusive: "Speech in Loud Noise," "Music," and "Speech in the Car." These programs cannot be blended with others and are selected automatically when the recipient is clearly in one of these specific environments.

- Non-Exclusive Acoustic Programs: Because many real-world environments are complex and do not fit neatly into a single category, AutoSense OS includes four non-exclusive acoustic programs. These can be activated on their own or, more commonly, as a blend for environments that defy simple classification. For example, a bustling outdoor zoo might trigger a blend of Speech in Noise and Comfort in Noise to manage both conversation and pervasive environmental sounds. By contrast, a very noisy restaurant might trigger the exclusive Speech in Loud Noise program to prioritize conversation amid the restaurant noise.
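
The exclusive and non-exclusive behavior described in the list can be expressed as a small decision rule. The sketch assumes that class probabilities are already available, that an exclusive program wins only when it is the most likely class and its probability reaches a hypothetical threshold of 0.5, and that otherwise the non-exclusive programs are blended in proportion to their probabilities. "Calm Situation" and "Comfort in Echo" are assumed names for the two non-exclusive programs the text does not name, and the fallback choice is hypothetical; this is not AB's documented algorithm.

```python
from typing import Dict

EXCLUSIVE = {"Speech in Loud Noise", "Music", "Speech in the Car"}
# "Calm Situation" and "Comfort in Echo" are assumed names.
NON_EXCLUSIVE = ("Calm Situation", "Speech in Noise", "Comfort in Noise", "Comfort in Echo")


def select_programs(probs: Dict[str, float],
                    exclusive_threshold: float = 0.5) -> Dict[str, float]:
    """Map class probabilities to program weights that sum to 1."""
    top = max(probs, key=probs.get)
    if top in EXCLUSIVE and probs[top] >= exclusive_threshold:
        return {top: 1.0}  # exclusive programs are never blended
    # Otherwise blend only the non-exclusive programs, renormalized.
    weights = {c: probs.get(c, 0.0) for c in NON_EXCLUSIVE if probs.get(c, 0.0) > 0.0}
    total = sum(weights.values())
    if total == 0.0:
        return {"Calm Situation": 1.0}  # hypothetical fallback
    return {c: w / total for c, w in weights.items()}


if __name__ == "__main__":
    zoo = {"Speech in Noise": 0.5, "Comfort in Noise": 0.3,
           "Calm Situation": 0.1, "Music": 0.1}
    print(select_programs(zoo))
    print(select_programs({"Speech in Loud Noise": 0.8, "Speech in Noise": 0.2}))
```

In the zoo case the exclusive Music class is ignored and the three non-exclusive programs are blended. In the restaurant case Speech in Loud Noise is selected on its own.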

Clinical Impact and Patient Feedback

Clinical studies and patient feedback consistently support the benefit of AutoSense OS. Patients frequently report no noticeable delay when programs change, indicating that the system operates seamlessly. Its ability to identify challenging listening environments automatically and optimize settings has produced measurable improvements in speech recognition: studies have shown a 46% improvement in speech recognition in noise with AutoSense OS 3.0 enabled, even at a challenging +5 dB signal-to-noise ratio.

Qualitative feedback from recipients reinforces these findings. Patients report high satisfaction, noting reduced car noise and better hearing in difficult situations such as restaurants and group conversations, often without additional accessories such as Roger microphones.

Streaming and Pediatric Applications

Figure 3. Streaming and Pediatric Applications.

The integration of streaming into AutoSense OS is another significant advantage. The processor automatically switches into and out of streaming programs (for Bluetooth phone calls, the Phonak PartnerMic, or Roger microphones) as recipients start or stop streaming, which makes streaming simpler to use. Studies have shown a significant patient preference for phone streaming with Marvel CI devices compared with earlier generations.

AutoSense Sky OS 3.0, developed specifically for pediatric listening environments, shows comparable benefits. Research has shown a 46% increase in speech recognition and significant improvements in listening and clarity ratings across noise levels, even at a challenging -5 dB signal-to-noise ratio. This tailored approach aims to give children and teenagers optimal listening in their everyday situations, including classrooms, libraries, playgrounds, and while listening to music.

Sound Processing

Jacob Sulkers, Coordinator of the Surgical Hearing Implant Program, provided detailed information on the stages of sound processing, elaborating on how the captured acoustic signals are transformed into electrical pulses for the auditory nerve.

Temporal Details: Preserving the Timing Cues

Temporal cues, which carry information about how the acoustic signal changes over time, are fundamental to speech perception and to the naturalness of sound. They convey word and syllable structure (e.g., distinguishing long words from short words), prosody (the rhythm, stress, and intonation of speech), voicing (e.g., 'b' versus 'p'), the strength of frication (e.g., the weak 'f' versus the strong 's'), and manner of articulation such as nasality (e.g., 'm' versus 'b'). Accurate preservation of these timing cues contributes directly to speech clarity and naturalness.

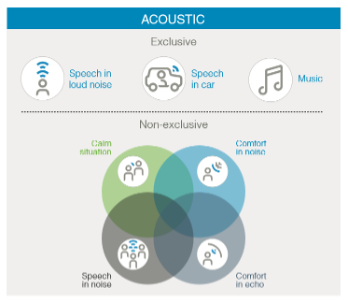

Stimulation Rate and Fine Structure Preservation

The ability of a cochlear implant system to accurately capture and deliver sound to the auditory nerve is profoundly influenced by its stimulation rate. In normal hearing, the ear utilizes both the overall envelope (the slow-changing shape of sound over time) and the fine structure (the very rapid, intricate variations within the sound signal) to comprehend speech. A higher stimulation rate in CI systems enables a more precise reflection of this fine structure within the overall envelope. This increased temporal resolution ensures that the rapid fluctuations and subtle details of speech are preserved and delivered to the auditory nerve. The result is enhanced clarity and naturalness of speech, leading to improved hearing performance, particularly in distinguishing similar-sounding phonemes and appreciating the nuances of spoken language.
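
A toy model shows why pulse rate limits how faithfully rapid fluctuations reach the nerve. The sketch samples a 200 Hz amplitude fluctuation with a pulse train, holds each sampled value until the next pulse (a zero-order hold), and reports the worst-case difference from the true signal. The modulation frequency, the two per-channel pulse rates, and the hold model are assumptions for illustration. The model ignores neural refractoriness and is not a description of any AB strategy.

```python
import math


def envelope(t_s: float, mod_hz: float = 200.0) -> float:
    """Amplitude fluctuation between 0 and 1 (illustrative test signal)."""
    return 0.5 * (1.0 + math.sin(2.0 * math.pi * mod_hz * t_s))


def max_hold_error(pulse_rate_pps: float, duration_s: float = 0.02,
                   mod_hz: float = 200.0, step_s: float = 1e-6) -> float:
    """Worst-case error when the envelope is only sampled at each pulse.

    Between pulses the last sampled value is held (zero-order hold).
    """
    period_s = 1.0 / pulse_rate_pps
    worst = 0.0
    for i in range(int(duration_s / step_s)):
        t = i * step_s
        last_pulse_t = math.floor(t / period_s) * period_s
        worst = max(worst, abs(envelope(t, mod_hz) - envelope(last_pulse_t, mod_hz)))
    return worst


if __name__ == "__main__":
    for rate in (900, 3600):  # hypothetical per-channel pulse rates
        print(rate, "pps -> max error", round(max_hold_error(rate), 3))
```

Quadrupling the pulse rate shrinks the worst-case error substantially, because each pulse has to stand in for a much shorter stretch of the changing signal.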

Figure 4. Low vs. high stimulation rate for cochlear implants.

Spectral Details: Delivering Frequency Information

Spectral details, which encompass the frequency information, are equally critical for auditory perception. Accurate frequency information is essential for a multitude of perceptual tasks, including:

- Vowel Identification: Distinguishing between different vowel sounds (e.g., 'ee' vs. 'ah') relies heavily on the accurate perception of their characteristic formant frequencies.

- Pitch Perception and Intonation: The ability to discern pitch differences and the overall intonation contours of speech contributes to understanding emotion, linguistic emphasis, and distinguishing questions from statements.

- Consonant Place of Articulation: Identifying where a consonant is produced in the vocal tract (e.g., labial 'p', alveolar 't', velar 'k') is highly dependent on precise frequency information.

- Depth and Naturalness of Voice: Rich and detailed frequency information contributes significantly to the perceived depth, naturalness, and timbre of a speaker's voice.

Current Steering (HiRes Fidelity 120)

Advanced Bionics uses current steering in its HiRes Fidelity 120 and HiRes Optima sound processing strategies. In conventional CI systems, electrical stimulation occurs only at the discrete locations of the physical electrodes. With current steering, the AB system can elicit many more pitch percepts than the physical electrode locations alone would provide: its 16 electrodes yield 120 virtual channels. This is achieved by steering electrical current between adjacent contacts. By varying how the current is distributed between two electrodes, the system can stimulate neural populations located between the physical contacts, effectively increasing spectral resolution.
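
The 120-channel figure is consistent with simple arithmetic: 16 electrodes form 15 adjacent pairs, and 15 pairs × 8 steering positions per pair gives 120. The sketch below shows that count and the basic idea of current steering, which splits a fixed total current between two neighboring contacts with a weight `alpha`. The eight evenly spaced `alpha` values and all function names are assumptions for illustration, not AB's fitting parameters.

```python
from typing import List, Tuple


def virtual_channel_count(n_electrodes: int = 16, steps_per_pair: int = 8) -> int:
    """Number of steering positions across all adjacent electrode pairs."""
    return (n_electrodes - 1) * steps_per_pair


def steering_weights(steps_per_pair: int = 8) -> List[float]:
    """Evenly spaced alpha values in [0, 1); alpha = 1 belongs to the next pair."""
    return [k / steps_per_pair for k in range(steps_per_pair)]


def steer(total_current_ua: float, alpha: float) -> Tuple[float, float]:
    """Split a total current between contact A (1 - alpha) and contact B (alpha).

    Shifting alpha shifts the place of stimulation, and typically the
    perceived pitch, between the two physical contacts.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    return (1.0 - alpha) * total_current_ua, alpha * total_current_ua


if __name__ == "__main__":
    print(virtual_channel_count())  # 120
    print(steer(100.0, 0.25))       # (75.0, 25.0)
```

Keeping `alpha` in [0, 1) for each pair avoids counting a shared electrode twice, since `alpha = 1` on one pair is the same stimulation site as `alpha = 0` on the next.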

This enhanced spectral resolution has a marked impact on sound quality. Compared with conventional sound processing, the additional frequency information yields a more natural, clearer, and more detailed sound, improving music appreciation, identification of environmental sounds, and comprehension of tonal languages.

Figure 5. HiRes Fidelity 120 current steering.

Intensity Processing: Managing Loudness for Comfort and Clarity

Intensity, or loudness, must be managed carefully within a CI system so that soft sounds are audible while loud sounds remain comfortable and undistorted.

Input Dynamic Range (IDR)

The Input Dynamic Range (IDR) defines the range of sound levels captured by the microphones that is processed and delivered to the CI system. A wider IDR captures more of the soft sounds in the environment and makes them audible to the recipient. This is particularly important for speech, which is inherently dynamic and varies in intensity from moment to moment. If a speaker's voice dips below the lower edge of a narrow IDR, those soft speech segments may become inaudible and compromise understanding. A wider IDR therefore improves the perception of important, subtle speech sounds.

Clinical research and experience suggest that while the default IDR of 60 dB in AB devices is generally appropriate for most patients, an IDR of 70 dB can be beneficial for new patients. This wider range allows for greater access to soft sounds without necessarily compressing loud sounds excessively. Clinical decisions regarding IDR adjustment are often individualized, based on patient feedback and performance, with the ultimate goal of optimizing audibility and comfort across the full spectrum of environmental sounds.
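
The effect of widening the IDR can be sketched with a simplified mapping in which input levels inside the IDR window are placed linearly (in dB) between threshold (T) and most comfortable (M) stimulation, and levels below the window produce no stimulation above threshold. The 100 dB upper edge of the window, the 35 dB soft-speech level, the linear-in-dB mapping, and the function name are assumptions; real processors apply further stages, such as automatic gain control, that are not modeled here.

```python
def map_to_electrical(input_db: float, upper_db: float = 100.0,
                      idr_db: float = 60.0) -> float:
    """Position of an input level within the electrical dynamic range.

    0.0 = at or below T level (inaudible), 1.0 = M level.
    upper_db is an assumed top of the input window, not an AB specification.
    """
    floor_db = upper_db - idr_db
    if input_db <= floor_db:
        return 0.0
    if input_db >= upper_db:
        return 1.0
    return (input_db - floor_db) / idr_db


if __name__ == "__main__":
    soft_speech_db = 35.0  # illustrative soft speech segment
    for idr in (60.0, 70.0):
        print(idr, "dB IDR ->", round(map_to_electrical(soft_speech_db, idr_db=idr), 3))
```

With a 60 dB IDR, the 35 dB segment falls below the window; with a 70 dB IDR, it maps just above T. In this linear model the trade-off is that each decibel of input occupies a smaller share of the electrical range (1/70 rather than 1/60).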

Signal Analysis: Refining the Auditory Signal

Beyond the fundamental aspects of temporal, spectral, and intensity processing, AB employs signal analysis features designed to further refine the auditory signal, maximizing its clarity and intelligibility for the recipient.

ClearVoice

ClearVoice is an advanced noise-cleaning feature that improves the signal-to-noise ratio (SNR) on each channel of the system. Its primary function is to separate distracting background noise from speech, making communication easier, especially in challenging environments. ClearVoice has been shown to outperform conventional noise-reduction methods.

ClearVoice continuously assesses the auditory environment. When background noise, such as steady-state noise or multi-talker babble, is detected alongside speech, ClearVoice attenuates the noise while preserving the integrity of the speech signal. Studies have shown statistically and clinically significant improvements in speech recognition with ClearVoice enabled, particularly at the medium and high settings. ClearVoice does not eliminate background noise entirely, which preserves spatial awareness, but it substantially improves the SNR so that speech is more audible.

Clinically, a medium setting for ClearVoice is often the default and preferred starting point for most patients, as it provides a benefit in noise without introducing noticeable artifacts. Low or high settings can be considered based on individual patient preferences or specific environmental demands. For instance, a high setting might be beneficial for individuals frequently exposed to extreme noise, while a low setting or even disabling ClearVoice might be preferred by patients whose professions require them to accurately monitor background machinery noise. The flexibility of these settings allows for a highly customized approach to noise management, ensuring both effective communication and environmental awareness.
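
Beyond working channel by channel on the SNR, ClearVoice's internal algorithm is not specified in this document. The sketch below illustrates that general idea under stated assumptions. Each channel's SNR is computed from a given signal level and a given noise estimate. Channels at or above a hypothetical 10 dB knee are left unchanged, and channels below it are attenuated by their SNR shortfall, capped at a maximum set by the low, medium, or high setting. The cap values (6, 12, and 18 dB) and all names are hypothetical, not AB's published parameters.

```python
from typing import Dict, List

# Hypothetical maximum attenuation (dB) for each setting; not AB's published values.
MAX_ATTENUATION_DB: Dict[str, float] = {"off": 0.0, "low": 6.0, "medium": 12.0, "high": 18.0}


def channel_gains_db(signal_db: List[float], noise_db: List[float],
                     setting: str = "medium", snr_knee_db: float = 10.0) -> List[float]:
    """Per-channel gain (dB) that attenuates noise-dominated channels.

    signal_db: per-channel level of the incoming signal (speech plus noise).
    noise_db:  per-channel noise estimate, assumed to come from a separate tracker.
    """
    max_att = MAX_ATTENUATION_DB[setting]
    gains = []
    for s, n in zip(signal_db, noise_db):
        snr = s - n
        shortfall = max(0.0, snr_knee_db - snr)    # how far below the knee this channel is
        gains.append(0.0 - min(max_att, shortfall))  # never cut more than the setting allows
    return gains


if __name__ == "__main__":
    levels, noise = [70.0, 50.0, 45.0], [40.0, 45.0, 45.0]
    for setting in ("low", "medium", "high"):
        print(setting, channel_gains_db(levels, noise, setting))
```

In this toy rule the channel with a 30 dB SNR is untouched, the channel 5 dB below the knee loses 5 dB, and the noisiest channel is limited by the setting: 6 dB at low and 10 dB at medium and high. Consistent with the text, noise is reduced rather than removed.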

SoftVoice

SoftVoice is another important sound-cleaning feature in Advanced Bionics devices, designed specifically to improve the audibility of low-level input. In many acoustic environments, subtle sounds or very soft speech can be obscured by low-level background noise. SoftVoice reduces this unwanted low-level noise so that soft speech and other soft sounds become clearer and easier to detect.
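
The document does not say how SoftVoice separates low-level noise from soft speech. One generic technique with a similar goal is downward expansion: inputs below a knee, chosen to sit under the level of soft speech, are attenuated progressively, while anything above the knee passes unchanged. The sketch below illustrates only that technique; the 35 dB knee, the 2:1 expansion ratio, and the function name are hypothetical.

```python
def expander_gain_db(level_db: float, knee_db: float = 35.0, ratio: float = 2.0) -> float:
    """Downward-expansion gain in dB.

    Above the knee the gain is 0 dB. Below it, every 1 dB drop in input
    produces a `ratio` dB drop in output, pushing the noise floor further down.
    """
    if ratio < 1.0:
        raise ValueError("an expander needs ratio >= 1")
    if level_db >= knee_db:
        return 0.0
    return (level_db - knee_db) * (ratio - 1.0)


if __name__ == "__main__":
    for level in (50.0, 35.0, 30.0, 25.0):
        print(level, "dB in ->", expander_gain_db(level), "dB gain")
```

With a 2:1 ratio, an input 10 dB below the knee receives 10 dB of attenuation, so its output lies 20 dB below the knee. Soft speech above the knee is left intact.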

For new patients, SoftVoice is typically enabled by default to provide the cleanest possible sound. However, for upgrade patients, who may be accustomed to a different sound profile from their previous devices, clinicians may engage in a trial period with SoftVoice enabled and disabled to determine individual preference. The goal is always to maximize the perception of the acoustic signal, regardless of its volume, thereby contributing to a more complete and natural auditory experience.

Stimulation Delivery

The final stage of the sound processing pathway involves the delivery of processed sound as electrical pulses to the auditory system. Advanced Bionics uniquely offers two distinct stimulation options: sequential and paired stimulation, providing clinicians with greater flexibility in customizing the patient's auditory experience.

Sequential Stimulation

In sequential stimulation, only one channel of sound is stimulated at any given moment in time. This method involves a successive activation of electrodes along the array. AB offers several sequential stimulation options, including HiRes Optima S, HiRes S with Fidelity 120, HiRes S, and CIS. These options vary in their specific algorithms and the way they represent sound, providing clinicians with choices based on patient needs and preferences.

Paired Stimulation

In contrast to sequential stimulation, paired stimulation involves the simultaneous activation of two stimulation sites at any given moment in time. This approach can offer unique benefits to patients, potentially influencing sound quality or perceived clarity. Available paired stimulation options include HiRes Optima P, HiRes P with Fidelity 120, HiRes P, and MPS.
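
The difference between the two delivery modes can be illustrated with a simple pulse schedule. In the sketch, a sequential cycle stimulates one electrode per time slot, while a paired cycle stimulates two electrodes per slot. Pairing each electrode with the one half an array away is an assumption chosen to keep the paired contacts spatially separated, and the 18 µs phase duration is hypothetical. Under these assumptions, halving the number of slots per cycle doubles the rate at which each electrode can be stimulated.

```python
from typing import List, Tuple

Slot = Tuple[int, ...]


def sequential_schedule(n_electrodes: int = 16) -> List[Slot]:
    """One electrode per time slot."""
    return [(e,) for e in range(1, n_electrodes + 1)]


def paired_schedule(n_electrodes: int = 16) -> List[Slot]:
    """Two electrodes per slot, each paired with one half an array away (assumed)."""
    if n_electrodes % 2:
        raise ValueError("paired schedule assumes an even number of electrodes")
    half = n_electrodes // 2
    return [(e, e + half) for e in range(1, half + 1)]


def per_channel_rate_pps(schedule: List[Slot], phase_us: float = 18.0) -> float:
    """Pulses per second delivered to each electrode.

    Each slot holds one biphasic pulse (two phases); every electrode
    appears once per cycle, so the rate is 1 / cycle duration.
    """
    slot_s = 2.0 * phase_us * 1e-6
    return 1.0 / (len(schedule) * slot_s)


if __name__ == "__main__":
    seq, par = sequential_schedule(), paired_schedule()
    print(par[:3])  # [(1, 9), (2, 10), (3, 11)]
    print(round(per_channel_rate_pps(seq)), round(per_channel_rate_pps(par)))
```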

HiRes Optima Programs and Clinical Application

HiRes Optima programs (HiRes Optima S and HiRes Optima P) are built upon the foundation of HiRes Fidelity 120, leveraging the benefits of current steering for enhanced frequency resolution. A significant advantage of HiRes Optima programs is their superior battery efficiency, requiring less power compared to other high-resolution options. For new patients and initial activations, most clinicians start with the latest technology, typically a HiRes Optima program, to maximize the benefits of current steering and energy efficiency.

Dr. Sulkers described how he chooses a sound processing strategy. He typically starts with HiRes Optima S, a preference rooted in cumulative clinical experience: many patients initially prefer the sound quality of sequential stimulation. The decision, however, is always patient-centric. At early follow-up appointments (e.g., at one week or one month), clinicians often offer the alternative version (e.g., HiRes Optima P if HiRes Optima S was used first) so that the patient can compare the two and state a preference. Switching between these options requires only minor adjustments to M levels (most comfortable levels), which makes the comparison easy. Patient preference is paramount: if a clear choice is expressed, that option is adopted. If no strong preference emerges, clinicians may default to HiRes Optima S based on their clinical bias and experience.

Conclusion

The pursuit of improving ease of use and optimizing hearing is at the core of the combined technological advancements in front-end and sound processing by Phonak and Advanced Bionics. The meticulous design of microphone systems, coupled with adaptive comfort features, ensures optimal sound capture and comfort across diverse acoustic environments. AutoSense OS largely eliminates the need for manual adjustments, allowing recipients to navigate their daily lives with reduced cognitive load and enhanced auditory clarity.

Advanced Bionics uses the current steering technology of HiRes Fidelity 120 to deliver a richer, more natural, and highly detailed auditory experience. Features such as ClearVoice and SoftVoice refine the signal, improving speech intelligibility in noise and the audibility of soft sounds. The availability of both sequential and paired stimulation options, particularly the power-efficient HiRes Optima programs, gives clinicians the flexibility to tailor sound delivery to individual patient preferences.

Collectively, the front-end processing and sound processing technologies offered by AB give recipients the opportunity to achieve improved speech understanding, greater listening comfort, and enhanced overall satisfaction in challenging and dynamic listening environments. By minimizing the burden of device management, these innovations allow individuals with cochlear implants to engage more fully with their world.

References

Advanced Bionics. (2015). Advanced Bionics technologies for understanding speech in noise. Advanced Bionics.

Advanced Bionics. (2015). Auto UltraZoom and StereoZoom features: Unique Naída CI Q90 solutions for hearing in challenging listening environments. Advanced Bionics.

Advanced Bionics. (2016). Bimodal StereoZoom feature: Enhancing conversation in extreme noise for unilateral AB implant recipients. Advanced Bionics.

Advanced Bionics. (2021). AutoSense OS™ 3.0 operating system. Advanced Bionics.

Advanced Bionics. (2021). Marvel CI technology: Leveraging natural ear acoustics to optimize hearing performance. Advanced Bionics.

Blauert, J. (1997). Spatial hearing: The psychophysics of human sound localization. MIT Press.

Brendel, M., Geissler, G., Büchner, A., Fredelake, S., & Lenarz, T. (2013, November 26-29). The microphone location effect on speech perception using the TComm for the Neptune processor [Conference presentation]. 9th APSCI, Hyderabad, India.

Brendel, M., Michels, A., Agrawal, S., Galster, J., & Arnold, L. (2022, April 26-27). Investigation of automatic scene classification and wireless streaming technology in the Naida Marvel hearing system [Conference presentation]. British Cochlear Implant Group, Cardiff, Wales.

Chang, Y. T., Yang, H. M., Lin, Y. H., Liu, S. H., & Wu, J. L. (2009). Tone discrimination and speech perception benefit in Mandarin-speaking children fit with HiRes fidelity 120 sound processing. Otology & Neurotology, 30(6), 750-757. https://doi.org/10.1097/MAO.0b013e3181b286b2

Chen, C., Stein, A. L., Milczynski, M., Litvak, L. M., & Reich, A. (2015, July 12-17). Simulating pinna effect by use of the real ear sound algorithm in Advanced Bionics CI recipients [Conference presentation]. Conference on Implantable Auditory Prostheses, Lake Tahoe, CA.

Feilner, M., Rich, S., & Jones, C. (2016). Automatic and directional for kids: Scientific background and implementation of pediatric optimized automatic functions. Phonak Insight. Retrieved from www.phonakpro.com/evidence

Findlen, U. M., & Agrawal, S. (2023, June 7-10). Classifier-based noise management technology helps children with cochlear implants listen in noise [Poster highlight presentation]. CI2023: Cochlear Implants in Children and Adults, Dallas, TX.

First, J. B., Holden, L. K., Reeder, R. M., & Skinner, M. W. (2009). Speech recognition in cochlear implant recipients: Comparison of standard HiRes and HiRes 120 sound processing. Otology & Neurotology, 30(2), 146-152. https://doi.org/10.1097/MAO.0b013e3181924ff8

Fischer, W. H., & Schäfer, J. W. (1991). Direction-dependent amplification of the human outer ear. British Journal of Audiology, 25(2), 123–130.

Frohne-Buechner, C., Buechner, A., Gaertner, L., Battmer, R. D., & Lenarz, T. (2004). Experience of uni- and bilateral cochlear implant users with a microphone positioned in the pinna. International Congress Series, 1273, 93-96.

Gifford, R. H., & Revit, L. J. (2010). Speech perception for adult cochlear implant recipients in a realistic background noise: Effectiveness of preprocessing strategies and external options for improving speech recognition in noise. Journal of the American Academy of Audiology, 21(7), 441-451.

Jahn, A. F., & Santos-Sacchi, J. (2001). Physiology of the ear. Singular Pub.

Jones, H. G., Kan, A., & Litovsky, R. Y. (2016). The effect of microphone placement on interaural level differences and sound localization across the horizontal plane in bilateral cochlear implant users. Ear and Hearing, 37(5), e341–e345.

Koch, D. B., Osberger, M. J., Segel, P., & Kessler, D. (2004). HiResolution and conventional sound processing in the HiResolution bionic ear: Using appropriate outcome measures to assess speech recognition ability. Audiology and Neurotology, 9(4), 214-223.

Koch, D. B., Quick, A., Osberger, M. J., Saoji, A., & Litvak, L. (2014). Enhanced hearing in noise for cochlear implant recipients: Clinical trial results for a commercially available speech-enhancement strategy. Otology & Neurotology, 35(5), 803-809. https://doi.org/10.1097/MAO.0000000000000301

Kolberg, E. R., Sheffield, S. W., Davis, T. J., Sunderhaus, L. W., & Gifford, R. H. (2015). Cochlear implant microphone location affects speech recognition in diffuse noise. Journal of the American Academy of Audiology, 26(1), 51-58, 109-110.

Legarth, S., Latzel, M., & Rodrigues, T. (2018). Media streaming: The sound quality wearers prefer. Phonak Field Study News. Retrieved from www.phonakpro.com/evidence

Mayo, P. G., & Goupell, M. J. (2020). Acoustic factors affecting interaural level differences for cochlear-implant users. The Journal of the Acoustical Society of America, 147(4), EL357.

Miller, S., Wolfe, J., Duke, M., Schafer, E., Agrawal, S., Koch, D., & Neumann, S. (2021). Benefits of bilateral hearing on the telephone for cochlear implant recipients. Journal of the American Academy of Audiology. Advance online publication. https://doi.org/10.1055/s-0041-1722982

Olivier, E. (2005). Performance of the Auria T-Mic and the behind-the-ear microphone in noise. Auditory Research Bulletin, Advanced Bionics, 160-161.

Rodrigues, T., & Liebe, S. (2018). Phonak AutoSense OS™ 3.0: The new and enhanced automatic operating system. Phonak Insight. Retrieved from www.phonakpro.com/evidence

Rottmann, T., Brendel, M., Buechner, A., & Lenarz, T. (2010, March 12-13). Connection of a mobile phone to a cochlear implant system [Conference presentation]. 9th Advanced Bionics European Investigators’ Conference, Amsterdam, The Netherlands.

Shaw, E. A. (1974). Transformation of sound pressure level from the free field to the eardrum in the horizontal plane. The Journal of the Acoustical Society of America, 56(6), 1848-1861.

Summerfield, A. Q., & Kitterick, P. T. (2010). Effects of microphone location on the performance of bilateral cochlear implants. Advanced Bionics.

Walsh, W. E., Dougherty, B., Reisberg, D. J., Applebaum, E. L., Shah, C., O'Donnell, P., & Richter, C. P. (2008). The importance of auricular prostheses for speech recognition. Archives of Facial Plastic Surgery, 10(5), 321–328.

Wiener, F. M., & Ross, D. A. (1946). The pressure distribution in the auditory canal in a progressive sound field. The Journal of the Acoustical Society of America, 18(2).