Learning Outcomes

After this course, participants will be able to:

- Describe the role of EEG alpha activity as an indicator of listening effort.

- Describe the association between objectively measured brain activity and behavioral measures regarding working memory.

- Describe the effect of modern hearing aid technology with respect to listening effort when talking on the telephone.

Objective

Measuring listening effort is a potentially valuable approach to evaluating hearing aids. Design: The current study investigated whether electroencephalogram (EEG) data can detect changes in neurophysiological indices of listening effort in seven hearing aid users. To assess the effect of a binaural algorithm that supports listening on the phone, EEG activity was recorded while participants performed an auditory working memory N-back task under low signal-to-noise ratio (SNR) conditions, with the algorithm either activated or deactivated, as well as in a third condition with the algorithm deactivated but a high SNR. Results: Although behavioral responses did not differ between conditions, neurophysiological effects were detected. The auditory event-related potential N1 was more pronounced when the binaural algorithm was active. When the binaural algorithm was deactivated and the SNR was low, EEG alpha power was relatively larger than when the algorithm was activated. The neurophysiological responses with the binaural algorithm activated were comparable to the effect of a 3 dB increase in SNR. Conclusions: The results show that the binaural algorithm modulates neural parameters that have been associated with listening effort. This study underlines EEG as a promising methodology for assessing neural correlates of listening effort.

Introduction

Speech perception can be effortful in noisy environments, particularly for hearing-impaired individuals, even when they use hearing aids (CHABA - Committee on Hearing and Bioacoustics, 1988; McCoy et al., 2005; Klink et al., 2012).

Speech perception under adverse conditions is challenging, and the increased effort needed to decode the spoken signal can negatively impact cognitive functions such as working memory (WM) (Schneider and Pichora-Fuller, 2000). If signal processing is effortful, more processing resources have to be devoted to sensory encoding, leaving fewer resources available for higher-level processing. This “effortfulness hypothesis” (Rabbitt, 1968) is derived from the limited-resource theory (Kahneman, 1973), which states that all mental processes, including perceptual ones, share a common pool of resources with limited capacity. If one process requires more resources, fewer resources are left for other processes (Just and Carpenter, 1992; Rabbitt, 1968; Kahneman, 1973). Conversely, if signal processing can be made to operate more efficiently at the sensory level, resources are released to benefit higher-level processes (Frtusova et al., 2013; Just and Carpenter, 1992; Schneider and Pichora-Fuller, 2000).

Measuring listening effort is of considerable interest in the context of hearing aid fitting (Pichora-Fuller and Singh, 2006). There is no unified definition of listening effort. In line with the definition proposed in the Framework for Understanding Effortful Listening (FUEL; Pichora-Fuller et al., 2016), we understand listening effort as the intentional investment of mental resources, which have corresponding neural underpinnings, in order to comprehend a (speech) signal of interest in an acoustically challenging environment. Moreover, prolonged periods of listening effort lead to reported fatigue and can negatively affect cognitive functions (Edwards, 2007). A number of methods have been used to assess listening effort (McGarrigle et al., 2014), such as dual-task paradigms (e.g., Fraser et al., 2010) or pupillometry, which quantifies listening effort from physiological pupil responses (e.g., Zekveld et al., 2010). Pichora-Fuller and Singh (2006) point out how advances in translational neuroscience can contribute to optimal hearing aid fitting by taking factors such as cognitive effort and its neural correlates into account. Building on this, another potential approach to assessing listening effort is to analyze neurophysiological responses recorded with an electroencephalogram (EEG). Strauss and colleagues (2010) proposed a neurodynamic model to illustrate the link between electrophysiological measures and listening effort. Based on this model, Bernarding and colleagues (2014) conducted an EEG study and reported an increase in phase coherence in the lower alpha frequency band in listening conditions that require increased effort. Findings from other studies also support EEG as an approach to quantifying listening effort, although the studies differ with respect to the type of analysis and the EEG parameters of interest (Strauss et al., 2010; Bernarding et al., 2014; Strauß et al., 2014).

The EEG signal can be decomposed into different frequency bands. There are data linking increased alpha power, or event-related synchronization (ERS), to an increase in cognitive effort (e.g., Klimesch et al., 2007; Obleser et al., 2012). Few studies have applied auditory paradigms to examine alpha activity and its relation to cognitive functioning (Strauß et al., 2014). A recent auditory EEG study demonstrated the similarity in neural activity between increased cognitive demand and increased listening effort (Obleser et al., 2012). The authors manipulated the signal-to-noise ratio (SNR) on the one hand and WM load on the other. Focusing their analyses on the alpha frequency band, they showed an increase in alpha power both with increased WM load and with decreased SNR. This was interpreted as an index of increased listening effort under suboptimal SNR conditions (e.g., Klimesch et al., 2007; Obleser et al., 2012).
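For readers less familiar with the terminology, the conventional band-power definitions can be written as follows. Here $A$ is band power (e.g., in the alpha band) in a post-stimulus interval and $R$ is band power in a pre-stimulus reference interval. These are standard textbook definitions given for orientation only, not necessarily the exact normalization used in each of the cited studies.

```latex
\mathrm{ERD/ERS}\,(\%) = \frac{A - R}{R} \times 100,
\qquad
\mathrm{ERSP}\,(\mathrm{dB}) = 10 \log_{10}\!\left(\frac{A}{R}\right)
```

Positive values indicate a power increase relative to baseline (ERS) and negative values a power decrease (event-related desynchronization, ERD). For example, halving the power ($A = R/2$) corresponds to $-50\,\%$ or about $-3$ dB.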

Alpha activity is presumed to reflect the suppression of interfering information (e.g., noise). That is, alpha activity is thought to be important for inhibiting distracting information in order to focus on task-relevant information, and it is expected to be localized over scalp areas where suppression is necessary (Foxe and Snyder, 2011; Strauß et al., 2014).

The current study aims to evaluate a binaural hearing aid algorithm for listening to speech on the telephone in background noise. There is longstanding evidence for the benefit of binaural over monaural hearing (e.g., Avan et al., 2015) and for an advantage of binaural over monaural hearing aid fitting (Pichora-Fuller and Singh, 2006; Boymans et al., 2008).

Hearing aid users report marked difficulties in speech perception on the telephone (Kochkin, 2010). The binaural hearing aid algorithm DuoPhone® by Phonak® transmits the speech signal from the telephone ear to the contralateral ear. Previous work (Nyffler, 2010) has shown a benefit of DuoPhone® when adult participants listened to speech in noise. A study with children showed benefits in speech intelligibility with DuoPhone® (Wolfe et al., 2015). Another study of DuoPhone® found no statistically significant improvement in speech intelligibility with binaural compared with monaural presentation of the telephone signal, but did find better ratings of subjective listening effort (Latzel et al., 2014). To obtain objective, physiological data indicative of changes in listening effort, the current project assessed the DuoPhone® function experimentally by recording and analyzing EEG data. Based on the “effortfulness hypothesis” (Rabbitt, 1968), we implemented an auditory N-back WM task (cf. Gevins and Cutillo, 1990) in different listening conditions (see section Materials and Methods) to measure changes in (listening) effort. We recorded behavioral and EEG data. With regard to event-related brain potentials (ERPs), we focused the analyses on the auditory N1 and P3. The N1 is an automatic brain response elicited by the onset of a sound. It is involved in auditory feature analysis, and its amplitude increases with increasing stimulus intensity (Näätänen and Picton, 1987). Although the N1 reflects early sensory processing, its amplitude can be modulated by attention, with attended stimuli eliciting larger amplitudes than unattended stimuli (Näätänen and Picton, 1987). In normal-hearing adults, the N1 peaks between 90 and 150 ms after stimulus onset and is largest at electrode sites around the vertex (Eggermont and Ponton, 2002).
The N1 tends to be delayed in older adults (e.g., Winneke and Phillips, 2011), and its source is thought to lie in the planum temporale of the superior temporal gyrus, near primary auditory cortex (Eggermont and Ponton, 2002; Näätänen and Picton, 1987).

The P3 is a broad positive ERP component occurring between 300 and 1000 ms after stimulus presentation, and two subcomponents have been described. The P3a is elicited by distinct, infrequent stimuli presented among frequent stimuli without requiring a response from the participant. In contrast, the P3b is elicited during task-relevant processing of stimuli (cf. Polich, 2007). Here, P3 always refers to the P3b subcomponent. The P3 is maximal at centro-parietal sites, and several studies have validated the P3 as a measure of WM load (e.g., Segalowitz et al., 2001; Frtusova et al., 2013), showing that P3 amplitude decreases as WM load increases.

Hypotheses

We hypothesized that a reduction in listening effort through the DuoPhone® function would improve WM processing, as reflected by faster response times and better accuracy in the N-back task. We further hypothesized an increase in N1 amplitude with DuoPhone® compared with no DuoPhone®, due to better signal quality (Näätänen and Picton, 1987). Based on the shared resource hypothesis (Rabbitt, 1968), this improved auditory processing should yield a decrease in WM load, as indexed by a larger P3 amplitude in the DuoPhone® condition.

With respect to EEG alpha activity, we expected alpha power to decline with decreasing listening effort (Obleser et al., 2012). Therefore, if the DuoPhone® function improves auditory signal processing, less effort should be exerted on suppressing irrelevant noise, which should in turn be visible as reduced alpha power (i.e., increased event-related desynchronization, ERD). Similarly, a decrease in alpha power should also be evident in conditions with an improved SNR, even without DuoPhone®.

Materials and Methods

Participants

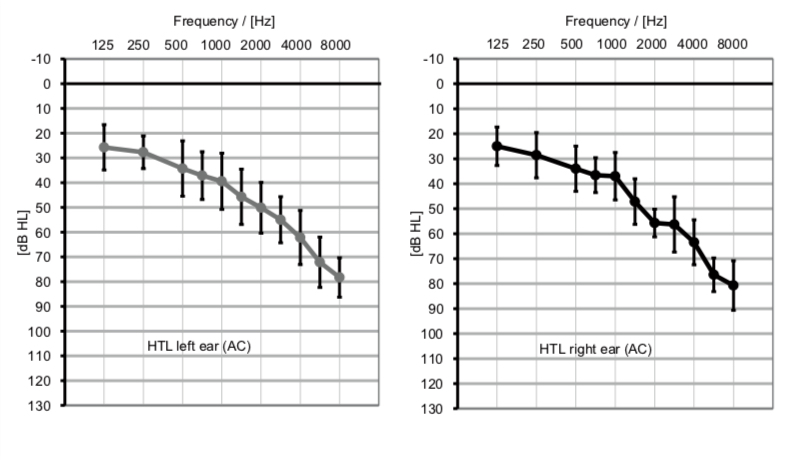

All participants (N = 10) were experienced hearing aid users recruited from a previous study investigating DuoPhone® (Latzel et al., 2014), which limited the number of potential participants for this study. Data from three participants had to be discarded because of very noisy EEG data (n = 2) or poor behavioral performance (i.e., performance deviating more than four standard deviations from the mean; n = 1). The final sample consisted of seven older adults (mean age 73.4 years, SD = 3.46; 2 women) without any history of neurological or psychological impairment, but with moderate hearing loss (Figure 1). The study was approved by the local ethics committee of the Carl von Ossietzky University of Oldenburg. Participants were compensated for their participation.

Figure 1. Average pure tone audiograms (error bars = standard deviation) of the participants (n = 7) for the left ear (left) and the right ear (right).

Stimuli and Task

The stimuli for the 2-back task (described in more detail below) consisted of spoken monosyllabic German digits (1 to 9, excluding the disyllabic 7) presented monaurally via open headphones (Sennheiser HD-650) only to each participant’s preferred “telephone ear”. The advantage of open headphones is that they only minimally perturb the surrounding background noise, so that the noise reaches the ear and is picked up by the hearing aid microphones. The stimuli were taken from recordings of the German version of the Digit-Triplet Test (DTT; Zokoll et al., 2012) and presented at 65 dB SPL. Headphones were used instead of a telephone to ensure proper and consistent placement of the sound source relative to the ear for all participants. Using headphones instead of a regular telephone required processing the signal to simulate the sound characteristics of a telephone signal. This was achieved by establishing a landline phone connection using the G.711 codec. On the far end, the signal (i.e., the digits) was sent digitally into the phone line (PhonerLite, 2014). On the near end, the transmitted signal was recorded on a digital answering machine (sipgate GmbH, Germany). The stimuli were presented using OpenVibe software V. 0.17.0 (Renard et al., 2010). The digits of the 2-back task were presented via the open headphones simultaneously with background noise from loudspeakers at azimuths of 45°, 135°, 225°, and 315°. The background noise consisted of uncorrelated International Speech Test Signals (ISTS; Holube et al., 2010). The background noise level was adjusted individually based on the participant’s performance in the DTT that preceded the experimental N-back task (see section Procedure).
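The study used a real landline connection with the G.711 codec. For readers who want to approximate such a channel offline, the following is a rough sketch under stated assumptions: a 300 to 3400 Hz band limit (typical of narrowband telephony) and the continuous A-law companding curve with A = 87.6 followed by 8-bit uniform quantization in the compressed domain. Real G.711 operates at 8 kHz sampling and uses a segmented piecewise-linear approximation of this curve, so this is not a bit-exact codec. All function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

A = 87.6  # standard A-law compression parameter


def alaw_compress(x):
    """Continuous A-law compression of samples in [-1, 1]."""
    ax = np.abs(x)
    denom = 1.0 + np.log(A)
    small = A * ax / denom
    # np.maximum avoids log(0) in the branch that np.where discards anyway
    large = (1.0 + np.log(A * np.maximum(ax, 1.0 / A))) / denom
    return np.sign(x) * np.where(ax < 1.0 / A, small, large)


def alaw_expand(y):
    """Inverse of alaw_compress."""
    ay = np.abs(y)
    denom = 1.0 + np.log(A)
    small = ay * denom / A
    large = np.exp(ay * denom - 1.0) / A
    return np.sign(y) * np.where(ay < 1.0 / denom, small, large)


def simulate_phone_channel(x, fs, bits=8):
    """Hypothetical telephone-channel approximation (requires fs > 6800 Hz)."""
    # Zero-phase band limit to the narrowband telephone range.
    sos = butter(4, [300, 3400], btype="bandpass", fs=fs, output="sos")
    y = sosfiltfilt(sos, x)
    # Normalize to full scale before companding.
    y = np.clip(y / max(np.max(np.abs(y)), 1e-12), -1.0, 1.0)
    # Uniform quantization in the compressed (A-law) domain, then expansion.
    levels = 2 ** (bits - 1) - 1
    q = np.round(alaw_compress(y) * levels) / levels
    return alaw_expand(q)
```

Quantizing after compression spends relatively more quantization levels on quiet samples, which is the purpose of companding in telephony.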

In the 2-back task, participants were asked to decide for each digit they heard whether it matched the digit presented two positions earlier. If the two digits were identical (match trial), participants indicated this by pressing the space bar of a standard keyboard. Non-match trials did not require a response. The digits were presented in a pseudo-randomized sequence with a 40/60 ratio of match to non-match trials (cf. Frtusova et al., 2013). Each condition (see below) comprised a total of 120 digits. The stimulus onset asynchrony was 2000 ms, and the response time window was fixed at 100 to 1600 ms after stimulus onset.
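The block structure described above can be sketched as follows. This is a minimal illustration, not the authors' stimulus script: the exact randomization constraints are not reported, so the sketch simply enforces exactly 40% matches (48 of 120 digits), never places a match in the first two positions, and treats a key press outside the 100 to 1600 ms window as no response. Function names are hypothetical.

```python
import random

DIGITS = [1, 2, 3, 4, 5, 6, 8, 9]  # German digits 1-9 without the disyllabic 7


def make_2back_sequence(n_trials=120, match_ratio=0.4, n_back=2, seed=None):
    """Return (digits, is_match) for one pseudo-randomized n-back block."""
    rng = random.Random(seed)
    n_matches = round(match_ratio * n_trials)
    # The first n_back positions have no predecessor, so they cannot be matches.
    match_positions = set(rng.sample(range(n_back, n_trials), n_matches))
    digits, is_match = [], []
    for i in range(n_trials):
        if i in match_positions:
            digits.append(digits[i - n_back])
            is_match.append(True)
        else:
            # Non-match: any digit except the one presented n_back positions earlier.
            forbidden = digits[i - n_back] if i >= n_back else None
            digits.append(rng.choice([d for d in DIGITS if d != forbidden]))
            is_match.append(False)
    return digits, is_match


def score_block(is_match, responses_ms, window=(100, 1600)):
    """Count signal-detection outcomes; responses_ms holds latencies or None."""
    counts = {"hit": 0, "miss": 0, "false_alarm": 0, "correct_rejection": 0}
    for match, rt in zip(is_match, responses_ms):
        pressed = rt is not None and window[0] <= rt <= window[1]
        if match:
            counts["hit" if pressed else "miss"] += 1
        else:
            counts["false_alarm" if pressed else "correct_rejection"] += 1
    return counts
```

The four counts returned by `score_block` are exactly what a d-prime computation needs (hit rate and false-alarm rate).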

Procedure

After the task had been explained to the participants and informed consent had been obtained, a modified version of the DTT was conducted. The digits in the DTT were presented at a fixed level of 65 dB SPL, and the noise level was adjusted adaptively to obtain the individual SNR corresponding to a digit identification rate of 50% (SNR50). For the DTT, the participants were fitted with hearing aids, and the DuoPhone® function was deactivated. The digits of the DTT were presented via open headphones while the ISTS background noise came from the four surrounding loudspeakers. For the 2-back task, the noise level obtained at SNR50 was decreased by 4 dB (i.e., the SNR was improved by 4 dB). Based on previous pilot testing, this improvement in SNR was estimated to yield a speech identification rate of about 90%. No EEG was recorded during the DTT.
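The exact adaptive rule of the DTT is not described here. As a generic illustration of how an adaptive track homes in on the 50% point, the sketch below uses a simple 1-up/1-down staircase, a classic transformed up-down procedure that converges on the 50%-correct level of the psychometric function. Step size, trial count, number of averaged reversals, and the simulated listener are all hypothetical choices, not the DTT's parameters.

```python
import math
import random


def one_up_one_down(respond, start_snr=0.0, step_db=2.0, n_trials=40, n_last=6):
    """Generic 1-up/1-down adaptive track; converges near the 50%-correct SNR.

    respond(snr) -> bool: True if the listener identified the digits correctly.
    Returns the mean SNR (dB) at the last `n_last` reversal points."""
    snr, last_direction, reversals = start_snr, None, []
    for _ in range(n_trials):
        # Correct -> make it harder (lower SNR); incorrect -> make it easier.
        direction = -1 if respond(snr) else 1
        if last_direction is not None and direction != last_direction:
            reversals.append(snr)  # the track changed direction at this SNR
        last_direction = direction
        snr += direction * step_db
    if not reversals:
        raise ValueError("no reversals; increase n_trials or the step size")
    tail = reversals[-n_last:]
    return sum(tail) / len(tail)


# Hypothetical probabilistic listener with a logistic psychometric function.
_rng = random.Random(0)


def simulated_listener(snr, snr50=-7.0, slope=0.5):
    p_correct = 1.0 / (1.0 + math.exp(-slope * (snr - snr50)))
    return _rng.random() < p_correct


estimate = one_up_one_down(simulated_listener, n_trials=60)
```

Averaging over reversal points rather than taking the final SNR reduces the influence of the random up-and-down fluctuation of the track.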

After the DTT was completed, the EEG cap and the headphones were placed on the participant’s head, and participants were allowed to practice the 2-back task. The three conditions were presented in separate blocks in a pseudo-randomized order to counterbalance the sequence across participants:

- DuoPhone® off & low SNR (DPoff_LoSNR): the DuoPhone® function was turned off and the background noise level was set to yield an SNR of SNR50 + 4 dB

- DuoPhone® on & low SNR (DPon_LoSNR): the DuoPhone® function was turned on and the background noise level was set to yield an SNR of SNR50 + 4 dB

- DuoPhone® off & high SNR (DPoff_HiSNR): the DuoPhone® function was turned off and the background noise level was set to yield an SNR of SNR50 + 7 dB.

Low SNR thus corresponded to the individual SNR50 from the DTT plus 4 dB (i.e., the individual noise level obtained in the DTT was lowered by 4 dB). High SNR corresponded to a further 3 dB improvement (i.e., the noise level was lowered by an additional 3 dB, yielding SNR50 + 7 dB). Each condition lasted about five minutes, and participants were given breaks in between. The entire session, including the DTT and the EEG cap setup, lasted two hours.
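Because the digits were fixed at 65 dB SPL, each condition's SNR translates directly into a noise level. The sketch below makes that arithmetic explicit; the SNR50 of -8 dB used in the check is a hypothetical value chosen for illustration, since individual SNR50 values are not reported.

```python
SPEECH_LEVEL_DB_SPL = 65.0  # fixed digit presentation level used in the study


def noise_level(snr50_db, snr_improvement_db, speech_level=SPEECH_LEVEL_DB_SPL):
    """Noise level (dB SPL) that yields SNR = SNR50 + improvement (all in dB)."""
    target_snr = snr50_db + snr_improvement_db
    return speech_level - target_snr


def condition_noise_levels(snr50_db):
    """Noise levels for the three experimental conditions."""
    return {
        "DPoff_LoSNR": noise_level(snr50_db, 4.0),
        "DPon_LoSNR": noise_level(snr50_db, 4.0),
        "DPoff_HiSNR": noise_level(snr50_db, 7.0),
    }
```

For a hypothetical SNR50 of -8 dB, the noise would be at 73 dB SPL at SNR50, 69 dB SPL in both low-SNR conditions, and 66 dB SPL in the high-SNR condition. The two DuoPhone® conditions share the same acoustic setting; only the hearing aid processing differs.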

EEG Data

A 24-channel wireless Smarting EEG system (mBrainTrain, Belgrade, Serbia) was used to record EEG during the 2-back task. Brain activity was recorded from 24 Ag/AgCl electrodes mounted in a custom-made elastic EEG cap (EasyCap, Herrsching, Germany) and arranged according to the International 10–20 system (Jasper, 1958). OpenVibe software V.0.17.0 was used to record the EEG data (Renard et al., 2010). The EEG was recorded at a sampling rate of 500 Hz with a low-pass filter of 250 Hz. Impedances were kept below 10 to 15 kΩ. Offline EEG processing was conducted using EEGLab v.12 (Delorme and Makeig, 2004). EEG recordings were re-referenced offline to linked left and right mastoids. Continuous EEG data were band-pass filtered from 0.1 Hz to 30 Hz after a 50 Hz notch filter had been applied. Ocular and other artefacts were identified by visual inspection and removed using independent component analysis (ICA).
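The preprocessing was done in EEGLab. As a rough SciPy stand-in for the re-referencing and filtering steps (ICA-based artefact removal is not shown), one might write the sketch below. The filter types and orders (a notch with Q = 30, a 4th-order Butterworth band-pass in second-order sections, both applied forward and backward for zero phase) are assumptions; EEGLab's own filters differ in design.

```python
import numpy as np
from scipy.signal import butter, filtfilt, iirnotch, sosfiltfilt


def preprocess(eeg, fs, mastoid_idx, line_freq=50.0, band=(0.1, 30.0)):
    """Hypothetical preprocessing sketch.

    eeg: array (n_channels, n_samples); mastoid_idx: indices of the two mastoids."""
    # Re-reference every channel to the average of the two mastoid channels.
    ref = eeg[list(mastoid_idx)].mean(axis=0)
    x = eeg - ref
    # Zero-phase 50 Hz notch to suppress line noise.
    b, a = iirnotch(line_freq, Q=30.0, fs=fs)
    x = filtfilt(b, a, x, axis=-1)
    # Zero-phase 0.1-30 Hz band-pass; SOS form is numerically safer at 0.1 Hz.
    sos = butter(4, band, btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)
```

Because re-referencing and filtering are both linear, their order does not change the result; zero-phase filtering is used so that ERP latencies are not shifted.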

The continuous EEG data were epoched from -1000 ms to 2000 ms around the onset of each digit to compute averaged ERPs as well as event-related spectral perturbations (ERSP; time-frequency analysis). ERPs were baseline-corrected using a 200 ms pre-stimulus interval. To extract time-frequency (ERSP) data, epoched trial data were convolved with Morlet wavelets whose width increased from 3 cycles at the lowest frequency to 50 cycles at the highest, and power was estimated for frequencies from 3 Hz to 250 Hz across the entire epoch (-1000 ms to 2000 ms relative to stimulus onset). ERSP estimates were obtained separately for each participant and each of the three conditions. Only epochs from match trials with a correct response were included in the subsequent statistical analyses.
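The time-frequency step can be illustrated with a plain NumPy sketch of Morlet-wavelet power and a dB baseline normalization. This is not EEGLab's `newtimef`; the linear scaling of cycles with frequency, the ±3.5 SD wavelet support, the envelope-sum normalization, and the baseline window (the ERSP baseline is not stated above) are all assumptions. Power is averaged over trials before the dB conversion.

```python
import numpy as np


def morlet_power(x, fs, freqs, cycles):
    """Power of a 1-D signal convolved with complex Morlet wavelets."""
    power = np.empty((len(freqs), len(x)))
    for k, (f, n_cyc) in enumerate(zip(freqs, cycles)):
        sigma_t = n_cyc / (2 * np.pi * f)  # temporal SD of the Gaussian envelope
        t = np.arange(-3.5 * sigma_t, 3.5 * sigma_t, 1.0 / fs)
        wavelet = np.exp(2j * np.pi * f * t) * np.exp(-t**2 / (2 * sigma_t**2))
        wavelet /= np.sum(np.abs(wavelet))  # scale independent of wavelet length
        power[k] = np.abs(np.convolve(x, wavelet, mode="same")) ** 2
    return power


def ersp_db(epochs, fs, times, freqs, cycles, baseline=(-0.5, -0.1)):
    """Trial-averaged power in dB relative to mean baseline power per frequency.

    epochs: array (n_trials, n_samples); times: seconds relative to stimulus onset."""
    mean_power = np.mean([morlet_power(ep, fs, freqs, cycles) for ep in epochs], axis=0)
    in_base = (times >= baseline[0]) & (times < baseline[1])
    base = mean_power[:, in_base].mean(axis=1, keepdims=True)
    return 10 * np.log10(mean_power / base)
```

More cycles give finer frequency resolution at the cost of temporal resolution, which is why cycle counts are usually increased with frequency.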

Results

Behavioral Findings

Behavioral results include response times and accuracy (d-prime; e.g., Frtusova et al., 2013). D-prime (d’) takes an individual’s response bias into account by combining the standardized hit rate and false-alarm rate (d’ = z(hit rate) − z(false-alarm rate); Yanz, 1984). Behavioral data were analyzed using repeated-measures ANOVAs with the within-subject factor condition (DPon_LoSNR, DPoff_LoSNR, DPoff_HiSNR). The analyses revealed no statistically significant differences between conditions, either for response times (DPon_LoSNR: m = 896 ms, SD = 195; DPoff_LoSNR: m = 860 ms, SD = 152; DPoff_HiSNR: m = 790 ms, SD = 157) or for accuracy (DPon_LoSNR: m = 2.31, SD = 0.76; DPoff_LoSNR: m = 2.46, SD = 0.76; DPoff_HiSNR: m = 2.56, SD = 0.75).
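The d-prime computation can be sketched with the inverse standard-normal CDF from SciPy. The log-linear correction (adding 0.5 to each cell) is an assumption included to keep d’ finite when a participant has no misses or no false alarms; the text does not state how such extreme rates were handled.

```python
from scipy.stats import norm


def d_prime(hits, misses, false_alarms, correct_rejections, correction=True):
    """d' = z(hit rate) - z(false-alarm rate)."""
    if correction:
        # Log-linear correction keeps rates away from 0 and 1, where z() is infinite.
        hits, misses = hits + 0.5, misses + 0.5
        false_alarms, correct_rejections = false_alarms + 0.5, correct_rejections + 0.5
    hit_rate = hits / (hits + misses)
    fa_rate = false_alarms / (false_alarms + correct_rejections)
    return norm.ppf(hit_rate) - norm.ppf(fa_rate)
```

For example, a hit rate of .90 and a false-alarm rate of .10 (without correction) give d’ ≈ 2.56, while equal hit and false-alarm rates give d’ = 0.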

Electrophysiological Results

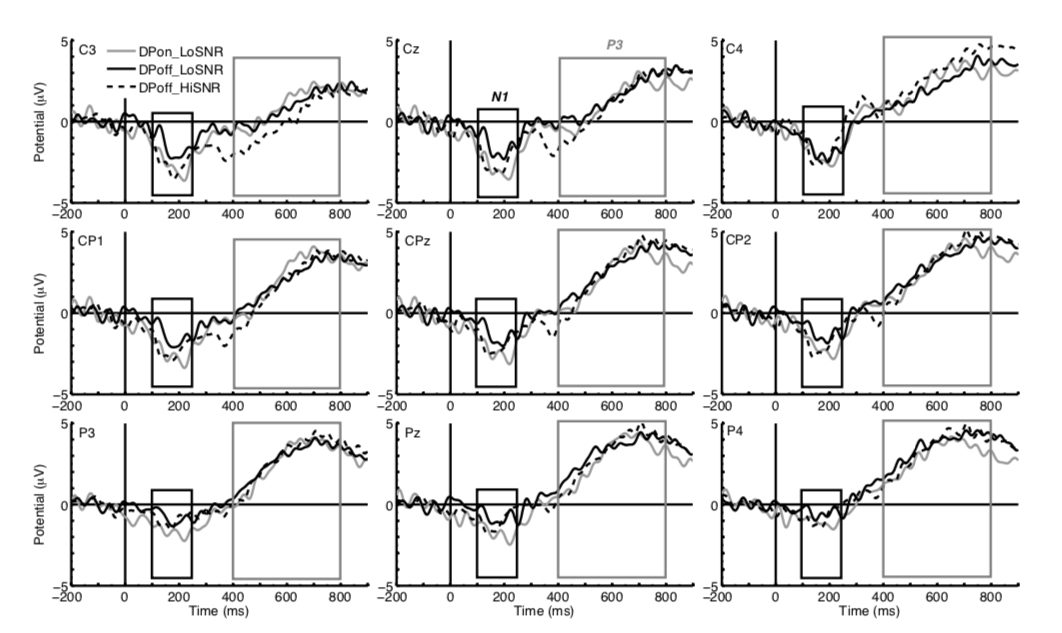

Figure 2 illustrates the ERP responses to match trials followed by correct responses for the conditions DPoff_LoSNR, DPon_LoSNR, and DPoff_HiSNR. The waveforms are taken from central, centro-parietal, and parietal sites to show the N1 and P3 components. The N1 is most prominent 100 to 250 ms after stimulus onset, whereas the P3 is a broad positive wave 400 to 800 ms after stimulus onset.

Figure 2. ERP waveforms of the conditions DPoff_LoSNR (black solid line), DPon_LoSNR (grey solid line), and DPoff_HiSNR (black dotted line) at central electrode sites (top row, left to right: C3, Cz, C4), centro-parietal sites (middle row, left to right: CP1, CPz, CP2), and parietal sites (bottom row, left to right: P3, Pz, P4). The black box indicates the time window of the N1 (100 to 250 ms after stimulus onset) and the grey box indicates the time window of the P3 (400 to 800 ms after stimulus onset).

N1 Results

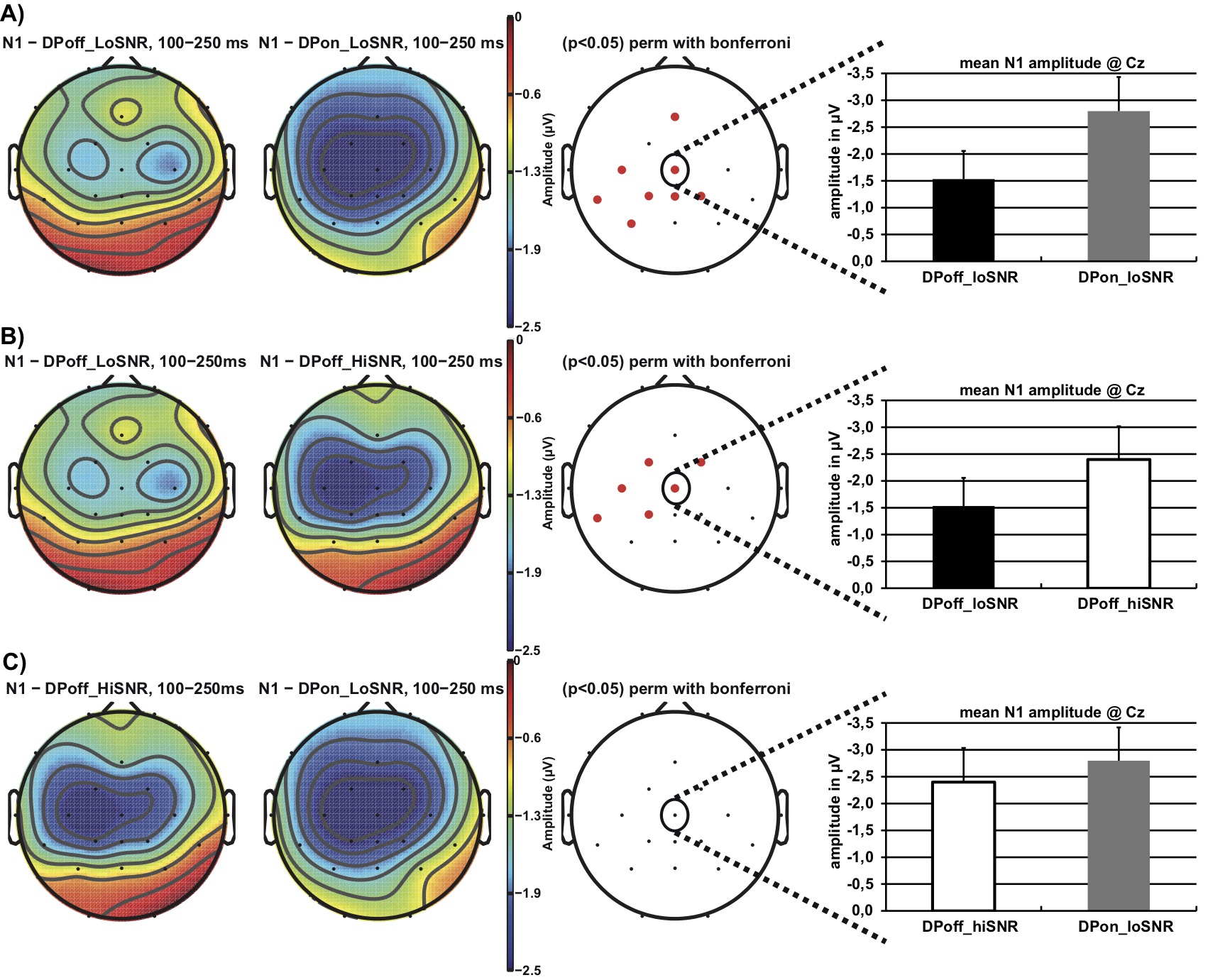

Figure 3 illustrates the topographical distribution of the N1 effect in the time interval 100 to 250 ms after stimulus onset. Topographical differences in the N1 were analyzed using Bonferroni-corrected permutation t-tests with a significance level of α = .05, as implemented in EEGLab v.12. Figure 3a shows that the N1 amplitude is larger (i.e., more negative) at fronto-central sites in the DPon_LoSNR than in the DPoff_LoSNR condition. Similarly, the N1 amplitude is larger in the DPoff_HiSNR than in the DPoff_LoSNR condition (Figure 3b). No statistically significant differences in N1 amplitude are apparent between the DPon_LoSNR and DPoff_HiSNR conditions (Figure 3c).

Figure 3. Topographical distribution of the N1 amplitude in the time window 100 to 250 ms after stimulus onset contrasting the conditions: a) DPoff_LoSNR vs. DPon_LoSNR, b) DPoff_HiSNR vs. DPoff_LoSNR, and c) DPoff_HiSNR vs. DPon_LoSNR. The red dots on the panels in the third column indicate electrode sites where the conditions differ significantly from each other (Bonferroni-corrected permutation t-tests, p < .05). The panels on the right display bar graphs of the N1 mean amplitude at electrode site Cz with corresponding standard errors; note that negativity is plotted upwards.
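The logic of a paired permutation test with Bonferroni correction can be sketched as follows. EEGLab draws random permutations and uses a t statistic; this simplified sketch instead enumerates all sign flips exactly, which is feasible with seven participants (2^7 = 128 patterns), and uses the mean within-subject difference as the statistic. The function names and the input layout (participants × electrodes of, e.g., mean N1 amplitudes) are assumptions.

```python
import itertools

import numpy as np


def paired_sign_flip_test(cond_a, cond_b):
    """Exact two-sided paired permutation test, one p-value per channel.

    cond_a, cond_b: arrays (n_subjects, n_channels)."""
    diffs = np.asarray(cond_a, float) - np.asarray(cond_b, float)
    n = diffs.shape[0]
    observed = np.abs(diffs.mean(axis=0))
    # Under H0 each subject's difference is equally likely to be + or -.
    signs = np.array(list(itertools.product([1.0, -1.0], repeat=n)))  # (2**n, n)
    null = np.abs(signs @ diffs / n)  # (2**n, n_channels)
    return (null >= observed - 1e-12).mean(axis=0)


def bonferroni(p_values):
    """Multiply each p-value by the number of tests, capped at 1."""
    p = np.asarray(p_values, float)
    return np.minimum(p * p.size, 1.0)
```

With exact enumeration and seven participants, the smallest attainable two-sided p-value is 2/128 ≈ .016, which bounds how many channels can be corrected for while still reaching α = .05.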

P3 Results

Visual inspection of the ERP waveforms in Figure 2 suggests that the P3 responses overlap across all conditions. P3 amplitudes were computed by averaging the ERPs to correct match trials over the time window 400 to 800 ms after stimulus onset at electrode site Pz, where the P3 is most prominent. Neither permutation t-tests nor a repeated-measures ANOVA with the within-subject factor condition revealed any statistically significant difference between conditions (DPoff_LoSNR: m = 3.27 µV, SD = 2.68; DPon_LoSNR: m = 2.90 µV, SD = 3.40; DPoff_HiSNR: m = 3.19 µV, SD = 2.98).
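A one-way repeated-measures ANOVA of the kind used for the behavioral and P3 data can be sketched directly from its sums of squares. The input would be one value per participant and condition (e.g., a 7 × 3 array of mean P3 amplitudes at Pz). The text does not state whether a sphericity correction was applied; this sketch applies none.

```python
import numpy as np
from scipy.stats import f as f_dist


def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: array (n_subjects, k_conditions). Returns (F, df_effect, df_error, p)."""
    x = np.asarray(data, float)
    n, k = x.shape
    grand = x.mean()
    ss_cond = n * np.sum((x.mean(axis=0) - grand) ** 2)   # between conditions
    ss_subj = k * np.sum((x.mean(axis=1) - grand) ** 2)   # between subjects
    ss_total = np.sum((x - grand) ** 2)
    ss_error = ss_total - ss_cond - ss_subj               # condition x subject residual
    df_cond, df_error = k - 1, (n - 1) * (k - 1)
    F = (ss_cond / df_cond) / (ss_error / df_error)
    return F, df_cond, df_error, f_dist.sf(F, df_cond, df_error)
```

Removing the between-subject sum of squares from the error term is what distinguishes this from a between-groups ANOVA; with seven participants and three conditions the test has 2 and 12 degrees of freedom.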

ERSP Time-Frequency Data

ERSP data were analyzed using Bonferroni-corrected permutation t-tests with a significance level of α = .05 (EEGLab v.12). We restricted the ERSP analyses to the alpha frequency band from 7 to 14 Hz because, as outlined in the Introduction, this band has been associated with listening effort and cognitive load over parietal brain areas. To reduce the number of statistical tests, the permutation tests of ERSP data were restricted a priori to the parietal electrode sites P3, Pz, and P4.

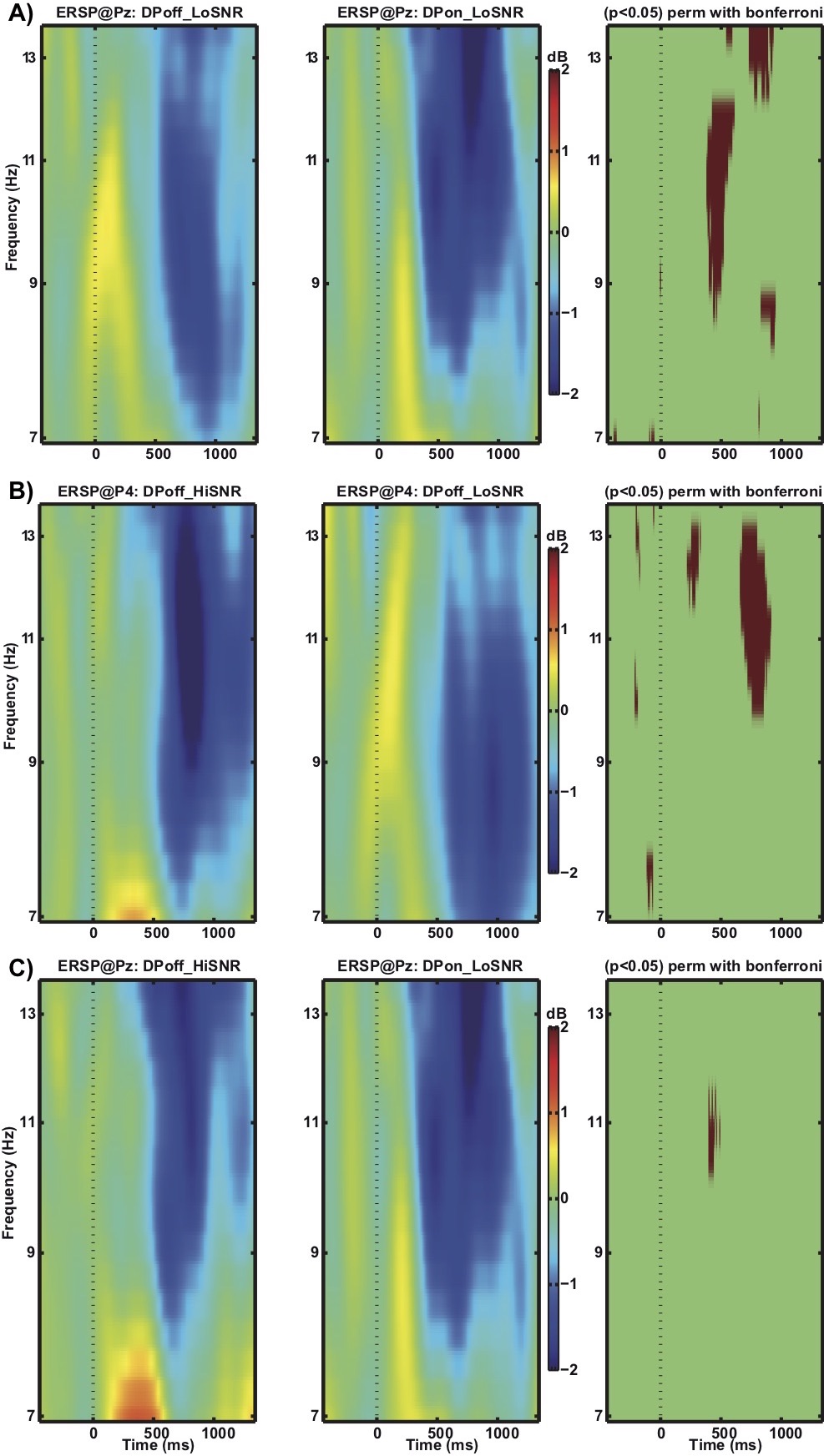

Figure 4 depicts the ERSP data. The contrast between DPoff_LoSNR and DPon_LoSNR at electrode site Pz (Figure 4a) reveals that, relative to baseline, alpha power (9 to 12 Hz) is significantly more reduced in the DPon_LoSNR than in the DPoff_LoSNR condition over an extended period (from 381 ms to 615 ms after stimulus onset). The pattern of results is similar for the comparison of DPoff_HiSNR and DPoff_LoSNR (Figure 4b): relative to baseline, alpha power is significantly more reduced in the DPoff_HiSNR than in the DPoff_LoSNR condition from 660 ms to 920 ms at electrode site P4. These results differ slightly from the DPon_LoSNR vs. DPoff_LoSNR contrast in that the differences are most prominent at a different electrode site (P4 vs. Pz) and during a somewhat later time window. Overall, the alpha-band ERSP patterns appear similar for the DPoff_HiSNR and DPon_LoSNR conditions. A direct comparison of DPon_LoSNR with DPoff_HiSNR is consistent with this: no statistically significant differences were found at Pz (Figure 4c) or P4 (not shown).

Figure 4. Comparison of ERSP of alpha frequency band (7-14 Hz) a) at electrode Pz for conditions DPoff_LoSNR and DPon_LoSNR, b) at electrode P4 for conditions DPoff_HiSNR and DPoff_LoSNR, and c) at electrode Pz for conditions DPoff_HiSNR and DPon_LoSNR. Red areas in the third column indicate time points where the difference between the two conditions reached statistical significance.

Correlation Between EEG and Behavioral Data

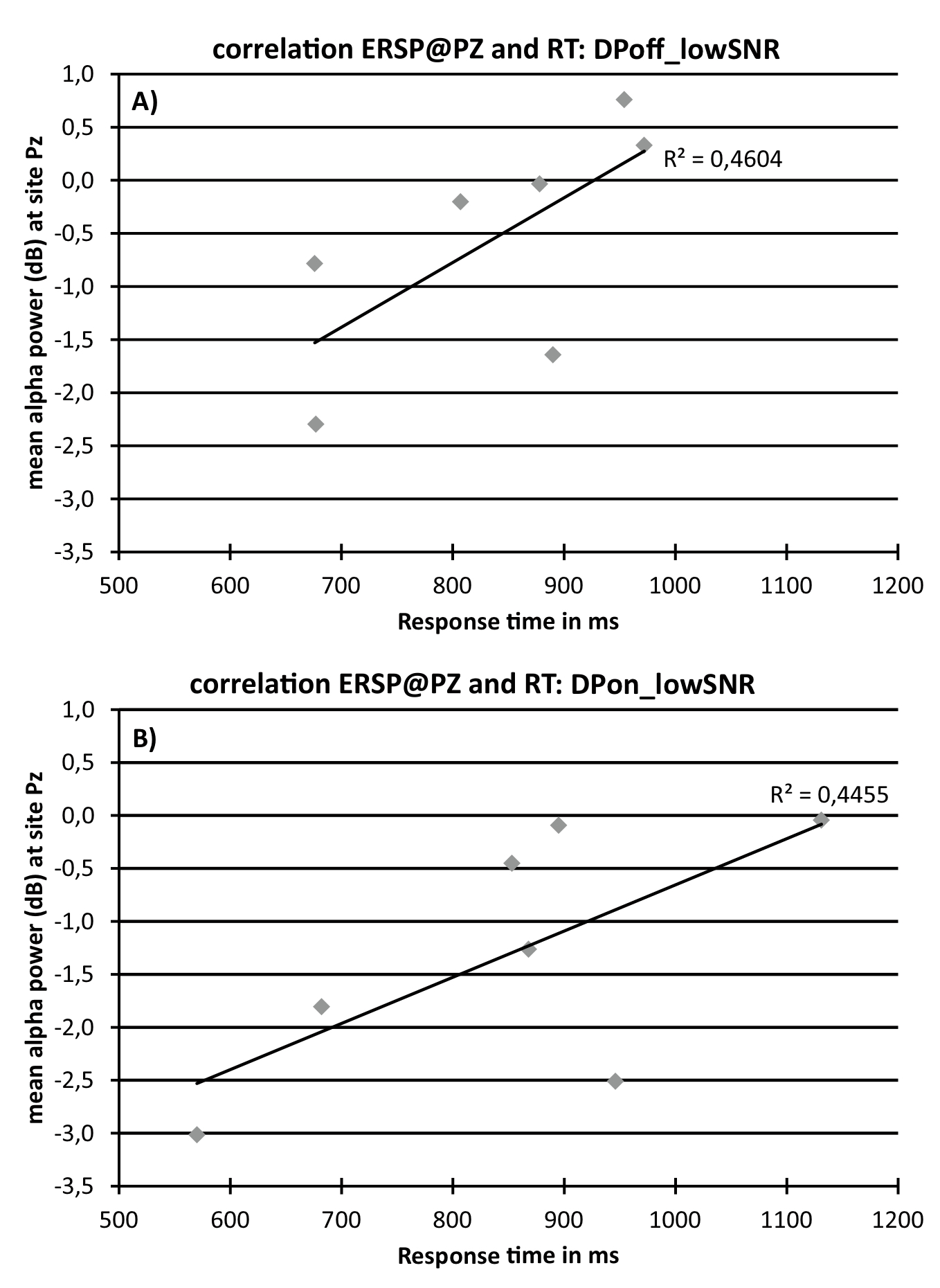

To further explore a potential relationship between response time and ERSP data, correlational analyses were conducted. Figure 5 shows positive, though not statistically significant, correlations between alpha power at Pz (average alpha power between 9 and 12 Hz in the time window 300 to 600 ms after stimulus onset; see Figure 4a) and response time for the conditions DPoff_LoSNR (Figure 5a; r = 0.68, R² = .46, p = .09) and DPon_LoSNR (Figure 5b; r = 0.67, R² = .45, p = .10).

Figure 5. Scatterplots showing the correlation between response times and alpha-band ERSP data at electrode Pz (average alpha power between 9 and 12 Hz in the time window 300 to 600 ms after stimulus onset) for conditions DPoff_LoSNR (A; top) and DPon_LoSNR (B; bottom).
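The two steps behind these correlations, extracting one alpha value per participant from an ERSP map and testing a Pearson correlation with seven data points, can be sketched as follows. The window (9 to 12 Hz, 300 to 600 ms) is taken from the text; the function names and array layout (frequencies × time points) are assumptions. In practice, `scipy.stats.pearsonr(alpha_values, response_times)` returns r and its two-sided p-value directly; the helper below only makes the underlying t-test explicit.

```python
import numpy as np
from scipy.stats import t as t_dist


def window_mean(ersp, freqs, times, f_range=(9.0, 12.0), t_range=(0.3, 0.6)):
    """Average an ERSP map (n_freqs, n_times) over a frequency band and time window."""
    fmask = (freqs >= f_range[0]) & (freqs <= f_range[1])
    tmask = (times >= t_range[0]) & (times <= t_range[1])
    return ersp[np.ix_(fmask, tmask)].mean()


def pearson_p(r, n):
    """Two-sided p-value for a Pearson correlation r from n pairs (df = n - 2)."""
    t = r * np.sqrt((n - 2) / (1.0 - r ** 2))
    return 2.0 * t_dist.sf(abs(t), n - 2)
```

With n = 7, r = 0.68 gives p ≈ .09 and r = 0.67 gives p ≈ .10, matching the reported values and showing how little statistical power seven participants provide for correlations of this size.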

Discussion

In this study participants performed a 2-back WM task in a noisy environment while EEG responses were recorded to investigate the effects of the binaural DuoPhone® hearing aid function on neurophysiological markers indicative of listening effort.

Based on the limited resource hypothesis (Kahneman, 1973; Rabbitt, 1968), we hypothesized that changes in auditory processing affect the resources available for cognitive WM processes. By streaming the telephone signal binaurally to both ears, DuoPhone® is intended to improve speech intelligibility and hence reduce effort when listening on the telephone in background noise. That is, fewer neural resources have to be invested in suppressing irrelevant background noise, and the spared resources become available for higher-level operations (e.g., Strauß et al., 2014). We hypothesized that this reduction in listening effort would be visible in both behavioral and electrophysiological data.

Analyses of the behavioral data revealed no benefit when DuoPhone® was activated (DPon_LoSNR vs. DPoff_LoSNR) or when the SNR was raised by 3 dB (DPoff_HiSNR vs. DPoff_LoSNR). One potential explanation is that participants compensated in the most difficult listening condition (DPoff_LoSNR) by investing more (neural) resources (i.e., effort) to perform to the best of their abilities.

Support for this compensation account comes from the ERSP data of the alpha frequency band (Figure 4), which has been proposed as an index of auditory inhibition of irrelevant information and of listening effort (Strauß et al., 2014). Without DuoPhone®, alpha power was relatively more reduced at high SNR (DPoff_HiSNR) than at low SNR (DPoff_LoSNR). Likewise, at low SNR, alpha power was relatively more reduced when DuoPhone® was activated (DPon_LoSNR) than when it was deactivated (DPoff_LoSNR). The two effects (DPon_LoSNR and DPoff_HiSNR) were not identical but quite similar: the differences were detected at different but adjacent electrode sites (P4 is adjacent to Pz) and in slightly shifted time windows. There is evidence in the literature linking increased alpha power to increased cognitive and listening effort (e.g., Klimesch et al., 2007; Obleser et al., 2012; Strauß et al., 2014). Regarding the ERSP data in the current study, it should be noted that alpha power relative to baseline was reduced even when DuoPhone® was turned off (DPoff_LoSNR); however, the reduction was significantly more pronounced when DuoPhone® was activated (DPon_LoSNR) (see Figure 4). In other words, the relatively larger reduction in alpha power with DuoPhone®, i.e., the increase in event-related desynchronization, suggests that the task required less effort than when DuoPhone® was deactivated (i.e., without binaural streaming). Given that the cognitive load posed by the 2-back WM task was constant across conditions, the differences in the ERSP data are likely due to differences in auditory processing. Given the relatively late latency of the observed differences (from about 380 ms after stimulus onset), the effects may be linked to top-down processes.
A study by Obleser and colleagues (2012) showed similar alpha responses to i) modifications in WM load and ii) changes in SNR. More specifically, both an increase in WM load and an increase in acoustic degradation led to relative increases in alpha power. These findings support the suggestion that the observed reductions in alpha power during DuoPhone® use indicate a reduction in inhibitory effort, which constitutes a central component of listening effort. It should also be noted that the alpha effects were most prominent at parietal sites and weaker over temporal electrodes. This might be due to the choice of masking noise: the ISTS is a speech-like noise that produces both informational and energetic masking, which could explain a shift towards more parietal sites (Strauß et al., 2014).

Although no main effects were detected in the behavioral data, correlational analyses revealed an interesting association between ERSP data and response times. Although not statistically significant, the correlations are noteworthy: higher processing or listening effort, as reflected by higher alpha power, tended to go along with longer response times (Figure 5). This is consistent with alpha power as a neurophysiological index of processes related to listening effort. However, the sample size is small, and the reported correlations should be interpreted with care. Future studies with larger samples are needed to follow up on this.

The N1 ERP data (Figure 3) provide neurophysiological support for improved listening conditions i) when DuoPhone® was activated (DPon_LoSNR) and ii) when the SNR was improved by 3 dB (DPoff_HiSNR). N1 amplitude was significantly larger when DuoPhone® was activated (DPon_LoSNR) than when it was deactivated (DPoff_LoSNR). This effect could be due to improved signal quality. This interpretation is supported by a similar result when comparing N1 responses to stimuli presented at an improved SNR (+3 dB; DPoff_HiSNR) with those in a condition with more background noise (DPoff_LoSNR). Previous research has shown that more intense auditory stimuli elicit larger N1 amplitudes (Näätänen and Picton, 1987). That is, the effect of DuoPhone® on N1 amplitude appears comparable to improving the SNR by 3 dB, which is also consistent with the absence of a significant N1 difference between DPon_LoSNR and DPoff_HiSNR (Figure 3c).

Previous research has shown that improving auditory processing also modulates WM at the electrophysiological level, as reflected in changes in P3 amplitude (Frtusova et al., 2013). No effect on P3 amplitude was found in the current study. The small number of participants and the large variance may partially explain this lack of significant effects. Future studies with larger samples could shed more light on this.

Importantly, the ERSP results discussed above suggest a relationship between auditory processing and cognition. The improved auditory signal modulates early auditory ERP responses, which in turn could lead to a reduction in alpha power, which has been postulated to indicate a reduction in the resources invested in inhibiting irrelevant information. This decrease in resources spent on noise inhibition is closely linked to the concept of listening effort, as it indicates a reduction in the resources allocated to overcoming an (acoustic) obstacle in order to understand speech (Pichora-Fuller et al., 2016). Although the results of this study support alpha power as a marker of listening effort, more research is required to better understand the link between alpha modulations and listening effort. As outlined in the Framework for Understanding Effortful Listening (FUEL), motivation to understand speech in noise is likely a crucial factor that influences the degree of invested effort, and future studies should take it into account (Pichora-Fuller et al., 2016).

The current results must be considered with caution due to the small sample size (n = 7), but they add to a growing body of literature supporting EEG as a tool to assess listening effort. Most importantly, neurophysiological EEG analyses can reveal compensatory mechanisms in the absence of behavioral differences. Increased listening effort might mask behavioral performance effects, but it can be uncovered by analyzing modulations in alpha power (Strauß et al., 2014). In scenarios with favorable, above-threshold SNRs where speech understanding rates are high (in the current study, the expected identification rate was about 90%), behavioral effects are likely to be less pronounced than at SNRs around threshold.

The results presented here have important implications for hearing aid fittings and for evaluations of hearing aid functionalities. However, the implications go beyond hearing aids. A recent study evaluated hearing support in telephones for employees in service and support centers (Winneke et al., 2016). The results of that study align with those presented here: in conditions where hearing support was provided, alpha-band power was reduced relative to conditions without hearing support. This indicates that measuring EEG brain activity also has important implications for the field of ergonomics. Future research should continue to use brain signals to evaluate listening and cognitive effort online, with the aim of developing supportive brain-computer interfaces that could possibly be implemented in hearing aids (Parasuraman and Wilson, 2008).

Acknowledgements

The authors would like to thank Jana Besser for constructive feedback and support during manuscript preparation and Kevin Pollak and Matthias Vormann from Hörzentrum Oldenburg GmbH for their support in conducting the experiments.

Conflict of Interest Declaration

The authors declare that there is no conflict of interest. However, please note that the hearing aid algorithm tested in the current study is integrated in commercially available hearing aids. The study received some financial support from Sonova AG, and two of the co-authors are employed by Phonak AG (ML) and Sonova AG (PD).

References

Avan, P., Giraudet, F., and Büki, B. (2015). Importance of binaural hearing. Audiol Neurootol, 20(Suppl. 1), 3-6.

Bernarding, C., Strauss, D. J., Hannemann, R., Seidler, H., and Corona-Strauss, F. I. (2014). Objective assessment of listening effort in the oscillatory EEG: Comparison of different hearing aid configurations. In Conf Proc IEEE Eng Med Biol Soc, 36th Annual International Conference of the IEEE, pp. 2653-2656.

Committee on Hearing and Bioacoustics. (1988). Speech understanding and aging. J Acoust Soc Am, 83, 859–895.

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics. J Neurosci Methods, 134, 9-21.

Edwards, B. (2007). The future of hearing aid technology. Trends Amplif, 11, 31–45.

Eggermont, J., and Ponton, C. (2002). The neurophysiology of auditory perception: From single units to evoked potentials. Audiol Neurootol, 7, 71–99.

Foxe, J. J., and Snyder, A. C. (2011). The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Front Psychol, 2, 154.

Fraser, S., Gagné, J. P., Alepins, M., and Dubois, P. (2010). Evaluating the effort expended to understand speech in noise using a dual-task paradigm: The effects of providing visual speech cues. J Speech Lang Hear Res, 53(1), 18-33.

Frtusova, J. B., Winneke, A. H., and Phillips, N. A. (2013). ERP evidence that auditory-visual speech facilitates working memory in younger and older adults. Psychol Aging, 28(2), 481-494.

Gevins, A., and Cutillo, B. (1993). Spatiotemporal dynamics of component processes in human working memory. Electroencephalogr Clin Neurophysiol, 87(3), 128-143.

Holube, I., Fredelake, S., Vlaming, M., and Kollmeier, B. (2010). Development and analysis of an international speech test signal (ISTS). Int J Audiol, 49, 891-903.

Jasper, H. H. (1958). The ten-twenty electrode system of the International Federation. Electroencephalogr Clin Neurophysiol, 10, 371–375.

Just, M. A., and Carpenter, P. A. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychol Rev, 99, 122–149.

Kahneman, D. (1973). Attention and Effort. Englewood Cliffs, NJ: Prentice-Hall.

Klimesch, W., Sauseng, P., and Hanslmayr, S. (2007). EEG alpha oscillations: the inhibition-timing hypothesis. Brain Res Rev, 53, 63–88.

Klink, K. B., Schulte, M., and Meis, M. (2012). Measuring listening effort in the field of audiology – a literature review of methods, part 1. Audiological Acoustics, 51(2), 60-67.

Kochkin, S. (2010). MarkeTrak VIII: Consumer satisfaction with hearing aids is slowly increasing. The Hearing Journal, 63, 19-27.

Latzel, M., Wolfe, J., Appleton-Huber, J., and Anderson, S. (2014). Benefit of telephone solutions for children and adults. Poster presented at the 41st annual scientific and technology conference of the American Auditory Society, March 6-8, Scottsdale, AZ.

McCoy, S. L., Tun, P. A., Cox, L. C., Colangelo, M., Stewart, R. A., and Wingfield, A. (2005). Hearing loss and perceptual effort: Downstream effects on older adults’ memory for speech. Q J Exp Psychol A, 58(1), 22-33.

McGarrigle, R., Munro, K. J., Dawes, P., Stewart, A. J., Moore, D. R., Barry, J. G., and Amitay, S. (2014). Listening effort and fatigue: what exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group 'white paper'. Int J Audiol, 53(7), 433-440.

Näätänen, R., and Picton, T. (1987). The N1 wave of the human electric and magnetic response to sound: A review and an analysis of component structure. Psychophysiology, 24, 375–425.

Nyffeler, M. (2010). DuoPhone - Easier telephone conversations with both ears. Phonak Field Study News.

Obleser, J., Wöstmann, M., Hellbernd, N., Wilsch, A., and Maess, B. (2012). Adverse listening conditions and memory load drive a common alpha oscillatory network. J Neurosci, 32(36), 12376-12383.

Parasuraman, R., and Wilson, G. (2008). Putting the Brain to Work: Neuroergonomics Past, Present, and Future. Hum Factors, 50(3), 468–474.

PhonerLite [Computer software]. (2014). Retrieved from https://www.phonerlite.de

Pichora-Fuller, M. K., and Singh, G. (2006). Effects of age on auditory and cognitive processing: implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif, 10(1), 29-59.

Pichora-Fuller, M. K., Kramer, S. E., Eckert, M. A., Edwards, B., Hornsby, B. W., Humes, L. E., ... & Naylor, G. (2016). Hearing impairment and cognitive energy: the Framework for Understanding Effortful Listening (FUEL). Ear Hear, 37, 5S-27S.

Polich, J. (2007). Updating P300: An integrative theory of P3a and P3b. Clin Neurophysiol, 118, 2128–2148.

Rabbitt, P. M. (1968). Channel-capacity, intelligibility and immediate memory. Q J Exp Psychol, 20, 241–248.

Renard, Y., Lotte, F., Gibert, G., Congedo, M., Maby, E., Delannoy, V., Bertrand, O., and Lécuyer, A. (2010). OpenViBE: an open-source software platform to design, test, and use brain-computer interfaces in real and virtual environments. Presence-Teleop Virt, 19(1), 35-53.

Schneider, B. A., and Pichora-Fuller, M. K. (2000). Implications of perceptual deterioration for cognitive aging research. In Craik, F. I. M., and Salthouse, T. A. (Eds.), Handbook of aging and cognition (2nd ed.). Mahwah, NJ: Lawrence Erlbaum, pp. 155–219.

Segalowitz, S. J., Wintink, A. J., and Cudmore, L. J. (2001). P3 topographical change with task familiarization and task complexity. Cogn Brain Res, 12, 451–457.

Strauss, D. J., Corona-Strauss, F. I., Trenado, C., Bernarding, C., Reith, W., Latzel, M., and Froehlich, M. (2010). Electrophysiological correlates of listening effort: neurodynamical modeling and measurement. Cogn Neurodynamics, 4(2), 119-131.

Strauß, A., Wöstmann, M., and Obleser, J. (2014). Cortical alpha oscillations as a tool for auditory selective inhibition. Front Hum Neurosci, 8 (350), 1-7.

Winneke, A. H., and Phillips, N. A. (2011). Does audiovisual speech offer a fountain of youth for old ears? An event-related brain potential study of age differences in audiovisual speech perception. Psychol Aging, 26(2), 427-438.

Winneke, A., Meis, M., Wellmann, J., Bruns, T., Rahner, S., Rennies, J., Wallhoff, F., & Goetze, S. (2016). Neuroergonomic assessment of listening effort in older call center employees. In Zukunft Lebensräume Kongress, Frankfurt, April 20-21. Berlin: VDE Verlag GmbH, pp. 327-332.

Wolfe, J., Schafer, E., Mills, E., John, A., Hudson, M., and Anderson, S. (2015). Evaluation of the benefits of binaural hearing on the telephone for children with hearing loss. J Am Acad Audiol, 26(1), 93-100.

Yanz, J. L. (1984). The application of the theory of signal detection to the assessment of speech perception. Ear Hear, 5(2), 64–71.

Zekveld, A. A., Kramer, S. E., and Festen, J. M. (2010). Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear Hear, 31(4), 480-490.

Zokoll, M. A., Wagener, K. C., Brand, T., Buschermöhle, M., and Kollmeier, B. (2012). Internationally comparable screening tests for listening in noise in several European languages: The German digit triplet test as an optimization prototype. Int J Audiol, 51(9), 697-707.

Citation

Winneke, A., De Vos, M., Wagener, K., Derleth, P., Latzel, M., Appell, J., & Wallhoff, F. (2018). Listening effort and EEG as measures of performance of modern hearing aid algorithms. AudiologyOnline, Article 24198. Retrieved from https://www.audiologyonline.com