Learning Outcomes

After this course learners will be able to:

- Describe the methods and purposes of different frequency-lowering technologies in hearing aids and evaluate their impact on speech signal processing and speech perception.

- Select and configure frequency-lowering hearing aids for different patients, considering individual audiometric profiles and technology differences, and justify their choices.

- Evaluate the outcomes associated with frequency-lowering amplification, including the limitations and potential side effects of frequency lowering, the need for individualized fitting, and the challenges and barriers in conducting research to inform evidence-based practices.

Introduction

This article delves into the advancements and methodologies in frequency-lowering technologies for hearing aids. The focus is on the technical aspects and real-world implications of these technologies. By examining different approaches and their outcomes, this article aims to provide valuable insights for audiologists and researchers, aiding in optimizing user outcomes.

Part I of this article gives a big-picture view of the essential issues surrounding frequency lowering. Part II reviews the basic steps for fitting frequency-lowering amplification. Part III provides an overview of how each manufacturer approaches frequency lowering. The remaining parts cover each manufacturer’s frequency-lowering method in detail.

Part I: Perspectives on Frequency-Lowering Amplification

Frequency Lowering is not just a ‘Feature’

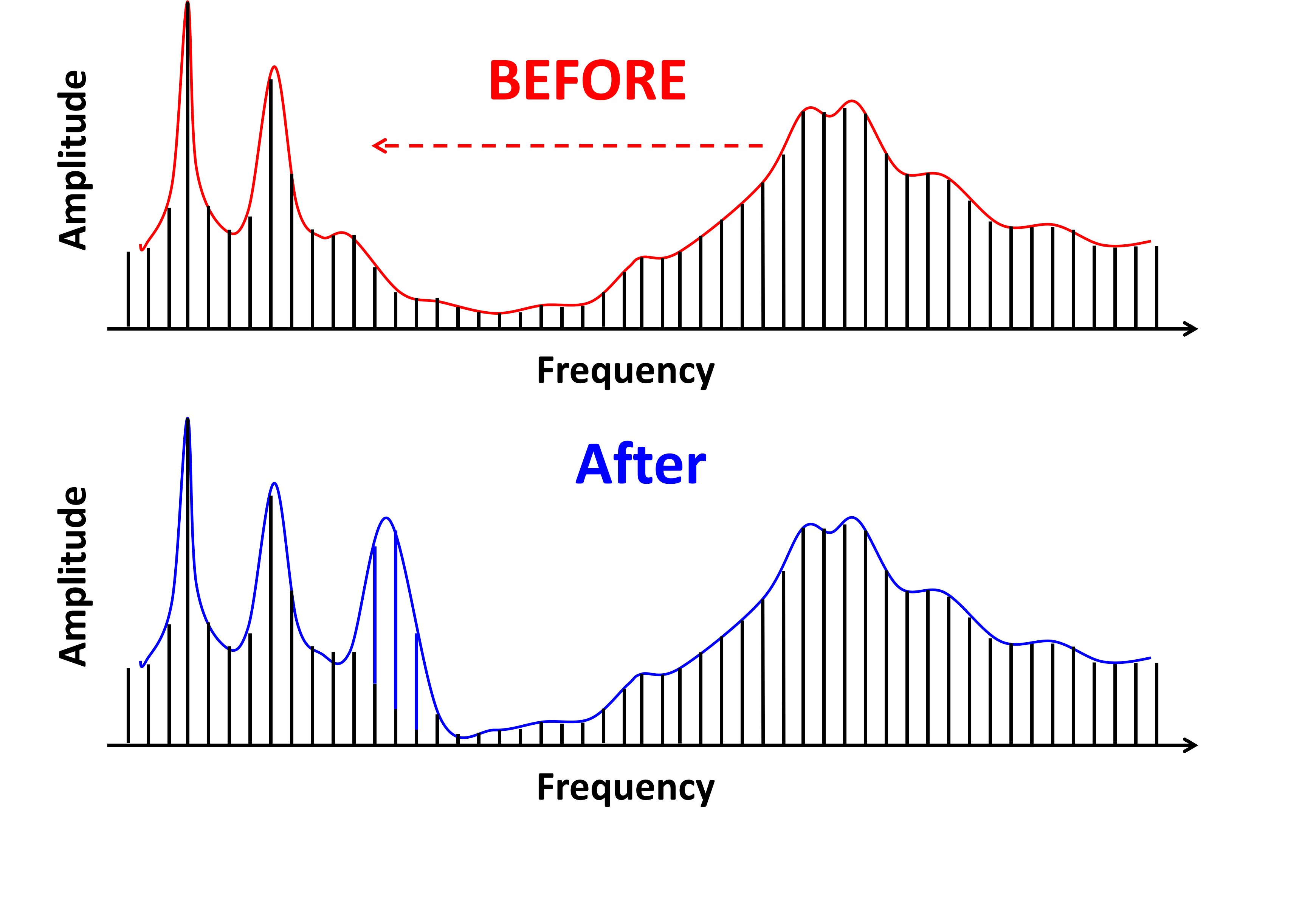

Frequency lowering is not just another hearing aid feature like those that provide audibility or noise reduction, or those that accentuate certain static signal features. Frequency lowering is different because it inserts energy into the speech signal at frequencies and times where there was none before, or even where there is already energy. In this regard, frequency lowering constructively distorts the speech signal in the hope of providing a net benefit. Frequency lowering is ubiquitous among the major hearing aid manufacturers, yet it is also the least understood topic among clinicians and researchers.

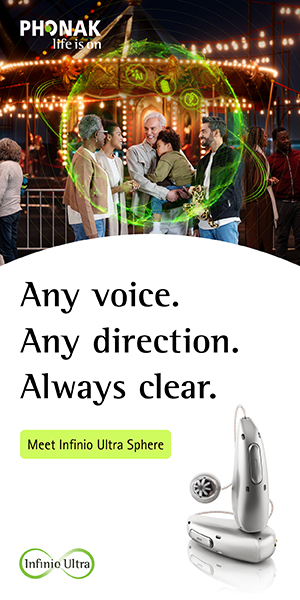

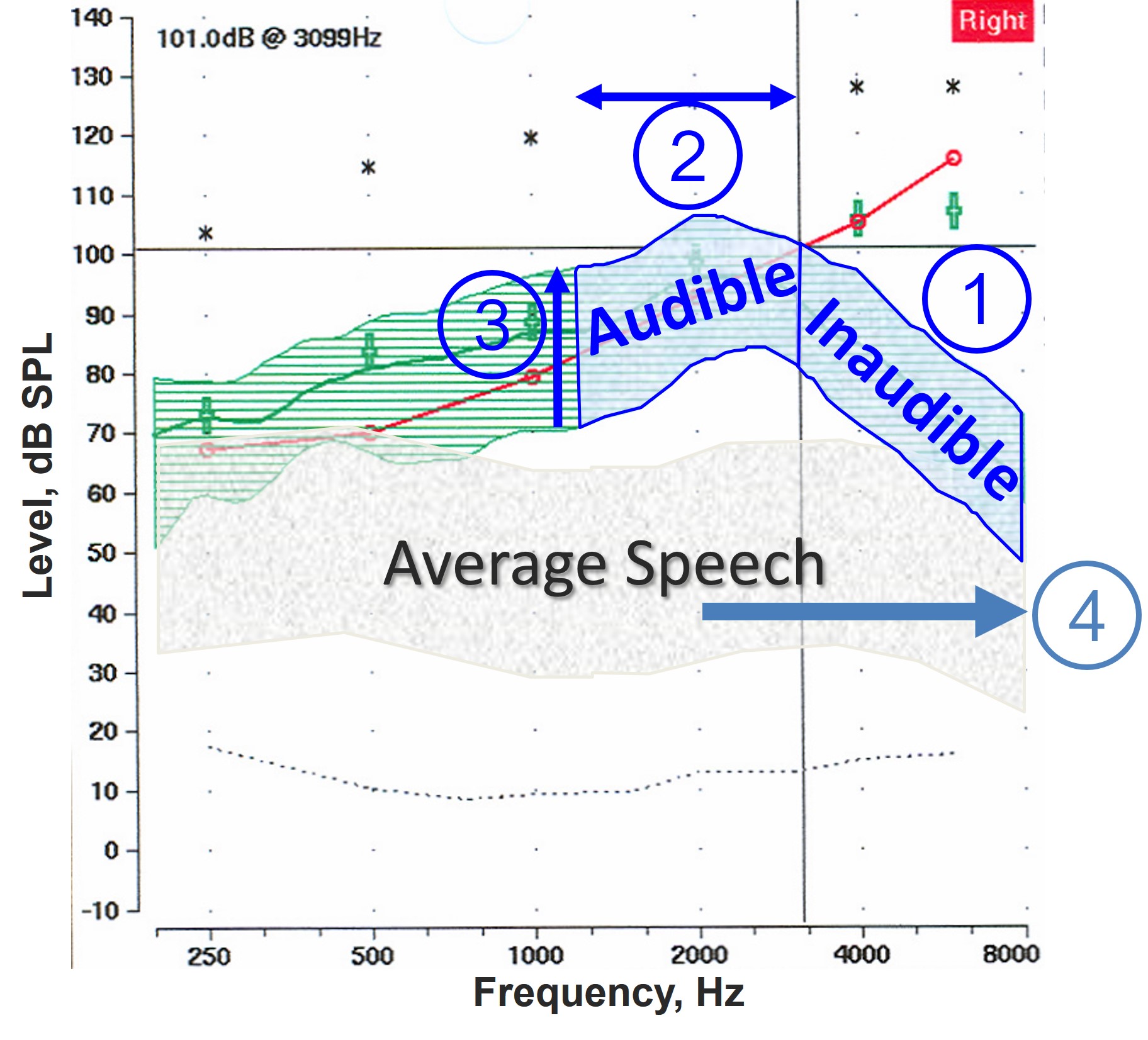

Figure 1. Probe microphone output illustrating the limitations of conventional hearing aid amplification with average speech spectrum (gray shaded area) and audiometric thresholds (red line) in dB SPL at the eardrum. The unaided speech is barely audible (below the red line). Despite amplification (green area), frequencies above 3100 Hz remain inaudible, highlighting the necessity for frequency-lowering techniques to re-code high-frequency sounds into audible lower frequencies.

Figure 1 is the output from a probe microphone and shows the fundamental problem we face with typical, conventional hearing aid amplification and the need for frequency lowering. Shown in the gray shaded area is the average speech spectrum. Everything is expressed in dB SPL at the eardrum, including the audiometric thresholds shown by the red line. It can be seen that without amplification, this particular hearing aid user is not receiving much audibility for unaided speech. The green area indicates the amplified speech spectrum and shows that despite our best efforts for this particular hearing aid user, we cannot achieve audibility much above 3100 Hz. This is where frequency lowering comes in. The idea is to take some or all of this information in the high frequencies, which is inaudible, despite our best attempts with conventional amplification, and somehow re-code it in the frequency domain. Specifically, we will take some of this information and re-code it in the lower frequencies where we can achieve audibility with our fitting.

Frequency-Lowering Information

So you might be wondering, “What is the information in the frequency-lowered signal?” Most of the representations you see depict frequency lowering in the frequency domain. This makes sense because we change the frequencies going in the hearing aid before they come out. But this is not very intuitive in terms of what the information actually is. Therefore, I propose another way of thinking about the problem in the time domain.

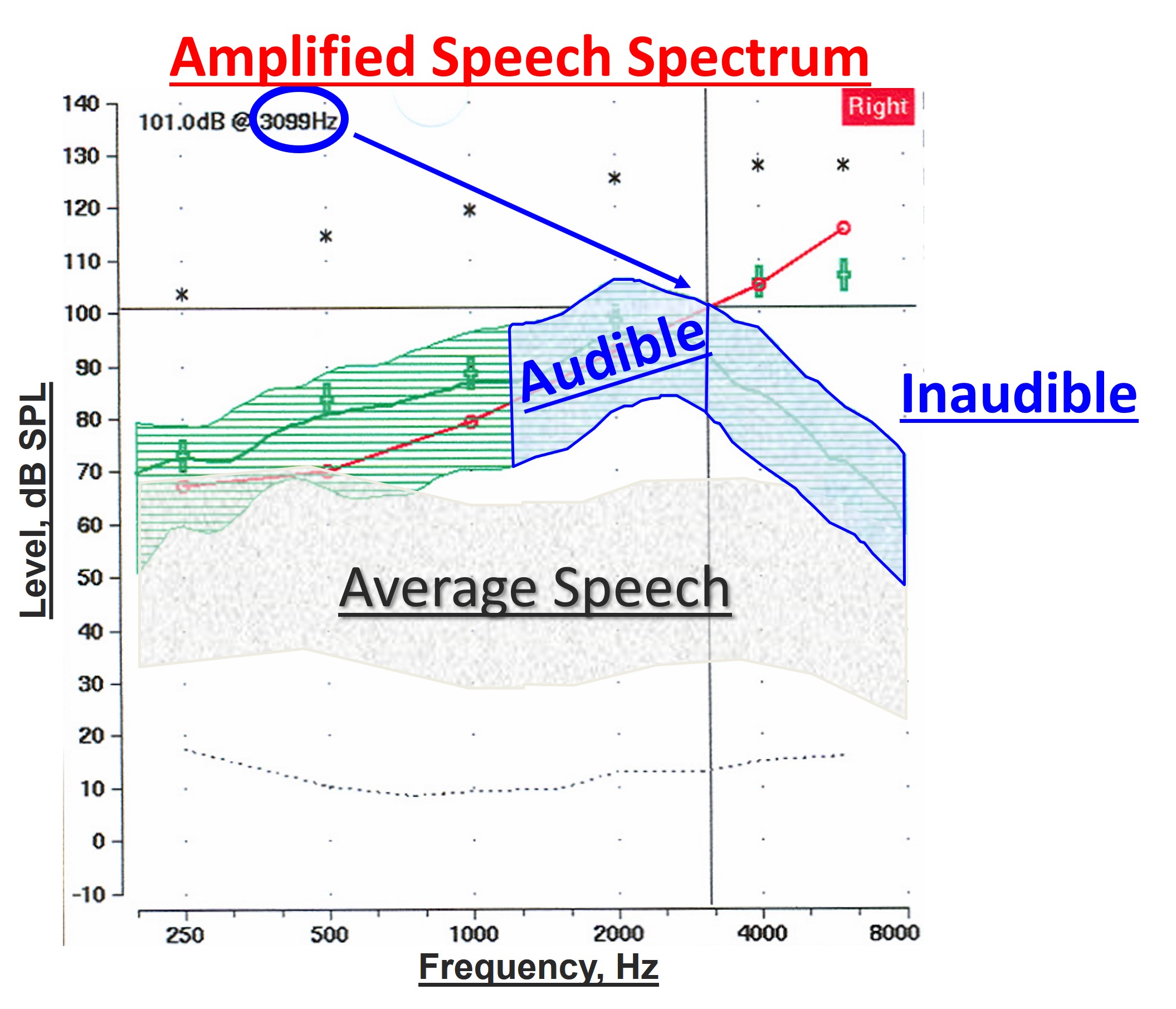

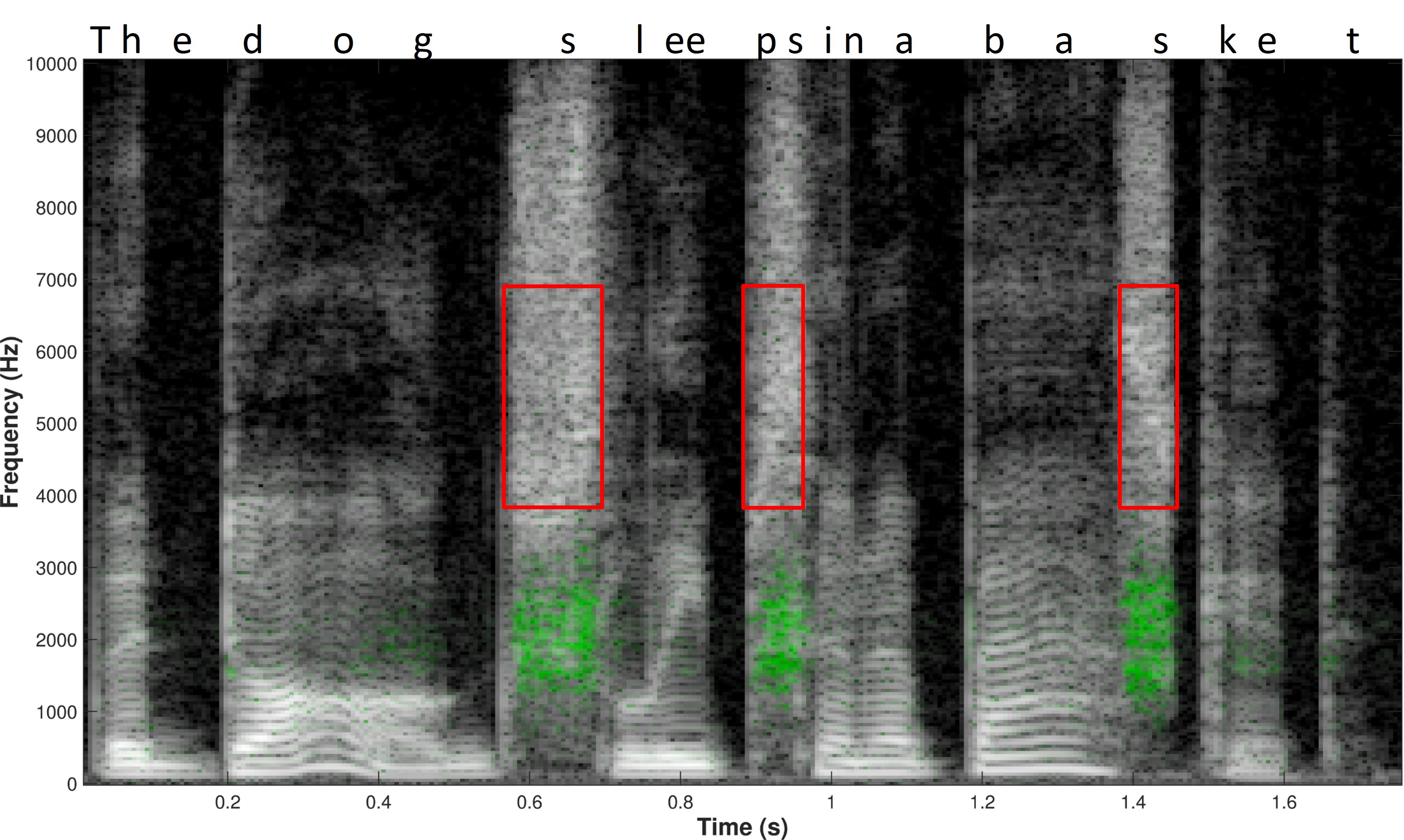

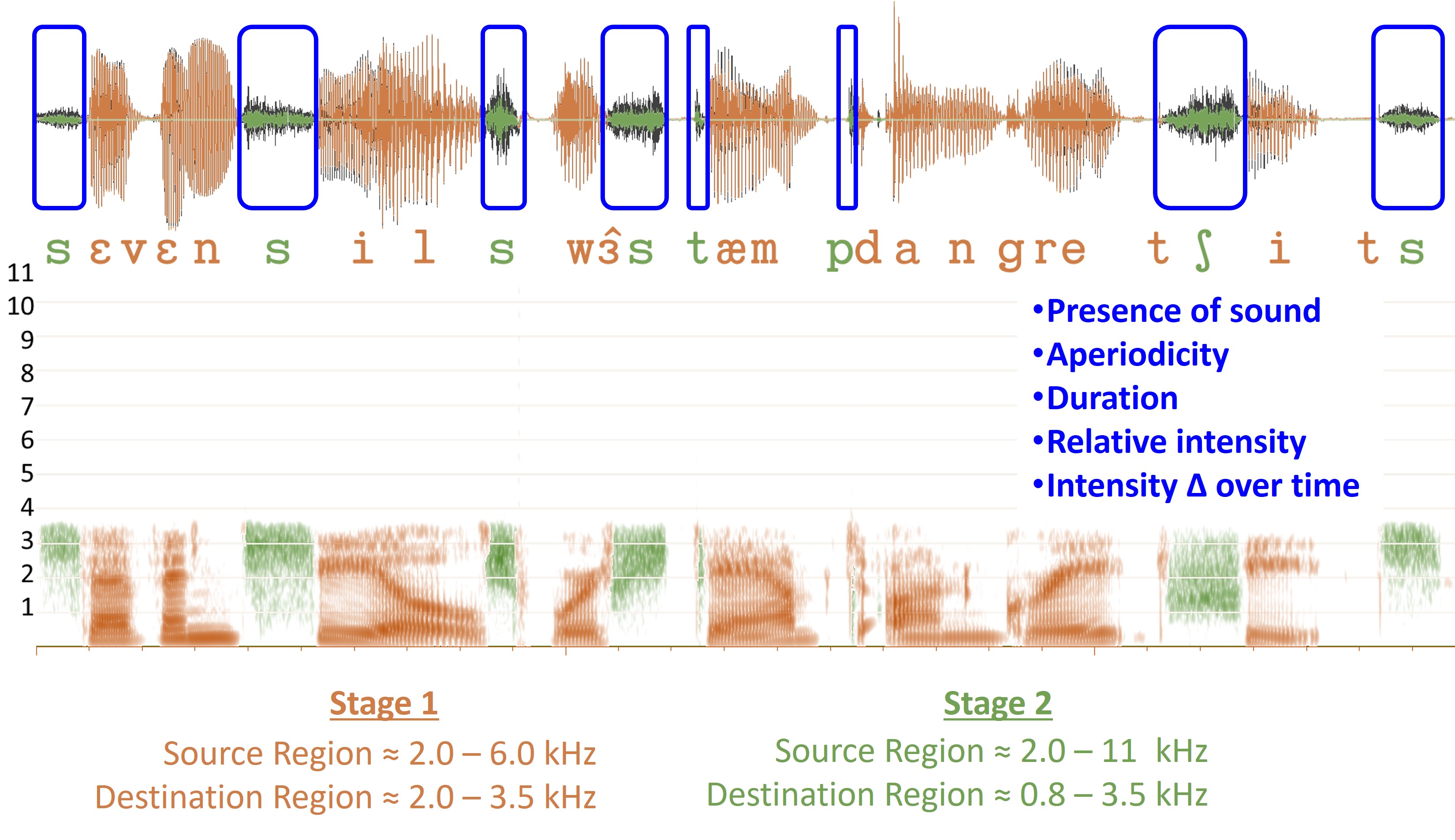

Figure 2. Comparative time waveforms of sentences with high-frequency hearing loss simulation (left) and the impact of frequency lowering (right). Inaudible phonemes for a hearing aid user are highlighted in red letters on the left. Red boxes on the right demonstrate the restoration of missing information via frequency lowering, emphasizing the enhancement in audibility and speech perception.

The left side of Figure 2 shows the time waveforms of three sentences that have been low-pass filtered to simulate high-frequency hearing loss. The letters in red are those particular phonemes that are inaudible for this particular hearing aid user. The red boxes on the right side of Figure 2 show what happens when we use frequency lowering. If you compare the left and right sides, you can see that the hearing aid user can miss a lot of information without frequency lowering. Thus, it is more apparent in the time domain that the hearing aid user now has something with frequency lowering, whereas they had nothing before.

In this respect, if we think about what information is being presented in the time domain, there is quite a lot. First, compared to when there was no frequency lowering, and therefore none of the acoustic signals shown here, the hearing aid user is now cued that something was said. That is, they have an awareness of the presence of sound. More specifically, they can tell from the aperiodicity of that sound that it is probably frication. Compared to other sounds that have been lowered, they also have information about its duration. This might be a key feature for telling sounds apart after they have been lowered. The hearing aid user also has information about the relative intensity of the original input sound compared to others after lowering. And they have information about how that intensity changes over time, that is, the temporal envelope. You should think about these things when trying to understand the information in the frequency-lowered signal. If you look at the time waveform, you can see that it is very rich in information.

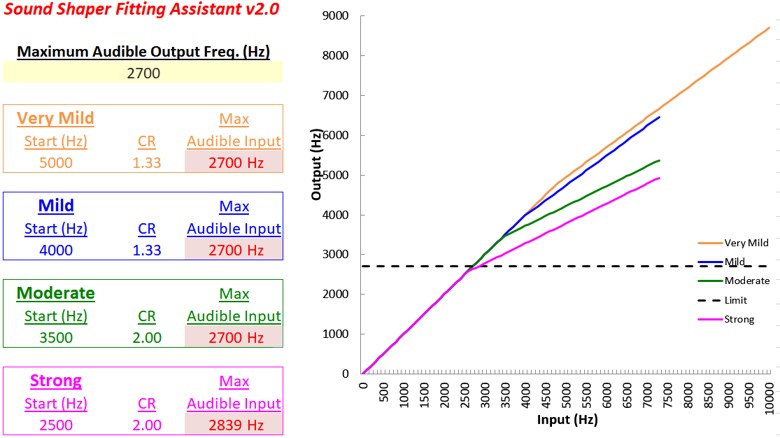

What is Lost with a Conventional Fitting?

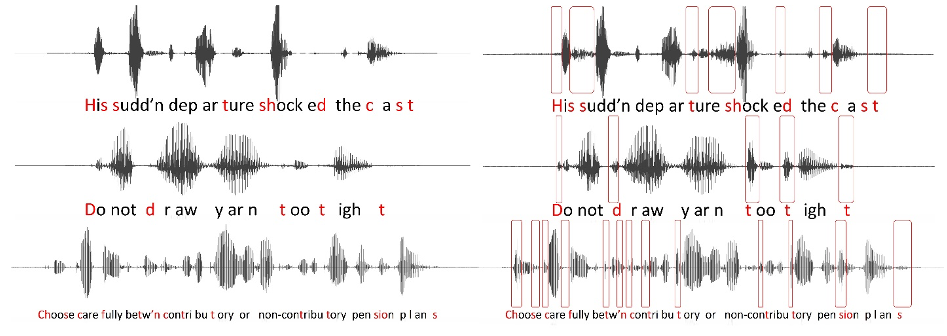

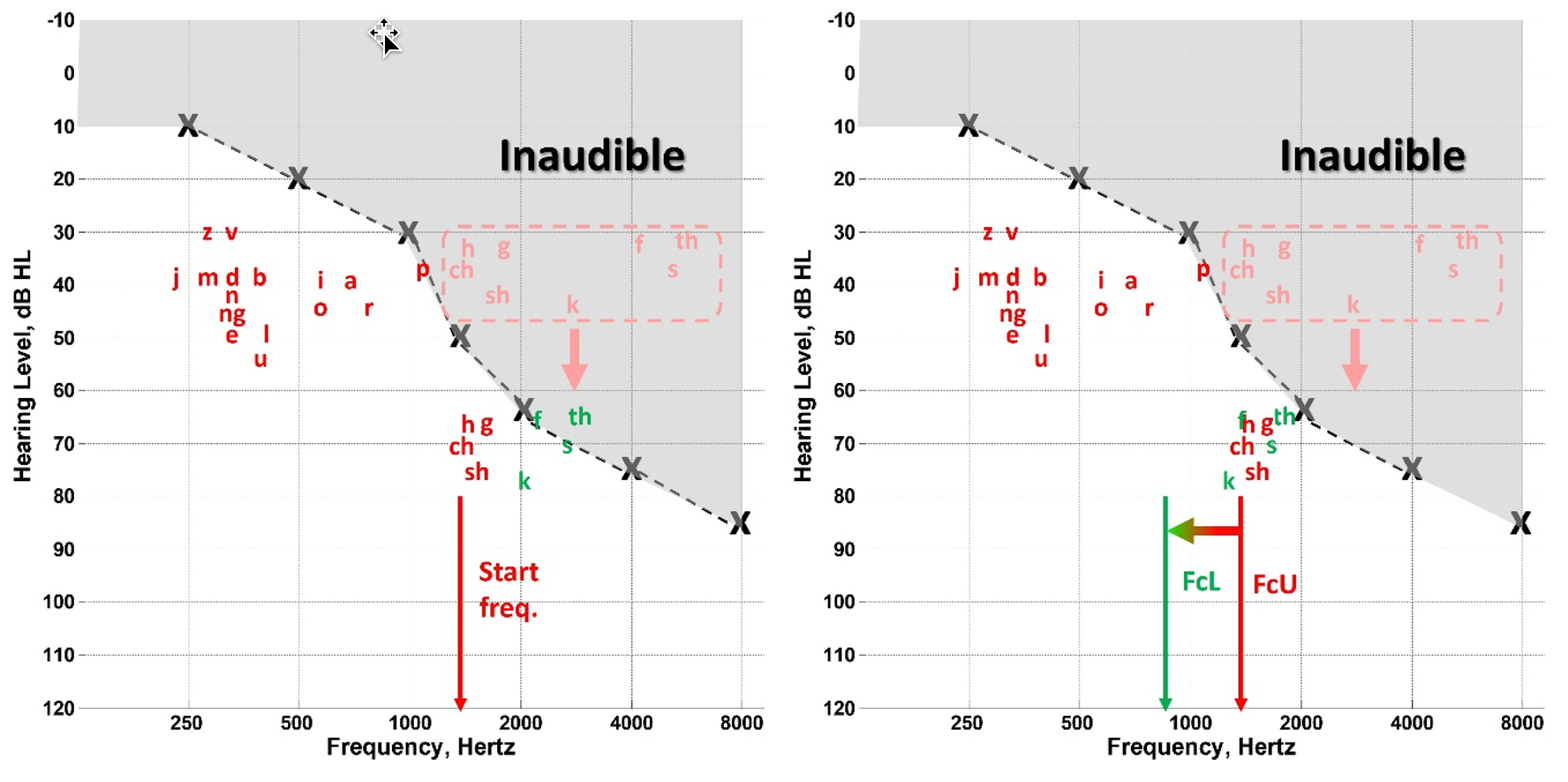

Figure 3. The ‘speech banana’ on an audiogram showing the distribution of speech sound energy by frequency and level, with low-frequency vowel energy and low-energy high-frequency fricatives (red), illustrating the difficulty in amplifying these sounds for individuals with high-frequency hearing loss (gray area). Arrows indicate the limited gain provided by conventional hearing aids for these critical sounds, especially above 5000 Hz, emphasizing the significant challenge in making high-frequency sounds audible due to minimal speech energy, significant hearing loss, and hearing aid frequency response limitations.

Figure 3 depicts the speech spectrum in a way you may be familiar with and affectionately know as the speech banana. It shows that the primary energy of importance for individual speech sounds tends to be a function of frequency and level when plotted on an audiogram. While this is a gross oversimplification of speech, you can appreciate how these sounds tend to be distributed across frequency. For example, vowels tend to have more low-frequency energy. Also, the high-frequency consonants, namely the fricatives shown in red, tend to be the lowest in energy. This means that most of the energy of importance for these speech sounds is inaudible for a typical high-frequency sloping hearing loss, as shown in the gray region. We know that the primary job of hearing aids is to provide gain to make these sounds audible. However, as you see by the arrows, conventional amplification tends to be limited for these high-frequency sounds. Because they start with the lowest energy, they need the most gain. And because of the hearing loss configuration, they have the highest fence to clear for audibility. This is compounded by the fact that most hearing aids tend to have a frequency response that rolls off above 5000 Hz. Therefore, we have a triple whammy that conspires to make the perception of high-frequency speech difficult: hearing aid gain is least where the speech energy is least and where the hearing loss is greatest.

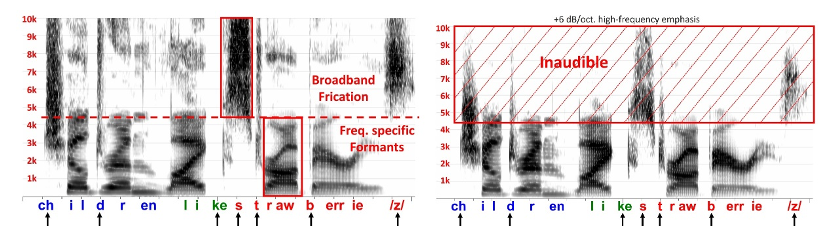

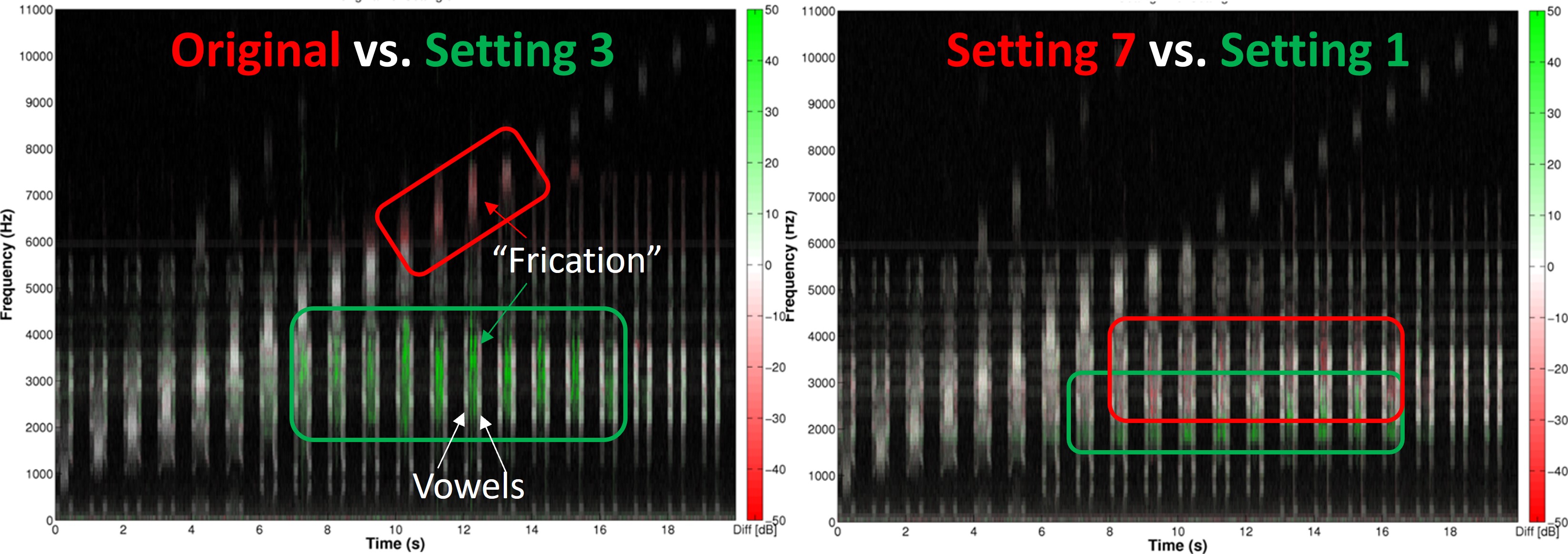

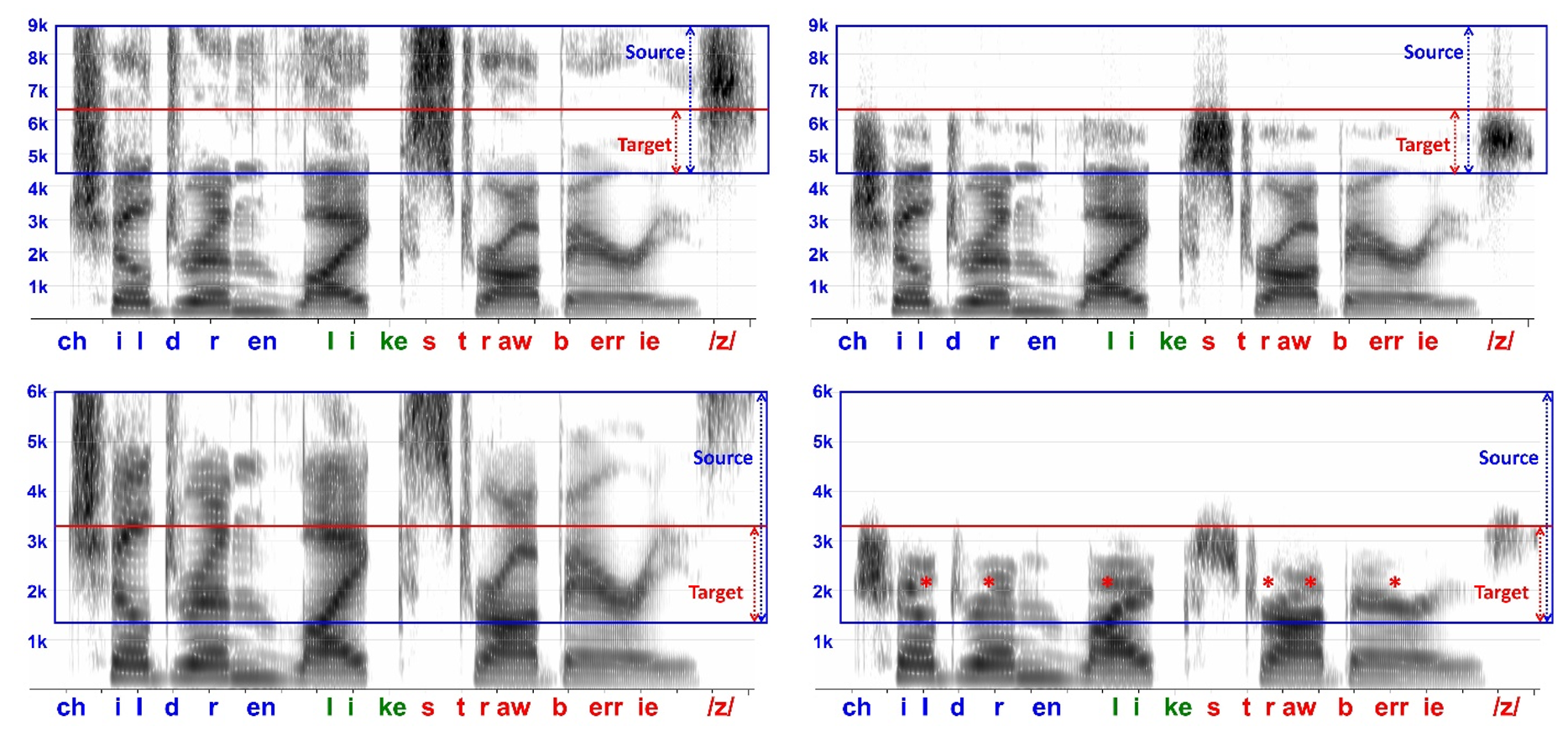

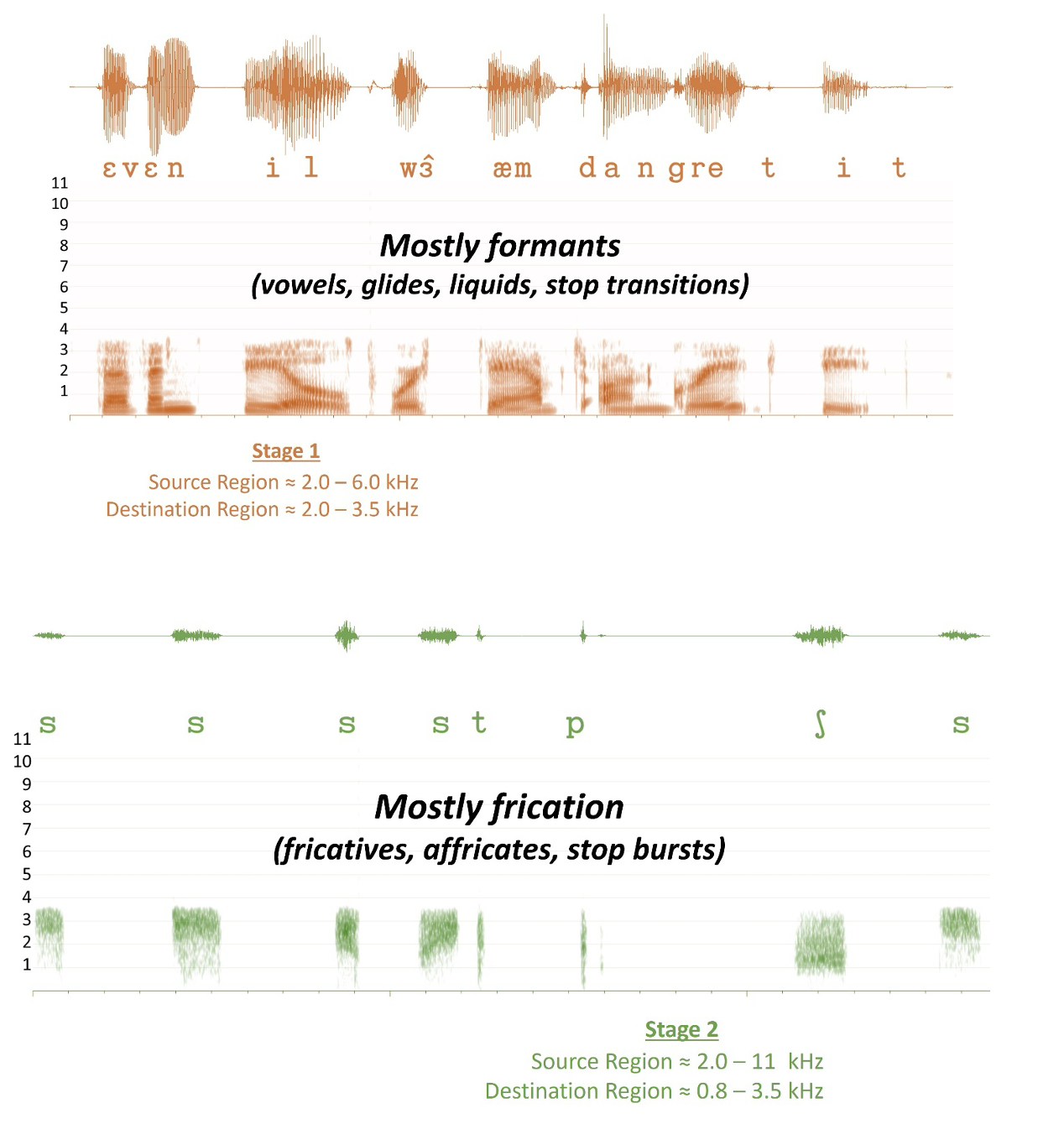

Figure 4. Left - Spectrogram of “children like strawberries” displaying the distribution of low-frequency formants (vowels, liquids, glides) versus high-frequency broadband frication (fricatives, affricates, stops). Right - The same spectrogram post typical hearing aid receiver response, highlighting the significant loss of high-frequency energy, which is not recovered even with conventional amplification, underscoring the need for frequency lowering to make fricative sounds accessible to those with high-frequency hearing loss.

The left side of Figure 4 shows a spectrogram of the sentence “children like strawberries.” Notice the overall distribution of the low-frequency sounds compared to the high-frequency sounds. In particular, notice how frequency-specific formants characterize low-frequency sounds like vowels, liquids, and glides. This differs from the speech signal in the high frequencies, which consists of broadband frication from the fricatives, affricates, and stop consonant bursts like the “k,” “t,” and “b.” The right side of Figure 4 shows the same spectrogram filtered with the typical hearing aid receiver response shown at the bottom of Figure 3. Again, notice how much energy we lose in the high frequencies. With high-frequency hearing loss, even mild to moderate hearing loss, this energy will be lost despite conventional hearing aid amplification. Therefore, the goal is to make some of this information, mainly in the fricatives, available to the user after frequency lowering.

“Just OK is not OK”

Perhaps you have seen those AT&T wireless commercials where “just OK is not OK.” Considering the potential amount of high-frequency information in the speech signal, the typical hearing aid frequency response above might be viewed as “just OK.” The thought might be that as long as you get information out to 5 or 6 kHz, you are OK because that is all you need. However, “just OK is not OK” in the context of the developmental need to access the full speech signal. A seminal study by Mary Pat Moeller and colleagues in 2007 indicated that children who were appropriately fit with conventional hearing aid amplification actually fell behind their normal-hearing peers in some of their production of the fricative and affricate sound classes. Again, “just OK is not OK.”

Clinical Barriers: Confusing Options

With the potential for frequency lowering to improve speech intelligibility and its ubiquity across major hearing aid manufacturers, you might question why it is not being utilized more in the clinic or researched more in the lab. That is, why are we still “just OK”? I think the problem lies in the fact that we have significant barriers when it comes to researching this technology and its clinical implementation. In the ideal world, evidence-based practice is where these two meet. We cannot progress until we overcome not just one of these barriers but both.

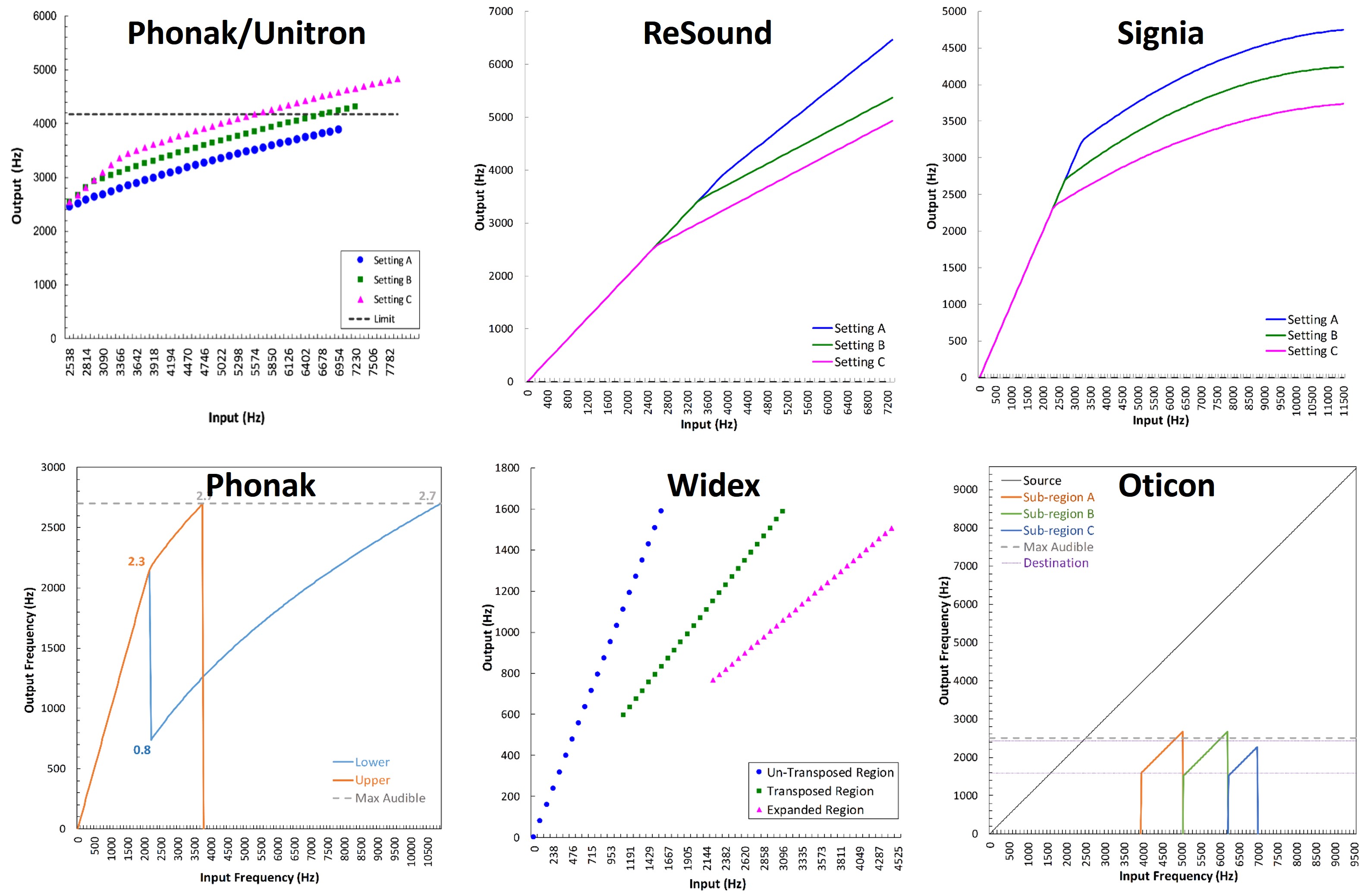

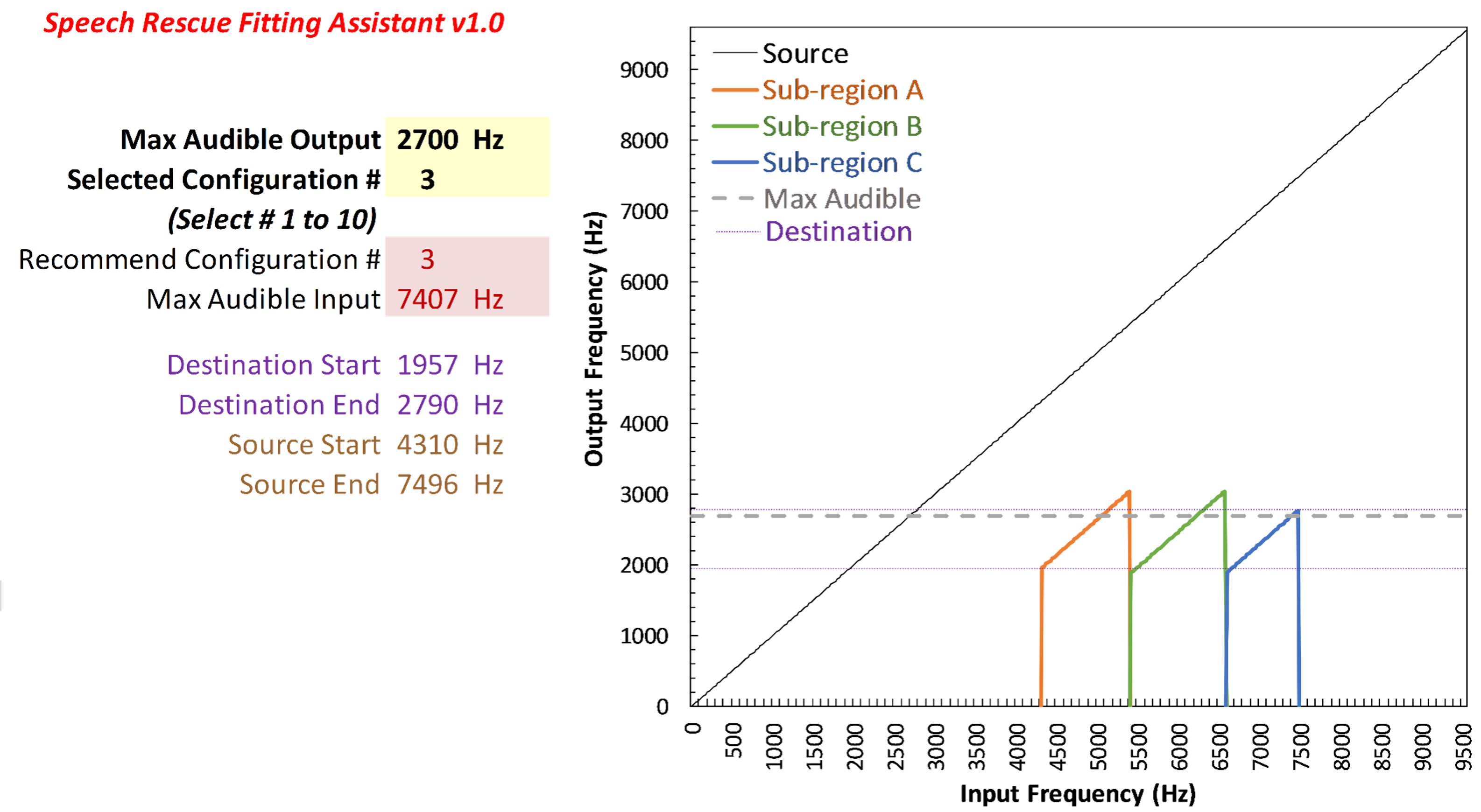

Figure 5. Panels depict examples of the frequency remapping functions used in frequency lowering by different hearing aid manufacturers, with input frequencies on the x-axis and output frequencies on the y-axis. Taken from www.tinyURL.com/FLassist, each panel represents a distinct method, illustrating both the similarities and crucial differences among manufacturers’ approaches to frequency lowering, the details of which are further examined later.

Perhaps the most significant barrier to the clinical implementation of frequency lowering is the variety of options each manufacturer offers. It is unclear what is happening underneath the hood regarding the information provided by the different manufacturers. Each panel in Figure 5 shows a different frequency-lowering method using frequency remapping functions. These functions show frequencies going into the hearing aid along the x-axis and frequencies going out along the y-axis. While some of them look similar, they differ in significant ways discussed in detail later.

When it comes to research, we have conceptual and technological barriers. Of course, we know the number one thing you need for good quality research is double-blinded randomized controlled trials. A related problem is that researchers have limited access to control the technology and a limited understanding of what the hearing aids are doing, both of which are needed to conduct the proper experiments in the first place. If you are confined to just what you can do in the programming software, and if those options vary depending upon the audiogram you enter for your hearing aid user, it is difficult to do a properly controlled study. To a considerable extent, and related to what is happening clinically, researchers do not have a solid understanding of the technology, and it is not their fault. The fact is that the manufacturers provide a limited amount of information about what is really happening. This information is critical in order to carry out the proper experiments.

Research Barriers: Conceptual & Technological

We also have limited individualization within the research realm. Again, this is related to the lack of control and understanding of the technology. What happens in many of these studies is that all of the subjects are fit with the same settings. One of the things that I want to emphasize here is that you have to individualize the settings for your hearing aid user based on the amount of audibility you have to work with. The other thing you see is that researchers simply use the manufacturer’s idea of what is right based on the audiogram, not aided audibility. As a result, the fitting is not individualized the way it needs to be. This is equivalent to giving everybody the same gain settings. If we give everybody the same gain as a function of frequency, you can expect that it will be too much for some people and too little for others. Therefore, when you look at outcomes across the board, you should not be surprised when the results are inconclusive regarding whether frequency lowering works.

Guarantees with Frequency Lowering

With all this being said, how does it translate into the outcomes expected with frequency lowering? First, let me summarize by explaining the guarantees associated with fitting frequency-lowering hearing aids. Give me a hearing aid with frequency lowering, and I can guarantee you that I can make speech understanding worse, a lot worse. Later, I will explain why and what you must be aware of when using this technology. I can almost guarantee you that I can preserve speech understanding. That is, “do no harm” if it is fit appropriately. I cannot guarantee you that I can always make speech understanding better.

Potential Side Effects

Regarding the potential side effects, we must remember that the speech code is relatively scale-invariant with respect to level: we can turn it up and down without changing the identity of sounds. The same is not true for frequency because, as we know, the identity of speech sounds is heavily dependent on frequency. While I do not necessarily view frequency lowering as a feature, no other hearing aid feature has as much potential to change the identity of individual speech sounds. Therefore, it can worsen speech understanding because the low-frequency information has to be altered somehow to accommodate the displaced high-frequency information. Said another way, the re-coded information from the high frequencies has to go to frequency regions that we would otherwise choose to fit normally with conventional amplification. Therefore, we intentionally alter the speech signal using constructive distortion, hopefully for an overall net gain. The concern is not so much the fidelity of the re-coded information, that is, how much it mimics or matches the existing high-frequency information. Instead, we must be concerned about how the newly introduced distortion and overall sound quality might work against us.

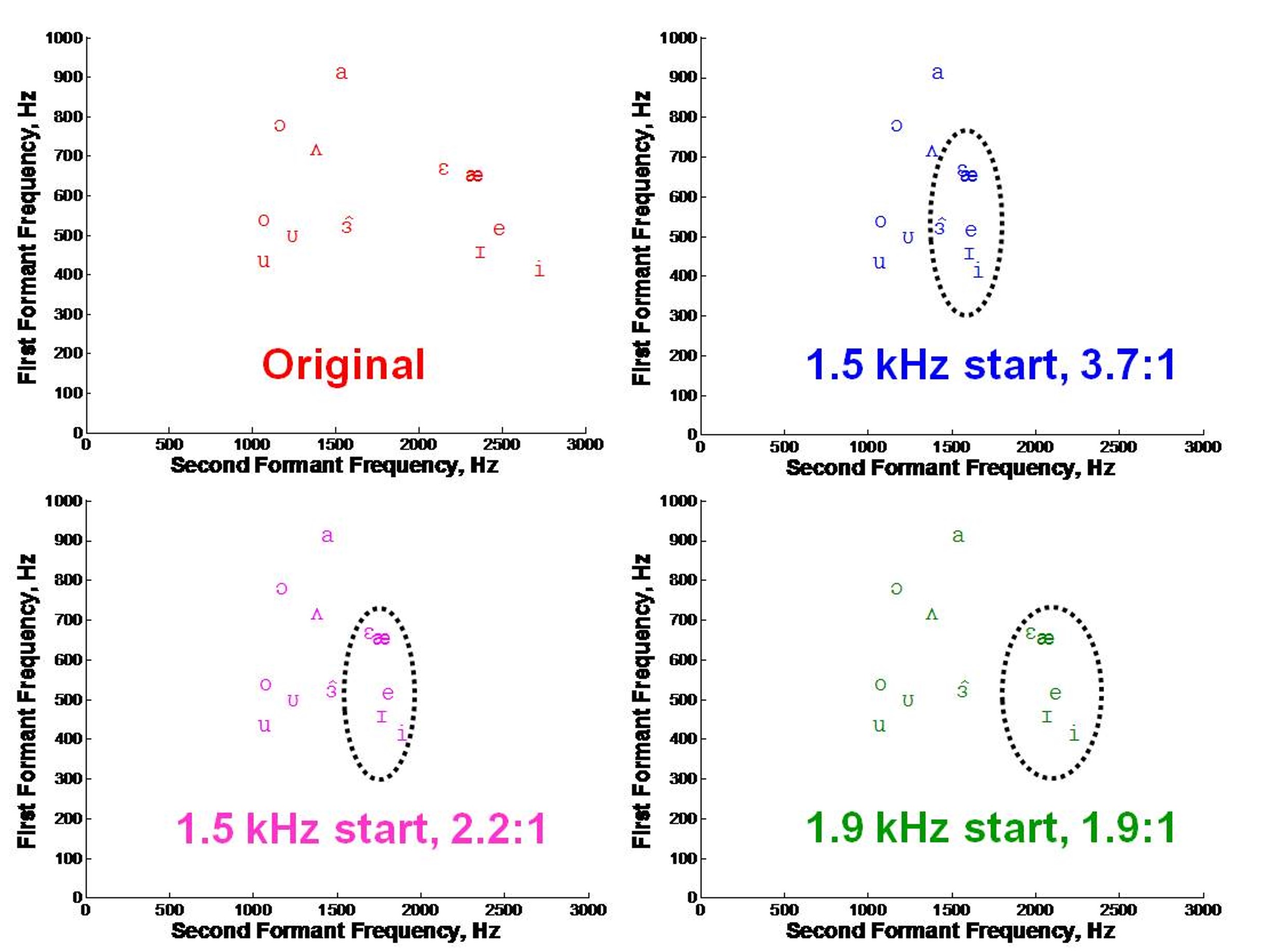

Figure 6. Vowel space representation with the first formant on the Y-axis and the second formant on the X-axis. The upper-left panel (in red) shows the standard vowel space, while subsequent panels demonstrate the effects of nonlinear frequency compression at various ratios, indicating the potential alteration of vowel identity due to the squeezing of formants, with higher ratios resulting in greater compression and potential confusion of vowel sounds.

As indicated earlier, most of the information in the low-frequency speech spectrum is frequency-specific. Specifically, we have formants with consonants like liquids and glides. We also have formants in the vowels. These formants transition into and out of the different consonants. Recall from basic speech acoustics that you can categorize our vowels acoustically according to their first and second formant frequencies. As shown in Figure 6, the first formant is on the Y-axis, and the second formant is on the X-axis. This is typically called the vowel space. The upper-left panel in red is the typical unaltered vowel space. With nonlinear frequency compression, which I detail later, the information above the start frequency is squeezed down by different ratios. Higher ratios result in more squeezing. We know that vowel identification is tightly tied to where the formants are, so we might change the vowel identity if we change the formants. And the closer the formants are, the more they will be confused.
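
To make the squeezing concrete, here is a minimal Python sketch of one commonly described form of nonlinear frequency compression, in which frequencies above the start frequency are compressed on a logarithmic scale. The function name, the start frequency, the ratios, and the formant values are illustrative assumptions; this is not any manufacturer's actual implementation.

```python
def nfc_remap(f_in_hz, start_hz, ratio):
    """Map an input frequency (Hz) to an output frequency (Hz).

    Assumption: compression acts on a log-frequency scale above start_hz,
    so f_out = start_hz * (f_in / start_hz) ** (1 / ratio).
    Frequencies at or below start_hz pass through unchanged.
    """
    if ratio < 1:
        raise ValueError("ratio must be >= 1")
    if f_in_hz <= start_hz:
        return float(f_in_hz)
    return start_hz * (f_in_hz / start_hz) ** (1.0 / ratio)


# Hypothetical second-formant frequencies (Hz) for two different vowels.
f2_a, f2_b = 2000.0, 2500.0
start = 1500.0  # hypothetical start frequency, chosen low to show the effect

for ratio in (1.0, 2.0, 4.0):
    out_a = nfc_remap(f2_a, start, ratio)
    out_b = nfc_remap(f2_b, start, ratio)
    # The spacing between the two formants shrinks as the ratio grows.
    print(f"ratio {ratio}: F2 spacing after lowering = {out_b - out_a:.0f} Hz")
```

Under these assumptions, the two second formants move closer together as the ratio increases, which is the kind of crowding in the vowel space that Figure 6 illustrates.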

Balancing the Positives Against the Negatives

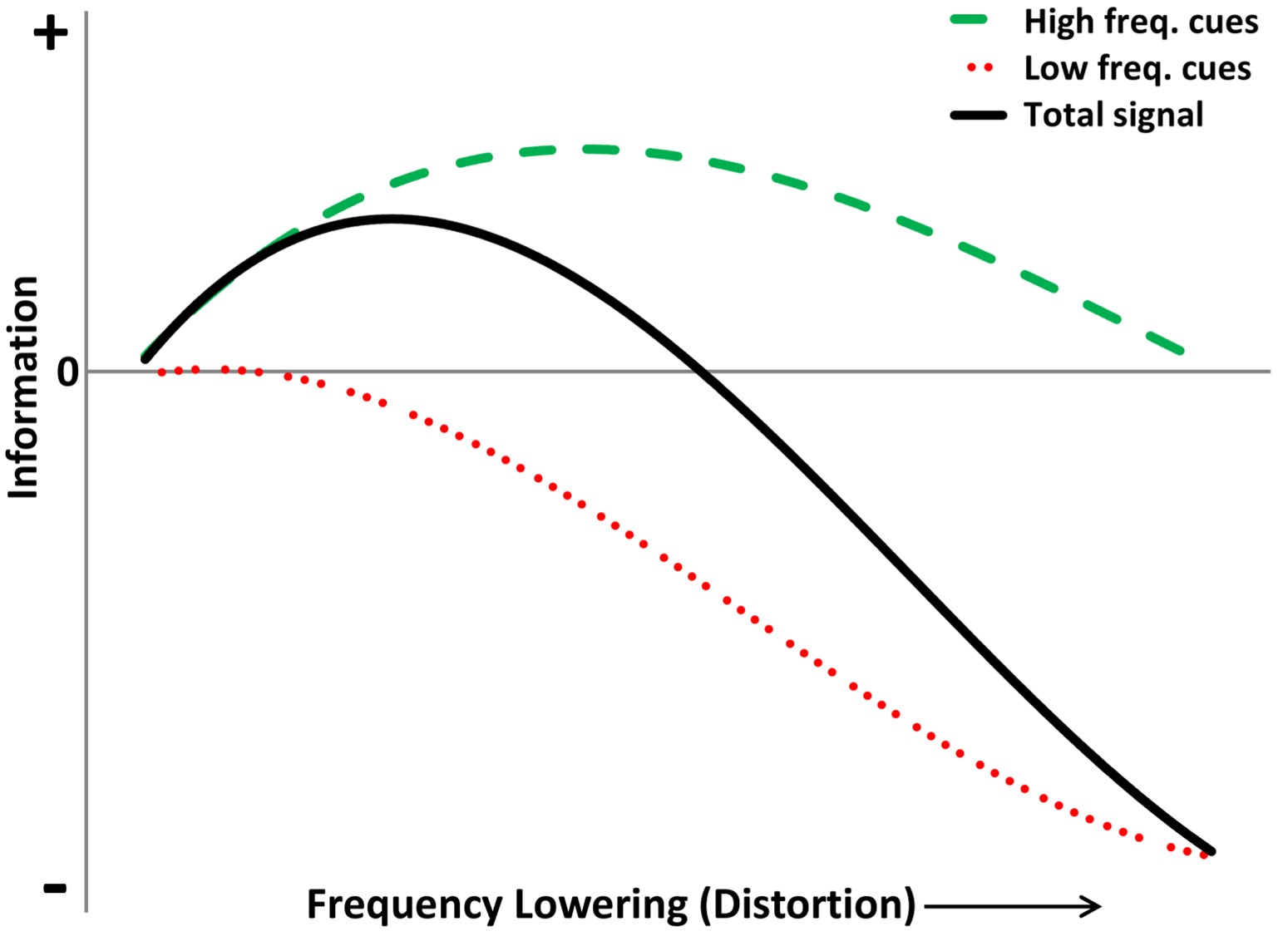

Figure 7. Hypothetical model correlating information (y-axis) with the degree of frequency lowering/distortion (x-axis). The green dotted line represents high-frequency information gain, which increases with frequency lowering to an optimal point before it diminishes due to acoustic overlap. The red line shows how low-frequency information is preserved or distorted by frequency lowering. The black line, the sum of green and red, illustrates the non-monotonic total information trajectory, peaking before decreasing as speech understanding worsens, emphasizing the balance needed in frequency lowering to enhance overall speech comprehension.

All of this can be summarized with the following hypothetical model. Shown along the y-axis is a metric of information, and along the x-axis is the amount of frequency lowering, which is synonymous with distortion. Recall that frequency lowering, if done right, will be constructive distortion. What we want to avoid is destructive distortion. As shown by the green dotted line, as the amount of frequency lowering increases, so does the amount of information from the high frequencies, but only up to a point, after which the informational value decreases. It does this because the lowered signals overlap more and more, so that the high-frequency sounds are no longer distinguishable; they sound the same. Considering the other side of the spectrum, the red line indicates that mild or moderate frequency lowering can preserve information from the low frequencies with most techniques. However, as shown in Figure 6, you can overdo it: if you keep increasing the degree of frequency lowering, you will increase the distortion of the low-frequency information. The total information, the black line, is the sum of the green and the red lines. The predicted relationship is non-monotonic: it reaches a peak and then declines, and at some point the net effect becomes negative such that overall speech understanding worsens. The point at which this occurs likely depends on the interaction with other factors like the individual hearing loss and the specific frequency-lowering technique. The critical teaching points are that less can be more and that a successful fitting will give the listener more than what is taken away.
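
The shape of this hypothetical model can be sketched numerically. The Python snippet below is only a toy illustration: the curve shapes, the constants, and the 0-to-1 scale for the amount of lowering are all arbitrary assumptions chosen to reproduce the qualitative behavior described above, not values taken from Figure 7 or from any data.

```python
import math


def high_freq_info(x, peak_gain=0.3, x_peak=0.4):
    """Green line (assumed shape): rises, peaks at x_peak, then declines."""
    return peak_gain * (x / x_peak) * math.exp(1.0 - x / x_peak)


def low_freq_info(x):
    """Red line (assumed shape): nearly preserved for mild lowering,
    increasingly distorted as lowering becomes aggressive."""
    return 1.0 - x ** 3


def total_info(x):
    """Black line: the sum of the green and red lines."""
    return high_freq_info(x) + low_freq_info(x)


# Amount of frequency lowering on an arbitrary 0 (off) to 1 (maximum) scale.
amounts = [i / 20 for i in range(21)]
best = max(amounts, key=total_info)

print(f"best amount of lowering on this toy scale: {best}")
print(f"net change at best setting: {total_info(best) - total_info(0.0):+.3f}")
print(f"net change at maximum lowering: {total_info(1.0) - total_info(0.0):+.3f}")
```

In this toy version, the best setting sits well short of the maximum, and the net change at maximum lowering is negative, which mirrors the point that less can be more.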

To Fit or Not to Fit, that is the Question

Ultimately, a decision has to be made whether or not the potential pros outweigh the cons. If the hearing aid user experiences speech perception deficits with conventional amplification despite your best efforts to achieve high-frequency audibility, it might be a deciding factor. If the decision is to fit, there are a few things that you need to ask. First, “how does the technology of choice work?” The manufacturers have fundamental differences regarding the techniques, terminology, and what happens when you adjust the settings. Refer back to Figure 5, showing all the differences between the manufacturers. Second, you must know what is happening underneath the hood to make an efficacious decision for your hearing aid user. Third, you must also know how much of the lowered information is audible. This will be the topic of Part II. And then, finally, you must ask, “Can the hearing aid user use the lowered information?” Your validation measures will answer this, whether they consist of a speech test, a pencil and paper questionnaire, or simply open-ended questions.

Part II: Using Probe Microphone Measures to Optimize Outcomes with Frequency Lowering

The Importance of Probe Microphone Measures: Making Sure the Cure is Not Worse than the Disease

One of the takeaway points from Part I was the importance of minimizing potential side effects associated with frequency lowering. To facilitate this goal, knowing what to look for when doing probe microphone measurements is essential. You know the importance of doing probe microphone measures for conventional amplification, and that does not change for hearing aids with frequency lowering. However, when using frequency lowering, you want to look for additional things in the amplified spectrum. Because side effects can cause harm, whether by altering the low frequencies or by implementing too much frequency lowering, you must know what you are delivering to the hearing aid user. Part of this has to do with knowing what the output is. Probe microphone measures will tell you the hearing aid output after frequency lowering. You might know what the input is, but probe microphone measures alone will not tell you the relationship between input and output. That relationship will be discussed later for each manufacturer. In the meantime, there are specific things you want to look for in the output.

It is common for individuals to stop taking a medicine because they prefer the original disease to the side effects. Let us think about this in the context of a hearing aid with frequency lowering. If you fit it inappropriately — to the point where they have side effects — they may not understand speech as well. Therefore, they may go without hearing aids, return the product, and never see you again.

Primary Goals for Probe Microphone Measures

There are specific things you want to do for each manufacturer and how they approach frequency lowering. However, there are some commonalities when doing probe microphone measurements. Three key goals transcend all of the different techniques.

- The audible bandwidth after frequency lowering is activated should not be less than before. The critical thing is that you do not want to reduce the audible bandwidth. If you can achieve audibility up to a particular frequency, you want to use that real estate as much as possible. You want to avoid using so much frequency lowering that it takes away from what you had to begin with because you are working against yourself. Think about this in the context of overall net benefit: you want to provide additional high-frequency information while maintaining or minimizing detriment to the existing low-frequency information.

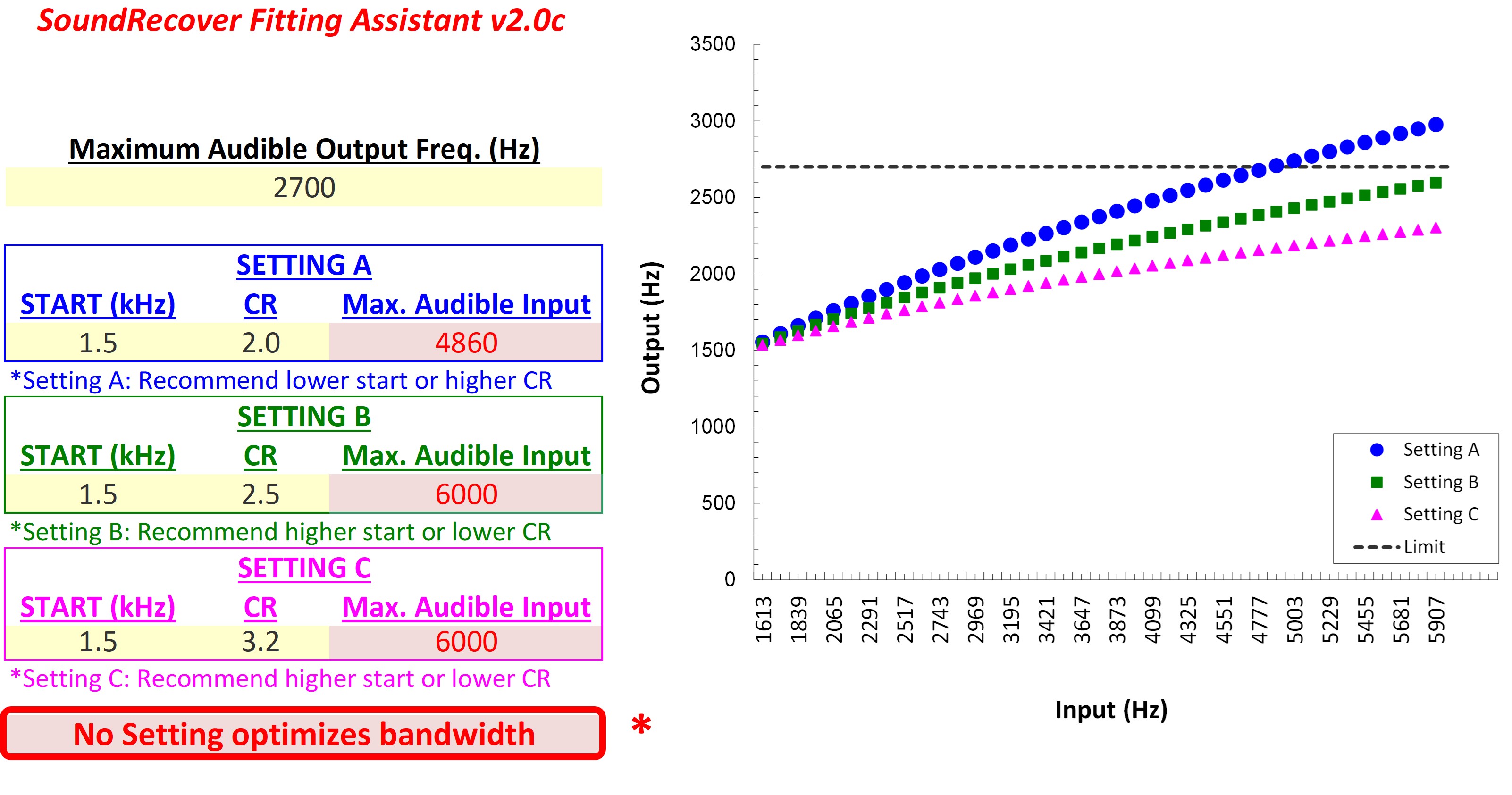

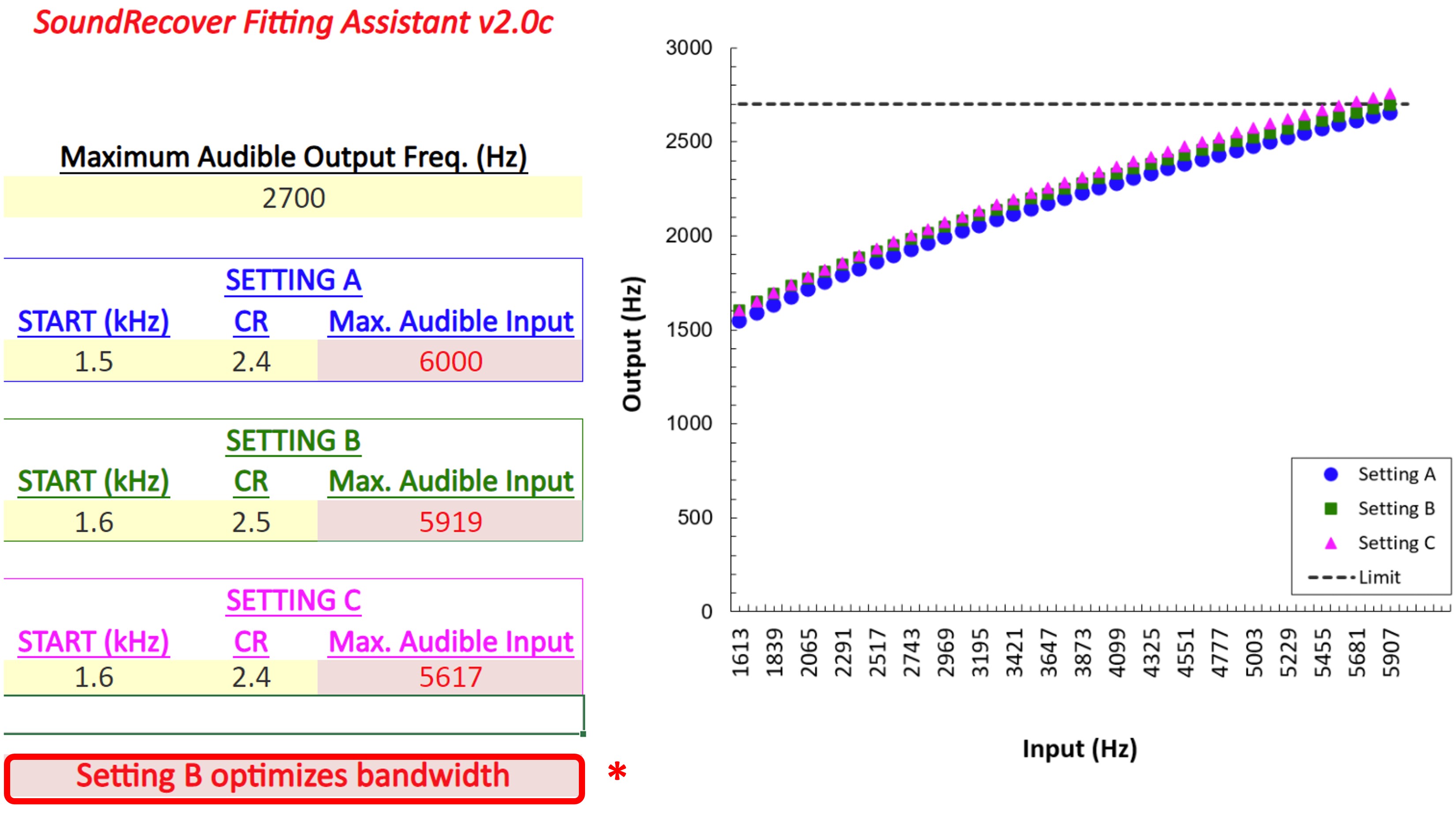

- The lowered information should be audible. This seems obvious, but it is not because, depending upon the technology, you cannot always tell that the lowered information is audible by looking at the output. How can you know what went into the input when all you have is the output? Manufacturers define things slightly differently and operate in different ways, so it is possible to get confused. It is not uncommon for all frequency lowering to happen in a range where the hearing aid user has no audibility, especially if you use the manufacturer’s default settings. In this case, you should not be surprised that the hearing aid user reports no benefit. Recall the clinical and research barriers discussed in Part I. It is the same situation on the research side of things. If everybody is fit with the same setting using a manufacturer’s default, then you really have no control over how much of the lowered information is put into a range of audibility. I have developed online tools to help address this goal; these will be discussed later.

- Use the weakest frequency-lowering setting to accomplish your objective. This objective may vary depending on the severity of hearing loss. For example, you might do slightly different things if you are fitting a mild-to-moderate hearing loss versus a moderately-severe hearing loss. How do you know which setting is the weakest, and how do you know you are accomplishing your objective? Again, this is where my online tools come into play.
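
The three goals above can be expressed as a simple selection rule. The following Python sketch is a hypothetical illustration only: the `Setting` fields, the strength ranking, the tolerance parameter, and the example numbers are all assumptions made for the sake of the example, and in practice the values would come from probe microphone measures and the fitting assistants discussed later.

```python
from dataclasses import dataclass


@dataclass
class Setting:
    name: str
    strength: int                # hypothetical rank: larger = more lowering
    maof_after_hz: float         # audible bandwidth measured with setting active
    max_audible_input_hz: float  # highest input frequency lowered into audibility


def weakest_acceptable(settings, maof_before_hz, target_input_hz, tolerance_hz=0.0):
    """Return the weakest setting that meets the objective, or None."""
    acceptable = [
        s for s in settings
        # Goal 1: do not reduce the audible bandwidth (within a tolerance).
        if s.maof_after_hz >= maof_before_hz - tolerance_hz
        # Goal 2: the lowered information up to the target must be audible.
        and s.max_audible_input_hz >= target_input_hz
    ]
    # Goal 3: among acceptable settings, choose the weakest one.
    return min(acceptable, key=lambda s: s.strength) if acceptable else None


# Hypothetical candidate settings for a fitting with a 3100 Hz bandwidth.
candidates = [
    Setting("weak", 1, 3100.0, 4500.0),
    Setting("medium", 2, 3100.0, 6500.0),
    Setting("strong", 3, 2600.0, 8000.0),
]

choice = weakest_acceptable(candidates, maof_before_hz=3100.0, target_input_hz=6000.0)
print(choice.name if choice else "no setting meets all three goals")
```

Here the strongest setting is rejected because it shrinks the audible bandwidth, and the weakest setting is rejected because it does not reach the target, so the middle setting is selected. The target input frequency stands in for the clinician's objective, which may differ with the severity of hearing loss.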

A Protocol for Fitting Frequency-Lowering Hearing Aids

While the methods used for frequency lowering differ across manufacturers, we can establish a generic protocol for fitting all frequency-lowering hearing aids.

- Deactivate the frequency lowering and then fit the hearing aid to prescriptive targets (e.g., DSL or NAL-NL2) using probe microphone measures, just as you would for a conventional hearing aid. The point is to see how closely you can reach the targets.

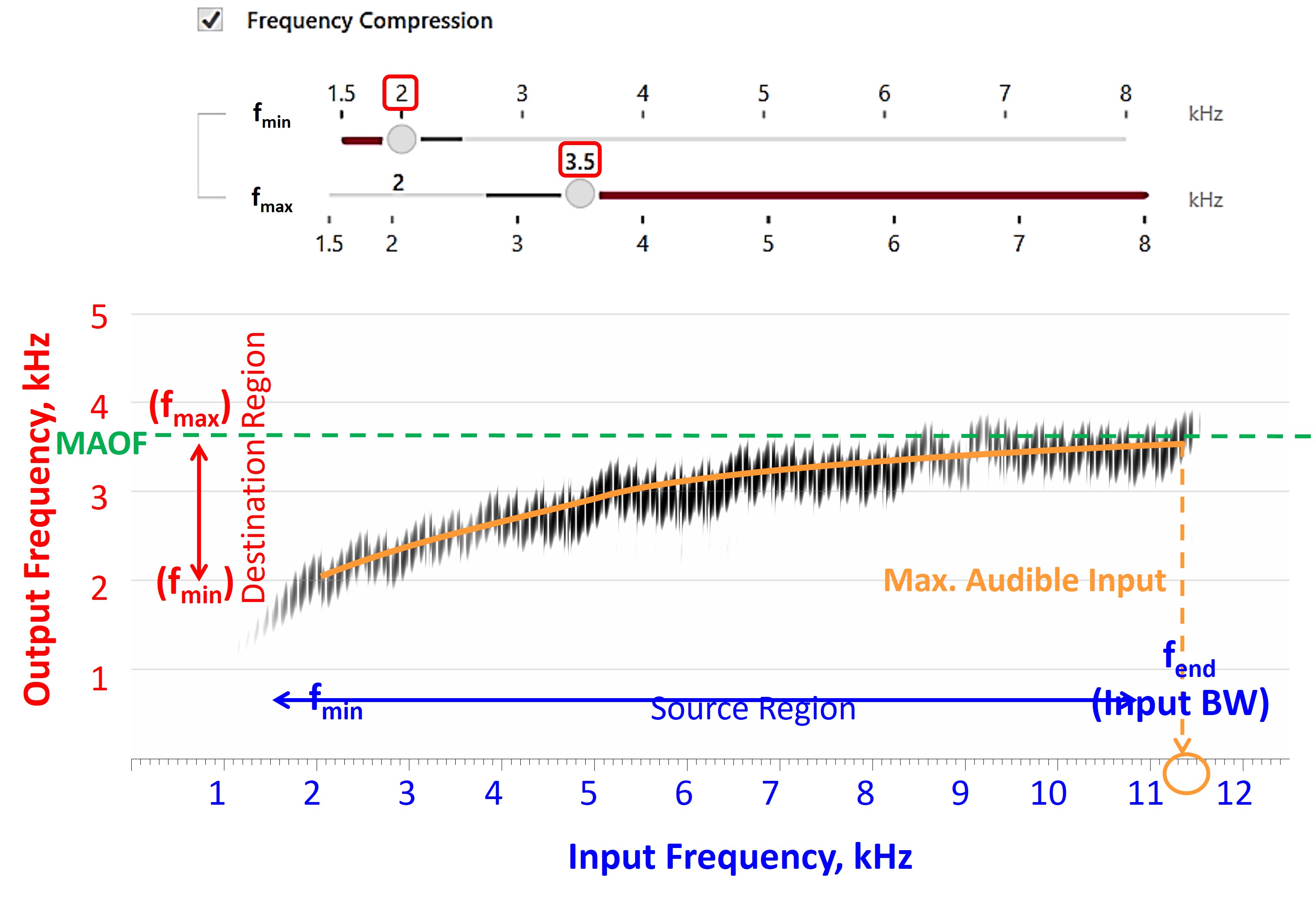

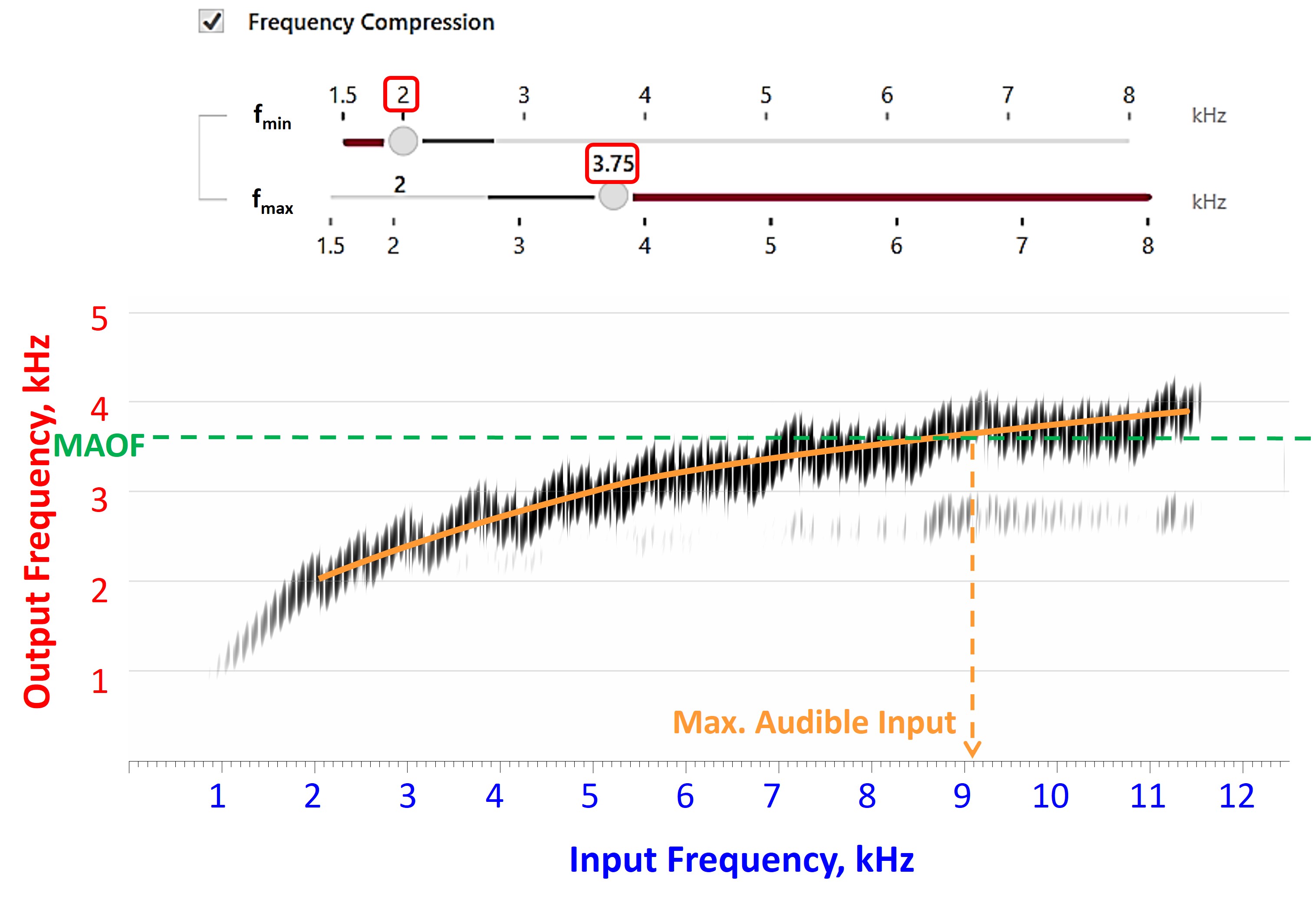

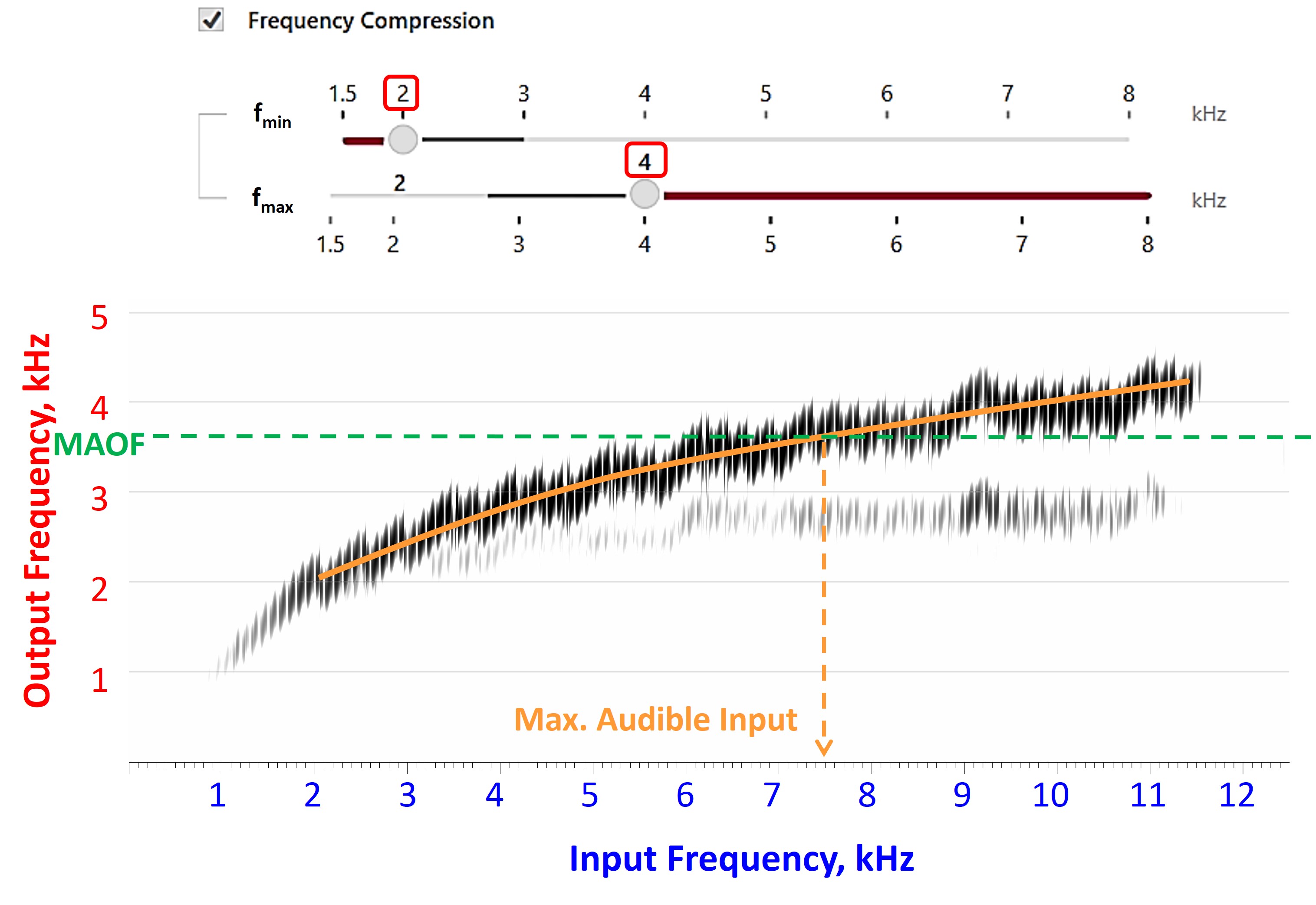

- With the frequency lowering still deactivated, find the maximum audible output frequency (MAOF). The maximum audible output frequency is the highest frequency at which the aided output exceeds the threshold on the SPL-o-gram. The objective is to determine how much real estate you have to work with.

- Activate frequency lowering and use the online tools to position the lowered speech into the audible bandwidth, that is, below the MAOF. While you want to fit the lowered speech in that region, you do not want to reduce what you had to work with before. The destination region is where the frequency-lowered information will be at the output, so most of the lowered speech must be below the MAOF to be audible. At the same time, you want to avoid too much lowering, which can introduce side effects to the point where you have zero or negative overall net benefit, thereby compromising speech intelligibility.

- Once you have selected the setting that matches the overarching goals highlighted earlier, the last thing to do is re-run the speechmap and ensure that the MAOF is reasonably close to what it was when frequency lowering was deactivated.

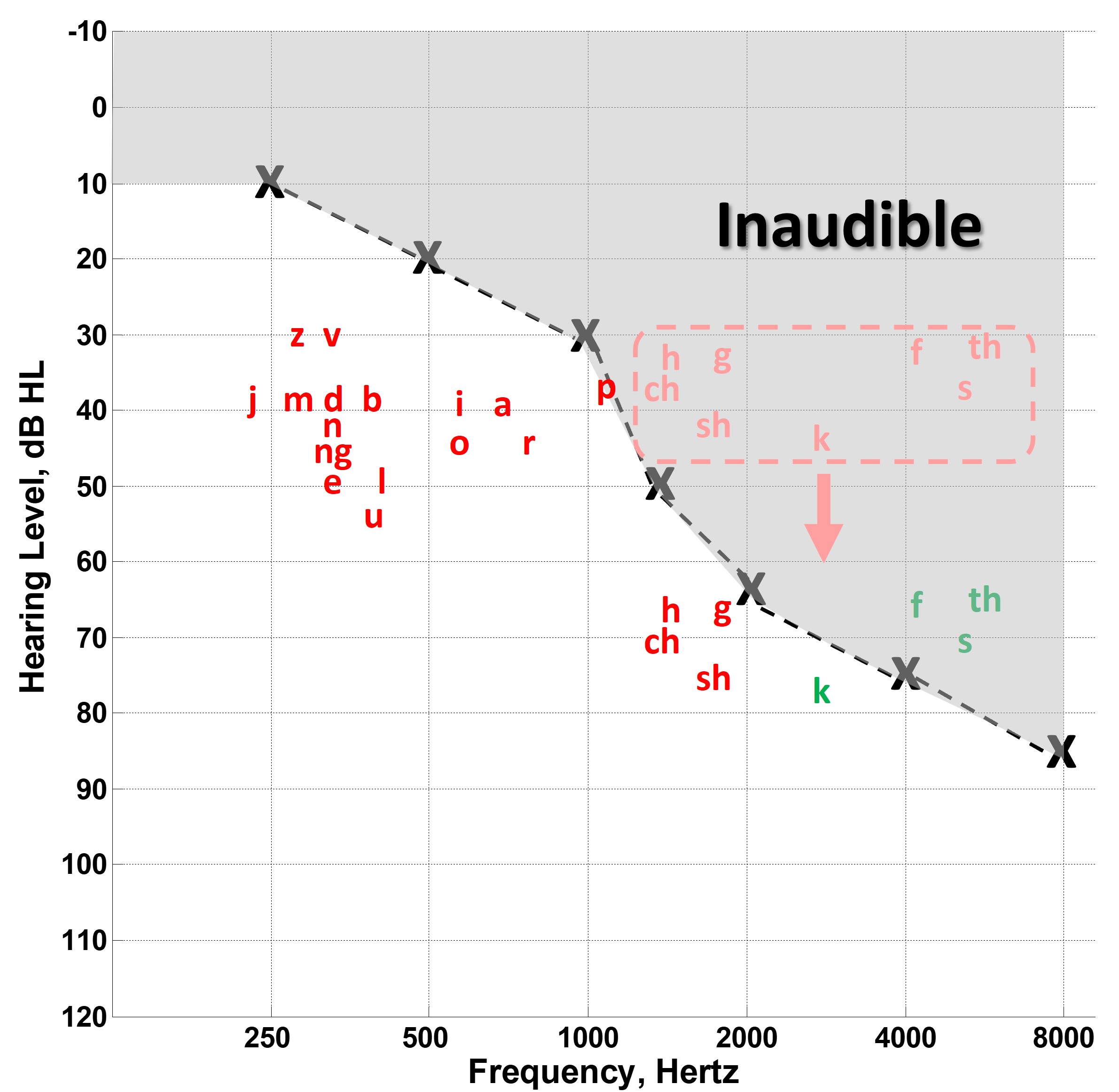

The Maximum Audible Output Frequency (MAOF)

Going back through my records, the term “maximum audible output frequency,” affectionately known as the MAOF, was born on March 30, 2009. Referring to Figure 1, when you look at the aided speech spectrum shown by the green-shaded area, the maximum audible output frequency tells you how much real estate you have to work with before frequency-lowering is activated. As shown by the arrow pointing to 3100 Hz, the MAOF is somewhere between the frequencies at which average speech and the peaks of speech cross the line (red in this example) corresponding to the threshold. You must use your judgment about where you choose along this line, but in this example, I chose where the peaks of speech cross the threshold line. The MAOF tells you at least two things. First, it tells you the severity of the deficit — that is, how much sound the hearing aid user is missing or how much of the speech spectrum is inaudible. Second, it gives you an idea of how much real estate you have to work with or the range of audible frequencies available for re-coding some of the inaudible high-frequency speech information. Of course, the lower the MAOF is, the more deficit you have and the less room you have to work with when trying to re-code it.
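
As a rough illustration of the definition, the Python sketch below estimates the MAOF from a set of measured points by finding the highest frequency at which the aided output exceeds threshold and then linearly interpolating to the crossing point. The function name, the interpolation step, and all of the numbers are hypothetical assumptions; clinical verification systems display these curves at much finer resolution, and judgment is still required.

```python
def maof_hz(freqs_hz, aided_output_db, thresholds_db):
    """Estimate the maximum audible output frequency (Hz).

    All levels are assumed to be in dB SPL at the eardrum, measured at
    the same ascending frequencies. Returns None if nothing is audible.
    """
    margins = [out - thr for out, thr in zip(aided_output_db, thresholds_db)]
    audible = [i for i, m in enumerate(margins) if m > 0]
    if not audible:
        return None
    i = audible[-1]  # highest measured frequency that is still audible
    if i == len(freqs_hz) - 1:
        return float(freqs_hz[i])
    # Linear interpolation (in Hz) to where the output crosses threshold.
    span = freqs_hz[i + 1] - freqs_hz[i]
    return freqs_hz[i] + span * margins[i] / (margins[i] - margins[i + 1])


# Hypothetical measurements, not taken from Figure 1.
freqs = [250, 500, 1000, 2000, 3000, 4000, 6000]
thresholds = [40, 45, 55, 65, 75, 90, 105]
speech_peaks = [70, 75, 78, 80, 80, 80, 70]
speech_average = [p - 12 for p in speech_peaks]  # assumed 12 dB below the peaks

print(f"MAOF using peaks:   {maof_hz(freqs, speech_peaks, thresholds):.0f} Hz")
print(f"MAOF using average: {maof_hz(freqs, speech_average, thresholds):.0f} Hz")
```

With these made-up values, using the peaks of speech gives a higher MAOF than using the average, which is why the choice between the two affects how much real estate appears to be available.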

Figure 8. Probe microphone output illustrating the challenges of enhancing audibility while preserving speech information integrity with frequency-lowering techniques, including (1) potential distortion or truncation of the inaudible information, (2) possible masking of existing sounds when repositioning the information, (3) the necessity of amplifying the newly coded signal in areas of cochlear damage, and (4) the inherent deficit created by limited bandwidth in the average speech spectrum.

As indicated by Figure 8, the overall net benefit from frequency lowering is limited by at least four things.

- When you have a region of inaudibility, the particular method may have to distort this information. That is, you might have to squeeze it using compression and/or you might have to truncate it by taking only a limited part of the total inaudibility range instead of the full bandwidth. So, you may not be moving all the information in the original input signal.

- When you move the missing information, you must put it where the hearing aid user can hear it. Sometimes, there may be coexisting sounds at those particular frequencies at the same time, so you run the risk of masking the information already there. Or, to accommodate the new frequency-lowered information with compression techniques, you may have to move information in the audible region that would otherwise be amplified normally through the hearing aid.

- As indicated by the up arrow, you must amplify the frequency-lowered signal. The significance of this is that newly re-coded information must be put on the cochlea where there is probably some existing outer and inner hair cell damage.

- As indicated by the rightward-facing arrow in the average speech spectrum (gray-shaded area), the size of the deficit may limit the benefit from frequency lowering. In other words, the benefit is bounded by the amount of information that is lost when bandwidth is reduced for normal-hearing listeners. In this sense, #4 establishes the size of the deficit, that is, the net loss of information due to limited bandwidth, and #1, #2, and #3 influence how much of this deficit can be recovered with frequency lowering.

This is just a laundry list of things that limit the total benefit you may get compared to simply extending bandwidth. In summary, you may need to put a limited amount of information from the inaudible region in a region with low-frequency speech information and sensorineural hearing loss.

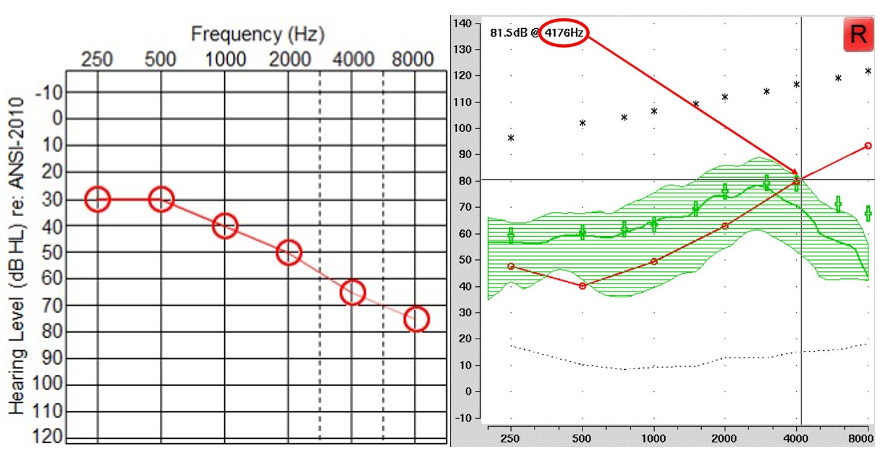

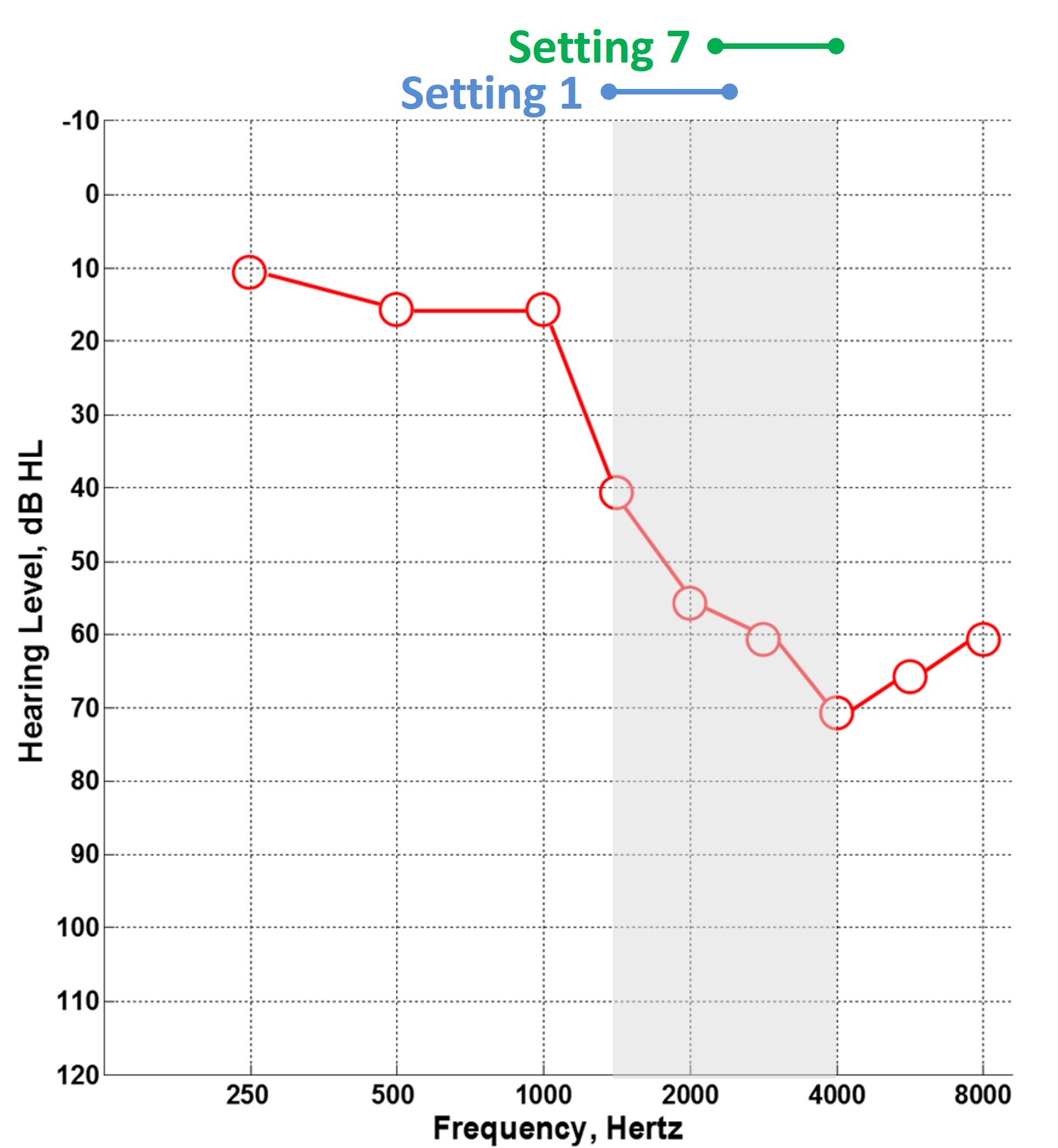

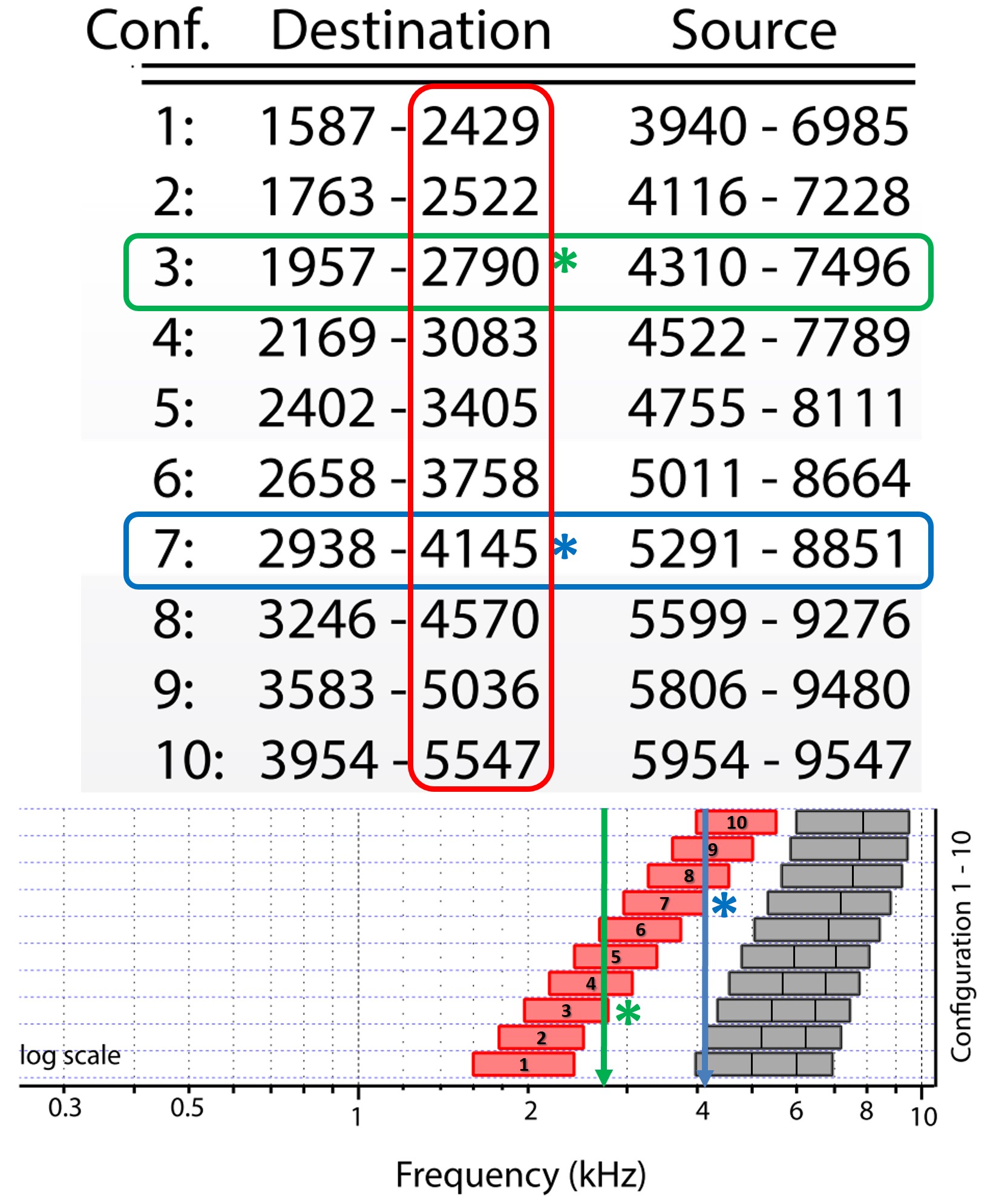

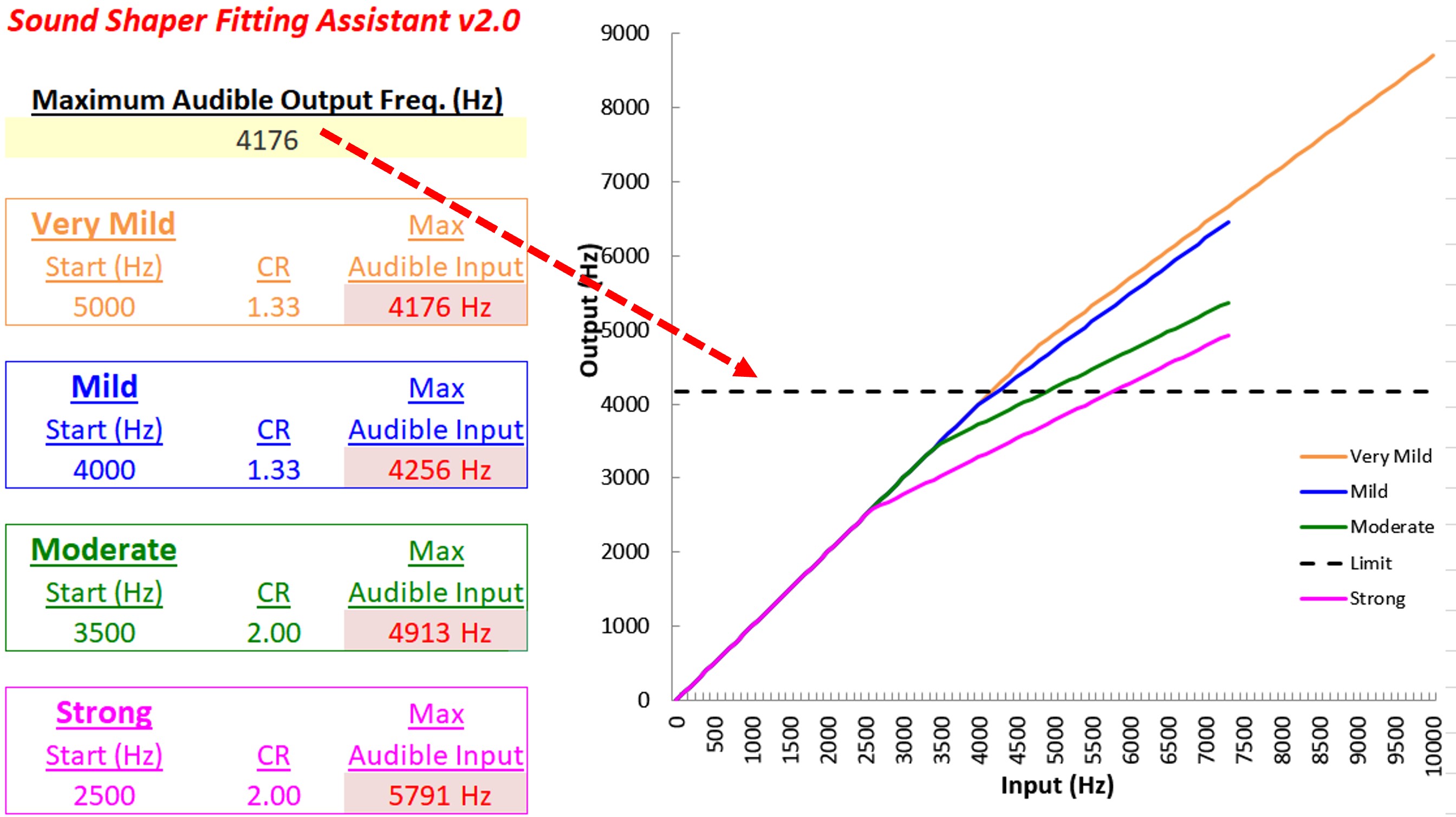

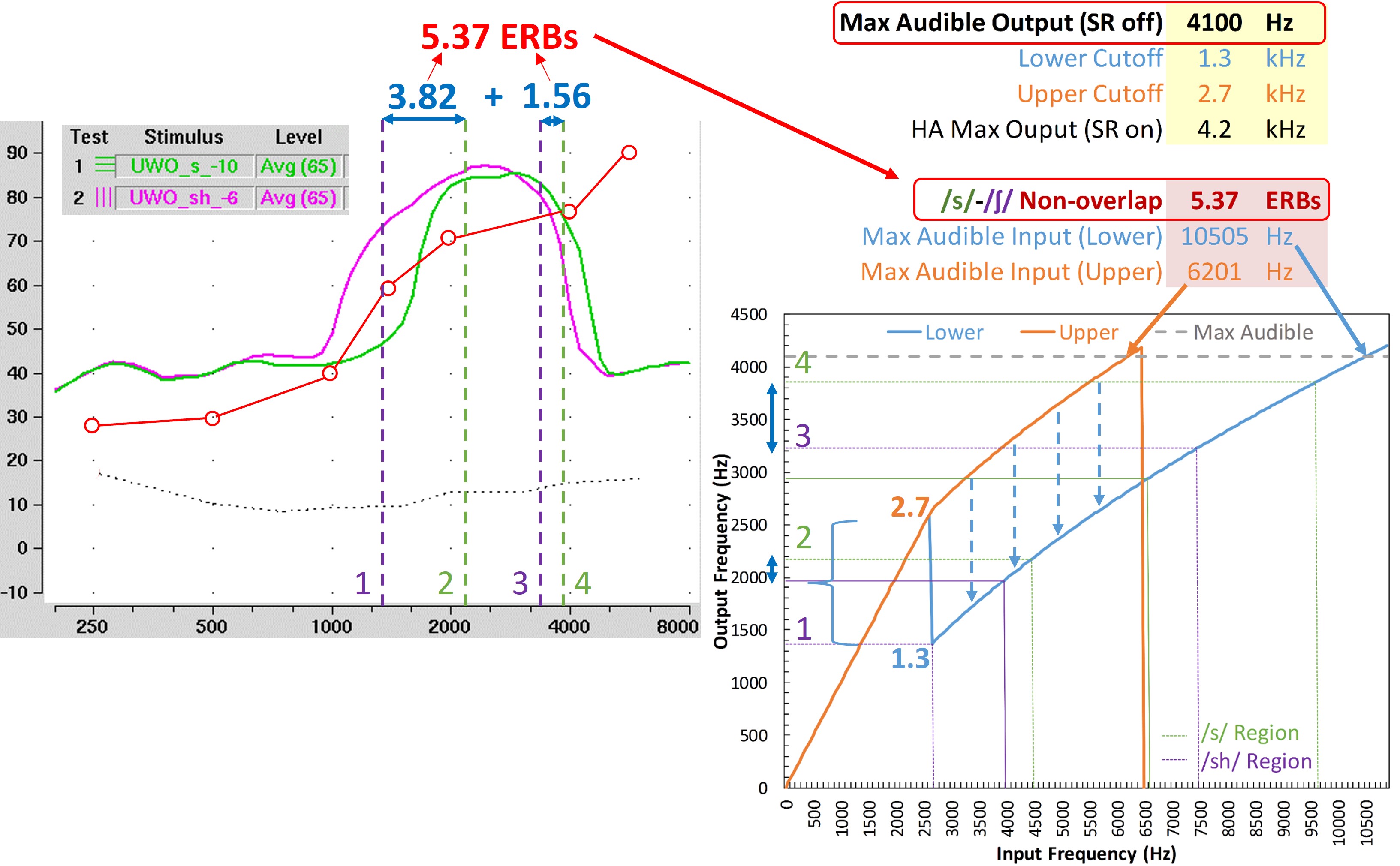

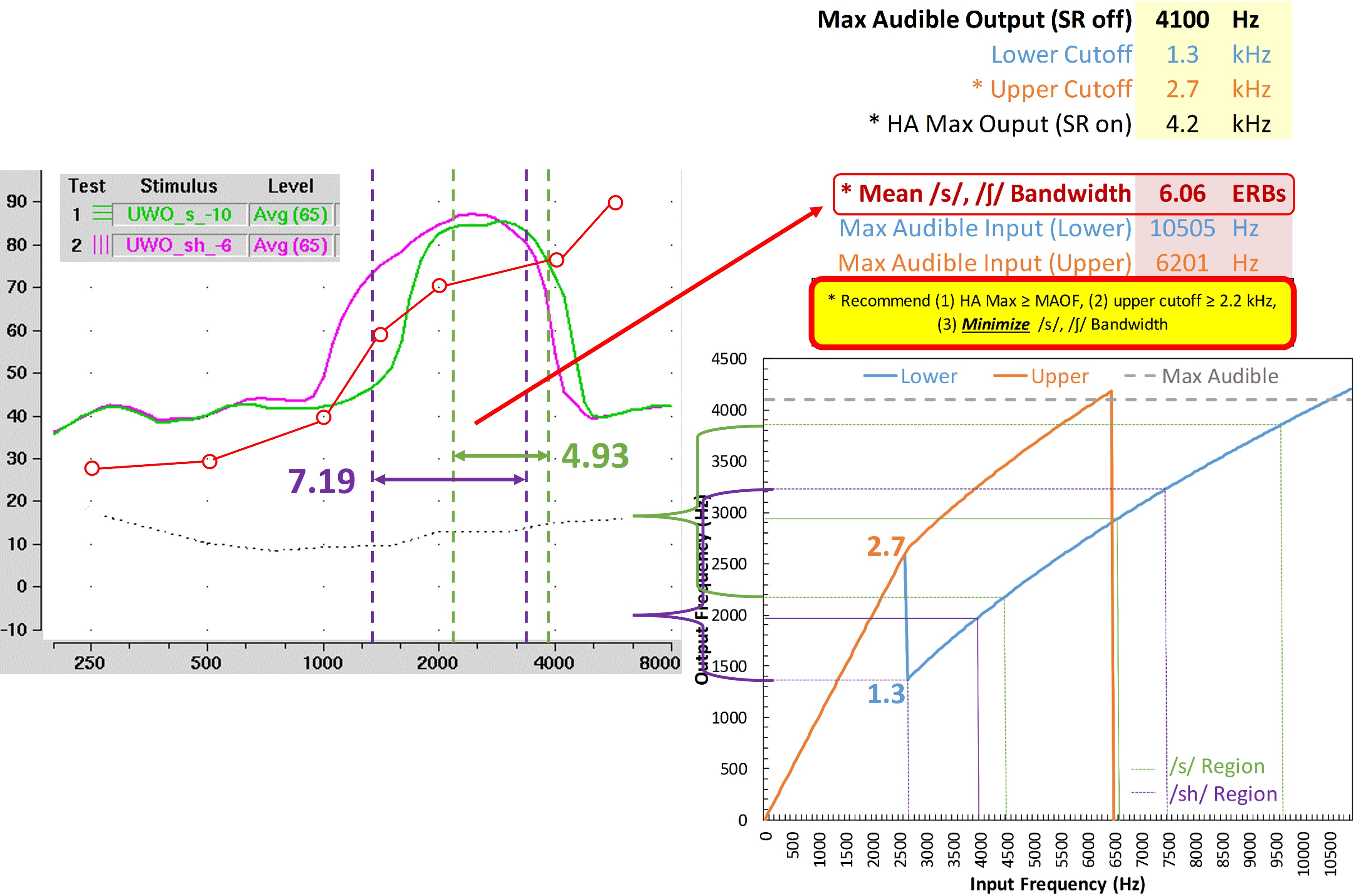

Figure 9. Left panel displays an example audiogram, while the right panel shows the SPL-o-gram of the aided speech spectrum. The maximum audible output frequency is marked by a red arrow at 4176 Hz, indicating the highest frequency at which audibility is achieved.

I go through two example audiograms for each manufacturer. Figure 9 shows the first example. The audiogram is shown on the left, and the SPL-o-gram of the aided speech spectrum is shown on the right. Again, the maximum audible output frequency is the highest frequency at which we have audibility. In this case, it is 4176 Hz, as indicated by the red arrow. Some people have argued that you should use the average. I have always used the peak since the frequency where the aided speech spectrum crosses audibility can vary depending on vocal effort and environmental acoustics. Furthermore, the peaks of some high-frequency sounds like “s” and “sh” contain speech information that can be useful, so I have always used the peak, but I also tell people to use their best judgment. For example, if we instead had only a sliver of barely audible information extending over a range of 1000 or 2000 Hz, then using your judgment, you would say there is no way that a person can use that information. So again, it does not have to be an absolute thing, but this is a nice, clean case.

Figure 10. Left panel displays an audiogram with more significant hearing loss than the example in Figure 9. The right panel shows the SPL-o-gram of the aided speech spectrum and the maximum audible output frequency at 2700 Hz.

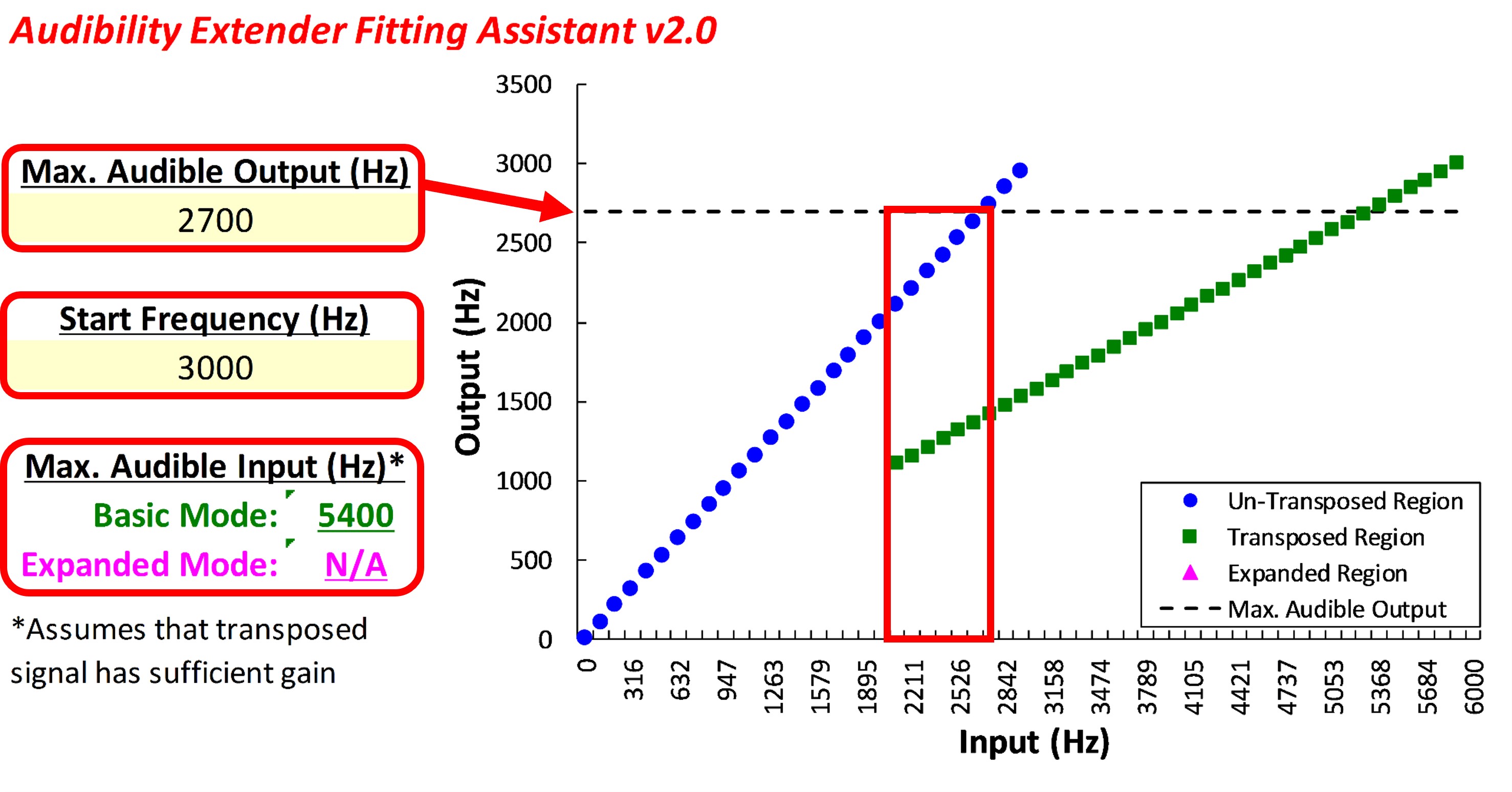

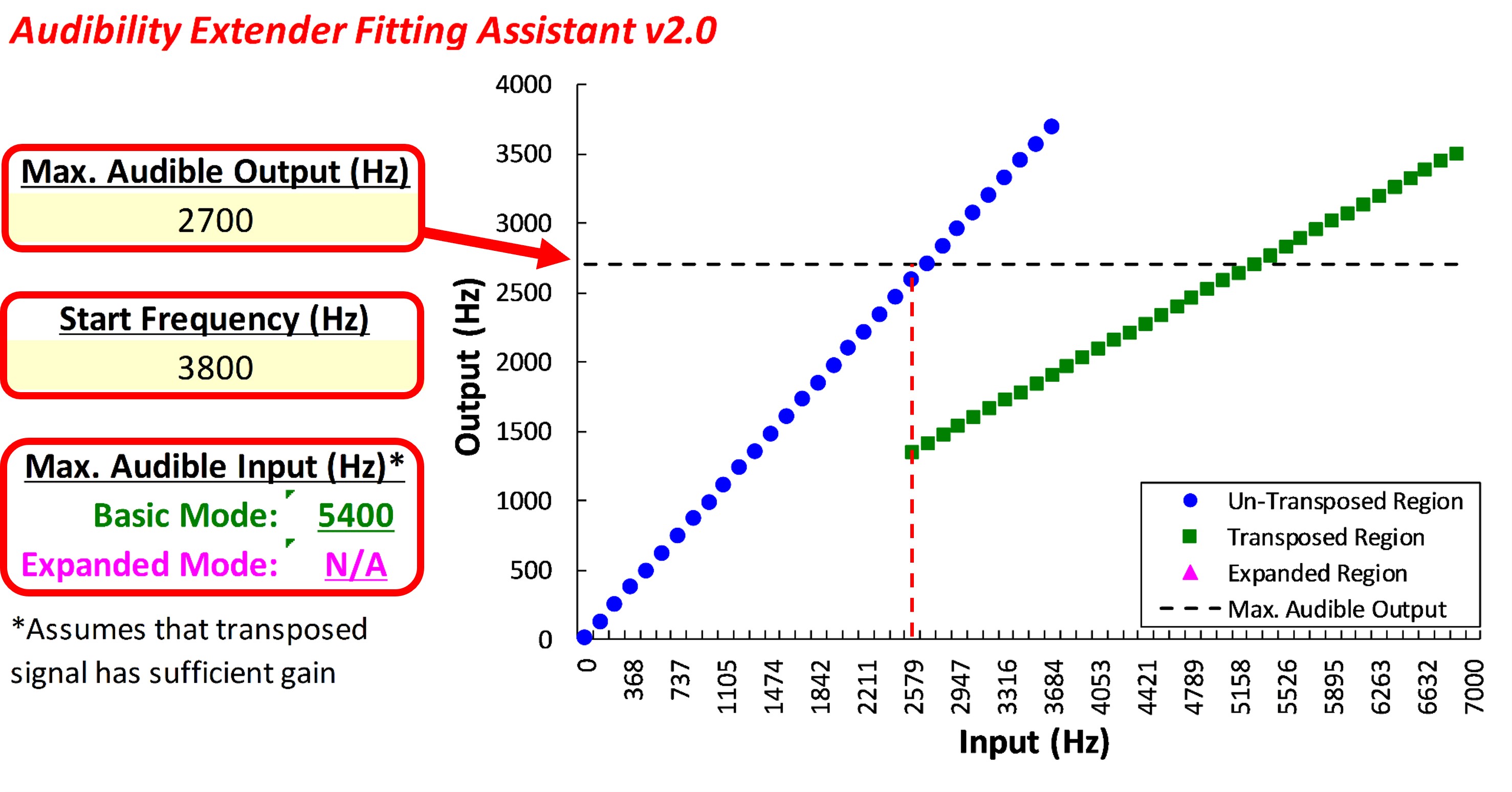

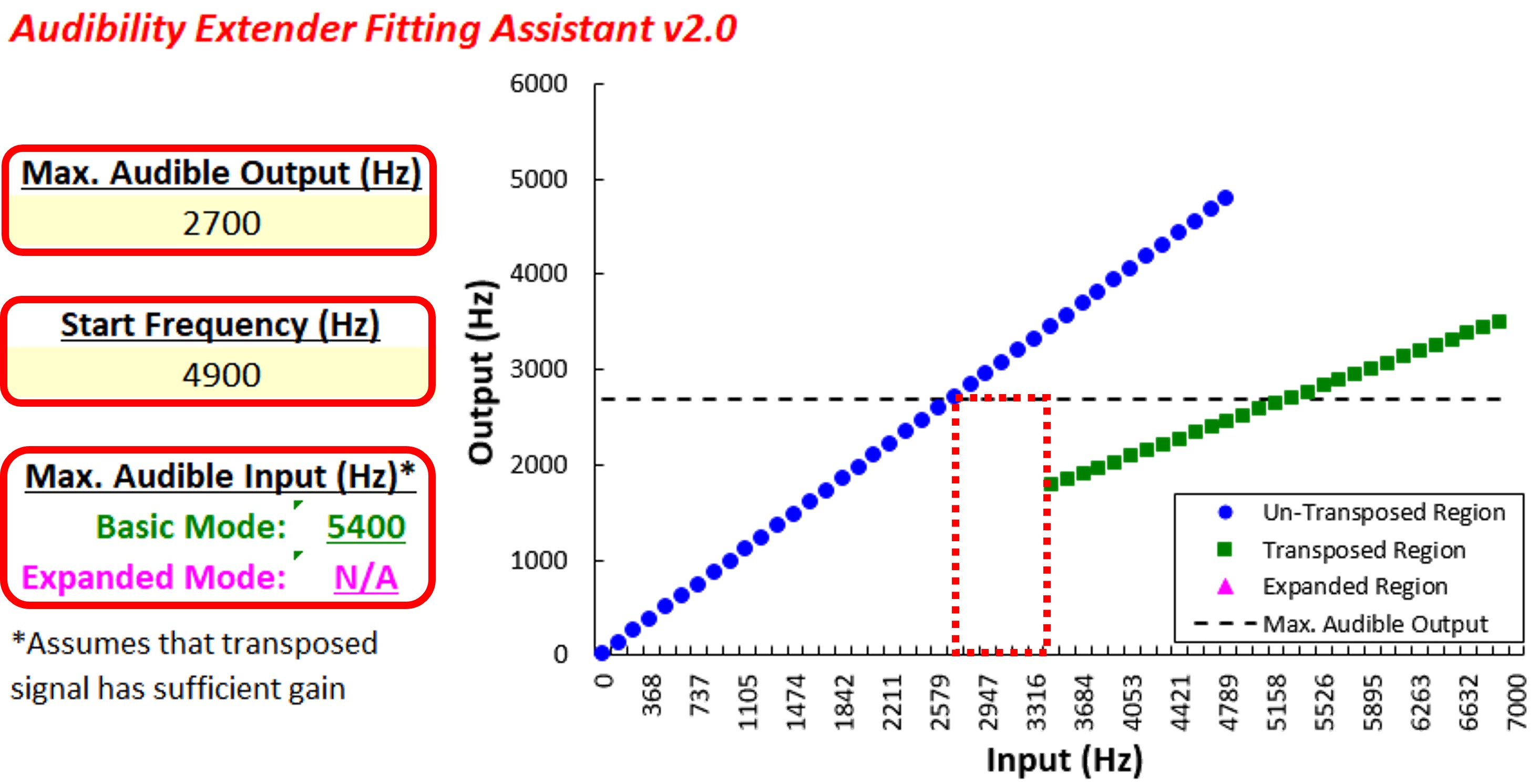

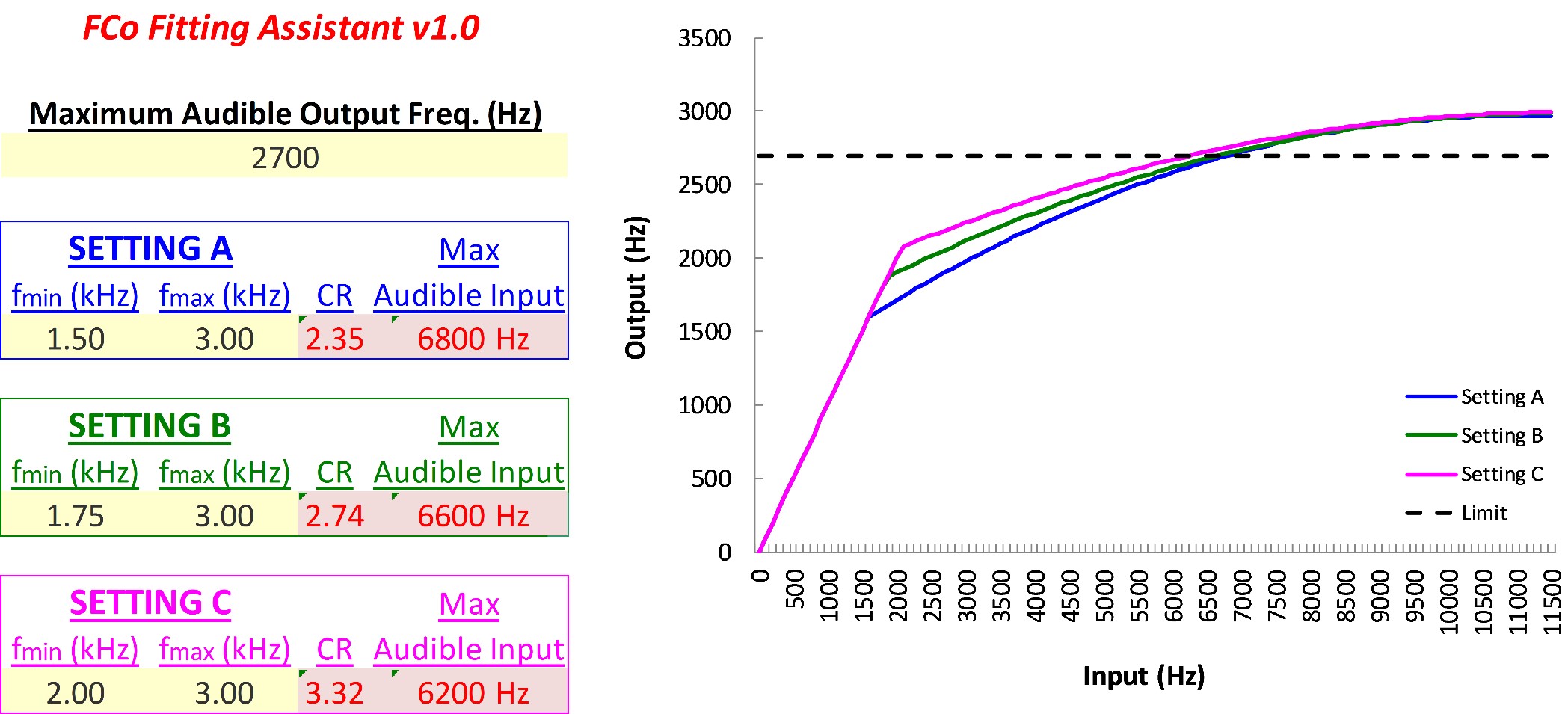

Figure 10 shows the second example that will be used later. Again, using the frequency where the peak of the aided speech spectrum crosses the line of audibility, the maximum audible output frequency for this example is 2700 Hz.

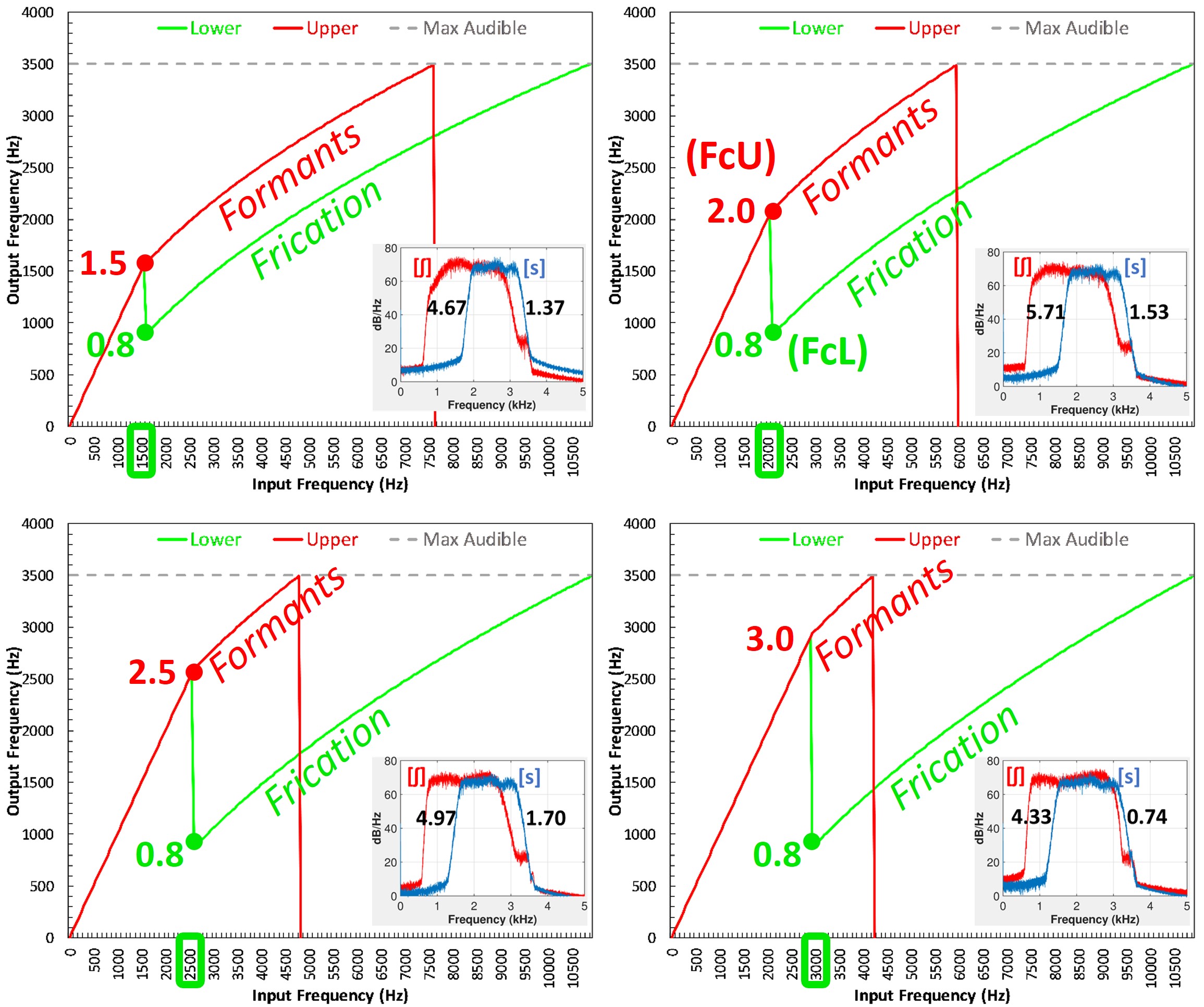

Using the MAOF and the Frequency-Lowering Fitting Assistants

The information obtained from the probe microphone measures, namely, the maximum audible output frequency, can be used in conjunction with my frequency-lowering fitting assistants, which will be the focus of the latter parts of this article. I have developed these tools for each major hearing aid manufacturer to show you how frequency is allocated with their different frequency-lowering settings. So, even if you do not buy into the goals I establish for each manufacturer, demystifying what is going on with the individual technology can effectively reduce the clinical barriers I discussed earlier.

The purpose of the frequency-lowering fitting assistants is not necessarily to determine optimal settings but rather to empower the clinician. They can do this by providing information about how sounds are changed with the different settings. Then, they can help you reduce all the available settings into a reasonable set based on first principles. These are our guiding principles for making different parameter selections. For example, the primary first principle is to maintain the audible bandwidth available before frequency lowering was activated. The other first principles will vary depending on the technology reviewed in Part III.

Recall the research and clinical barriers discussed in Part I, which manifest as a lack of control and standardized methodology. If nothing else, the fitting assistants can set a benchmark to promote uniformity across clinicians, protocols, and test sites. The idea is that, as a field, we can establish some agreed-upon way of fitting this technology, and once we do this, we can start to examine whether or not there is any other, maybe different, set of principles that we should be using. But we have to start somewhere.

What Acoustic Feature(s) Should We Optimize for Best Outcomes?

With what has been said so far, we must stop and question what should be optimized when looking at the output from probe microphone measures. At some point, we need to question what is meant by “optimization.” These questions have important implications for algorithm design. They also have important implications for selecting a particular method of frequency lowering and adjusting its parameters for individual hearing aid users.

While there is no firm conclusion, conventional wisdom adheres to an untested assumption that the spectral features of the high-frequency cues need to be preserved or replicated in the lowered signal. This has led to two common recommendations for optimizing fittings. The first one is in terms of the input bandwidth. That is, how much of the input signal can we actually lower? The problem with that is that more is not always better. For example, suppose you have two frequency-lowering settings, one of which can lower input frequencies up to 9000 Hz and the other up to only 7000 Hz. Under this premise, the setting that puts sounds up to 9000 Hz into the region of audibility would be more optimal than the one that puts sounds only up to 7000 Hz. However, as I highlighted in Part I, too much frequency lowering can harm speech intelligibility. So, while one setting might make more information from the input audible, the usability of that information might not be as good. The second recommendation that falls out of the notion that optimization should occur in the frequency domain involves the “s” and “sh” sounds because they constitute the biggest source of confusion with frequency lowering. The idea is to keep these key sound contrasts as separate as possible in the output after lowering. An example of this will be illustrated at the end of Part II.

Other, Less Precise Methods using Probe Microphone Measures

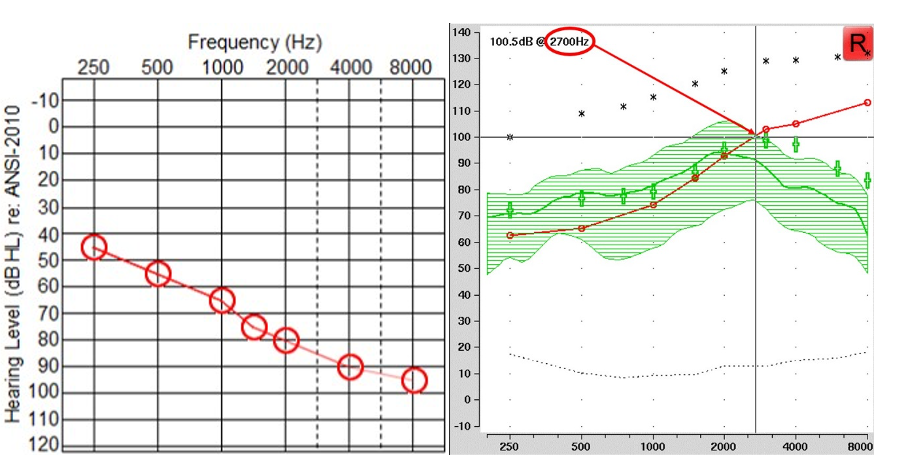

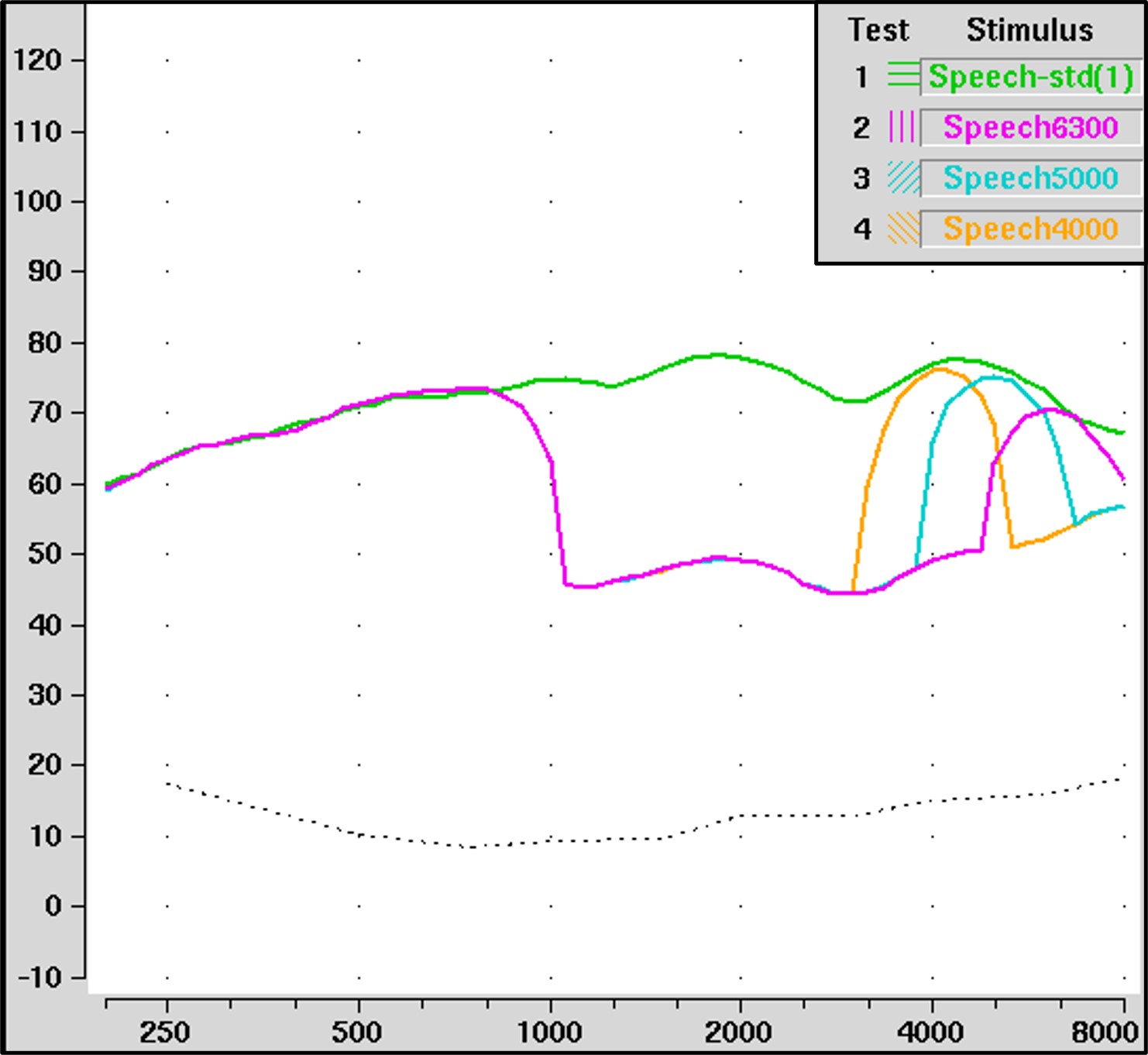

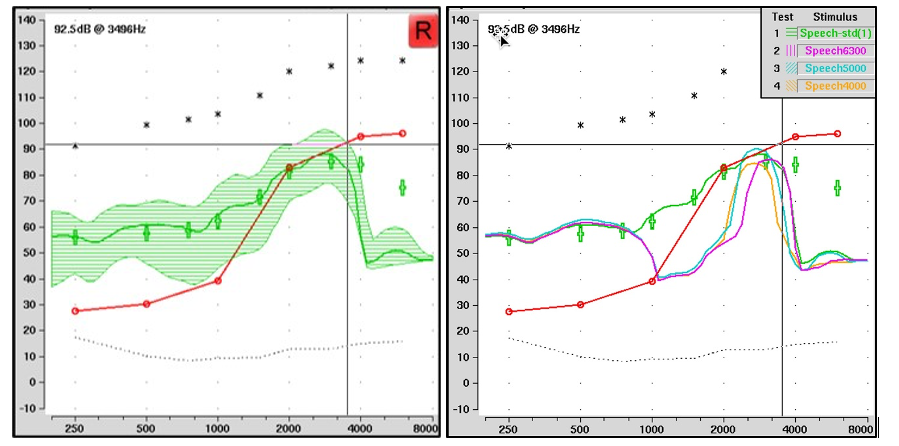

Figure 11. The input spectrum for the standard speech stimulus in the Audioscan Verifit is shown in green. The special speech signals with high-frequency energy centered at 6300, 5000, and 4000 Hz are shown in magenta, blue, and yellow, respectively. Not shown is the special speech signal centered at 3150 Hz.

A few years after the first modern hearing aids with frequency lowering were introduced, Audioscan created special speech signals to help clinicians visualize frequency lowering in action. These are available from the selection menu containing the rest of the input signals. Essentially, they reduced most of the energy in the standard speech signal above 1000 Hz except for a 1/3-octave band centered at 3150, 4000, 5000, or 6300 Hz. As I discussed earlier, you do not know the input-to-output frequency mapping since you can only see the output. The purpose of these signals is to constrain the input to make it easy to visualize where it goes in the output.

Figure 12. After frequency lowering, the left panel depicts the aided speechmap for the standard speech signal, without a clear indication of the input. The right panel displays the output of the special speech signals: the magenta line represents the 6300 Hz input remapped to 3200 Hz; the blue line shows the 5000 Hz input shifted to around 2500 Hz; and the yellow line indicates the 4000 Hz signal shifted to around 2000 Hz. The visibility of these lines relative to the threshold line (red) offers insights into the audibility of specific input frequencies, with some important caveats.

The left panel of Figure 12 shows the aided speechmap for the standard speech signal with frequency lowering activated. It is not apparent which parts have been lowered and from whence they originated just by looking at it. The right panel shows the output for each of the three special speech signals shown in Figure 11. The magenta line is for the speech signal that originated at 6300 Hz. Notice that it shows up around 3200 Hz with frequency lowering. Similarly, the blue line for the 5000-Hz special speech signal shows up in the output at around 2500 Hz, and the yellow line for the 4000-Hz signal shows up at around 2000 Hz. One idea is to use these signals to determine how much audibility you have from the original input signal. For example, for the 6300-Hz signal, you can see that it is below audibility, while the 5000-Hz signal is just barely audible because it is slightly above the threshold line. Interestingly, notice that the 4000-Hz signal does not appear to be audible. I explain why this might be in the following paragraphs.

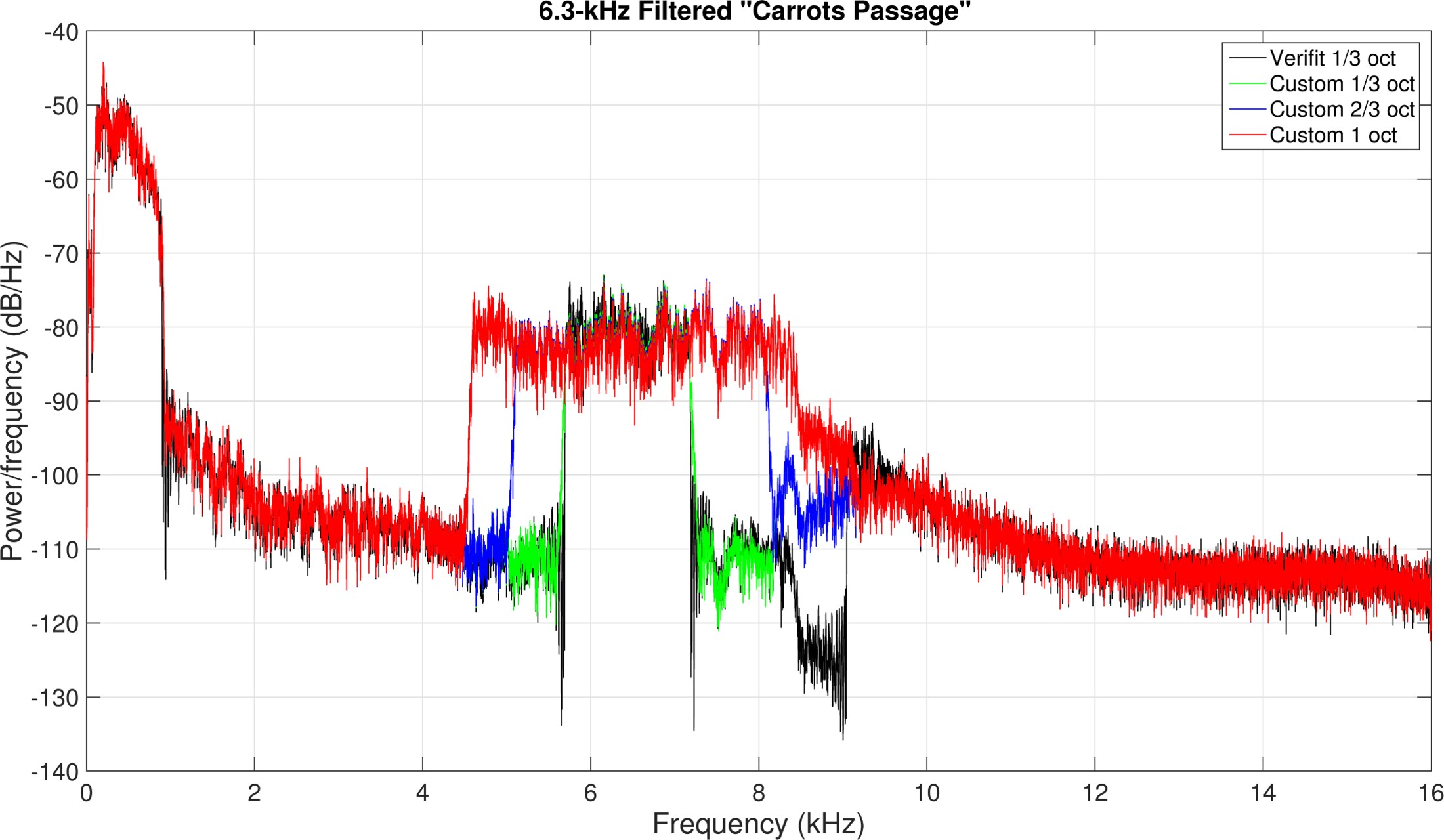

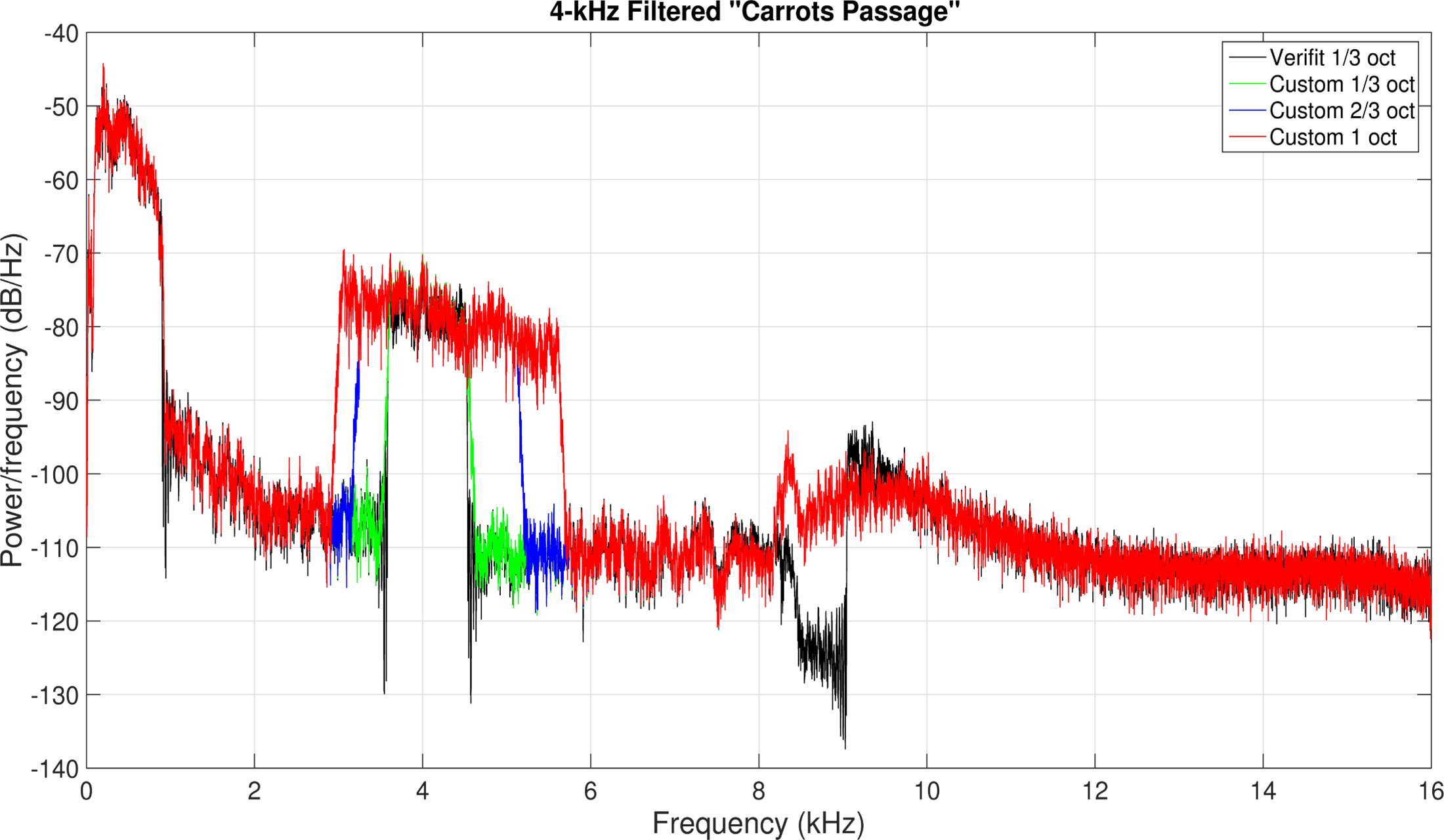

While Figure 12 demonstrates the utility of the special speech in providing some gross information about where you lose audibility for the lowered input signal, it is an incomplete picture of the entire frequency remapping function. Furthermore, there are other problems I have identified with them. First, recall that they are only 1/3-octave wide at the input to the hearing aid. Also, note that the analysis bands are only 1/3-octave wide. The problem is that frequency compression (discussed in Part III) will squeeze the input signal so that its output is narrower than 1/3 octave. Because actual running speech has high-frequency energy wider than 1/3 octave, the special speech signals may lead you to underestimate the actual output from the hearing aid when frequency compression is engaged.

Figure 13. The top panel displays spectra of signals centered at 6300 Hz. The bottom panel shows spectra of signals centered at 4000 Hz, created to highlight issues with using the special speech signals in hearing aids using frequency compression. The original special speech signals from the Verifit are depicted in black, while the green signals represent those recreated for method validation. The blue and red signals illustrate the effects of different filter widths, 2/3-octave, and 1-octave, respectively.

Figure 13 shows signals I created to demonstrate the problem faced when using the special speech signals on hearing aids with frequency compression. I used the standard carrot passage from the Verifit and filtered it with three different filters. The top panel shows the spectra of the signals centered at 6300 Hz, and the bottom panel shows the spectra of the signals centered at 4000 Hz. The original special speech signals from the Verifit are in black, and the ones I created to check my methods are in green. The 2/3-octave wide signals are in blue, and 1-octave wide signals are in red.

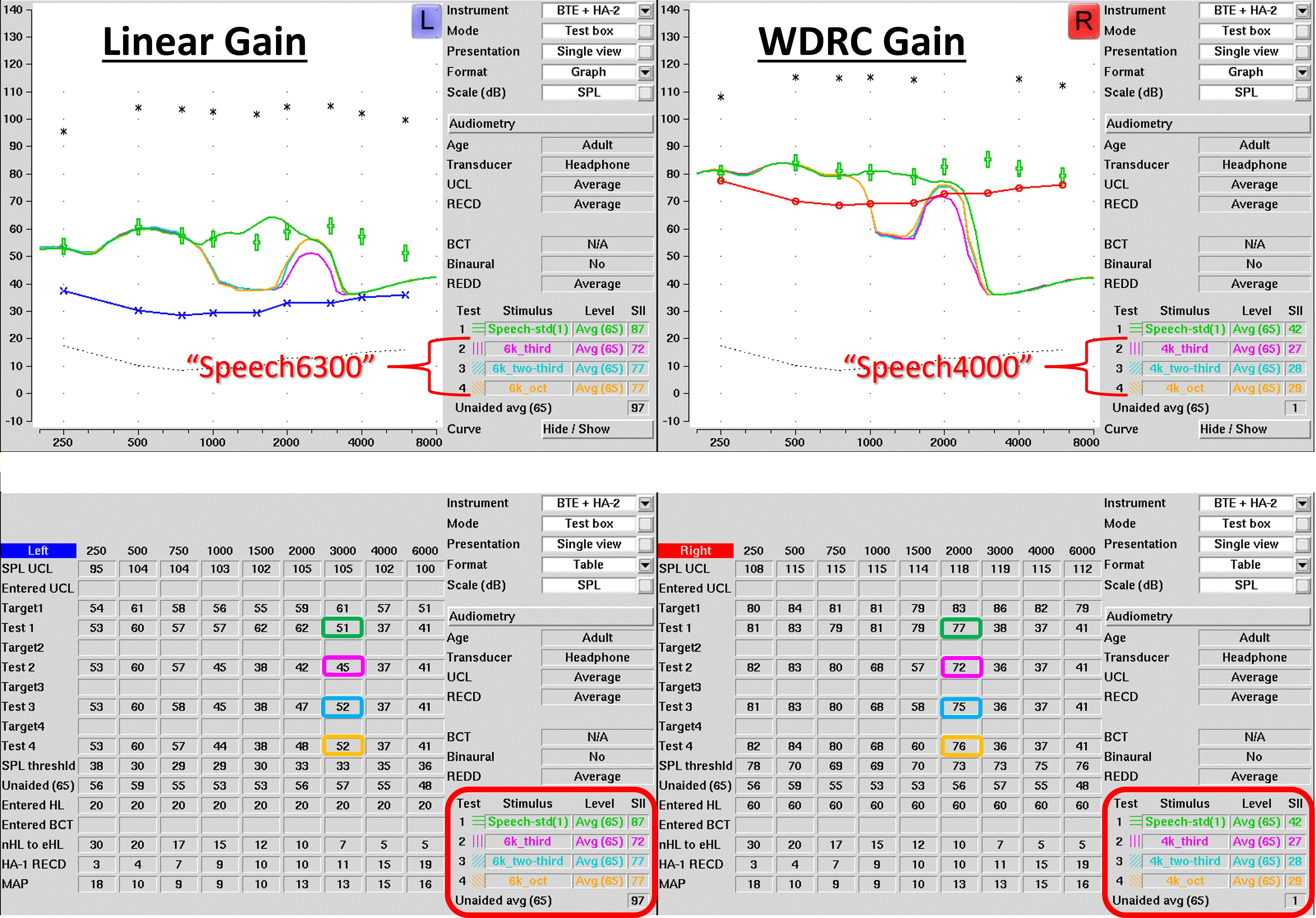

Figure 14. Speechmaps of signals (from Figure 13) processed by a hearing aid with frequency compression. The left example uses linear gain, displaying green (standard speech signal), magenta (1/3-octave wide signal at 6300 Hz), blue (2/3-octave wide), and gold (1-octave wide) lines, with all special signals appearing around 3000 Hz in output. Notably, the magenta line is lower than others, as quantified in the accompanying table. The right example demonstrates a hearing aid with WDRC gain, showing a smaller error for the magenta special speech signal because WDRC provides more gain for lower input levels.

Figure 14 shows the speechmaps for the signals shown in Figure 13 when processed with a hearing aid using frequency compression. The example on the left is for a hearing aid with linear gain. Just as before, the green line corresponds to the standard speech signal. The magenta line corresponds to the 1/3-octave wide speech signal centered at 6300 Hz. The 2/3-octave wide and 1-octave wide signals are shown in blue and gold, respectively. Notice that each special speech signal originating from 6300 Hz appears in the output around 3000 Hz. Also, notice that the magenta line, equivalent to the Verifit special speech signal, is lower than the others. The differences can be quantified using the numbers in the table. Notice for the standard speech signal (Test 1), the analysis band centered at 3000 Hz is 51 dB. Meanwhile, the output for the 1/3-octave wide input signal is 45 dB (Test 2), which is 7 dB less than the output for the wider signals, whose output levels are about the same as for the standard speech signal. Again, this happens because the output bandwidth of a signal 1/3-octave wide at the input will be less than 1/3-octave wide when compressed in frequency. On the other hand, the other two signals in Test 3 and Test 4 fill the full analysis band after frequency compression, so their output levels are higher. The example on the right is for a hearing aid with WDRC gain. For this example, there is still an error associated with the special speech signal in magenta, but it is smaller because lower input levels receive more gain.

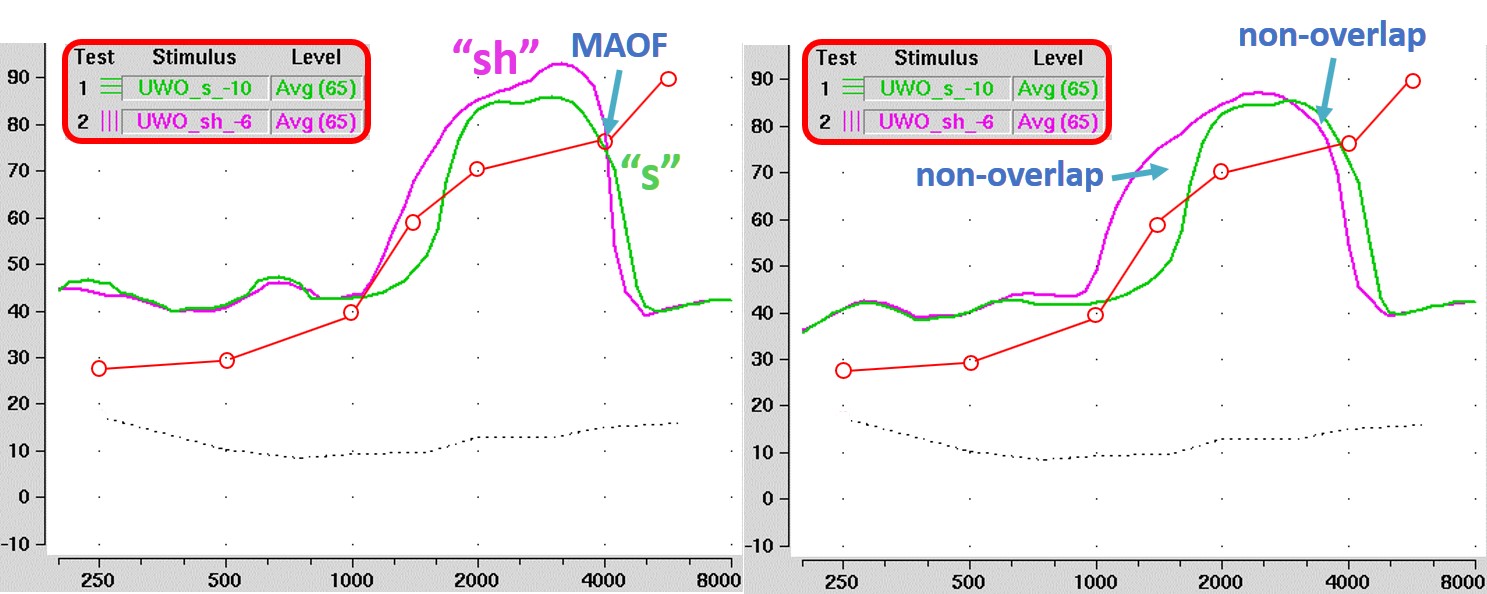

Figure 15. Illustration of the process for utilizing the calibrated “s” and “sh” sounds in the Verifit. Step 1 involves determining the Maximum Audible Output Frequency (MAOF) without frequency lowering. Step 2 (left) checks the audibility of these sounds by comparing their speechmaps (with “s” shown in green) against the threshold line, aiming for the “s” output to align approximately with the MAOF. Step 3 (right) involves selecting settings that minimize the overlap between the “s” and “sh” sounds, assuming that this will optimize the clarity and distinctiveness of these sounds with frequency lowering activated.

The second set of signals in the Verifit designed for frequency-lowering hearing aids falls out of the notion that optimization should occur in the frequency domain. In particular, these are calibrated “s” and “sh” sounds because, as indicated earlier, this is the biggest source of confusion with frequency lowering. The calibrated “sh” has energy up to 6000 Hz, and the “s” has up to 10,000 Hz. The goal is to keep these key speech contrasts as separate as possible after lowering.

Figure 15 demonstrates the procedure for using these special signals. After obtaining the MAOF with frequency lowering deactivated (step 1), step 2, as shown on the left, is to ensure the two sounds are audible by comparing their speechmaps to the threshold line. Ideally, the upper edge of the output for “s” (green line) will approximate the MAOF. The purpose is to ensure that all or most of the lowered sounds are audible. After you have found the settings that make “s” audible, step 3, as shown on the right, is to choose the setting that has the least amount of overlap between the “s” and “sh.” My only problem with this metric is that according to my research, the separation between these two sounds after lowering is often not big enough to be perceptible even to people with normal hearing. In other words, you must make the frequency differences between these sounds relatively large to notice them.

Part III: Overview of Frequency-Lowering Techniques

The purpose of Part III is to provide a broad overview of the different techniques by which manufacturers today achieve frequency lowering. Subsequent parts will focus on each manufacturer in greater detail.

Frequency Lowering Techniques by Manufacturer

Frequency lowering is ubiquitous; each major manufacturer offers some version of frequency lowering. However, it is also the most misunderstood feature of hearing aids today. The remainder of this article aims to clarify some of this misunderstanding.

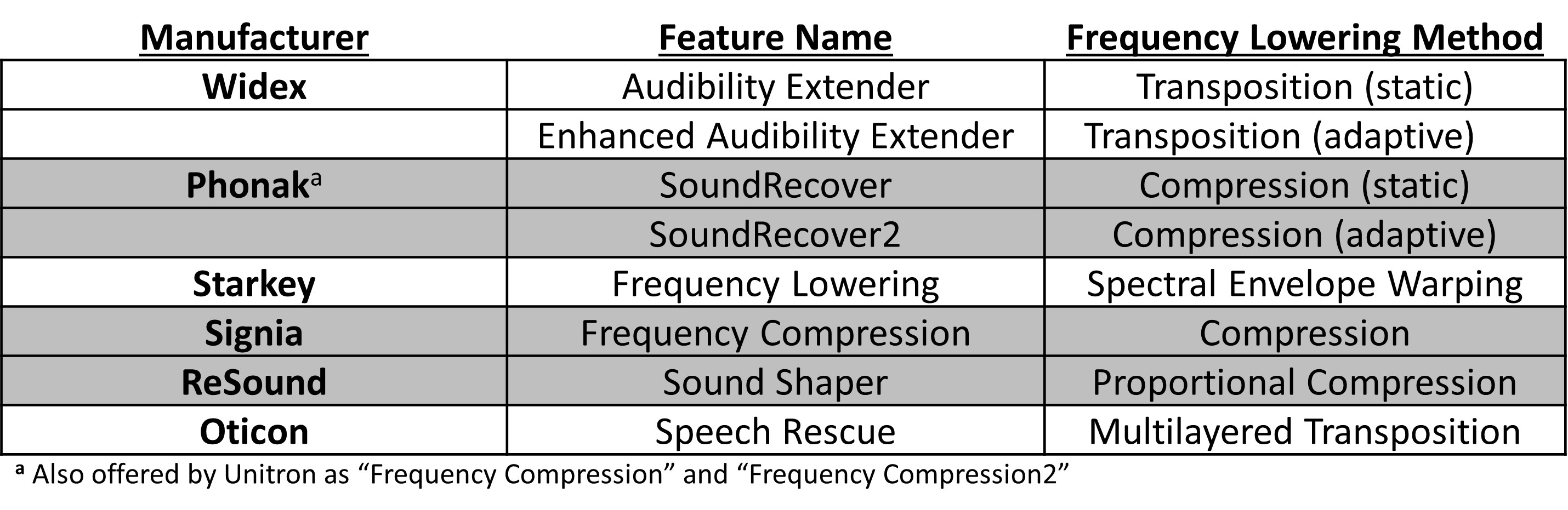

Table I. A categorization of the major hearing aid manufacturers by their frequency-lowering feature, listing them in chronological order of release (first column). The second column provides the specific names of these features. The table also distinguishes the frequency-lowering techniques used — color-coded as transposition (white rows) and compression methods (gray rows), with the specific method listed in the third column.

Table I provides a comprehensive overview of the frequency-lowering techniques utilized in modern hearing aids. The first column lists the names of hearing aid manufacturers in the order of the historical release of their frequency-lowering features, beginning with Widex and concluding with Oticon. This chronological listing provides a historical perspective on the evolution and adoption of frequency-lowering technology. The second column lists the specific names assigned by each manufacturer to their frequency-lowering technology. It also notes subsidiaries and associated brands that offer similar technologies using different terminology. The final column describes the specific technical approach employed in the frequency-lowering process. Further details on these techniques are provided in the subsequent parts of the article. The rows of the table are also color-coded: white rows denote transposition techniques, and gray rows indicate compression techniques.

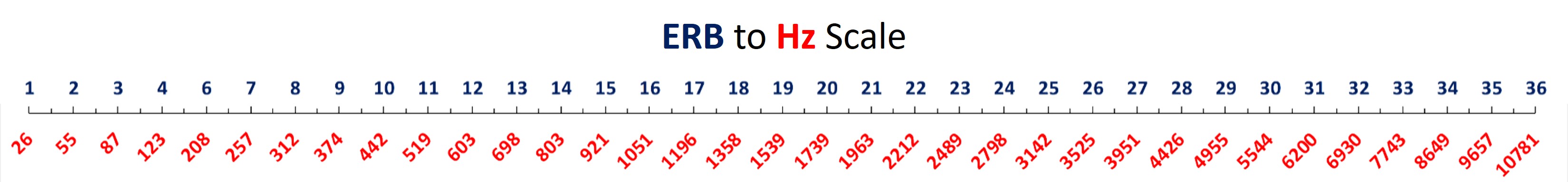

Terminology Review

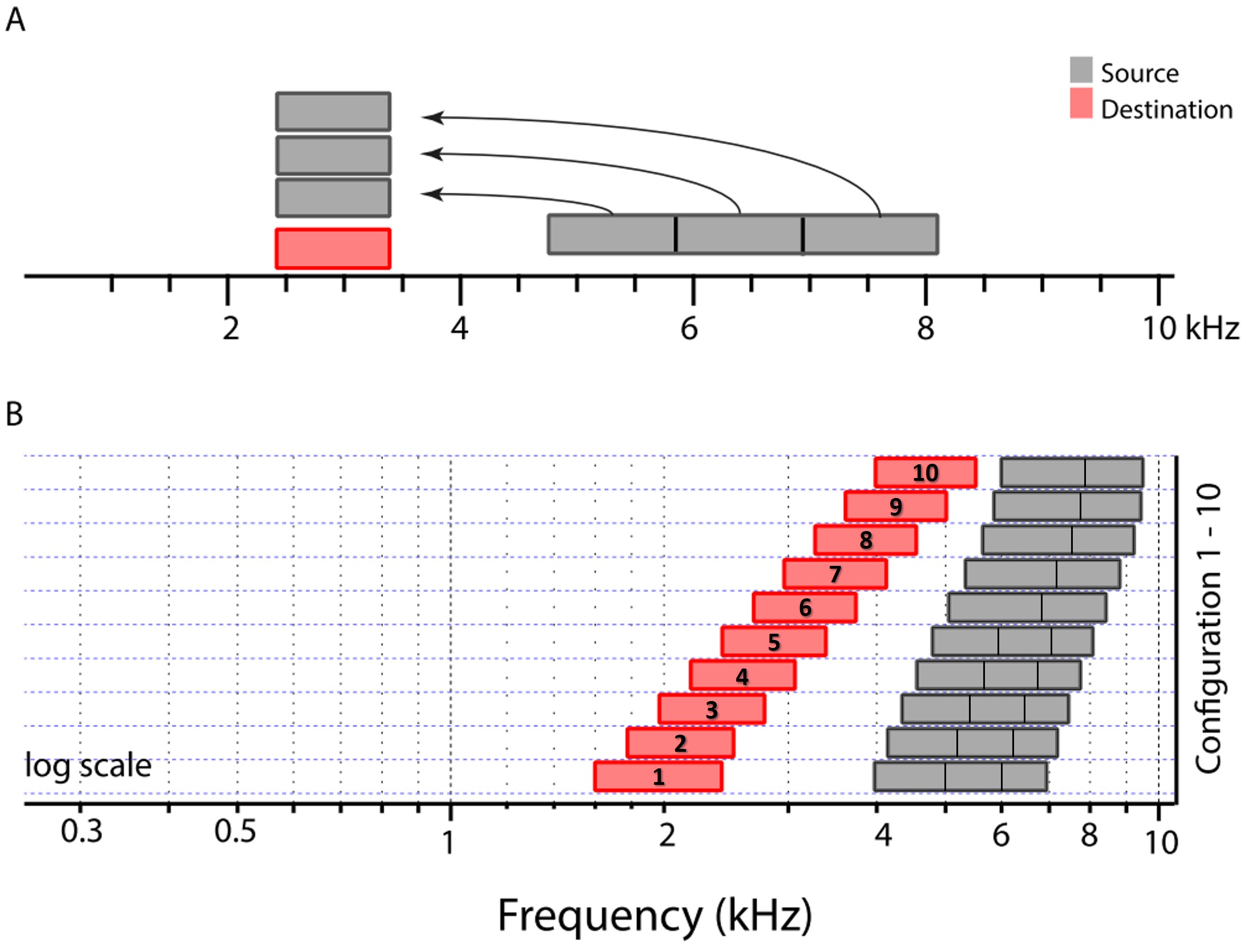

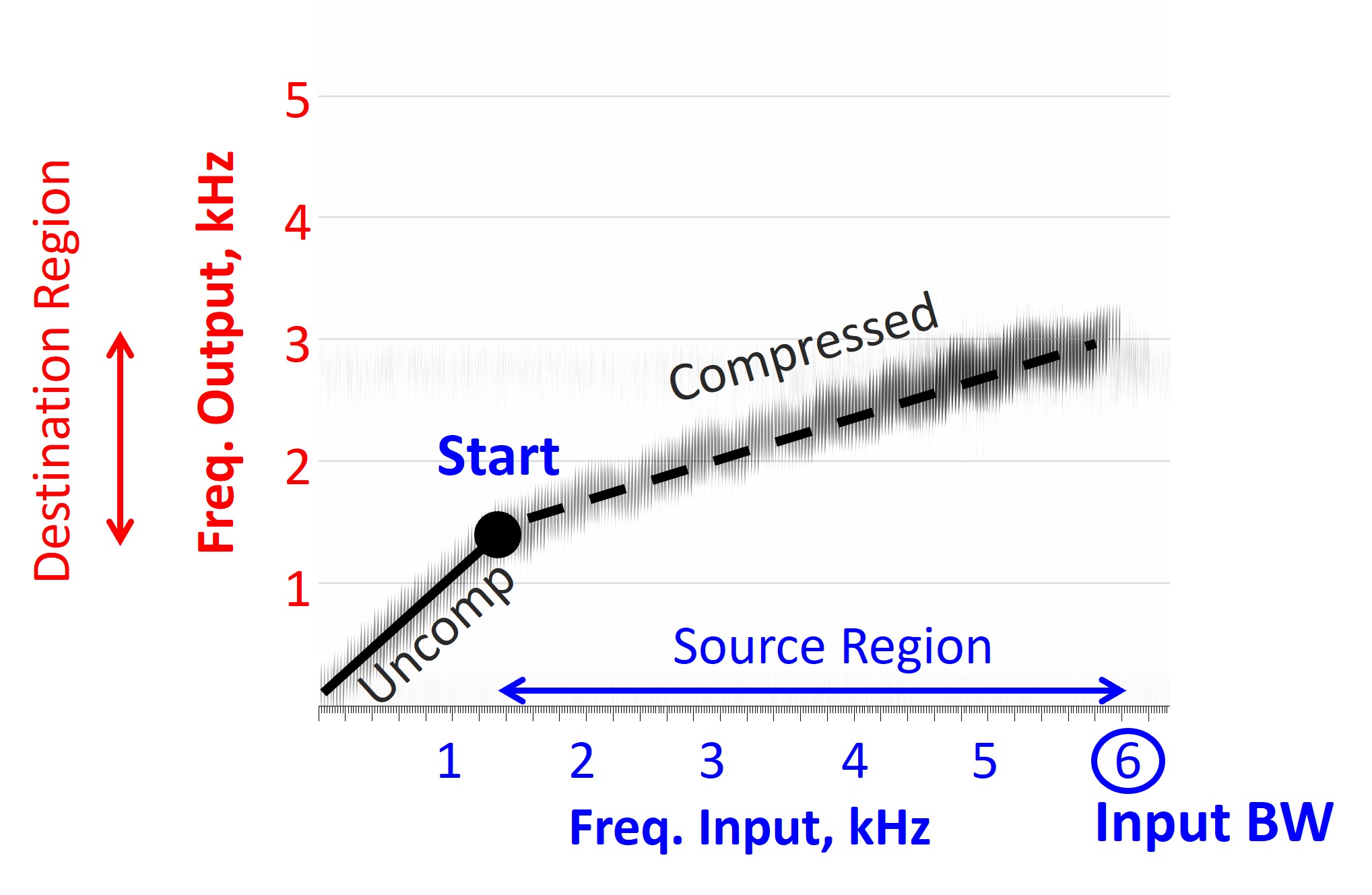

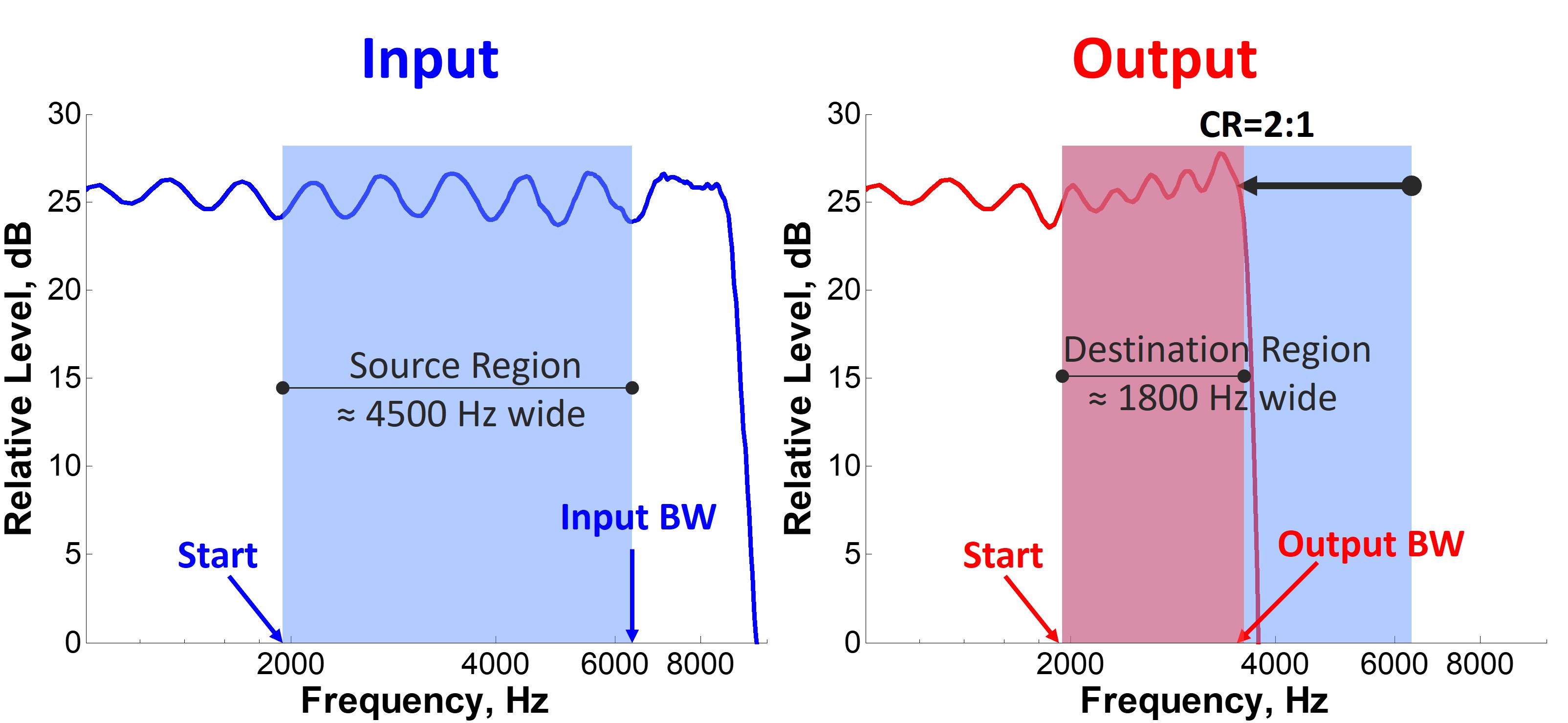

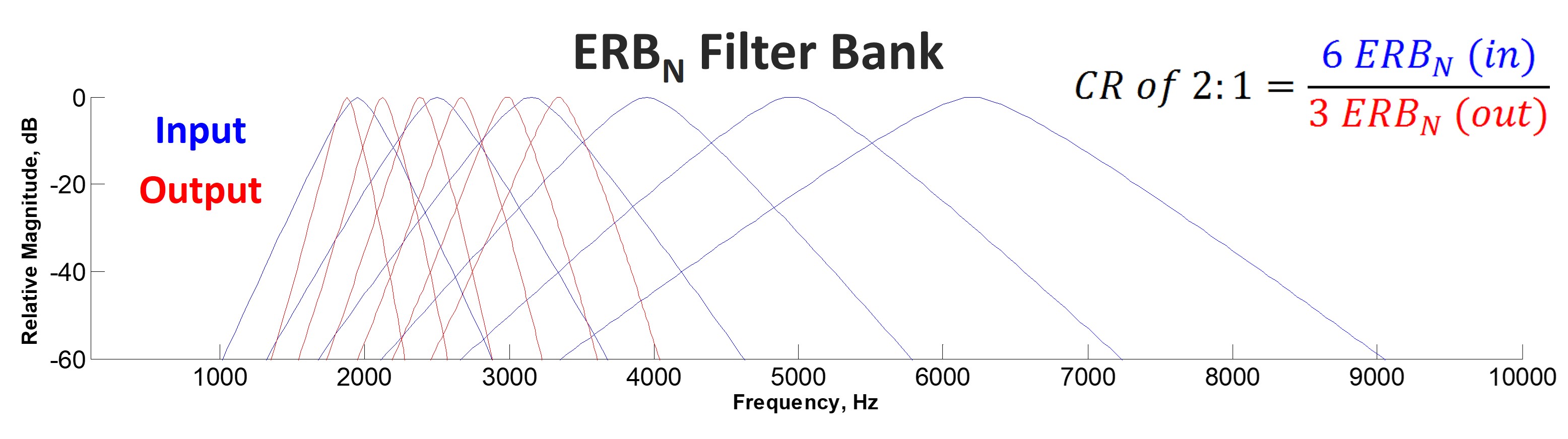

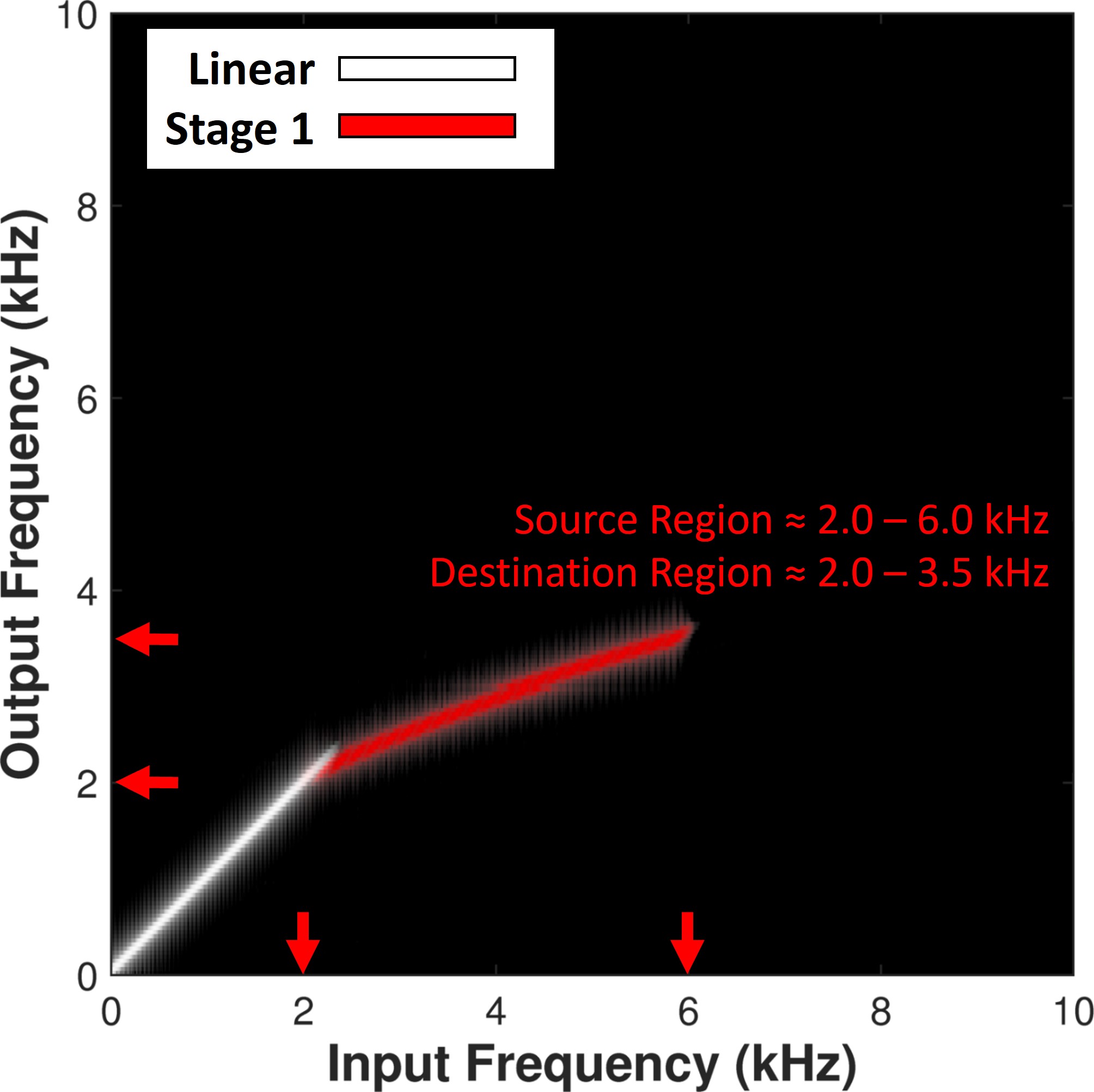

It will be helpful to review some terminology before getting into specifics. The source region refers to the frequency range from which information is being moved, that is, the frequencies that are being moved lower. Depending upon the manufacturer, the lowest frequency in this source region is the “start,” “cutoff,” or “fmin” for the minimum frequency. The target or destination region is the frequency range where information from the source region is moved to. Depending on your source of information, there is some confusion between frequency compression and frequency transposition, which are often used interchangeably. However, the terms refer to particular techniques that should not be confused.

Frequency Compression

Imagine frequency compression as an uncoiled spring split into two sections at a “start” frequency. The first half is uncompressed, while the second half is squeezed. If the length of the spring represents frequency, frequency compression progressively moves the higher frequencies down so that they end up being squeezed and the spacing between them shrinks. The target region (the squeezed high-frequency section) is contained within the source region (the uncoiled high-frequency section), with the target region being pushed toward the start frequency; thus, the output bandwidth is reduced. Part II emphasized the importance of maintaining the audible output bandwidth after frequency lowering so that it is at least as large as before frequency lowering.

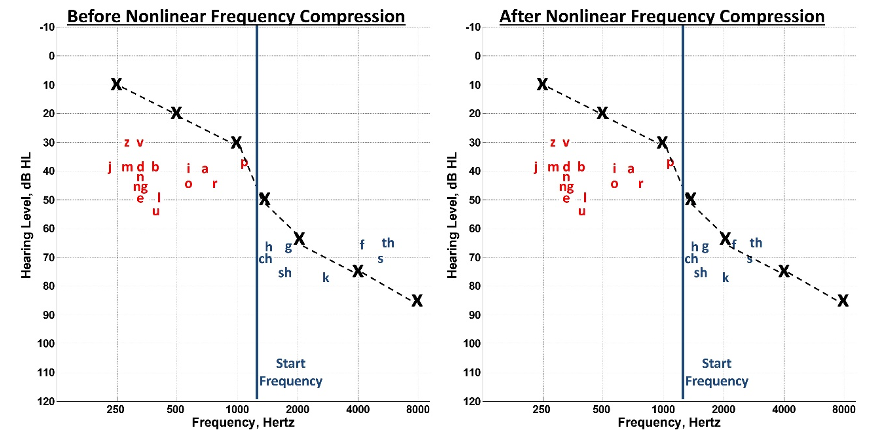

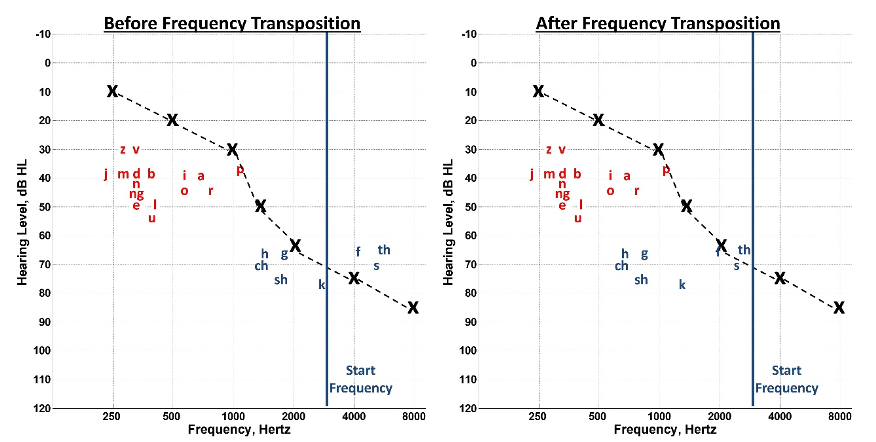

Figure 16. Comparison of speech information before (left) and after (right) nonlinear frequency compression. The frequency range is divided at a start frequency (blue line), with sounds below this point (in red) remaining unchanged, while sounds above it (in blue) are shifted downwards to enhance audibility through the hearing aid.

Part I reviewed how low-intensity, high-frequency sounds, namely the fricatives, are still inaudible despite our best attempts to amplify speech (see Figure 3). Figure 16 represents the speech information shown in Figure 3 before (left) and after (right) nonlinear frequency compression. The basic idea is to divide the frequency range into two parts at a given start frequency, as represented by the blue line. The sounds below the start frequency, shown in red, are unaltered. The sounds above the start frequency, shown in blue, are moved down into a region where audibility with the hearing aid can be achieved.

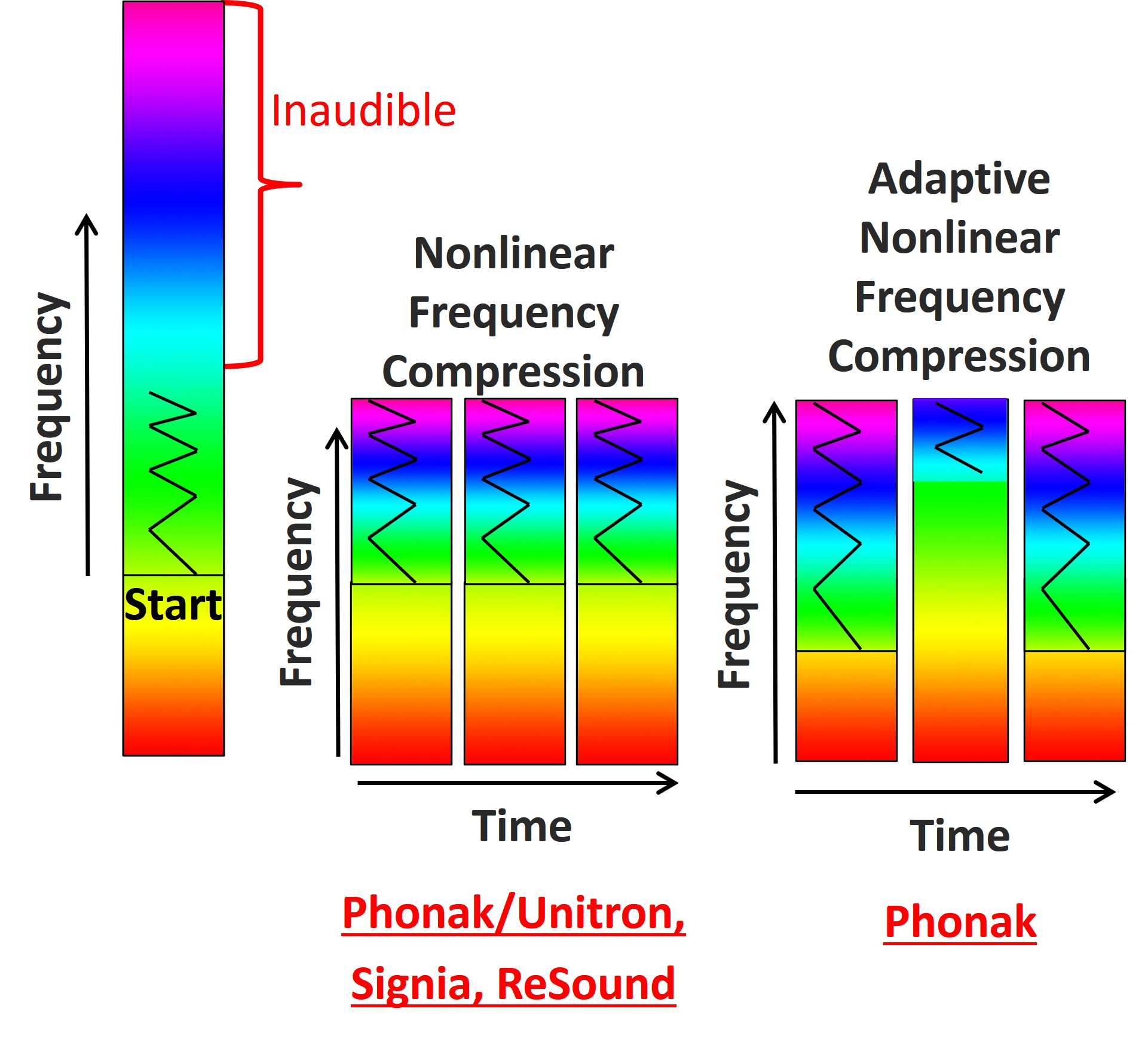

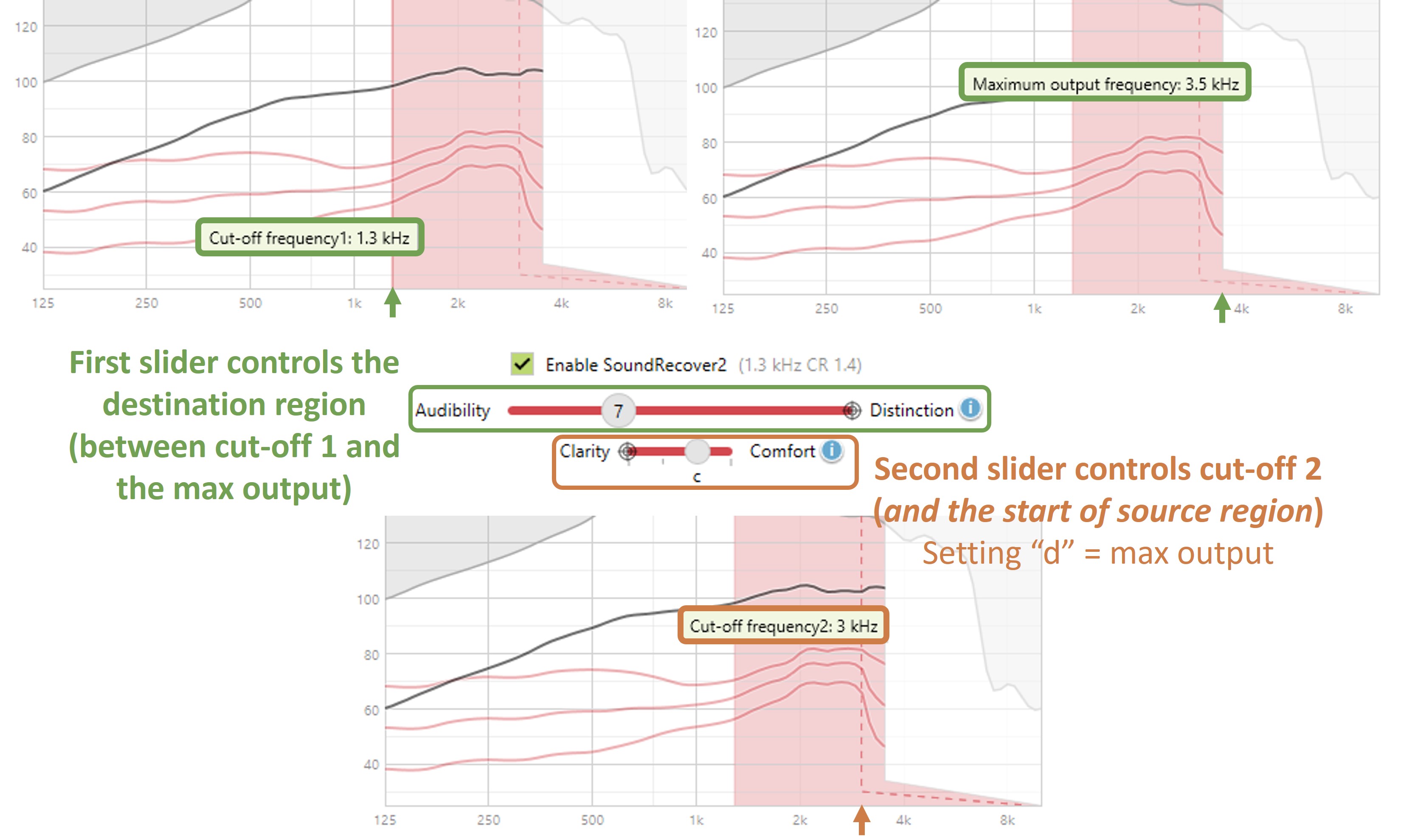

Figure 17. Schematic of the nonlinear frequency compression approaches in hearing aids. The color bar on the left indicates incoming frequencies, with low frequencies in red at the bottom and high frequencies in magenta at the top. The figure illustrates the division of the spectrum at a start frequency, where frequencies above are lowered. The middle of the figure shows the basic technique used by Phonak, Unitron, Signia, and ReSound, highlighting their nonlinear frequency compression method. The right side depicts Phonak’s latest approach, adaptive nonlinear frequency compression, which employs two cutoff frequencies to adaptively alter high-frequency sounds, like fricatives, and preserve low-frequency sounds, like vowels and harmonics, enhancing audibility without significantly altering the sound quality.

Figure 17 is a schematic of the different frequency compression approaches. The color bar on the left represents the frequencies going into the hearing aid. The low frequencies are toward the bottom in red, and the high frequencies are toward the top in magenta. Assume that the upper half of the frequency range is inaudible despite our best attempts to achieve audibility with the hearing aid. One of the characteristics of nonlinear frequency compression is that it divides the incoming spectrum into two parts as designated by the start frequency. Everything above the start frequency is then subjected to frequency lowering. The source region is then squeezed down toward the start frequency. Phonak, Unitron, Signia, and ReSound use the basic technique shown in the middle of the figure. As I will discuss later, they all have some form of nonlinear frequency compression, albeit their approaches have fundamental differences. Furthermore, the dynamics of this technique are similar to the Oticon technique discussed below because it always uses the same frequency reassignment across time; it does not change and is always activated.
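To make the two-part division concrete, here is a minimal Python sketch of a static frequency-compression map. The formula above the start frequency (compression on a log-frequency scale by a fixed ratio) is an assumption chosen for illustration; as noted, the manufacturers' approaches differ in fundamental ways, and this sketch does not claim to reproduce any of them. The function names, the `compression_ratio` parameter, and the 8000-Hz input limit are hypothetical.

```python
def nfc_output_frequency(f_in_hz, start_hz, compression_ratio):
    """Map an input frequency to an output frequency.

    Below the start frequency the signal is unaltered. Above it, the
    distance from the start frequency (in octaves) is divided by the
    compression ratio. ASSUMPTION: log-frequency compression; real
    products may use different functions.
    """
    if f_in_hz <= start_hz:
        return float(f_in_hz)
    return start_hz * (f_in_hz / start_hz) ** (1.0 / compression_ratio)

def preserves_audible_bandwidth(max_input_hz, start_hz, compression_ratio, maof_hz):
    """Check the first principle from Part II: the highest lowered frequency
    should still reach the maximum audible output frequency (MAOF), so the
    audible bandwidth is not smaller than before frequency lowering."""
    top_of_output = nfc_output_frequency(max_input_hz, start_hz, compression_ratio)
    return top_of_output >= maof_hz

# Hypothetical settings, evaluated against the 4176-Hz MAOF from Figure 9.
too_strong = preserves_audible_bandwidth(8000, 2000, 2.0, 4176)
milder = preserves_audible_bandwidth(8000, 2000, 1.5, 4176)
```

Under these assumed settings, the stronger ratio places the top of the output at 4000 Hz, below the MAOF, so it would shrink the audible bandwidth. The milder ratio keeps the top of the output above the MAOF.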

The most recent technique, by Phonak, is called adaptive nonlinear frequency compression (right). It is a form of nonlinear frequency compression like the other techniques, but it is adaptive because it has two cutoff frequencies instead of one start frequency. It has a very low cutoff frequency for the sounds dominated by high-frequency energy, which allows them to be put lower in the destination region than before. Because these are primarily high-frequency fricatives, there is little concern about altering formants. Then, when the incoming sound is dominated by low-frequency energy, it engages frequency compression with the higher cutoff frequency. This way, it can more or less preserve the existing low-frequency transitions and harmonics associated with vowels, etc.

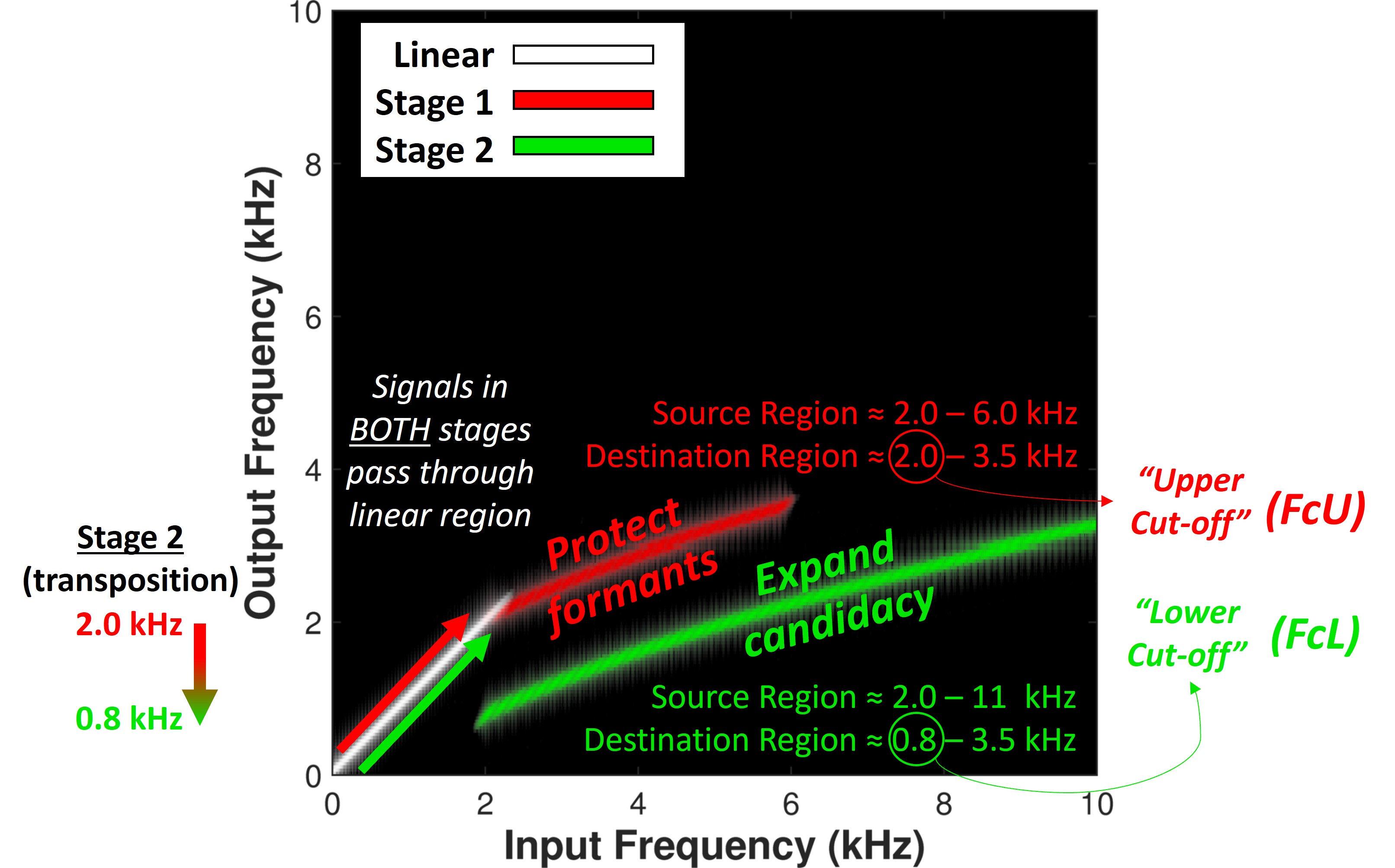

Frequency Transposition

With frequency transposition, frequency lowering is like a copy-and-paste technique, whereby a portion of the high frequencies from the source region is copied in some manner or form and moved down into the target or the destination region. Depending upon the transposition-like technique, you may have the option of maintaining the source bandwidth. This way, if you select an overly aggressive amount of frequency lowering, you would not accidentally reduce the bandwidth that the hearing aid user had originally. Finally, depending on the frequency-transposition technique, the start frequency may be moved to a lower frequency.

Figure 18. Illustration of frequency transposition in the same manner as Figure 16. This approach involves a ‘copy-and-paste’ technique where high-frequency components (in blue) are replicated and moved to a lower frequency range below the start frequency.

Figure 18 represents the same problem as depicted in Figures 3 and 16 but uses frequency transposition as a solution. In contrast to the start frequency for compression techniques, one manufacturer defines the start frequency for transposition as the point below which all sounds are moved. It can be thought of as the start of inaudibility or the regions on the cochlea that are no longer responsive to sound, that is, cochlear dead regions. Unlike frequency compression, the sounds are picked up and moved lower in frequency with transposition. There is not necessarily a compression of the source frequencies; instead, only a band of energy with the highest intensity in the source region is usually moved down into a lower frequency. Also, transposition-like techniques can move sounds to a slightly lower frequency range because the high-frequency sounds are placed in the same regions as existing low-frequency sounds. As discussed later, frequency compression techniques are limited by low-frequency harmonics, usually below 1500 Hz or so. If the harmonics of voiced speech are moved below this, hearing aid users may complain about unpleasant sound quality. In contrast, with frequency transposition, the low-frequency sounds in the original signal are unaltered. However, one concern with transposition is masking the existing low-frequency sounds with the newly lowered high-frequency sounds.

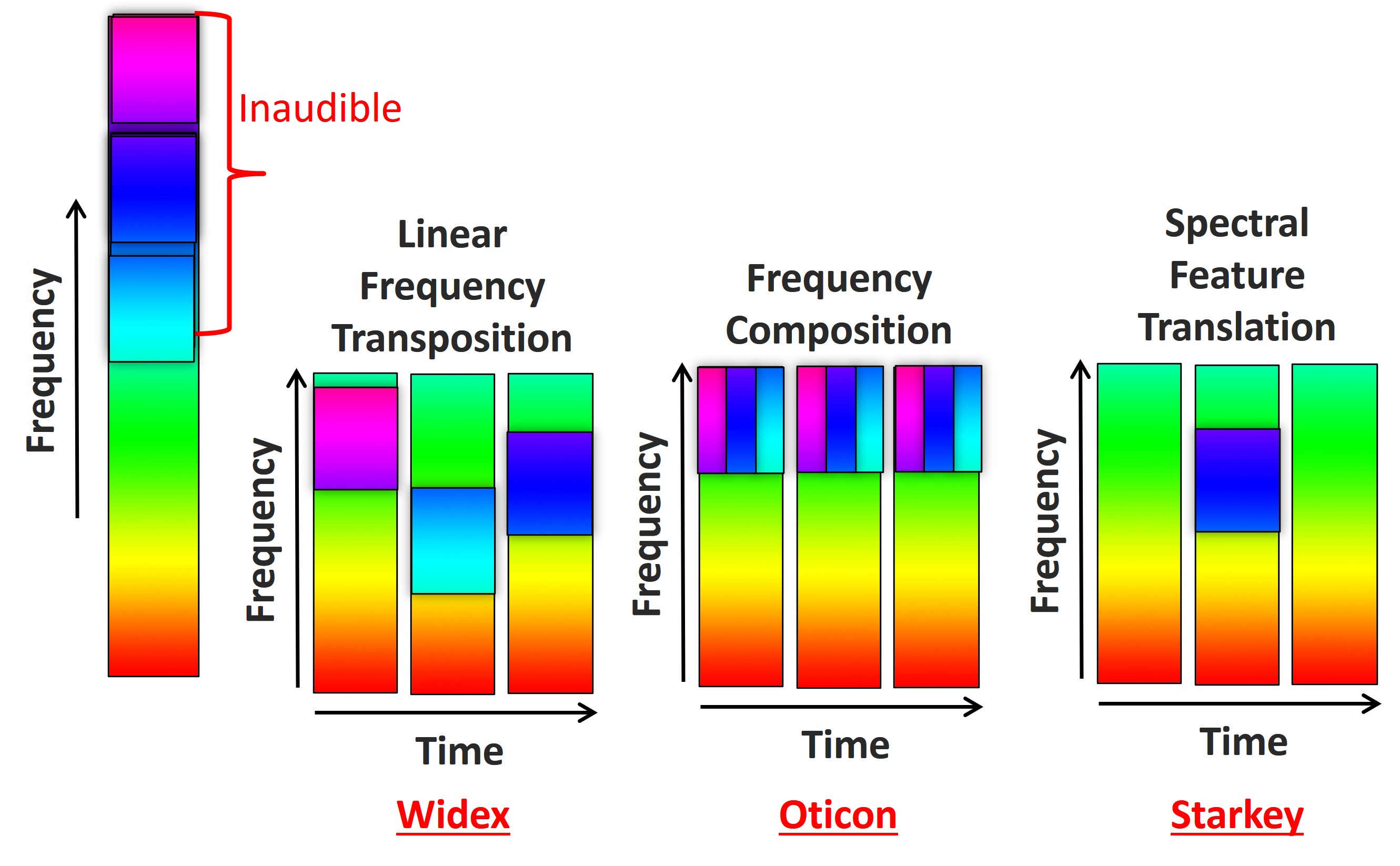

Figure 19. Schematic of the frequency transposition approaches in hearing aids in the same manner as in Figure 17. Widex employs linear frequency transposition, copying and pasting the highest intensity band from the source region (left color bar) to lower frequencies without compression, resulting in a linear shift in Hertz. Oticon’s method divides the source into bands (three in this example), merging them into a single output frequency space, thus overlapping rather than compressing them. Starkey’s approach, once known as spectral feature translation, selectively translates significant speech energy from high to low frequencies, activating only when detecting specific speech features, unlike the continuous transposition of the Widex and Oticon methods.

Figure 19 illustrates the differences between the frequency transposition methods used in hearing aids today. The transposition technique by Widex searches the input (color bar on the left) across time for the highest intensity band in the source region, copies a frequency range around that band, and then pastes it to lower frequencies without compressing it. This technique is called linear frequency transposition because sounds are shifted down by a linear factor in terms of Hertz. A band from the source is always lowered regardless of how soft it is. In addition, as shown, it picks up different parts of the source spectrum over time, depending on the input.

The transposition technique by Oticon, I call frequency composition. Oticon does not favor this terminology, but I like it because it nicely summarizes what the technique accomplishes. First, the technique divides the source region into two or three bands (three bands are shown in the figure). Then, unlike Widex’s linear frequency transposition, it combines all these bands and puts them in the same frequency space in the output. In this sense, it is multilayered frequency transposition. I call it composition because it is also like compression in that it takes a wide range of frequencies in the input and puts them in a smaller range in the output; however, instead of individually squeezing these frequency bands, it overlaps them.

The transposition technique by Starkey was once known as spectral feature translation. It is like the Widex technique because it finds when and where significant speech energy exists in the high frequencies. Then, when and where it detects it, it moves it to a lower frequency using the existing energy already in the low-frequency bands. But, unlike the other two techniques, it does not always lower something; instead, it will only lower frequency when it detects a spectral feature of speech.

Comparison of Techniques

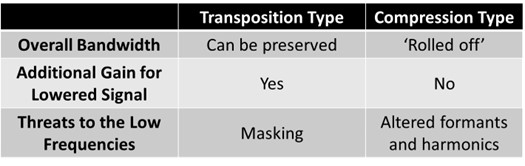

Table II. Summary of key differences between transposition and compression techniques in frequency lowering.

Table II summarizes the broad differences between the transposition and the compression techniques used for frequency lowering. Concerning the overall bandwidth, all transposition techniques allow the audiologist to select an option to keep the original, unlowered signal so that the bandwidth after frequency lowering is activated is the same as before it was activated. However, if you put the destination region close to the maximum audible output frequency, you can turn this option off since users cannot hear it. In contrast, compression techniques lower the original high-frequency sounds, meaning no high-frequency sound is above the lowered signal. Therefore, with these techniques, you must be careful not to have too much compression, which could reduce the audible bandwidth you had to begin with.

All the transposition techniques have a separate handle to adjust the gain of the lowered signal. I will call this the mixing ratio because transposition mixes the frequency-lowered signal with the original source signal. It is a delicate balancing act between making the lowered sounds perceptually salient so that the user can hear them versus having them so intense that they pop out of the perceptual stream and become segregated from the rest of the speech signal. This is an unnecessary option with the compression techniques because the signals are not mixed.

As noted earlier, with transposition, one threat to the low frequencies is that you risk moving some high-frequency masking noise into the region where there is existing low-frequency speech information. One of the concerns with compression is the risk of altering formant frequencies and their transitions. In addition, depending on how low you start the frequency compression, you risk altering the harmonics, resulting in unpleasant sound quality for the hearing aid user.

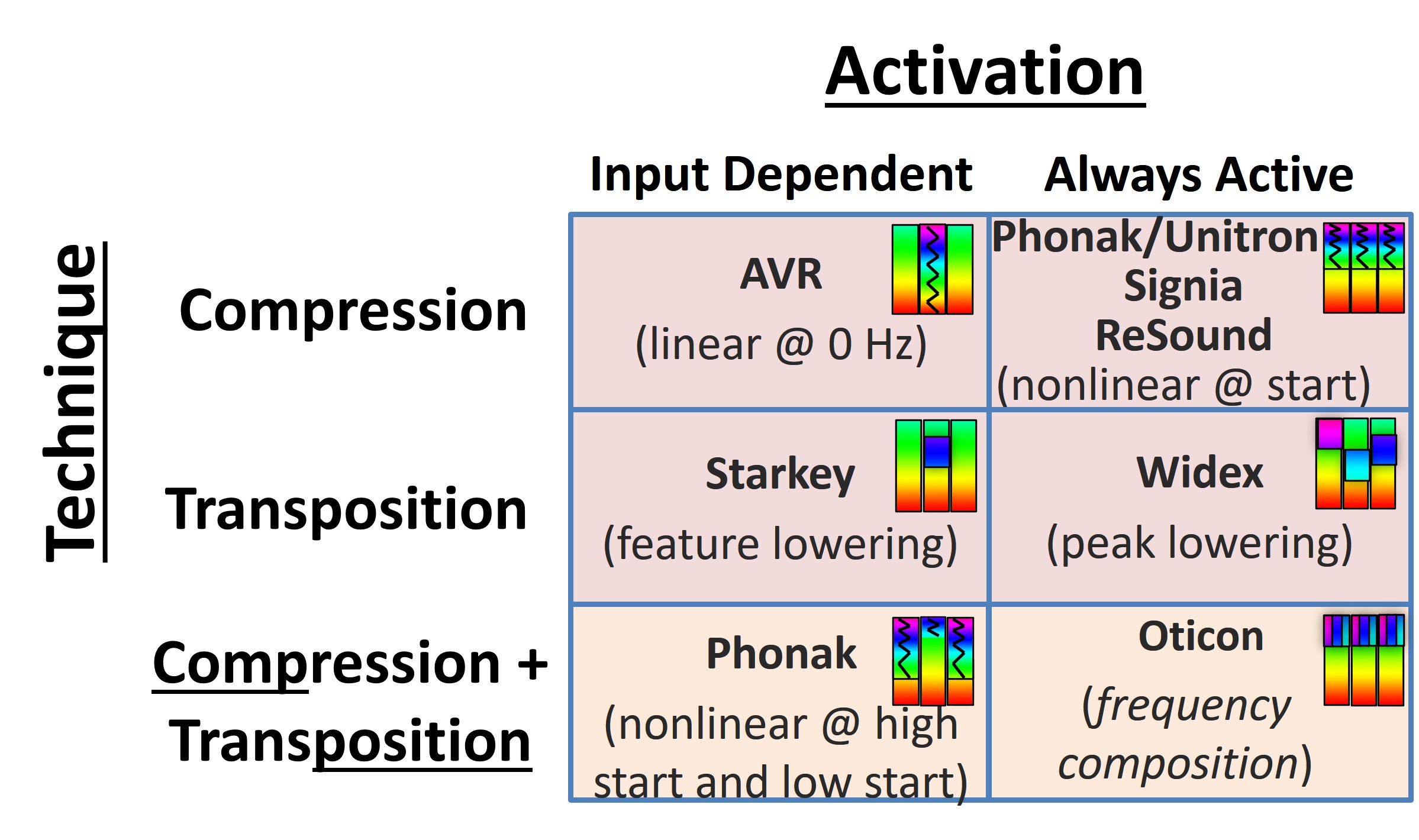

Figure 20. Summarizes all the frequency-lowering methods used by individual manufacturers (see text for details).

Figure 20 summarizes all the frequency-lowering methods used by individual manufacturers. One way of classifying the different strategies is by whether frequency lowering depends on the input (first column) or is always active (second column). The rows correspond to the specific techniques I just discussed: compression, transposition, and hybrid techniques between the two.

Starting with the upper-left is AVR Sonovation, which is no longer in business. However, I have included them for completeness. They made the first commercial digital hearing aid with frequency lowering. Whenever it detected significant high-frequency energy, it would go into full-on compression.

Then, there is the nonlinear frequency compression from Phonak, Unitron, Signia, and ReSound. As I already discussed, frequency lowering is always active with this strategy. It does not depend on the input, so the same frequency reassignment is maintained across time. The same goes for Widex; it is always active, continually lowering something — speech, noise, etc. Starkey, however, as indicated, lowers sound only when it detects there is a likelihood of a speech feature in the high-frequency source region.

Next, with Oticon, I have discussed why I put it in the category of compression and transposition. I use the term “composition,” which I borrowed from one of their subsidiaries. It, too, is always active and is continually lowering something. Furthermore, its behavior does not depend upon the input. It is like a hybrid compression type because it takes three source bands corresponding to different frequency ranges and puts them into a smaller range in the output. This contrasts with Widex and Starkey, who maintain the bandwidth of the lowered sounds in the output.

Finally, Phonak’s newest strategy in the lower left is adaptive nonlinear frequency compression. It depends on the input, so high-frequency dominated sounds can go lower in frequency with less compression, and low-frequency dominated sounds can have a higher cutoff to help preserve the formants and harmonics. I put this strategy in the compression and transposition category because it uses transposition to shift the compressed high-frequency dominated sounds even lower in frequency.

Part IV: Widex Frequency Lowering

Part IV focuses on Widex’s linear frequency transposition, a feature in their hearing aid known as the Audibility Extender. In 2006, Widex was the first hearing aid company to offer frequency lowering in its main product line. This started a ten-year trend in which each major manufacturer would distribute one or more versions of frequency lowering.

Start Frequency

As with all frequency-lowering techniques, linear frequency transposition first divides the spectrum into a source region and a target or destination region. The source region is the frequency region where information is moved from. The target region is the frequency region where the lowered information is moved to. The start frequency can be considered the start of inaudibility or a dead region because the action happens below the start frequency, and everything above it is a candidate for frequency lowering. It is imperative to know how the start frequency is used with this method compared to others. For example, with frequency compression techniques, all of the action happens above the nominal start frequency, which is also called the cutoff frequency or fmin. Widex’s actual start frequency is about a half-octave below the nominal start frequency. So, if the nominal start frequency is 2500 Hz, the actual start frequency is around 1770 Hz. Furthermore, the actual source region extends an octave above the nominal start frequency, to 5000 Hz in this example.

Frequency Lowering Method

Over short time intervals, the algorithm continually searches for the most intense peak in the source region. Wherever it finds this peak, it creates a one-octave wide filter centered around it. Then, it lowers the entire filtered region one octave down into the target region. That is, the algorithm divides the frequencies in the filter by a factor of two and copies them down, so the original peak is maintained along with its lowered copy.
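The search-filter-lower sequence just described can be sketched for a single short-time spectrum. This is a simplified illustration, not Widex's implementation: the function name, the caller-supplied search limits, the choice to center the one-octave filter geometrically on the peak (a half-octave on either side), and the example spectrum are all assumptions.

```python
import math

def transpose_peak_band(freqs_hz, levels_db, search_lo_hz, search_hi_hz):
    """For one short-time spectrum, find the most intense component within
    the search limits, take a one-octave band around it, and return a copy
    of that band shifted down one octave (frequencies divided by two).

    ASSUMPTIONS: the band is centered geometrically on the peak, and the
    search limits are supplied by the caller. The original components are
    not removed; the returned list is the additional, lowered copy.
    """
    candidates = [
        (level, f) for f, level in zip(freqs_hz, levels_db)
        if search_lo_hz <= f <= search_hi_hz
    ]
    if not candidates:
        return None, []

    _, peak_hz = max(candidates)           # most intense component
    band_lo = peak_hz / math.sqrt(2)       # half-octave below the peak
    band_hi = peak_hz * math.sqrt(2)       # half-octave above the peak

    lowered = [
        (f / 2, level) for f, level in zip(freqs_hz, levels_db)
        if band_lo <= f <= band_hi
    ]
    return peak_hz, lowered

# Hypothetical spectrum for illustration only.
example_freqs = [2000, 2500, 3000, 3500, 4000, 4500, 5000]
example_levels = [40, 42, 55, 48, 45, 41, 38]
peak, lowered_copy = transpose_peak_band(example_freqs, example_levels, 2500, 3500)
```

In this made-up frame, the peak is at 3000 Hz, so the components between roughly 2100 and 4200 Hz are copied down to between roughly 1050 and 2100 Hz. Running the same function on the next frame could select a different peak, which is why different parts of the source spectrum are lowered over time.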

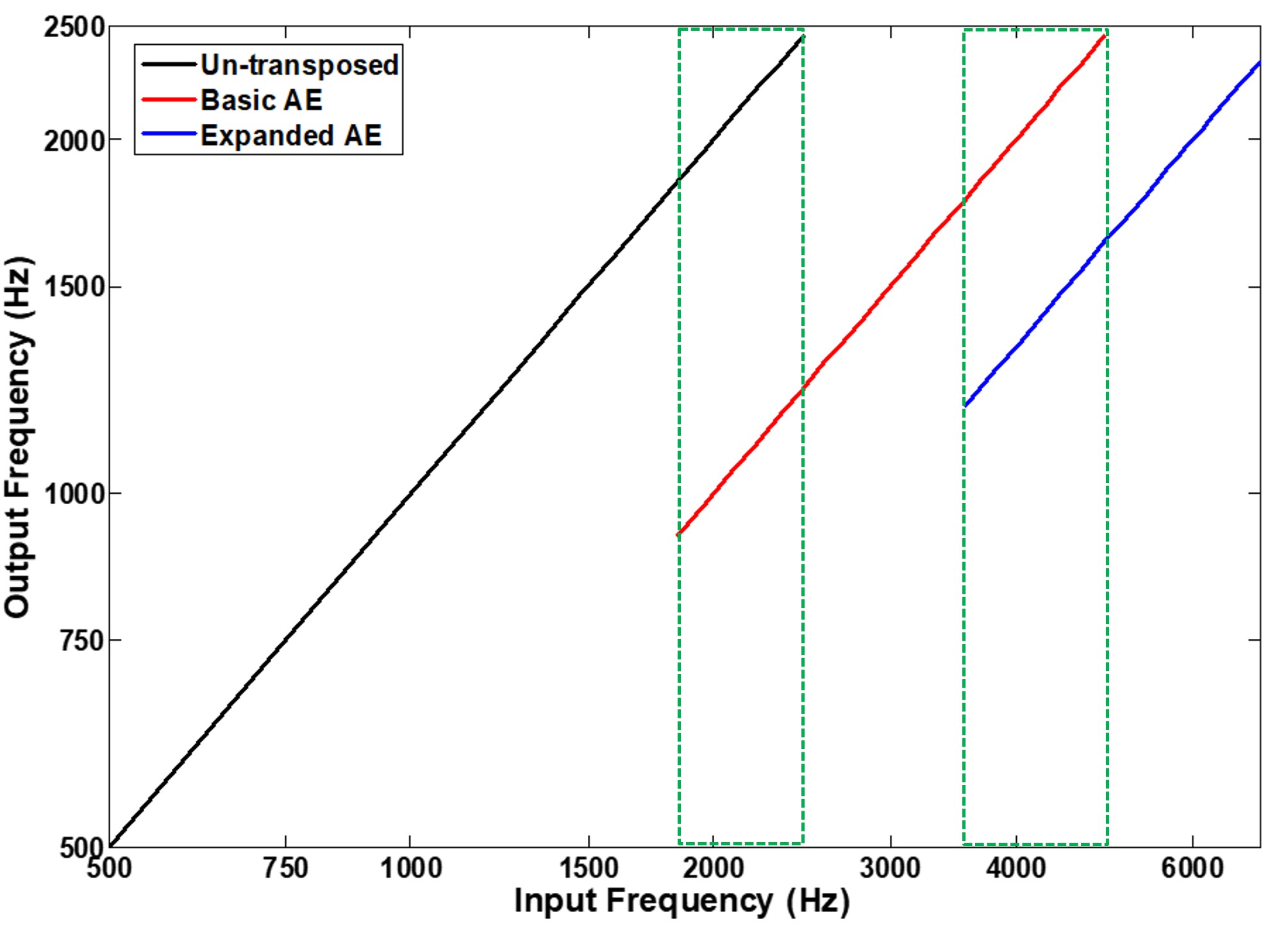

Figure 21. Input-output functions illustrating the action of Widex’s frequency lowering. The x-axis represents input frequencies, while the y-axis represents output frequencies. The black line corresponds to the un-transposed signal, showing no frequency change. The red line represents the basic Audibility Extender mode, with input frequencies halved in the output. Overlapping frequencies between transposed and un-transposed signals are denoted by green boxes. The blue line represents the expanded Audibility Extender mode, starting half an octave higher with output frequencies at one-third of the original input. Overlap between the un-transposed and transposed signals from the basic mode is maintained, resulting in peaks appearing two or three times in the output.

Figure 21 shows the action of Widex’s frequency lowering in terms of input-output functions. Frequencies going into the hearing aid are shown on the x-axis, and frequencies coming out are shown on the y-axis. The black line shows the un-transposed signal, for which you can see the frequencies coming into the hearing aid are the same in the output. The red line shows the transposed signal for the basic Audibility Extender mode. Notice that the nominal start frequency of the source region is 2500 Hz, but the actual start frequency is a half-octave lower, around 1770 Hz. The source region extends for an octave above the nominal start frequency, which is 5000 Hz in this case. Also, notice that the output frequencies associated with the red line are exactly half of the input frequencies, so 2000 Hz comes out at 1000 Hz, 3000 Hz comes out at 1500 Hz, 4000 Hz comes out at 2000 Hz, and so on. The green boxes show areas of overlap between the transposed and un-transposed signals. These frequencies will show up twice in the output: once at their original frequency and then again at their transposed frequency.

The blue line shows the transposed signal in the expanded Audibility Extender mode. First, notice that its source region starts a half-octave above the nominal start frequency, around 3500 Hz. This is also a full octave above the start frequency for the source region of the basic Audibility Extender. The source region for the expanded Audibility Extender extends an octave above its start frequency, to about 7000 Hz. Furthermore, its output frequencies are 1/3 of the original input, so 4500 Hz comes out at 1500 Hz, 6000 Hz at 2000 Hz, and so on. It is also important to note that the un-transposed signal and the transposed signal from the basic Audibility Extender are both maintained when the Audibility Extender is in expanded mode. So, just as before, as indicated by the green box, there will be overlap between the transposed signal in expanded mode, the transposed signal in basic mode, and in some cases even the un-transposed signal. Therefore, peaks at these frequencies will show up two or even three times in the output!
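The input-output relationships for the two modes can be summarized in a few lines of code. This is a simplified sketch that treats every frequency in a source region as if it were lowered, whereas the actual algorithm lowers only a band around the most intense peak. It also assumes the un-transposed signal is amplified at full bandwidth. The function name `output_frequencies` is hypothetical.

```python
import math


def output_frequencies(f_in_hz, nominal_start_hz, expanded=False):
    """List the output frequencies at which one input frequency can appear."""
    outputs = [f_in_hz]  # the un-transposed signal is maintained

    # Basic mode: source runs from a half-octave below the nominal start
    # frequency to one octave above it; frequencies are divided by 2.
    basic_lo = nominal_start_hz / math.sqrt(2)
    basic_hi = nominal_start_hz * 2
    if basic_lo <= f_in_hz <= basic_hi:
        outputs.append(f_in_hz / 2)

    # Expanded mode: source starts a half-octave above the nominal start
    # frequency and spans one octave; frequencies are divided by 3.
    if expanded:
        exp_lo = nominal_start_hz * math.sqrt(2)
        exp_hi = exp_lo * 2
        if exp_lo <= f_in_hz <= exp_hi:
            outputs.append(f_in_hz / 3)

    return outputs


print(output_frequencies(3000, 2500))                 # [3000, 1500.0]
print(output_frequencies(4500, 2500, expanded=True))  # [4500, 2250.0, 1500.0]
print(output_frequencies(6000, 2500, expanded=True))  # [6000, 2000.0]
```

With a 2500 Hz nominal start frequency, an input at 4500 Hz falls inside both source regions, so it can appear three times in the output, which is the overlap the green boxes in Figure 21 point out.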

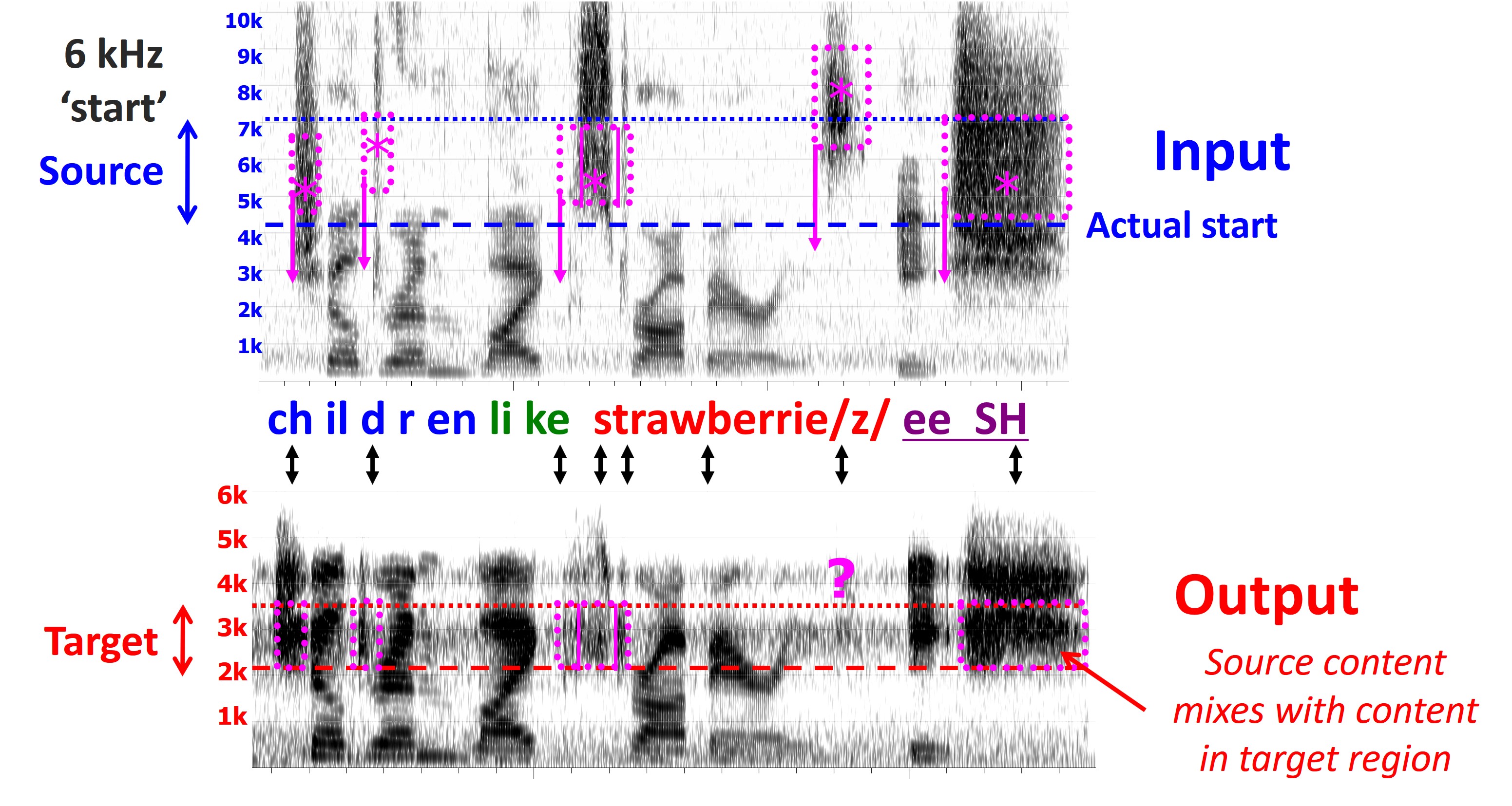

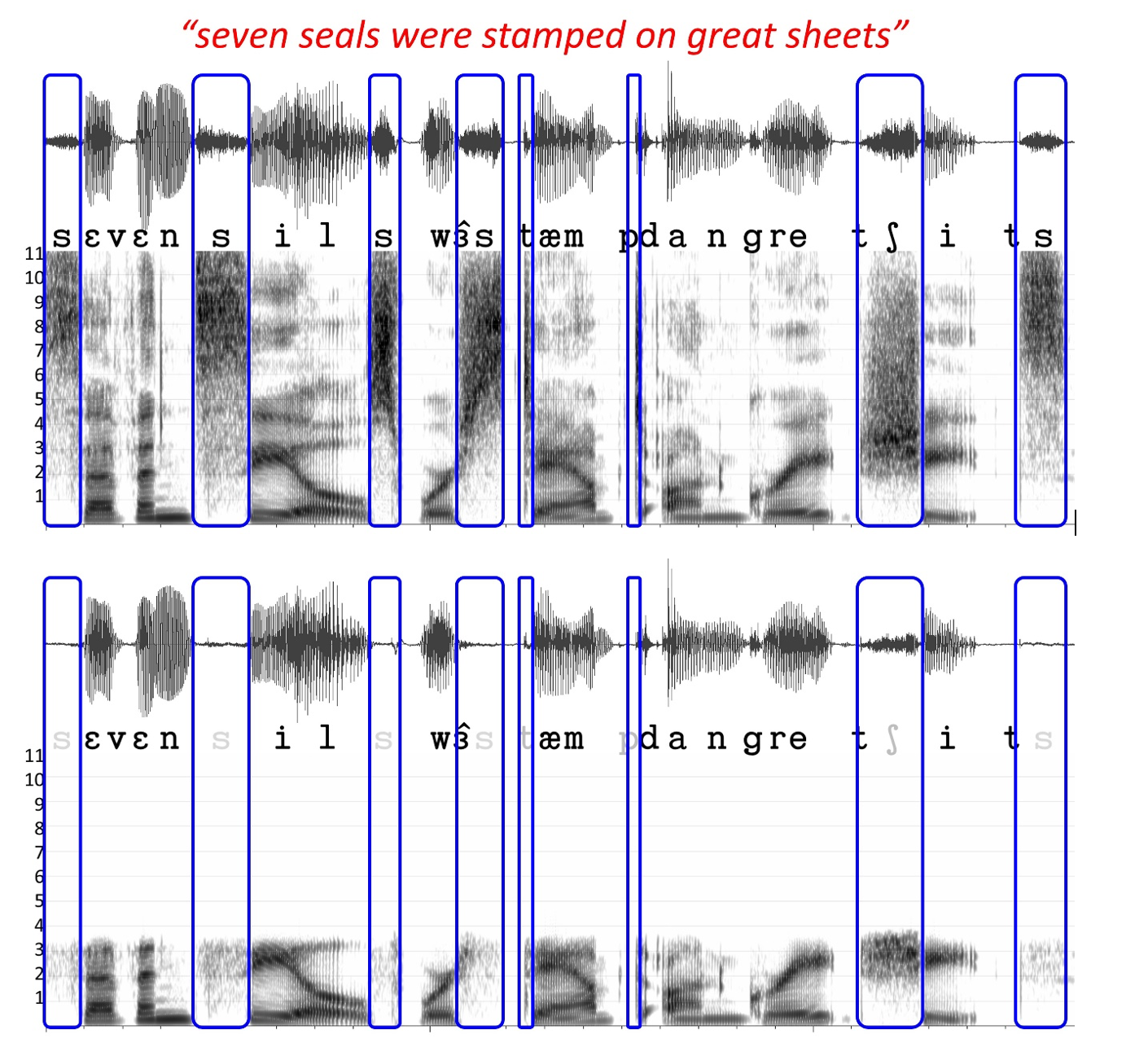

Figure 22. Spectrograms comparing the input and output of a hearing aid with frequency transposition, demonstrating the lowering of high-frequency sounds from the source region to the target region. Magenta boxes highlight indications of lowered information, while the question mark suggests energy beyond the device’s input range. The resulting output represents a mixture of source and target region energy.

The top spectrogram in Figure 22 shows the input to a hearing aid with frequency transposition, and the bottom shows the spectrogram of the output. The sentence is “children like strawberries,” followed by “eeSH,” one of the test stimuli I frequently use in my research. This sentence is useful because it has a lot of high-frequency energy associated with the fricatives, like “s” and “z,” the affricates, and the stop consonants. These sounds are indicated by the arrows. The nominal start frequency in programming software was set at 6 kHz. However, as you now know, the actual start frequency is really a half-octave lower at around 4200 Hz. For this particular device, input was limited to about 7 kHz. Next, the most intense sounds within the source region are moved down to the target region, as indicated by the bottom spectrogram’s red lines. Recall that the frequencies of the sounds in the source region are divided by two in the target region. Also, recall that the algorithm continually searches for the most intense peaks in the source region and filters them before lowering them. The magenta boxes and asterisks are areas in the source region where I can see some indication that the information has been lowered by looking at the output spectrogram. The magenta boxes in the output spectrogram correspond to the sounds in the upper spectrogram after lowering. The question mark indicates that it is hard to see any indication of the “z” being lowered at that time, probably because the energy was beyond the input range of the device. Another important thing to note is that this energy in the source region is mixed with whatever existing energy is present in the target region, so what you see in the output and what the hearing aid user hears is a mixture of the two.

Possible Pros of the Method

The algorithm’s behavior is dynamic because the exact frequency range that is lowered depends upon the input spectrum. The algorithm continually searches the short-term input spectrum for a peak, which is lowered by a factor of two or three. Therefore, the peak will appear at different frequencies in the target region across time. The algorithm is also active from one time to the next, even if there is no prominent peak in the input. It has been argued that this is beneficial because it can minimize discontinuities in the output signal and inadvertent artifacts. Finally, because peaks are linearly shifted by an integer factor (2 or 3), the harmonics within the peak will be generally maintained after lowering. The motivation behind this is to help promote a more natural and pleasant sound quality. As indicated earlier, only a portion of the spectrum around the peak is transposed, specifically a one-octave-wide region. As long as the algorithm picks the frequency region with the most essential information, this can be beneficial because we do not have to worry about compressing the signal. It also helps limit the amount of space in the target region occupied by the lowered signal, further reducing the concern that the newly transposed high-frequency energy may mask the existing low-frequency information.
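The point about harmonics can be illustrated with simple arithmetic. The sketch below assumes an idealized voiced sound whose components sit at exact integer multiples of a 200 Hz fundamental; the values and function names are hypothetical and chosen only for illustration.

```python
def lowered_harmonics(f0_hz, harmonic_numbers, factor):
    """Divide each harmonic (k * f0) by an integer lowering factor."""
    return [k * f0_hz / factor for k in harmonic_numbers]


def on_original_series(freq_hz, f0_hz, tol=1e-9):
    """True if freq_hz is an integer multiple of the original fundamental."""
    ratio = freq_hz / f0_hz
    return abs(ratio - round(ratio)) < tol


# Harmonics 15 through 20 of a 200 Hz fundamental span 3000 to 4000 Hz.
lowered = lowered_harmonics(200, range(15, 21), factor=2)
print(lowered)  # [1500.0, 1600.0, 1700.0, 1800.0, 1900.0, 2000.0]
print([on_original_series(f, 200) for f in lowered])
# [False, True, False, True, False, True]
```

After division by two, the components remain evenly spaced, so they still form a harmonic series. However, only every other lowered component lands on a harmonic of the original 200 Hz fundamental, which is one way to see why the lowered harmonics and those already in the target region do not automatically line up.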

Possible Concerns of the Method

One possible concern is that the high-frequency speech spectrum is often characterized by diffuse energy across a wide range, so lowering only a one-octave region around the peak may discard potential information. The term ‘potential information’ is used because we are unsure what information people extract from the frequency-lowered signal. In addition, mixing the transposed and un-transposed signals in the low-frequency region may cause some issues with masking. Furthermore, this can make it difficult to segregate the newly introduced high-frequency energy from the original energy. Finally, if the lowered peak is associated with noise instead of speech, then the signal-to-noise ratio of the existing low-frequency signal will decrease.

Enhancements to the Method

Frequency transposition came out in 2006. In 2015, Widex came out with some enhancements to their original algorithm to improve the naturalness of the frequency-lowered signal. One of these enhancements was a voicing detector. Voiced speech is characterized by a harmonic relationship among its constituent frequencies. When the algorithm detects voiced speech, it applies less gain than it does for voiceless speech. The rationale is that voiced speech is naturally more intense than voiceless speech, so it will perceptually pop out of the speech mixture if it has too much gain after lowering. That is, it will not blend in naturally, so the newly introduced high-frequency energy will segregate from the low-frequency speech and will not sound like speech. On the other hand, voiceless speech may need additional gain to make it perceptually salient. Another enhancement was the addition of a harmonic tracking system. The idea is to keep track of the harmonics of the voiced phonemes from the source region to align them better with the harmonics already in the target region. When the harmonics of the mixed signal align, more pleasant sound quality and improved naturalness are expected. The last point is critical because it is not true with all transposition techniques.

An additional enhancement, which is also a property of the other transposition techniques, is the ability to amplify the full bandwidth of the original source signal as you would typically do without frequency lowering. Ideally, rolling off the response above the start frequency should not be an issue if you put the frequency-lowered signal at the edge of the hearing loss, where you lose audibility for the amplified output. Because the hearing aid user cannot hear that information anyway, the thought is to remove it from the output so you do not increase the risk of feedback. However, because we cannot always guarantee that clinicians will put the transposition setting where it needs to be, the concern is that the bandwidth will be artificially reduced compared to what the hearing aid user had to begin with, which may do more harm than good. With the full bandwidth maintained, even if the clinician chooses the wrong frequency-lowering setting, the hearing aid user will be more likely to perform as well as without frequency lowering. It also gives clinicians more options regarding where to put the lowered signal without concern about rolling off the response above the lowered output.

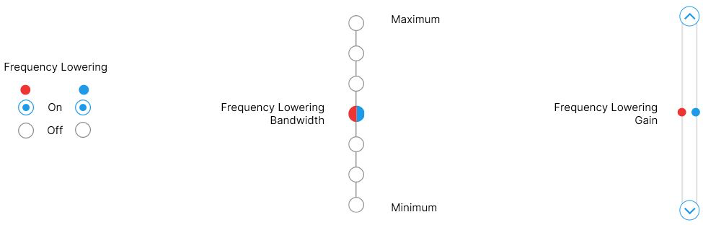

Programming Software Settings

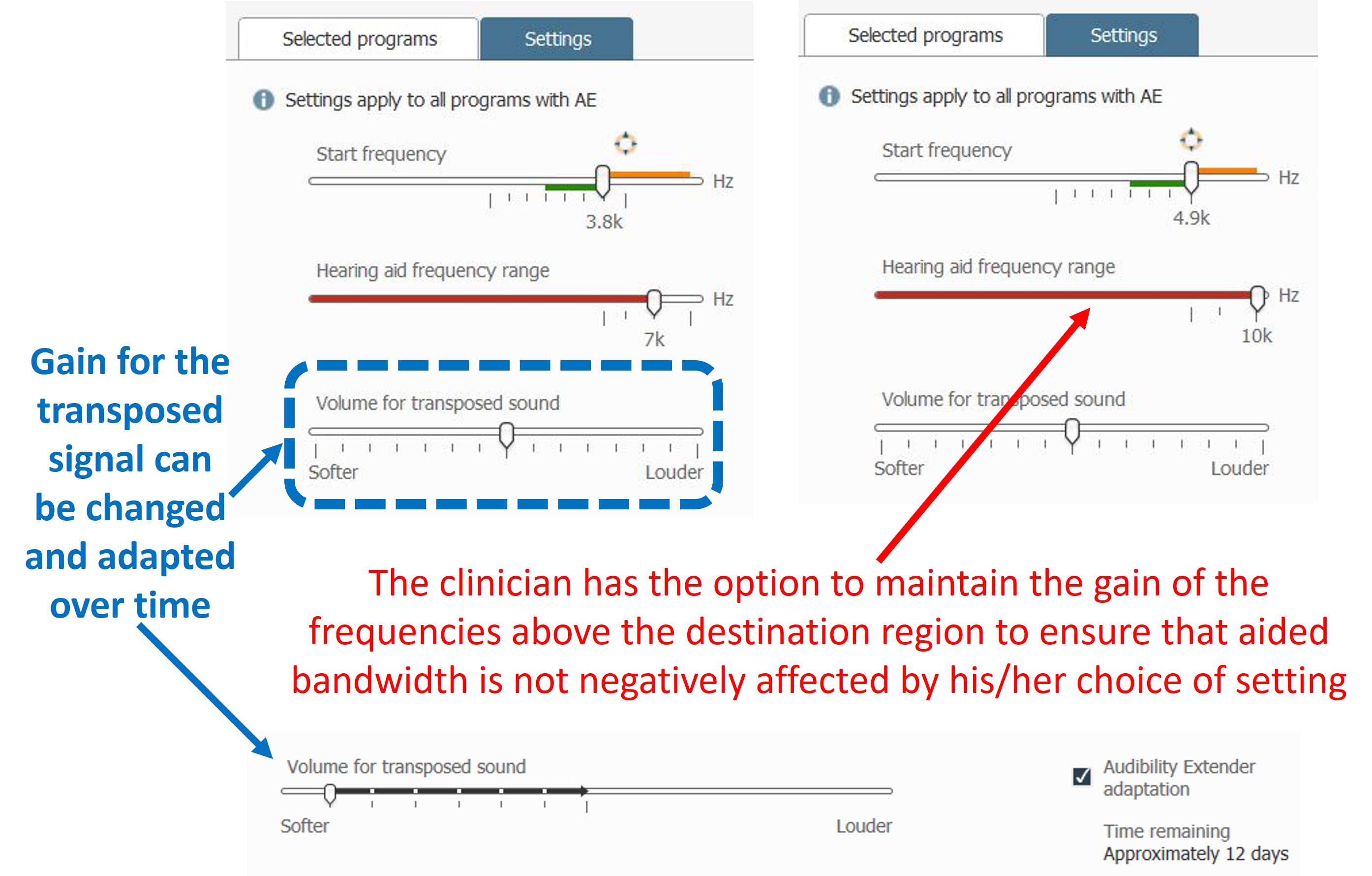

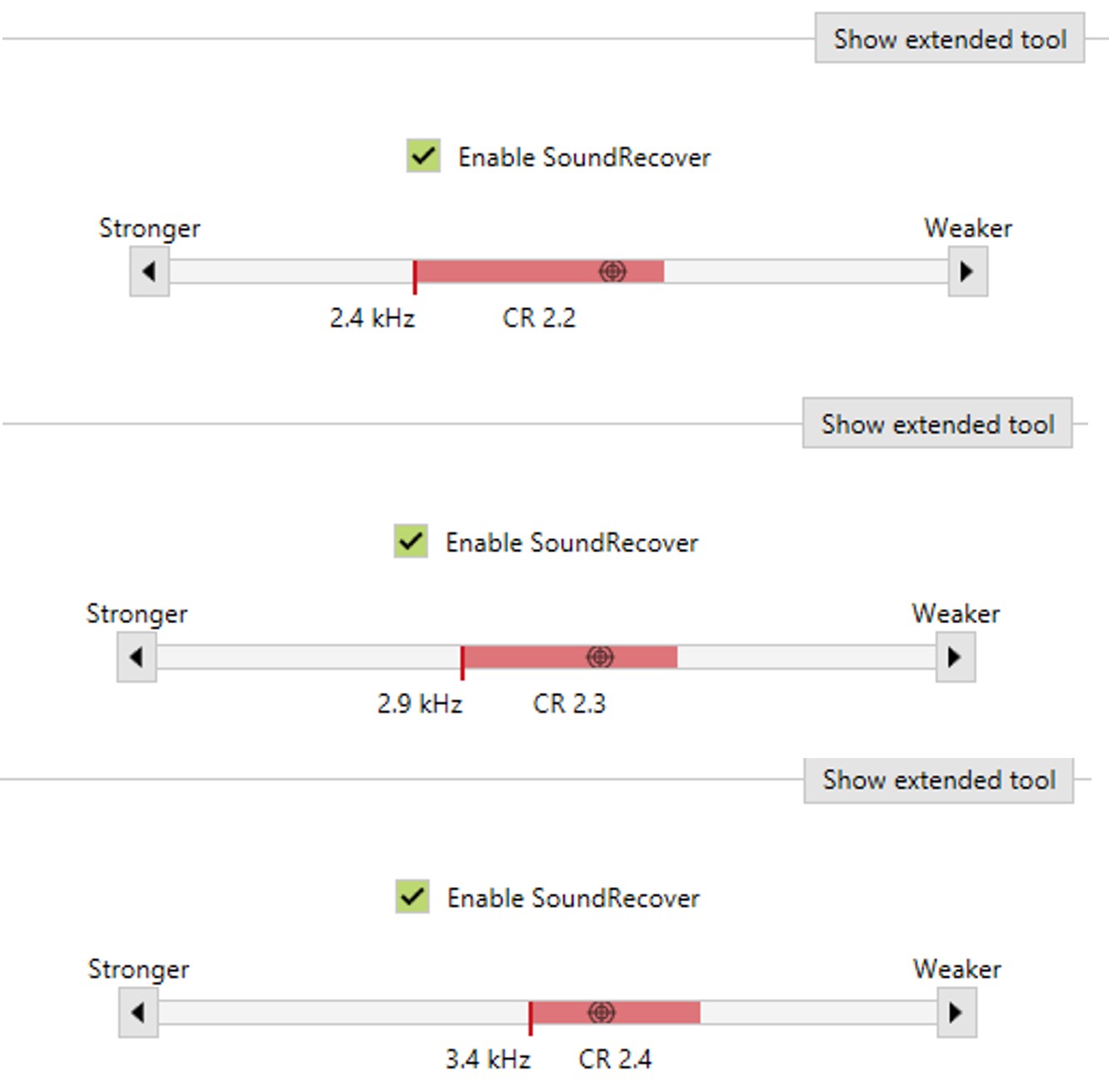

Figure 23. Screenshots from Widex COMPASS GPS version 4.5 illustrating settings for the Audibility Extender. The top shows a slider for selecting the start frequency, with options at 3.8 kHz and 4.9 kHz. The second slider, labeled “Hearing aid frequency range,” provides the ability to maintain the amplification of the original signal, ranging from the start frequency to the fullest bandwidth. The third slider, “Volume for transposed sound,” controls the relative gain of the lowered signal, aiming to balance audibility without distracting the user. The option to adapt the gain of the transposed signal over time is also available, allowing gradual adjustment to avoid overwhelming the user. The example indicates 12 days remaining to reach the final gain setting, adjustable via a slider.

Figure 23 shows screenshots from Widex COMPASS GPS version 4.5 of how the settings appear for the Audibility Extender. At the top is a slider corresponding to different start frequency selections. There are a finite number of settings. As you shift it to the right, you will increase the start frequency; as you shift it to the left, you will decrease the start frequency. With the fitting assistant, we are trying to optimize this setting based on the three goals discussed above. The screenshot on the left shows a start frequency of 3.8 kHz, and the one on the right shows a start frequency of 4.9 kHz.

The second slider is named “Hearing aid frequency range,” corresponding to the ability to maintain the original source spectrum. When Audibility Extender was first introduced, the hearing aid would roll off the frequency response above the start frequency. However, for reasons already discussed, clinicians can now maintain the amplification of the original signal. The option varies from the start frequency to the hearing aid’s fullest bandwidth. As indicated before, if you are confident in your choice of start frequency, you can keep this setting close to its lowest value to minimize the likelihood of feedback.

The third slider is named “Volume for transposed sound,” corresponding to the relative gain of the lowered signal. All transposition methods offer this option because the lowered signal is mixed in with the existing low-frequency energy. One concern is that if it is too loud, it will perceptually segregate and pop out of the speech stream, distracting the hearing aid user. Likewise, if it is too low, the hearing aid user may not hear it.

Finally, you have the option to adapt the gain of the transposed signal over time. With adaptation, you can check the box and change the rate at which the gain for the lowered signal will increase. You may want to gradually work the hearing aid user up to the full frequency-lowering setting so the newly introduced information does not take them aback. This example shows approximately 12 days remaining for the hearing aid user to reach the final gain setting. You can change the adaptation rate by moving the slider.

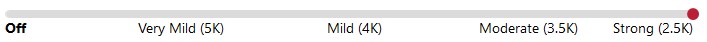

Fitting Assistant: Goals

I created a fitting assistant to help clinicians make an informed decision when choosing a setting for the Widex Audibility Extender. The first step is to establish some goals. These goals may not be correct, but we must have some starting principles to work with. If there is some agreement in how the process is approached, then we can at least constrain some of the variability in how people are fit so that we can examine whether there are alternatives that will improve outcomes. The first goal is to choose the Audibility Extender setting that provides the most input bandwidth or information from the source region. The second goal is to minimize the overlap between the source spectrum of the unlowered and lowered signals so that the same input signal does not appear in the output at two different frequencies. This is accomplished by choosing a higher start frequency. The third goal is that the start frequency should not be so high that gaps or holes exist in the input spectrum between the unlowered and lowered signals. These goals will be more apparent with the examples that follow.

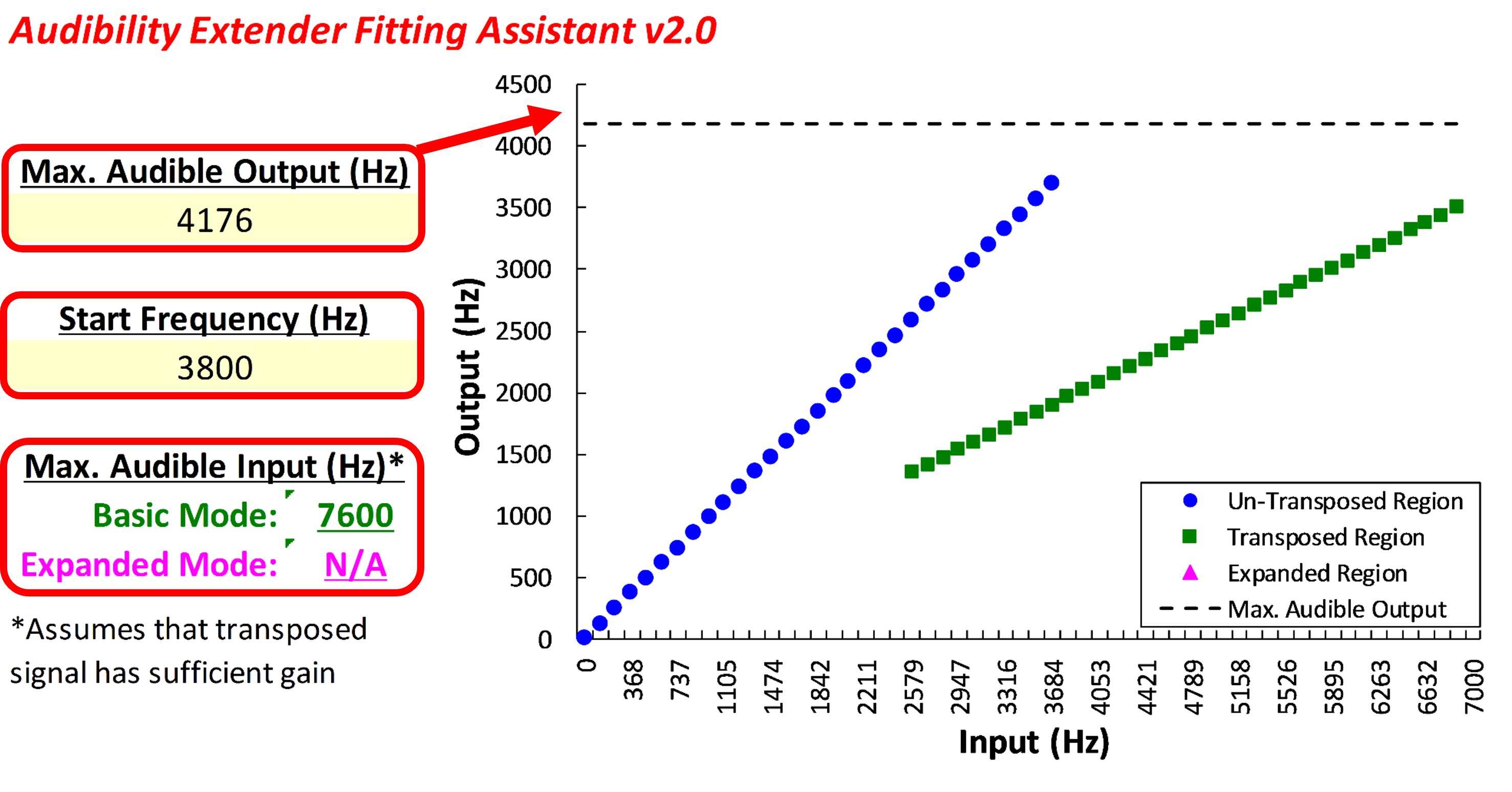

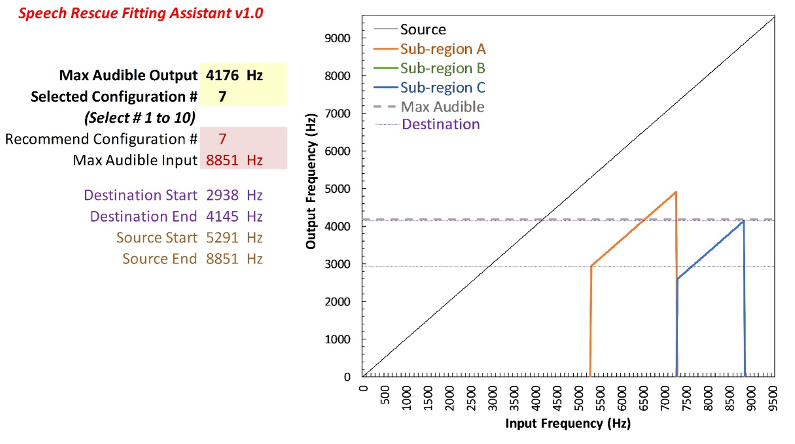

Fitting Assistant: Example 1

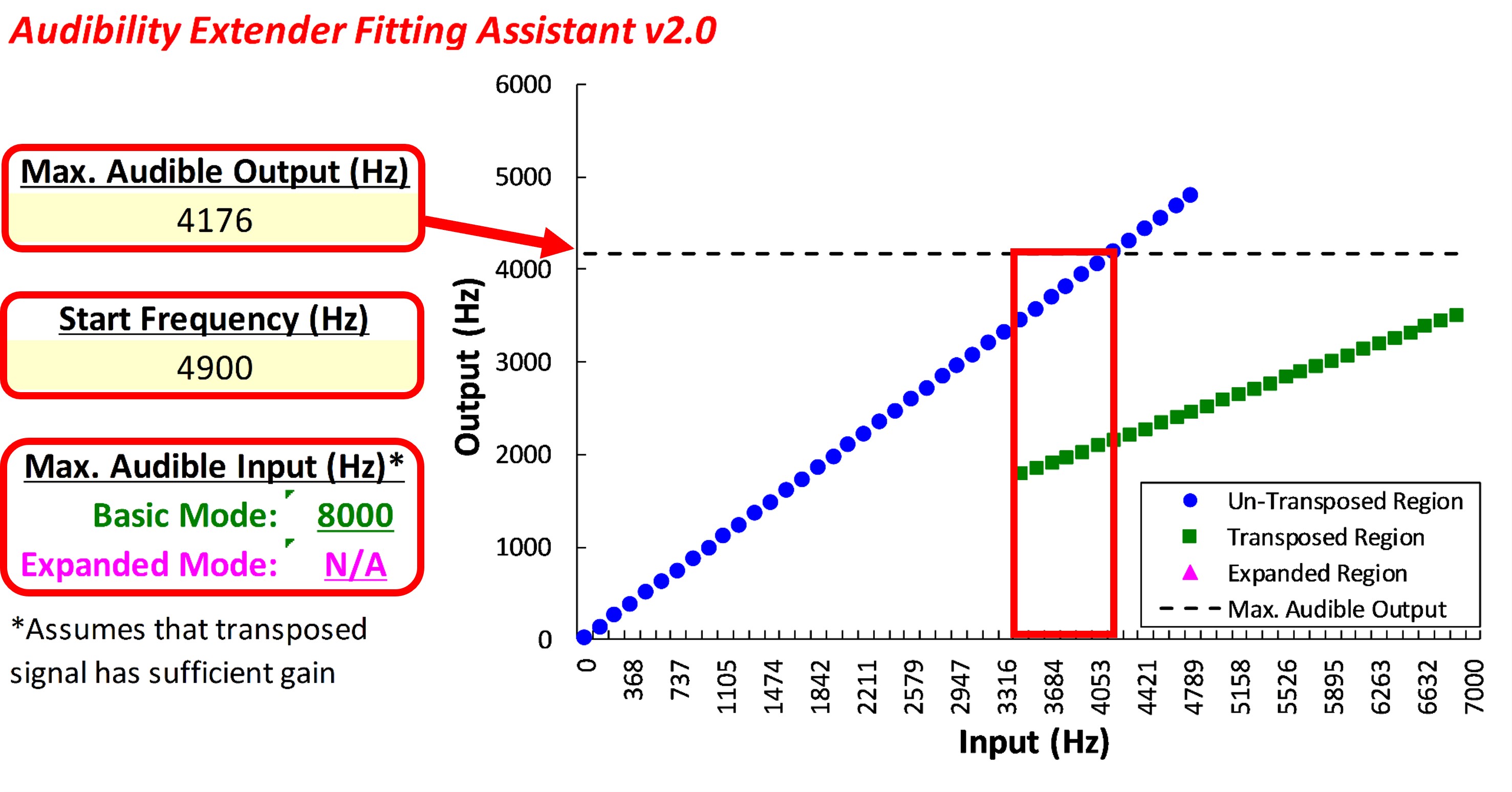

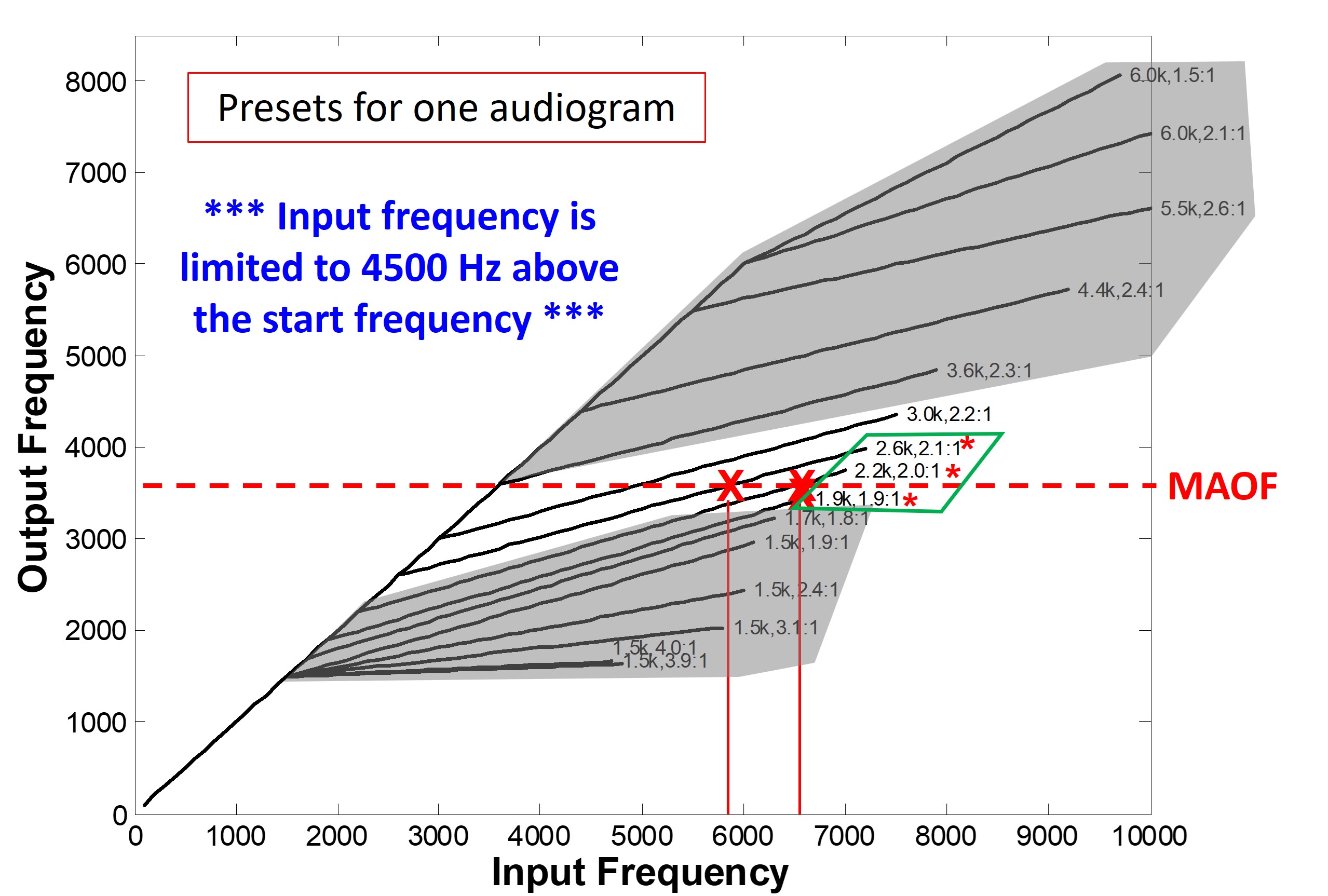

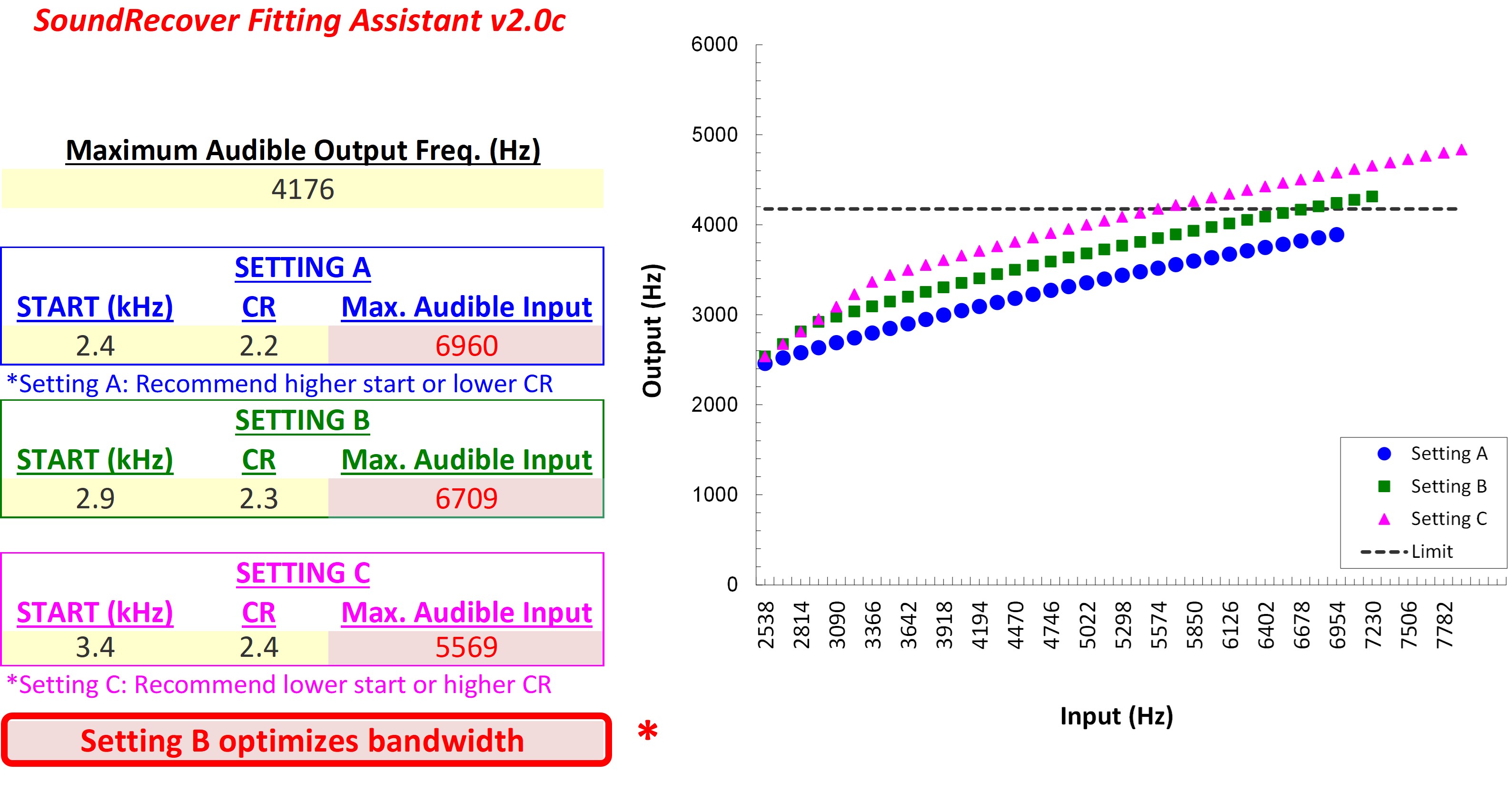

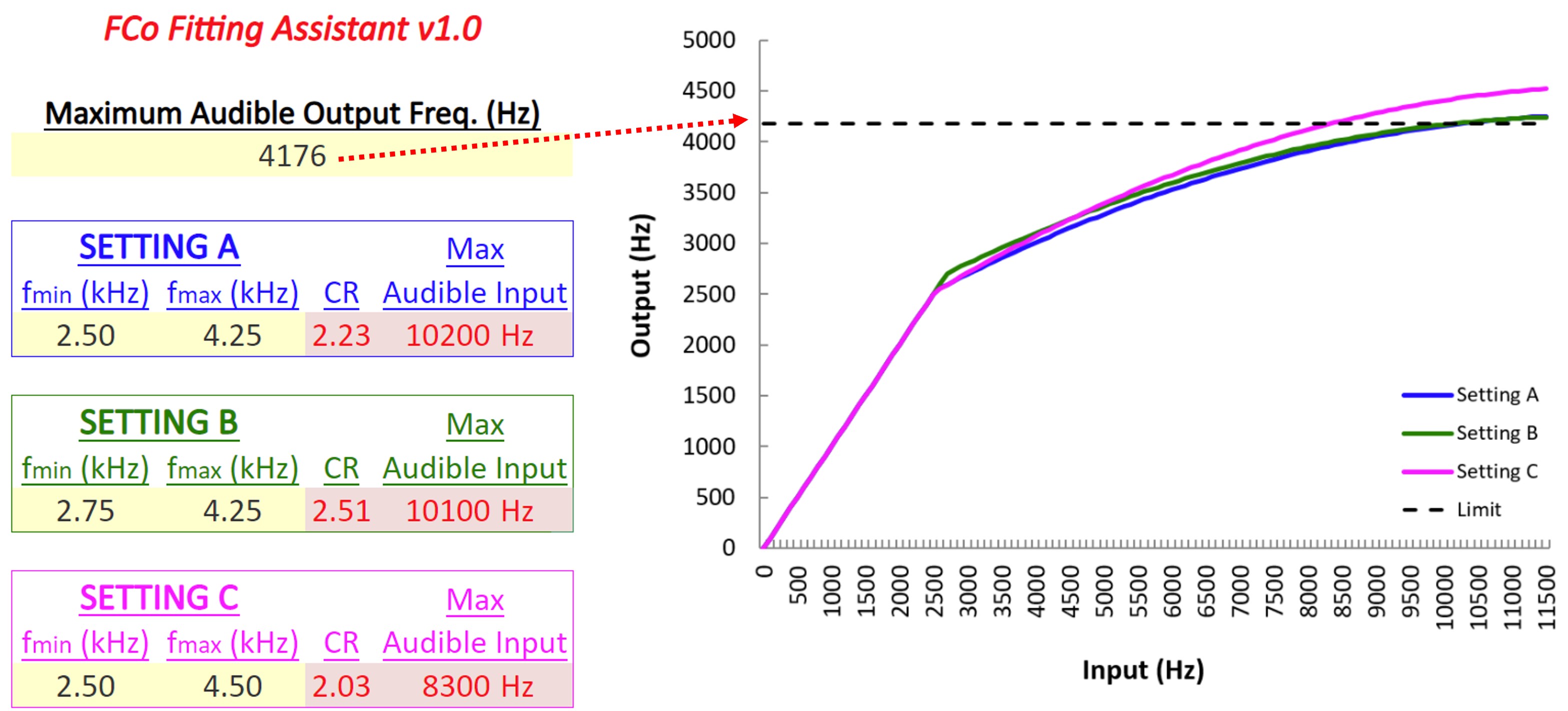

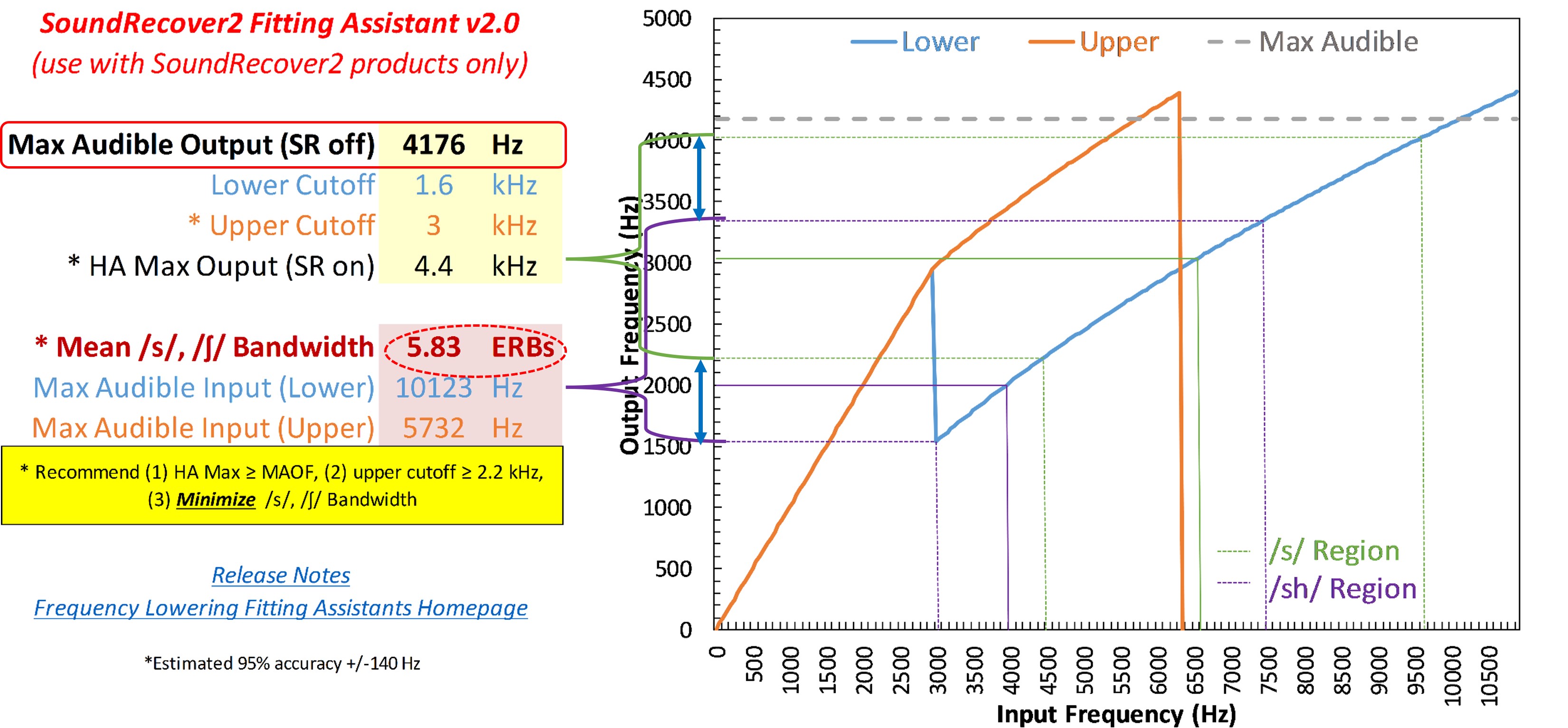

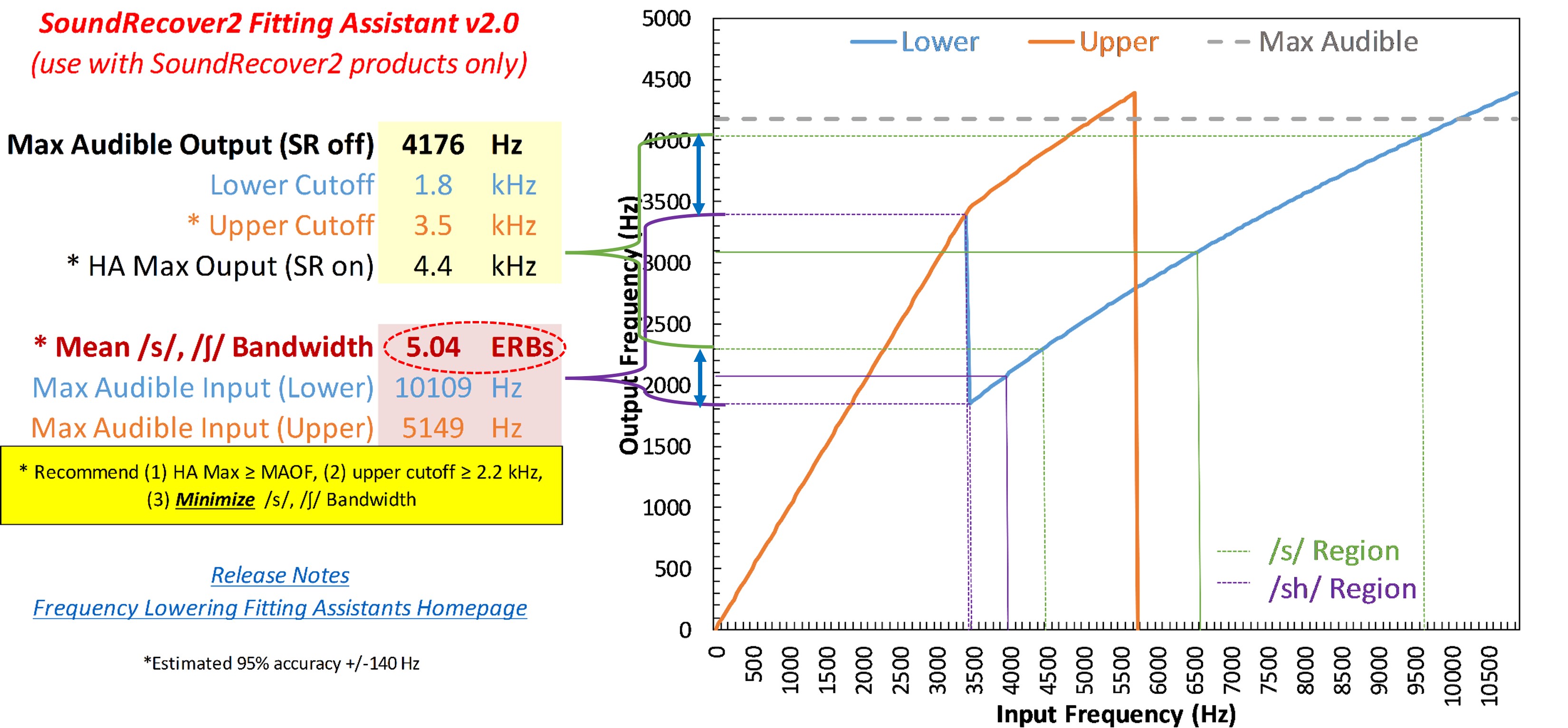

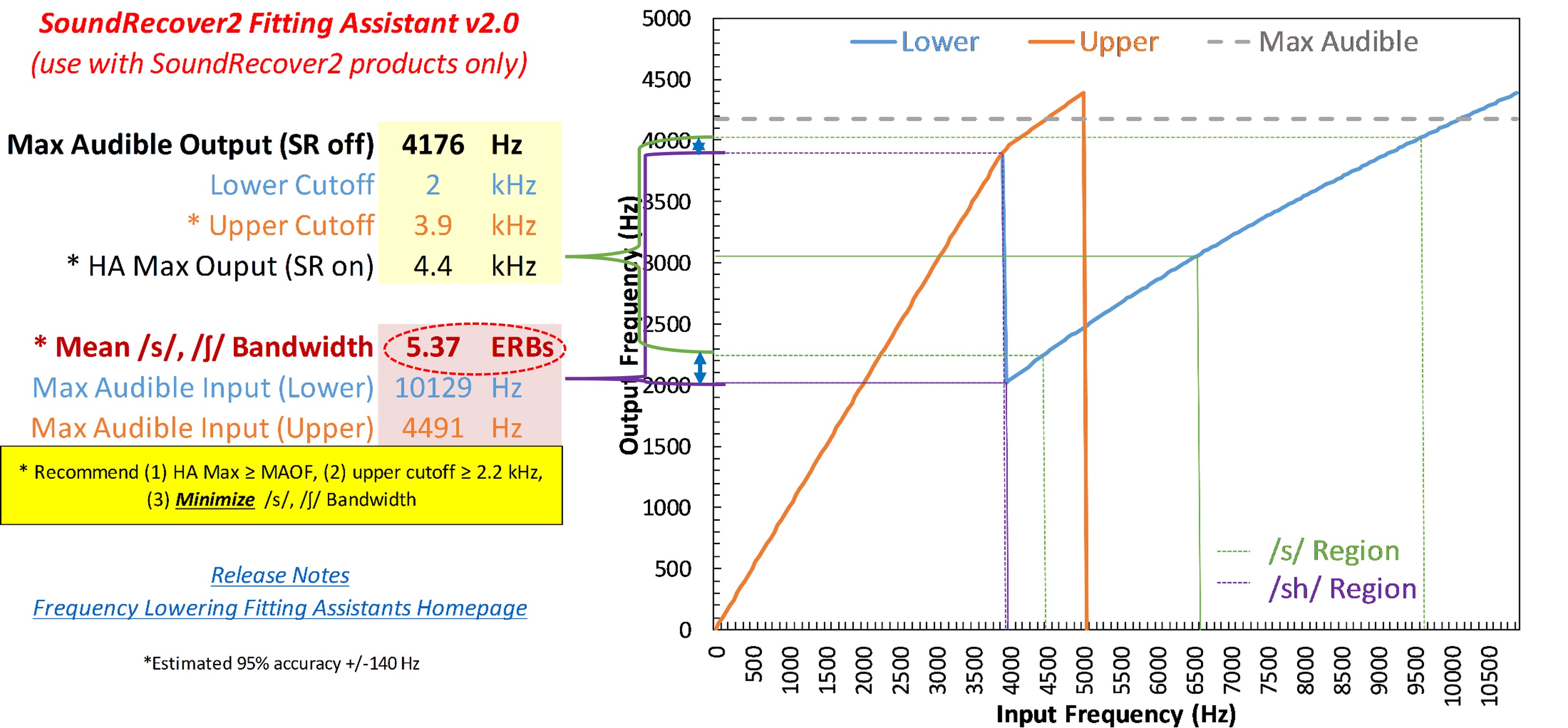

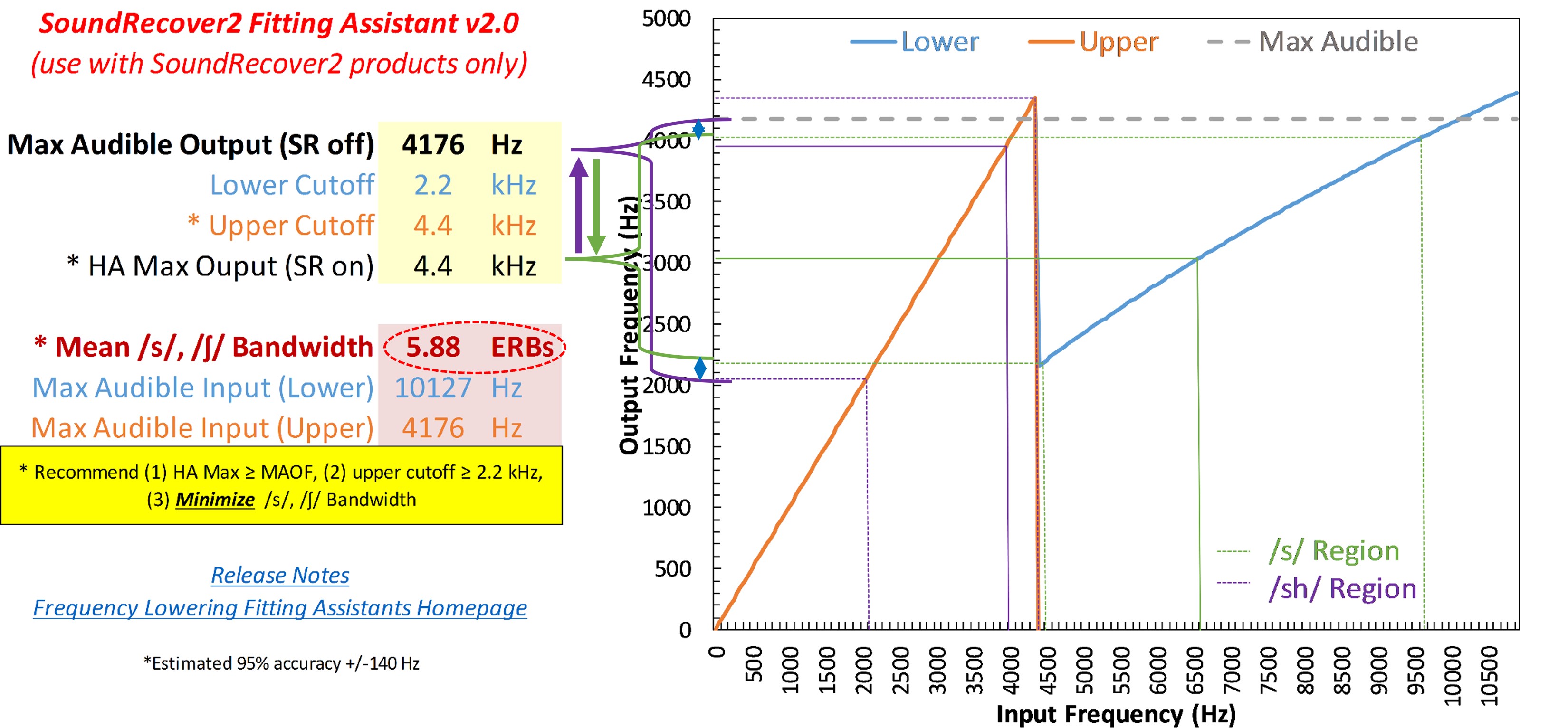

This example is from Part II, which reviewed how to select the maximum audible output frequency, also known as the MAOF. In this example, the maximum audible output frequency was 4176 Hz. Remember that this indicates that sounds above this frequency will be inaudible for the hearing aid user with the chosen hearing aid when fit to prescriptive targets without frequency lowering activated.

The fitting assistant is a tool to help inform and empower clinicians: by showing what is happening under the hood, it lets you make more informed decisions. You can use this information to make the best selection based on the three goals established before.

Figure 24. Screenshot of the Audibility Extender Fitting Assistant for Example 1. The yellow boxes allow input for the maximum audible output frequency (4176 Hz) and the start frequency (3800 Hz). The graph displays input frequencies on the x-axis and corresponding output frequencies on the y-axis. The blue line represents the un-transposed signal, while the green line indicates frequencies above 2700 Hz being transposed by a factor of 2 in the output. The horizontal dotted line visually depicts the maximum audible output frequency, serving as a bandwidth indicator. The maximum audible input frequency is reported as 7600 Hz, highlighting the expanded audible input bandwidth achieved through frequency lowering.
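The numbers in Figure 24 can be reproduced with a short calculation. This is only my simplified reading of the basic-mode relationships described earlier, not the fitting assistant’s actual implementation; the function name `basic_mode_summary` and the overlap and gap measures are hypothetical.

```python
import math


def basic_mode_summary(nominal_start_hz, maof_hz):
    """Summarize basic-mode transposition relative to the MAOF."""
    actual_start_hz = nominal_start_hz / math.sqrt(2)  # half-octave below nominal
    source_top_hz = nominal_start_hz * 2               # one octave above nominal

    # A lowered component (input / 2) is audible only if it falls at or
    # below the MAOF, so the audible input cannot exceed 2 * MAOF.
    max_audible_input_hz = min(source_top_hz, 2 * maof_hz)

    # Inputs between the actual start frequency and the MAOF are audible
    # both un-transposed and transposed (overlap); inputs between the MAOF
    # and a higher actual start frequency would be heard in neither (gap).
    overlap_hz = max(0.0, maof_hz - actual_start_hz)
    gap_hz = max(0.0, actual_start_hz - maof_hz)

    return {
        "actual_start_hz": actual_start_hz,
        "max_audible_input_hz": max_audible_input_hz,
        "overlap_hz": overlap_hz,
        "gap_hz": gap_hz,
    }


summary = basic_mode_summary(nominal_start_hz=3800, maof_hz=4176)
print(round(summary["actual_start_hz"]))  # 2687
print(summary["max_audible_input_hz"])    # 7600
```

With a 3800 Hz start frequency and a 4176 Hz MAOF, the actual start frequency is about 2700 Hz and the maximum audible input frequency is 7600 Hz, which agrees with the values reported in the figure.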