Learning Outcomes

After this course, participants will be able to:

- Describe the role of EEG alpha activity as an indicator of listening effort.

- Describe the association between objectively measured brain activity and subjectively measured listening and memory effort scales.

- Describe the effect of modern hearing aid technology with respect to listening effort in complex listening situations.

Objective

Measuring listening effort is an interesting approach to evaluating directional hearing algorithms in hearing aids.

Design: The current study investigated whether electroencephalogram (EEG) data can detect changes in neurophysiological indices of listening effort in adults with hearing impairments. To assess the effect of directional hearing in hearing aids on listening effort, EEG activity was measured during a cognitive memory task under low and high signal-to-noise ratio (SNR) conditions with either complex or simple spatial noise processing. This was done with two hearing aids from different manufacturers.

Results: Both subjective ratings and neurophysiological data indicate a reduction in listening effort when complex spatial noise processing is activated. EEG alpha frequency spectral density was larger for simple than for complex spatial noise processing. The neurophysiological responses with the complex spatial noise processing algorithm activated were comparable to the effect of a 4 dB increase in the SNR. Interestingly, the reduction in listening effort was larger when using the Phonak device than when using a device equipped with an alternative approach to spatial noise processing.

Conclusions: The results of the study show that complex spatial noise processing reduces listening effort, which is also reflected in neural parameters linked to listening effort. Listening effort for the complex spatial noise processing algorithm was lower with the Phonak device than with the alternative device, possibly because the spatial noise processing of the latter is less focal and more open than that of the Phonak device. This allows more noise to enter the auditory system and hence requires more suppression of that noise, which in turn requires more listening effort. More generally, this study underlines EEG as a promising methodology to assess neural correlates of listening effort.

Introduction

Speech perception can be challenging and effortful in noisy environments especially for individuals with impaired hearing even when fitted with hearing aids (CHABA - Committee on Hearing and Bioacoustics, 1988; McCoy et al., 2005; Klink et al., 2012). Impaired speech intelligibility due to background noise or impaired hearing function requires mental effort to compensate for the impoverished signal quality, which in turn can impair cognitive performance (Schneider and Pichora-Fuller, 2000).

The aim of the study is to investigate whether spatial noise processing implemented in modern hearing aids reduces listening effort in noisy environments.

The design of this study is based on the idea that the brain operates on a limited amount of (neural) resources shared by sensory, perceptual and cognitive processes (“limited resources theory”; Kahneman, 1973). When trying to listen to speech in a noisy environment, the suboptimal signal quality has to be overcome by investing more resources, with the consequence that processing becomes more effortful (“effortfulness hypothesis”; Rabbitt, 1968). Due to this redistribution of resources, fewer resources are available for cognitive processing. This is also reflected on the neural level, as shown in previous studies in different contexts (Winneke & Phillips, 2011; Winneke et al., 2016a,b). Likewise, more efficient sensory processing should free up resources to benefit higher cognitive processing (Frtusova et al., 2013; Just and Carpenter, 1992; Schneider and Pichora-Fuller, 2000).

The topic of listening effort is of great interest with regard to hearing aid fitting (Pichora-Fuller and Singh, 2006). On the basis of the Framework for Understanding Effortful Listening (FUEL; Pichora-Fuller et al., 2016), we understand listening effort as an intentional act of investing mental resources, which have corresponding neural underpinnings, in order to comprehend a (speech) signal of interest in an acoustically challenging environment. Quantifying listening effort has been addressed through various approaches (McGarrigle et al., 2014), such as dual-task paradigms (e.g., Fraser et al., 2010) or pupillometry (e.g., Zekveld et al., 2010). However, no “gold standard” in the sense of a reliable objective measurement of listening effort has yet been established. A promising approach is the recording of electrophysiological brain activity by means of electroencephalography (EEG). By recording and analyzing EEG data, changes in effort can be quantified on a neural level in addition to subjective ratings. This has been demonstrated in previous studies (Winneke et al., 2016a,b; Strauss et al., 2010; Bernarding et al., 2014; Strauß et al., 2014).

The EEG signal can be decomposed into different frequency bands. These frequency bands have been shown to be related to cognitive functioning. For example, there is evidence linking increased alpha power (8 – 12 Hz) to increased cognitive effort (e.g., Klimesch et al., 2007; Obleser et al., 2012). Results of an auditory EEG study (Obleser et al., 2012) showed an increase in alpha activity when the signal-to-noise ratio (SNR) decreased. This increase in alpha activity can be seen as an indication of greater listening effort when listening environments are suboptimal (e.g., Klimesch et al., 2007; Obleser et al., 2012). In studies using acoustic signals, activity in the alpha frequency band is assumed to reflect inhibition of the interfering, irrelevant noise signal (Strauß et al., 2014). The more the noise interferes with the task at hand (i.e. understanding speech), the more it has to be suppressed in order to understand the target signal, namely speech. Listening effort increases accordingly, mirrored by an increase in alpha activity.

Various hearing aid algorithms have been developed to improve speech perception. The objective of this study is to evaluate listening technologies that accentuate a speech source (e.g. a single speaker) in diffuse noise in terms of the effect on listening effort.

The hearing aid algorithm StereoZoom (SZ) developed by Phonak uses a complex binaural spatial noise processing technology to create a narrow sound acceptance beam directed at a target area that includes a sound source of interest (e.g. a speech source) in especially challenging listening situations. In conversations with loud background noise (e.g. in a restaurant), SZ improves speech intelligibility compared to a simple monaural spatial noise processing technology that simulates the directionality of the pinna (Real-Ear-Sound, RES) (e.g. Appleton & König, 2014). Results from a previous study have shown a behavioral benefit for listening effort linked to directional microphone technology as an example of spatial noise processing (Picou et al., 2014). The effect of complex spatial noise processing technology such as SZ on listening effort has so far not been investigated with a neurophysiological approach. As an index of the effect of SZ on listening effort, this study focused on the EEG alpha frequency band (8 – 12 Hz) (Winneke et al., 2016a,b). The first goal was to determine whether a complex spatial noise processing technology (SZ) and a simple spatial noise processing technology (RES) differ in their effect on listening effort. Other hearing aid manufacturers employ alternative spatial noise processing approaches to improve speech understanding with their devices. Therefore, the second goal was to investigate how two different hearing aid algorithms for spatial noise processing modulate listening effort when following a single speaker in a noisy environment. To do this, two complex spatial noise processing technologies (StereoZoom vs. alternative device) were compared with each other.

With respect to listening effort the comparisons were based on three dependent variables:

- Subjective: 13-level scale of listening effort based on the ACALES scale (Krüger et al., 2017)

- Objective: response accuracy (percentage correctly recalled sentence parts)

- Objective: spectral density of the EEG Alpha frequency band (8 – 12 Hz)

Hypotheses

Regarding the first goal, it is expected that the complex spatial noise processing technology (SZ) will improve speech perception and hence yield a reduction in listening effort compared to the simple spatial noise processing technology (RES). This reduction is expected on the subjective level (i.e. rating of experienced effort), the behavioral level (improved memory performance) and the neurophysiological level. Regarding EEG activity in the alpha frequency band, we expect a reduction in spectral density under SZ compared to RES as an indication of reduced listening effort, because with the complex spatial noise processing technology less of the interfering noise has to be suppressed by the brain.

Regarding the second goal of investigating complex spatial noise processing, both hearing aid technologies chosen in this study (Phonak vs. alternative device) are intended to acoustically emphasize a speech source in diffuse noise. However, compared to Phonak’s SZ, the algorithm of the alternative device gives access to other sound sources besides the frontal one and hence allows more objects from the environment to be processed. Although the alternative device also selects and focuses on the speaker, it is hypothesized that due to the more open character, more listening effort is required than for the more focused SZ algorithm.

Materials and Methods

Participants

A total of 20 experienced hearing aid users participated in the study (age: m = 70.90 years; SD = 7.28; 12 women). Participants had mild to moderate hearing loss (Pure Tone Average Air Conduction (PTA-AC): right: m = 51.00; SD = 10.64; left: m = 50.69; SD = 10.54) without neurological deficits or cognitive impairments (DemTect (Kalbe et al., 2014); m = 16.95; SD = 1.50). Each participant was fitted with two sets of hearing aids: Audéo B90-312 from Phonak and the alternative hearing aid, both with closed coupling with silicone based earmoulds (SlimTips). The study was approved by the local ethics committee of the Carl-von-Ossietzky University in Oldenburg. Participants were compensated for participation.

Stimuli and Task

The speech material used in this study was based on the German OLSA sentence matrix test (Wagener & Kollmeier, 1999). All sentences are structured identically and consist of five word categories (name, verb, number, adjective and object, e.g. “Peter kauft fünf rote Blumen”; English: “Peter buys five red flowers”). The sentences are constructed randomly from a database that contains 10 instances for each category. Based on these sentences, a Word-Recall task (i.e. a memory task) was developed. After every second sentence, participants had to report which names, which numbers, or which objects they had heard (‘Which names/numbers/objects did you hear?’). The answer was given via touchscreen. For each of the two sentences, there were 10 possible answers to choose from for the sentence part that was asked for. The queried sentence part was chosen at random, and the participant did not know it beforehand. Therefore, the participant essentially had to remember both sentences entirely, or at least the three sentence parts that might be asked for (names, numbers, and objects). The resulting dependent variable was the percentage of correctly recalled sentence parts (i.e. accuracy).

After each block (8 x 2 sentences), participants were asked to rate their experienced listening effort, memory effort (i.e. how effortful it was to recall the items) and noise annoyance (i.e. how annoying was the background noise). Answers could be given based on a 13 point scale adapted from the ACALES scale (Krüger et al., 2017) via touch screen. The original ACALES scale was modified in this study by taking out the final level (noise only) because some of the speech signal was always perceivable. These values constitute the subjective behavioral data regarding the personal experience of listening effort, memory effort and noise annoyance.

The experiment included 8 conditions in a 2 x 2 x 2 design with the factors SNR (high vs low SNR), program (spatial noise processing: complex vs. simple) and device (Phonak vs. alternative device).

The conditions were presented in blocks in a randomized sequence. Each block consisted of 16 sentences. Each condition was repeated twice (i.e. 32 sentences per condition). To minimize the effort of switching hearing aids, the sequence of the factor device was not randomized but simply balanced. That is, half of the participants started with the Phonak device and the other half with the alternative device.
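The block structure described above can be sketched as follows. This is only an illustration, not the authors' presentation software: the function name, the seed handling, and the assumption that all blocks of one device are completed before switching to the other are hypothetical. The text states only that device order was balanced across participants.

```python
import itertools
import random

SNRS = ("HiSNR", "LoSNR")
PROGRAMS = ("complex", "simple")
DEVICES = ("Phonak", "alternative")
REPEATS = 2                # each condition is presented in two blocks
SENTENCES_PER_BLOCK = 16   # 8 x 2 sentences

def block_sequence(participant_index, seed=None):
    """Return the ordered list of (device, snr, program) blocks for one participant."""
    rng = random.Random(seed)
    # Balance (not randomize) device order: alternate the starting device.
    devices = DEVICES if participant_index % 2 == 0 else DEVICES[::-1]
    sequence = []
    for device in devices:
        # Assumption: all blocks of one device are completed before switching.
        blocks = [(device, snr, program)
                  for snr, program in itertools.product(SNRS, PROGRAMS)] * REPEATS
        rng.shuffle(blocks)  # randomize SNR x program order within the device
        sequence.extend(blocks)
    return sequence

seq = block_sequence(participant_index=0, seed=1)
# Two blocks of 16 sentences per condition.
sentences_per_condition = seq.count(seq[0]) * SENTENCES_PER_BLOCK
```

With 8 conditions and two blocks each, every participant completes 16 blocks in this sketch.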

The noise signal was a diffuse cafeteria noise at a constant level of 65 dB SPL played via loudspeakers positioned at 30°, 60°, 90°, 120°, 150°, 180°, 210°, 240°, 270°, 300° and 330°. A non-transparent curtain hung in front of the loudspeakers so that the participant saw only the touchscreen and not the speakers.

SNR was modified by adjusting the level of speech signal which was presented via a loudspeaker facing the participant at 0°. The level of the speech signal was adjusted individually. First, the individual speech reception threshold of 50% (SRT50) was determined (see Procedures below). Based on this individual SRT50 the high SNR (HiSNR) and low SNR (LoSNR) conditions were defined individually as follows:

· HiSNR = SRT50 + 3 dB + 4 dB

· LoSNR = SRT50 + 3 dB
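As a worked illustration of the two definitions above, the following minimal sketch derives the SNR and the speech presentation level for both conditions. It assumes that the individual SRT50 is expressed as an SNR in dB relative to the fixed 65 dB SPL noise; the function name and the example SRT50 value are hypothetical.

```python
NOISE_LEVEL_DB_SPL = 65.0   # constant cafeteria noise level (from the text)
LO_OFFSET_DB = 3.0          # LoSNR = SRT50 + 3 dB
HI_EXTRA_DB = 4.0           # HiSNR = LoSNR + 4 dB

def speech_levels(srt50_db_snr):
    """Return SNR (dB) and speech level (dB SPL) for both SNR conditions.

    srt50_db_snr: individual SRT50 as an SNR in dB (assumed convention).
    """
    lo_snr = srt50_db_snr + LO_OFFSET_DB
    hi_snr = lo_snr + HI_EXTRA_DB
    return {
        "LoSNR": {"snr_db": lo_snr, "speech_db_spl": NOISE_LEVEL_DB_SPL + lo_snr},
        "HiSNR": {"snr_db": hi_snr, "speech_db_spl": NOISE_LEVEL_DB_SPL + hi_snr},
    }

# Hypothetical participant with an SRT50 of -5 dB SNR.
levels = speech_levels(-5.0)
```

For this hypothetical participant the speech would be presented at 63 dB SPL in LoSNR and 67 dB SPL in HiSNR, so the two conditions always differ by 4 dB regardless of the individual threshold.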

Procedure

After the study procedure was described to the participants and informed consent was signed, an audiogram was recorded, hearing aids were fitted based on the manufacturers’ default fitting formulas, and participants were equipped with an EEG cap. This was followed by assessment of the individual SRT50 using the OLSA sentence matrix task (see Wagener & Kollmeier, 1999 for details) at a fixed noise level of 65 dB SPL. No EEG was recorded during the SRT50 OLSA sentence task. The simple spatial noise processing mode of the Phonak hearing aid (RES) was used during the OLSA task to obtain the SRT50. For the Word-Recall memory task, the speech level obtained for the SRT50 was increased by 3 dB in the LoSNR condition to ensure a speech identification rate of at least 90%. For the HiSNR condition, the speech level was increased by an additional 4 dB. Before the experimental session began, participants were allowed to practice the Word-Recall memory task.

EEG Data

A continuous EEG was recorded using a 24-channel wireless Smarting EEG system (mBrainTrain, Belgrade, Serbia) while participants were listening to the OLSA sentences and answering the questions. Brain activity was recorded from 24 electrode sites mounted in a custom-made elastic EEG cap (EasyCap, Herrsching, Germany) and arranged according to the International 10–20 system (Jasper, 1958). Lab Streaming Layer and Smarting Streamer 3.1 (mBrainTrain, Belgrade, Serbia) software were used to record the EEG data. The EEG was recorded at a sampling rate of 500 Hz with a low-pass filter of 250 Hz. Offline processing and analysis of the EEG data was conducted using EEGLab v.14 (Delorme & Makeig, 2014). EEG recordings were re-referenced offline to a linked left and right mastoid reference. Continuous EEG data were filtered using a 1 Hz to 30 Hz bandpass filter after applying a 50 Hz notch filter. Excessive ocular artifacts, such as eyeblinks, and other EEG artifacts were identified and removed using independent component analysis.
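To illustrate the re-referencing step only, the sketch below subtracts the mean of the two mastoid channels from every channel, sample by sample. It is not the EEGLab routine used in the study; the channel labels `M1` and `M2` and the data layout (a dictionary of sample lists) are assumptions made for the example.

```python
def rereference_linked_mastoids(data, left="M1", right="M2"):
    """Re-reference all channels to the average of two mastoid channels.

    data: dict mapping channel name -> list of samples (e.g. in microvolts).
    left, right: hypothetical labels of the mastoid channels.
    """
    # The linked-mastoid reference is the sample-wise mean of both mastoids.
    reference = [(l + r) / 2.0 for l, r in zip(data[left], data[right])]
    return {
        channel: [x - ref for x, ref in zip(samples, reference)]
        for channel, samples in data.items()
    }

# Tiny made-up recording with two samples per channel.
raw = {"C3": [10.0, 12.0], "M1": [2.0, 4.0], "M2": [4.0, 6.0]}
rereferenced = rereference_linked_mastoids(raw)
```

After re-referencing, the two mastoid channels are mirror images of each other, which is a quick sanity check that the reference was applied.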

The continuous EEG data were epoched into 2500 ms time windows around the onset of each OLSA sentence. A spectral density analysis between 3 and 25 Hz was conducted on these time windows. The focus was placed on the EEG alpha frequency band (8 – 12 Hz). Based on findings from previous studies as well as findings reported in the literature, analyses were restricted to electrodes over the left (C3, CP5, P3) and right (C4, CP6, P4) temporo-parietal cortex.
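The text does not state which spectral estimator was used, so the following is only a minimal sketch under stated assumptions: a plain periodogram (discrete Fourier transform of one mean-centered 2500 ms epoch at 500 Hz), evaluated between 3 and 25 Hz and then averaged over the alpha band. The function names are hypothetical, and in practice the band value would additionally be averaged across epochs and across the six electrodes.

```python
import cmath
import math

FS = 500        # sampling rate in Hz (from the text)
EPOCH_S = 2.5   # epoch length in seconds (from the text)

def periodogram(epoch, fs=FS, fmin=3.0, fmax=25.0):
    """Return {frequency_hz: power density} for DFT bins within [fmin, fmax]."""
    n = len(epoch)
    mean = sum(epoch) / n
    x = [v - mean for v in epoch]  # remove the DC offset
    psd = {}
    for k in range(1, n // 2):
        f = k * fs / n             # centre frequency of bin k
        if fmin <= f <= fmax:
            coeff = sum(v * cmath.exp(-2j * math.pi * k * i / n)
                        for i, v in enumerate(x))
            psd[f] = 2.0 * abs(coeff) ** 2 / (fs * n)  # one-sided density
    return psd

def band_mean(psd, lo=8.0, hi=12.0):
    """Average the spectral density over the bins between lo and hi (Hz)."""
    values = [p for f, p in psd.items() if lo <= f <= hi]
    return sum(values) / len(values)

# Synthetic check: a 10 Hz sine falls inside the alpha band, a 20 Hz sine does not.
n_samples = int(FS * EPOCH_S)
alpha_like = [math.sin(2 * math.pi * 10 * i / FS) for i in range(n_samples)]
beta_like = [math.sin(2 * math.pi * 20 * i / FS) for i in range(n_samples)]
alpha_power = band_mean(periodogram(alpha_like))
beta_power = band_mean(periodogram(beta_like))
```

With 1250 samples per epoch the frequency resolution is 0.4 Hz, so the 8 – 12 Hz band spans 11 bins in this sketch.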

Results

Behavioral Data (N = 20)

To compare the effect of spatial noise processing for each hearing aid model, the behavioral data (response accuracy, subjective listening effort, subjective memory effort, subjective noise annoyance) were analyzed using a 2 x 2 repeated measures ANOVA with the factors SNR (HiSNR vs. LoSNR) and program (complex vs. simple spatial noise processing). To compare the Phonak device with the alternative device, the behavioral data from the complex spatial noise processing modes were analyzed using a 2 x 2 repeated measures ANOVA with the factors SNR (HiSNR vs. LoSNR) and device (Phonak vs. alternative device). A Bonferroni correction was applied for multiple comparisons.
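In a 2 x 2 fully within-subject design, each main effect and the interaction has one numerator degree of freedom, and its F(1, n − 1) value equals the squared paired t statistic of the corresponding per-subject contrast. The sketch below uses this equivalence; it is not the statistics software used in the study, the scores are made up, and no p-values are computed because the Python standard library has no F distribution.

```python
import math

def paired_f(diffs):
    """F(1, n-1) of a two-level within-subject contrast, computed as t squared."""
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    t = mean / math.sqrt(var / n)
    return t * t, (1, n - 1)

def anova_2x2_within(scores):
    """scores: one dict per subject with keys (snr, program),
    snr in {'Hi', 'Lo'} and program in {'complex', 'simple'}."""
    snr, program, interaction = [], [], []
    for s in scores:
        hc, hs = s[("Hi", "complex")], s[("Hi", "simple")]
        lc, ls = s[("Lo", "complex")], s[("Lo", "simple")]
        snr.append((hc + hs) / 2 - (lc + ls) / 2)      # Hi minus Lo
        program.append((hc + lc) / 2 - (hs + ls) / 2)  # complex minus simple
        interaction.append((hc - hs) - (lc - ls))      # difference of differences
    return {"snr": paired_f(snr),
            "program": paired_f(program),
            "interaction": paired_f(interaction)}

# Made-up scores for four hypothetical subjects.
values = [(6, 5, 4, 3), (8, 6, 5, 3), (9, 5, 5, 3), (7, 6, 6, 3)]
keys = [("Hi", "complex"), ("Hi", "simple"), ("Lo", "complex"), ("Lo", "simple")]
scores = [dict(zip(keys, map(float, row))) for row in values]
result = anova_2x2_within(scores)
```

With n = 20 participants this yields the F(1,19) statistics reported for the behavioral data; with n = 18 it yields F(1,17).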

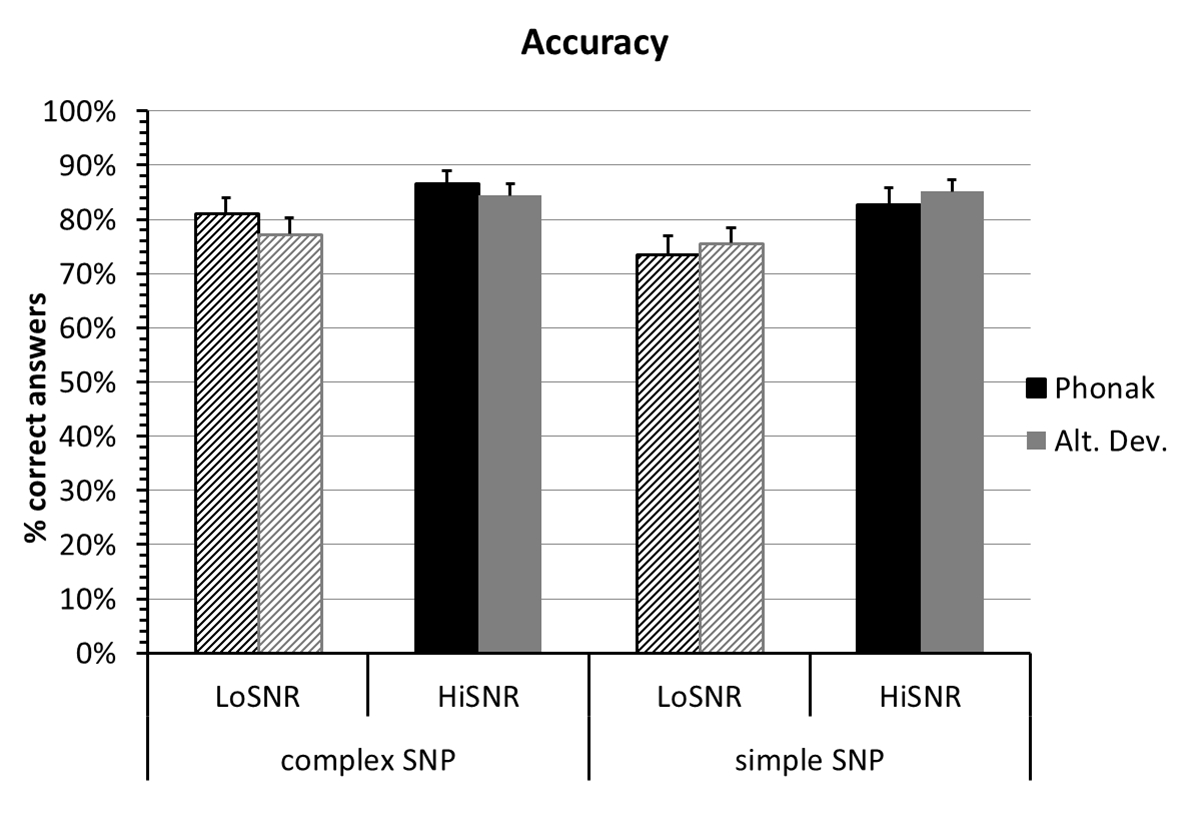

Response accuracy. As seen in figure 1, for both devices there was a main effect of SNR, with the percentage of correctly recalled items higher in HiSNR than in LoSNR (Phonak: F(1,19) = 27.09; p < .001; alternative device: F(1,19) = 9.18; p = .007). Only for the Phonak device was a significant main effect of program revealed, with accuracy higher for the complex spatial noise processing (SZ) mode than for the simple spatial noise processing (RES) mode (F(1,19) = 7.12; p = .015). The difference between the complex SZ mode and the simple RES mode corresponds to 5.8% more correctly recalled items in the directional SZ mode.

The comparison between the complex spatial noise processing modes of the Phonak and the alternative device with respect to accuracy confirmed the main effect of SNR (F(1,19) = 9.18; p = .007) but revealed no statistically significant difference between the two devices.

Figure 1. Average accuracy in percent with standard error bars for complex and simple spatial noise processing (SNP) programs for the Phonak (black) and the alternative device (grey) in high (solid) and low SNR (line hatch) conditions.

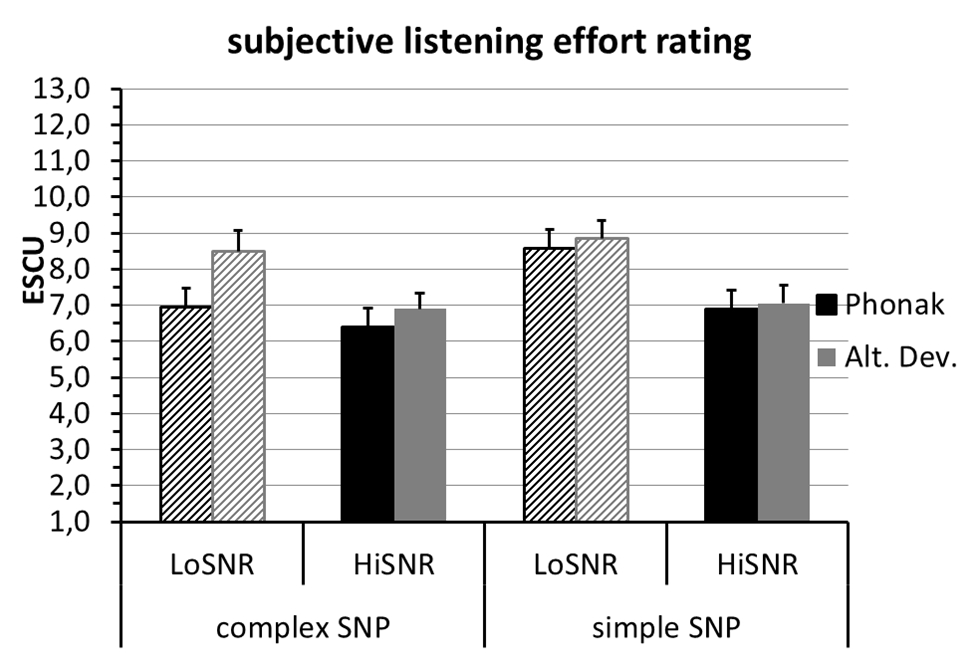

Listening effort. As shown in figure 2, for both devices there was a main effect of SNR, with listening effort under HiSNR being lower than under LoSNR conditions (Phonak: F(1,19) = 16.61; p < .001; alternative device: F(1,19) = 23.08; p < .001). Only for the Phonak device was a significant main effect of program revealed, with subjective listening effort lower for complex (SZ) than for simple (RES) spatial noise processing (F(1,19) = 8.17; p = .010). Furthermore, for the Phonak device, a significant interaction between SNR and program revealed that under poor SNR conditions (LoSNR) listening effort is significantly lower for complex (SZ) than for simple (RES) spatial noise processing (F(1,19) = 6.59; p = .019). The difference corresponds to a reduction of 18.95% in listening effort for SZ compared to RES in LoSNR (see figure 2). In good SNR conditions (HiSNR) there is no statistically significant difference in listening effort between the two modes. In other words, for the Phonak device, the benefit of the complex spatial noise processing technology for listening effort becomes more apparent when it matters most, namely in suboptimal listening environments.

The comparison between the spatial noise processing modi of the Phonak and the alternative device with respect to listening effort reveals a main effect of device (F(1,19) = 4.56; p = .046) with listening effort being lower for the Phonak device than the alternative device. This effect is modulated by a significant interaction between Device and SNR (F(1,19) = 9.32; p = .007), because this effect of device with respect to subjective listening effort is statistically significant only under LoSNR conditions. The difference corresponds to a reduction of 18.24% with respect to listening effort for the spatial noise processing algorithm in the Phonak device (SZ) compared to the spatial noise processing algorithm in the alternative device in suboptimal listening environments (LoSNR) (see figure 2).

Figure 2. Average subjective listening effort rating (estimated scaling units (ESCU)) with standard error bars for complex and simple spatial noise processing (SNP) programs for the Phonak (black) and the alternative device (grey) in high (solid) and low SNR (line hatch) conditions.

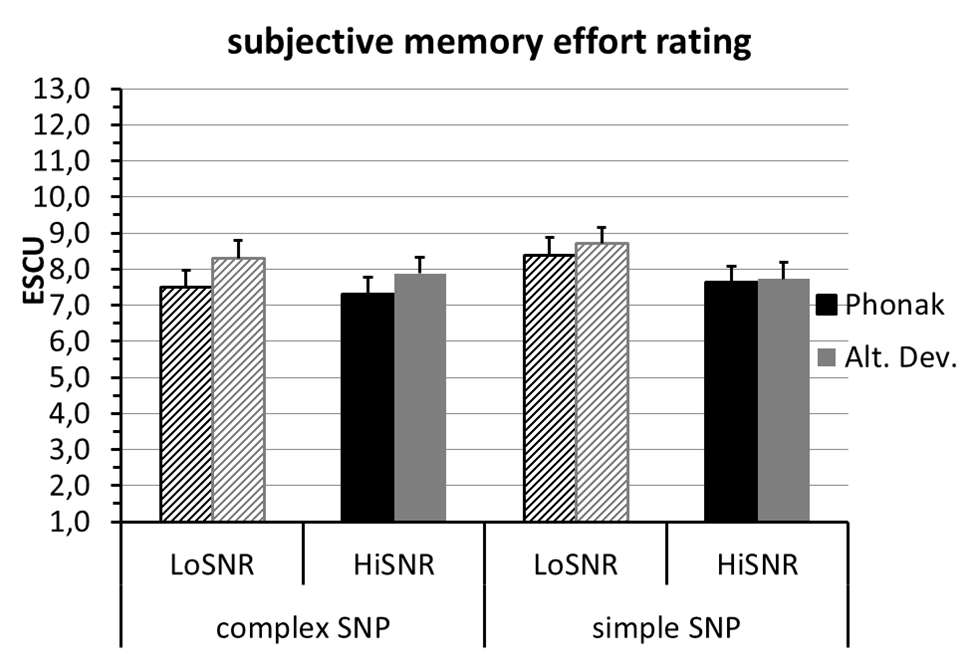

Memory effort. As shown in figure 3, for both devices there was a main effect for SNR with memory effort under HiSNR being lower than under LoSNR conditions (Phonak: F(1,19) = 6.85; p = .017; alternative device: F(1,19) = 7.67; p = .012). For the Phonak device, a trend towards a main effect of program was revealed with subjective memory effort lower for complex (SZ) than simple (RES) spatial noise processing (F(1,19) = 4.23; p = .054). Furthermore, for the Phonak device, a significant interaction between SNR and Program revealed that under poor SNR conditions (LoSNR) memory effort is statistically lower for complex (SZ) than simple (RES) spatial noise processing (F(1,19) = 4.81; p = .041). Yet, in good SNR conditions (HiSNR) there is no statistically significant difference for memory effort between the two modi. In other words, for the Phonak device, the benefit of complex, spatial noise processing technology for memory effort becomes more apparent in suboptimal environments.

The comparison between the spatial noise processing technology of the Phonak and the alternative device with respect to memory effort revealed a main effect of device (F(1,19) = 4.81; p = .041), with memory effort being lower for the Phonak device than for the alternative device. In other words, the Phonak complex spatial noise processing technology outperforms that of the alternative device with regard to memory effort.

Figure 3. Average subjective memory effort rating (estimated scaling units (ESCU)) with standard error bars for complex and simple spatial noise processing (SNP) programs for the Phonak (black) and the alternative device (grey) in high (solid) and low SNR (line hatch) conditions.

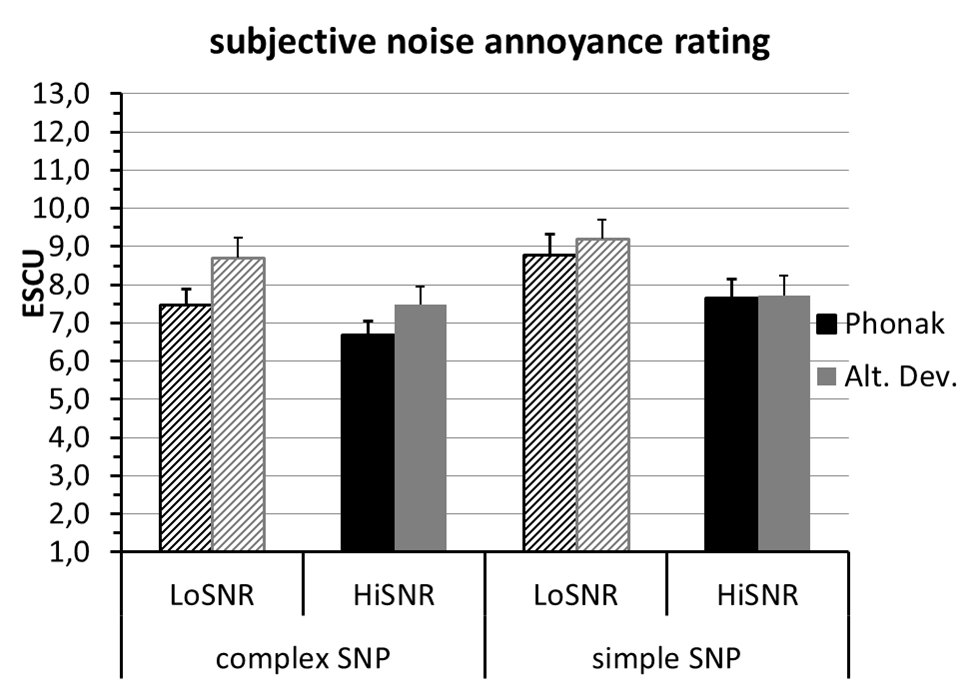

Noise annoyance. As shown in figure 4, for both devices there was a main effect of SNR, with noise annoyance under HiSNR being lower than under LoSNR conditions (Phonak: F(1,19) = 11.62; p = .003; alternative device: F(1,19) = 25.14; p < .001). For both devices there was also a main effect of program, with subjective noise annoyance lower for complex than for simple spatial noise processing (Phonak: F(1,19) = 15.89; p = .001; alternative device: F(1,19) = 6.33; p = .021).

The comparison between the spatial noise processing modi of the Phonak and the alternative device with respect to noise annoyance revealed a main effect of device (F(1,19) = 6.87; p = .017) with noise annoyance being lower for the Phonak device than the alternative device. In other words the Phonak complex spatial noise processing technology outperforms the alternative device with regards to noise annoyance.

Figure 4. Average subjective noise annoyance rating (estimated scaling units (ESCU)) with standard error bars for complex and simple spatial noise processing (SNP) programs for the Phonak (black) and the alternative device (grey) in high (solid) and low SNR (line hatch) conditions.

Correlation between rating scales. To analyze the correlation between the subjective ratings, average rating values were computed across all levels of SNR, program and device. Correlational analyses show a moderate correlation between subjective ratings of listening effort and memory effort (r(18) = .56; p = .01) and a high correlation between subjective ratings of listening effort and noise annoyance (r(18) = .85; p < .001).
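The notation r(18) gives the degrees of freedom of the correlation, n − 2, which with 20 participants is 18. The minimal sketch below, assuming a standard Pearson correlation, shows how r, its degrees of freedom and the associated t statistic relate to each other. The function names and the toy ratings are hypothetical.

```python
import math

def pearson_r(x, y):
    """Return the Pearson correlation coefficient and its degrees of freedom."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy), n - 2

def t_from_r(r, df):
    """t statistic for testing a correlation against zero."""
    return r * math.sqrt(df / (1.0 - r * r))

# Toy ratings for five hypothetical participants.
listening = [3.0, 5.0, 6.0, 8.0, 9.0]
memory = [2.0, 4.0, 7.0, 7.0, 10.0]
r, df = pearson_r(listening, memory)

# The reported listening/memory effort correlation, r(18) = .56.
t_reported = t_from_r(0.56, 18)
```

For r = .56 with 18 degrees of freedom the t statistic is about 2.87, which is consistent with the reported p = .01.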

EEG Data

For the EEG data analysis data from all participants (N = 20) were initially considered. After data inspection, data from one participant was excluded from all further analyses due to poor impedance values during recording and very noisy EEG. For two additional participants there were technical difficulties due to lost Bluetooth connection between EEG amplifier and PC. This caused data for some conditions to be lost. For one participant, data loss occurred during Phonak RES conditions. In the other participant, data loss occurred during some trials when the complex spatial noise processing mode of the alternative device was activated. This resulted in a sample size of n = 18 for all subsequent EEG data analyses.

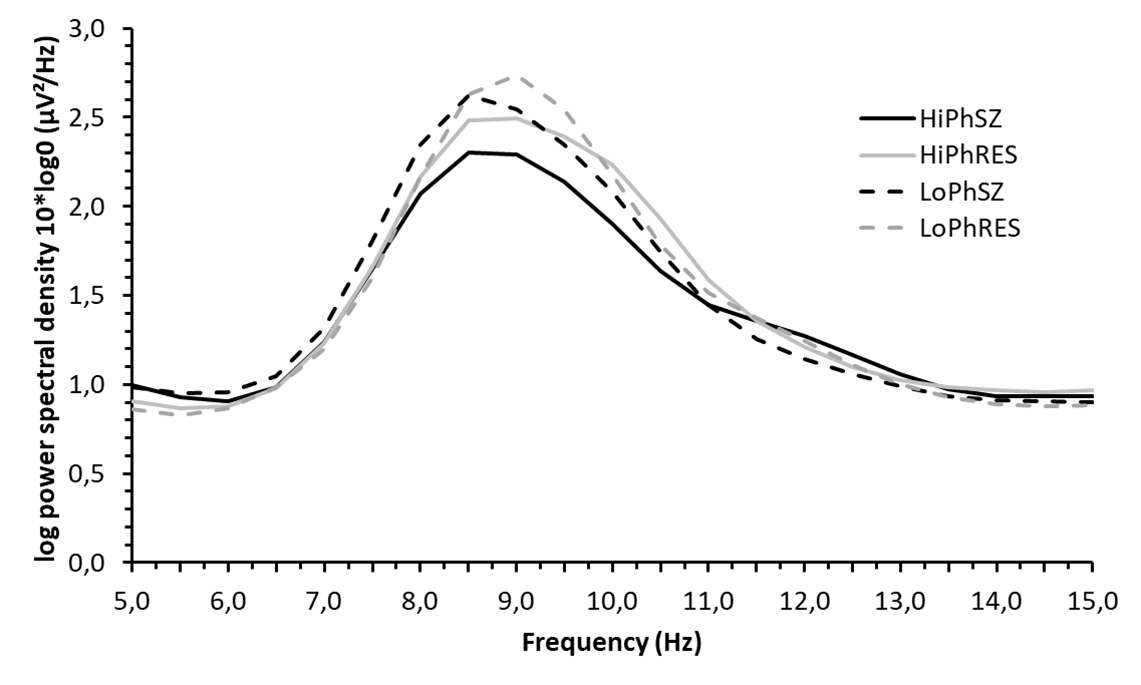

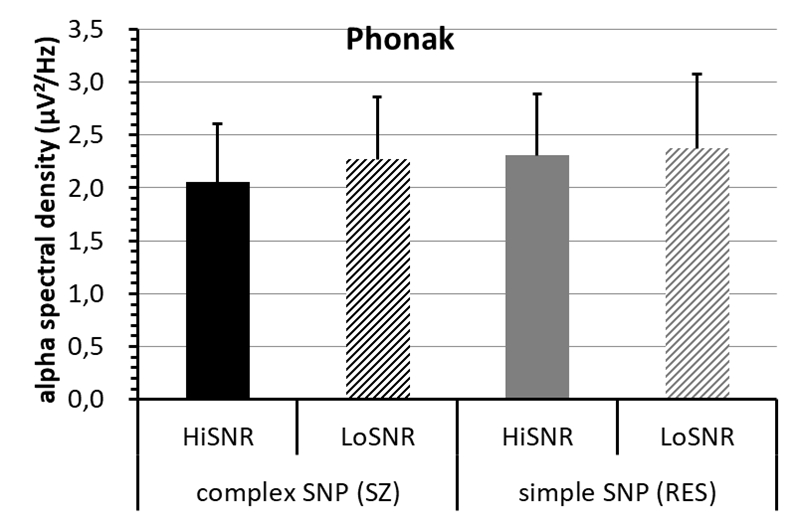

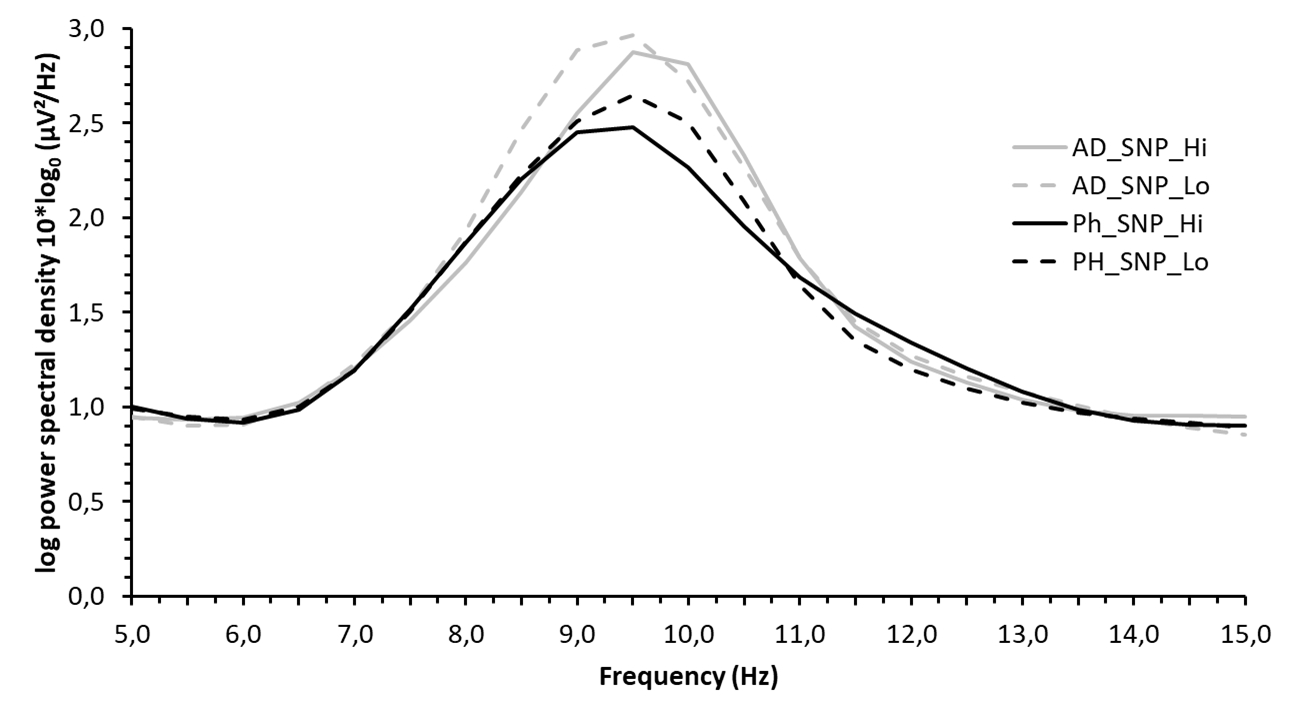

In the analysis of the alpha activity (alpha band spectral density) we included the average value of six temporo-parietal electrodes of the left (C3, CP5, P3) and right hemisphere (C4, CP6, P4) in the frequency range of 8 – 11 Hz of the alpha band spectrum where activity was most prominent (see figures 5 and 7).

Phonak – complex vs. simple spatial noise processing in LoSNR and HiSNR. EEG alpha activity (alpha spectral density) for the Phonak device was analyzed using a 2 x 2 repeated measures ANOVA with the factors SNR (HiSNR vs. LoSNR) and program (complex vs. simple spatial noise processing). A Bonferroni correction was applied for multiple comparisons.

As shown in figures 5 and 6, the analysis of alpha spectral density revealed a main effect of program (F(1,17) = 4.50; p = .049) with alpha spectral density being lower for complex (SZ) as compared to simple (RES) spatial noise processing.

Figure 5. Average spectral density values between 5 and 15 Hz (averaged across electrode sites C3, C4, CP5, CP6, P3, P4, 18 subjects and over time) for Phonak’s complex spatial noise processing technology StereoZoom (SZ; black) and simple spatial noise processing technology Real-Ear-Sound (RES; grey) in HiSNR (solid) and LoSNR (dashed). The image shows that the EEG alpha activity (8 – 11 Hz) is higher for RES than for SZ in both LoSNR and HiSNR conditions.

Figure 6. Average alpha activity (spectral density averaged across electrodes, 18 subjects, and time) with standard error bars between 8-11 Hz for Phonak’s StereoZoom with complex spatial noise processing (complex SNP (SZ), black) and Real-Ear-Sound with simple spatial noise processing (simple SNP (RES); grey) in HiSNR (solid) and LoSNR (cross-hatched).

Phonak vs. alternative device. To compare the Phonak device with the alternative device, alpha spectral density with complex spatial noise processing activated was analyzed using a 2 x 2 repeated measures ANOVA with the factors SNR (HiSNR vs. LoSNR) and device (Phonak vs. alternative device). A Bonferroni correction was applied for multiple comparisons. As seen in figures 7 and 8, the analysis of alpha spectral density values revealed a main effect of device (F(1,17) = 4.75; p = .044), with alpha spectral density being lower for the Phonak device than for the alternative device.

Figure 7. Average spectral density values between 5 and 15 Hz (averaged across electrode sites C3, C4, CP5, CP6, P3, P4, 18 subjects and time) for the Phonak (Ph; black) and the alternative device (AD; grey) in HiSNR (solid) and LoSNR (dashed) when complex spatial noise processing (SNP) modi were activated. The image shows that the EEG-Alpha activity (8-11 Hz) is higher for the alternative device than for Phonak in both LoSNR and HiSNR conditions.

Figure 8. Average alpha activity (spectral density averaged across electrodes, 18 subjects and time) with standard error bars between 8 – 11 Hz for the Phonak (black) and the alternative device (grey) in HiSNR (solid) and LoSNR (cross-hatched) when the complex spatial noise processing (SNP) modi were activated.

Discussion

The goal of the study was to evaluate spatial noise processing algorithms in hearing aids with respect to the effect on listening effort when listening to a single speaker in a diffuse noise environment. Data were collected regarding various behavioural measures including the subjective experience of listening effort, as well as physiological changes in the alpha frequency band of the EEG. Modulation in the alpha frequency band is a potential physiological indicator of listening effort or of processes that play a key role in modifying listening effort (Winneke et al., 2016 a,b). Studies using acoustic stimuli in background noise, suggest that activity of the alpha frequency band has a suppressing or inhibitory function of the interfering, irrelevant noise signal (Strauß et al., 2014). The more interfering the noise signal is, the more inhibition thereof is necessary to perceive the signal of interest (i.e. speech signal) well enough. Listening effort increases accordingly, reflected in an increase in activity in the alpha frequency band.

The first goal of the study was to compare the Phonak algorithms Real-Ear-Sound (RES; simple spatial noise processing technology that simulates the directionality of the pinna) and StereoZoom (SZ; directional microphone technology, or complex spatial noise processing) with respect to listening effort. The second goal was to compare two spatial noise processing algorithms from different manufacturers that emphasize a single speech source in diffuse noise, namely Phonak’s SZ and an alternative device, which use different complex spatial noise processing approaches.

The task of the participant was to listen to spoken sentences in diffuse cafeteria noise and complete a cognitive memory task regarding the content of the sentences. At the same time an EEG was recorded and at set intervals, participants were asked to indicate their subjectively experienced listening effort, their memory effort and the noise annoyance regarding the cafeteria noise.

Phonak: SZ vs. RES. The behavioral data show that subjectively experienced listening effort is lower (by about 19%) for the complex spatial noise processing algorithm (SZ) than for simple spatial noise processing (RES), particularly when SNR is suboptimal. Noise is also experienced as less annoying when complex spatial noise processing is activated. Interestingly, cognitive performance (i.e. the percentage of correctly recalled items) is significantly better when complex spatial noise processing is activated. This supports the conclusion that a reduction in listening effort, due to enhanced sensory processing, enables more resources to be allocated to cognitive memory processes. The subjectively experienced effort associated with the cognitive memory task is also rated lower when complex spatial noise processing is activated. Interestingly, the moderate correlation indicates that memory effort and listening effort ratings map onto related but different dimensions that participants were able to distinguish from one another. In other words, the changes in experienced listening effort cannot simply be interpreted as a by-product of the effort related to remembering words. Rather, listening effort seems to be linked to processes related to speech understanding and inhibition of irrelevant noise.

The results of the EEG data analysis (alpha spectral density) map onto the behavioral data: alpha activity is significantly lower for the complex than for the simple spatial noise processing setting, independent of SNR. The binaural, directional SZ setting applies more pronounced spatial noise processing. As a result, the speech signal is easier to understand and less listening effort has to be invested, because under SZ less of the interfering cafeteria noise has to be suppressed. This is reflected in lower alpha activity for SZ than for RES, suggesting that RES requires more inhibition of incoming, irrelevant noise.
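To make the notion of alpha spectral density more concrete, the following minimal Python sketch estimates the mean power spectral density of a single-channel signal within a frequency band and compares two synthetic conditions. It is an illustration only and not the analysis pipeline used in this study: the function name `band_power`, the 8-13 Hz band limits, the 250 Hz sampling rate, and the synthetic signals are all assumptions made for the example.

```python
import numpy as np


def band_power(signal, fs, band=(8.0, 13.0)):
    """Mean power spectral density of `signal` within `band` (in Hz).

    The 8-13 Hz default is a commonly used alpha range and is an
    assumption of this sketch, not a value taken from the study.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                         # remove the DC offset
    window = np.hanning(x.size)              # taper to reduce spectral leakage
    spectrum = np.fft.rfft(x * window)
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)

    # Periodogram normalised by sampling rate and window energy
    psd = np.abs(spectrum) ** 2 / (fs * np.sum(window ** 2))
    # Convert to a one-sided spectrum: double every bin except DC
    # (and except the Nyquist bin, which exists only for even lengths)
    psd[1:] *= 2.0
    if x.size % 2 == 0:
        psd[-1] /= 2.0

    in_band = (freqs >= band[0]) & (freqs <= band[1])
    return float(psd[in_band].mean())


# Synthetic demonstration (hypothetical data, not measured EEG):
# a 10 Hz oscillation plus noise, with a larger amplitude in one condition.
fs = 250.0                                   # assumed sampling rate in Hz
t = np.arange(int(4 * fs)) / fs              # 4 seconds of samples
rng = np.random.default_rng(0)
simple_cond = 2.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 1, t.size)
complex_cond = 1.0 * np.sin(2 * np.pi * 10 * t) + rng.normal(0, 1, t.size)

print("alpha power, simple setting :", band_power(simple_cond, fs))
print("alpha power, complex setting:", band_power(complex_cond, fs))
```

In this toy example the condition with the stronger 10 Hz component yields the larger band power, which mirrors the direction of the effect described above (higher alpha activity for the simple than for the complex setting). With real EEG data, such estimates would typically be averaged over epochs and electrodes before conditions are compared.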

Upon closer inspection of Figure 2 (listening effort rating) and Figure 6 (EEG data), listening effort in the complex spatial noise processing setting (SZ) under poor (LoSNR) conditions is, at least descriptively, comparable to listening effort in the simple setting (RES) under good (HiSNR) conditions. In other words, the benefit of binaural, directional microphone technology as implemented in SZ relative to the simple, monaural RES is comparable to an SNR improvement of 4 dB.

Phonak (SZ) vs. Alternative Device. The comparison of the complex spatial noise processing algorithms of the two devices used in this study yields results that resemble those of the comparison of Phonak’s SZ and RES described in the previous section. Behavioral data show that subjective listening effort is lower for Phonak’s SZ than for the complex spatial noise processing algorithm of the alternative device, particularly in suboptimal listening conditions (LoSNR), and that the cafeteria noise is experienced as less annoying independent of SNR. The EEG data analysis (alpha spectral density) reveals significantly lower alpha activity for SZ than for the alternative device, independent of SNR. This could indicate that the complex spatial noise processing in SZ has a larger, more focal effect than the algorithm implemented in the alternative device. This in turn would explain why listening effort, both subjectively and objectively as measured by EEG, is smaller for Phonak’s SZ.

Interestingly, there are no significant differences between the spatial noise processing settings of the two devices with respect to performance (i.e., the percentage of correctly recalled words). The elevated listening effort for the alternative device might indicate compensatory processes that are necessary to maintain a good level of performance. In other words, when using the alternative device, participants have to “invest” more (neural) resources to perform at the same level as when using the binaural, directional spatial noise suppression algorithm SZ. This increase in investment reveals itself as an increase in listening effort, subjectively as well as neurophysiologically.

Conclusion

The data presented here indicate that hearing aid algorithms that facilitate directional hearing reduce listening effort at both a subjective and an objective level in tasks that require understanding speech from a single source (i.e., a single talker) in a diffuse noise environment. Different manufacturers implement different approaches to spatial noise processing, and the present results show that these approaches differ with respect to listening effort. Most likely this has to do with the degree of noise suppression. An open approach can be beneficial because peripheral information can still enter the auditory system. The disadvantage is that more noise comes in as well, which in turn has to be suppressed. This requires effort and (neural) resources and, over an extended period of time, can lead to fatigue.

The results of this study underline that EEG is a promising tool for quantifying changes in listening effort and for evaluating the effect of hearing technologies even when speech intelligibility is at or close to ceiling. Changes in listening effort were observed not only on a subjective scale; the EEG data analysis also indicates a reduction of listening effort at a neurophysiological level. This reduction is reflected in a reduction in EEG alpha activity, which is assumed to function as a noise suppression mechanism at the neural level. A reduction in the activity used to suppress incoming noise fits well with the general idea of listening effort, because it reflects a reduction in the resources allocated to dealing with irrelevant noise in order to understand a target signal of interest (Pichora-Fuller et al., 2016). Subjective ratings of the experienced noise annoyance support this interpretation, because the algorithms associated with higher listening effort scores, subjective and objective, also produced higher noise annoyance values.

Acknowledgments

The authors would like to thank Müge Kaya from Hörzentrum Oldenburg GmbH for her support in conducting the experiments.

Conflict of Interest Declaration

The authors declare that there is no conflict of interest. However, please note that the hearing aid algorithm tested in the current study is integrated in commercially available hearing aids. The study received some financial support from Sonova AG, and one of the co-authors (ML) is employed by Phonak AG.

References

Appleton, J., and König, G. (2014). Improvement in speech intelligibility and subjective benefit with binaural beamformer technology. Hearing Review, 21(11), 40-42.

Bernarding, C., Strauss, D.J., Hannemann, R., Seidler, H., and Corona-Strauss, F. I. (2014). Objective assessment of listening effort in the oscillatory EEG: Comparison of different hearing aid configurations. In Conf Proc IEEE Eng Med Biol Soc, 36th Annual International Conference of the IEEE, pp. 2653-2656.

Committee on Hearing and Bioacoustics. (1988). Speech understanding and aging. J Acoust Soc Am, 83, 859–895.

Delorme, A., and Makeig, S. (2004). EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics. J Neurosci Methods, 134, 9-21.

Edwards, B. (2007). The future of hearing aid technology. Trends Amplif, 11, 31–45.

Foxe, J. J., and Snyder, A. C. (2011). The role of alpha-band brain oscillations as a sensory suppression mechanism during selective attention. Front Psychol, 2, 154.

Fraser, S., Gagné, J. P., Alepins, M., and Dubois, P. (2010). Evaluating the effort expended to understand speech in noise using a dual-task paradigm: The effects of providing visual speech cues. J Speech Lang Hear Res, 53(1), 18-33.

Holube, I., Fredelake, S., Vlaming, M., and Kollmeier, B. (2010). Development and analysis of an international speech test signal (ISTS). Int J Audiol, 49, 891-903.

Jasper, H. H. (1958). The ten-twenty electrode system of the International Federation. Electroencephalogr Clin Neurophysiol, 10, 371–375.

Just, M. A., and Carpenter, P. A. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychol Rev, 99, 122–149.

Kahneman, D. (1973). Attention and Effort. Englewood Cliffs, NJ: Prentice-Hall.

Kalbe, E., Kessler, J., Calabrese, P., Smith, R., Passmore, A. P., Brand, M. A., and Bullock, R. (2004). DemTect: a new, sensitive cognitive screening test to support the diagnosis of mild cognitive impairment and early dementia. International Journal of Geriatric Psychiatry, 19(2), 136-143.

Klimesch, W., Sauseng, P., and Hanslmayr, S. (2007). EEG alpha oscillations: the inhibition-timing hypothesis. Brain Res Rev, 53, 63–88.

Klink, K. B., Schulte, M., and Meis, M. (2012). Measuring listening effort in the field of audiology – a literature review of methods, part 1. Audiological Acoustics, 51(2), 60-67.

Krueger, M., Schulte, M., Brand, T., and Holube, I. (2017). Development of an adaptive scaling method for subjective listening effort. The Journal of the Acoustical Society of America, 141(6), 4680-4693.

LabStreamingLayer: code.google.com/p/labstreaminglayer

McCoy, S. L., Tun, P. A., Cox, L. C., Colangelo, M., Stewart, R. A., and Wingfield, A. (2005). Hearing loss and perceptual effort: Downstream effects on older adults’ memory for speech. Q J Exp Psychol A, 58(1), 22-33.

McGarrigle, R., Munro, K. J., Dawes, P., Stewart, A. J., Moore, D. R., Barry, J. G., and Amitay, S. (2014). Listening effort and fatigue: what exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group 'white paper'. Int J Audiol, 53(7), 433-440.

Obleser, J., Wöstmann, M., Hellbernd, N., Wilsch, A., and Maess, B. (2012). Adverse listening conditions and memory load drive a common alpha oscillatory network. J Neurosci, 32(36), 12376-12383.

Pichora-Fuller, M. K., and Singh, G. (2006). Effects of age on auditory and cognitive processing: implications for hearing aid fitting and audiologic rehabilitation. Trends Amplif, 10(1), 29-59.

Pichora-Fuller, M. K., Kramer, S. E., Eckert, M. A., Edwards, B., Hornsby, B. W., Humes, L. E., ... and Naylor, G. (2016). Hearing impairment and cognitive energy: the Framework for Understanding Effortful Listening (FUEL). Ear Hear, 37, 5S-27S.

Picou, E. M., Aspell, E., and Ricketts, T. A. (2014). Potential benefits and limitations of three types of directional processing in hearing aids. Ear and Hearing, 35(3), 339-352.

Rabbitt, P. M. (1968). Channel-capacity, intelligibility and immediate memory. Q J Exp Psychol, 20, 241–248.

Schneider, B.A., and Pichora-Fuller, M.K. (2000). Implications of perceptual deterioration for cognitive aging research. In Craik, F.I.M., and Salthouse, T.A. (Eds.), Handbook of aging and cognition (2nd ed.). Mahwah, NJ: Lawrence Erlbaum, pp. 155–219.

Segalowitz, S. J., Wintink, A. J., and Cudmore, L. J. (2001). P3 topographical change with task familiarization and task complexity. Cogn Brain Res, 12, 451–457.

Strauss, D. J., Corona-Strauss, F. I., Trenado, C., Bernarding, C., Reith, W., Latzel, M., and Froehlich, M. (2010). Electrophysiological correlates of listening effort: neurodynamical modeling and measurement. Cogn Neurodynamics, 4(2), 119-131.

Strauß, A., Wöstmann, M., and Obleser, J. (2014). Cortical alpha oscillations as a tool for auditory selective inhibition. Front Hum Neurosci, 8 (350), 1-7.

Wagener, K., Brand, T., and Kollmeier, B. (1999). Entwicklung und Evaluation eines Satztests für die deutsche Sprache II: Optimierung des Oldenburger Satztests. Zeitschrift für Audiologie, 38, 44-56.

Winneke, A.H., and Phillips, N.A. (2011). Does audiovisual speech offer a fountain of youth for old ears? An event-related brain potential study of age differences in audiovisual speech perception. Psychol Aging, 26(2), 427-438.

Winneke, A., Meis, M., Wellmann, J., Bruns, T., Rahner, S., Rennies, J., Wallhoff, F., and Goetze, S. (2016). Neuroergonomic assessment of listening effort in older call center employees. In Zukunft Lebensräume Kongress, April 20-21, Frankfurt. Berlin: VDE Verlag GmbH, 327-332.

Winneke, De Vos, Wagener, Latzel, Derleth, Appell, Wallhoff (2016). Reduction of listening effort with binaural algorithms in hearing aids: an EEG Study. 43rd Annual Scientific and Technology Meeting of the American Auditory Society, March 3 – 5, Scottsdale, AZ.

Yanz, J. L. (1984). The application of the theory of signal detection to the assessment of speech perception. Ear Hear, 5 (2), 64–71.

Zekveld, A. A., Kramer, S. E., and Festen, J. M. (2010). Pupil response as an indication of effortful listening: The influence of sentence intelligibility. Ear Hear, 31(4), 480-490.

Zokoll, M.A., Wagener, K.C., Brand, T., Buschermöhle, M., and Kollmeier, B. (2012). Internationally comparable screening tests for listening in noise in several European languages: The German digit triplet test as an optimization prototype. Int J Audiol, 51(9), 697-707.

Citation

Winneke, A., Schulte, M., Vormann, M., Latzel, M. (2018). Spatial noise processing in hearing aids modulates neural markers linked to listening effort: an EEG study. AudiologyOnline, Article 23858. Retrieved from https://www.audiologyonline.com