Editor's note: This is a transcript of the live expert e-Seminar presented on 2/23/11.

Introduction

Two of the most important technologies used in modern hearing aids are directionality and noise reduction. This discussion will review these two technologies in the context of how they are designed to work in realistic situations. All modern hearing aids have some variation of directionality and noise reduction, yet patients who are fit with these technologies don't always have an accurate understanding of how these systems are designed to work.

Basic Operating Principles

To start, it is important to review the basic operating principles of these systems as they are normally applied in modern hearing aids, so that everyone is working from the same foundation. This way, professionals using these technologies can frame them appropriately, both for the patient and in their own minds. It is even more important that the patient understands how these technologies work, when they work well, when they don't, and how to make the best use of them. At the end of the day we all share the same goal, which is for patients to function as effectively as possible with the hearing they have, but there are limits to how much noise reduction and directional technologies can help a patient in complex listening situations.

These technologies are extremely valuable to have in hearing aids; however, it is important to know the limits to avoid patient dissatisfaction. If the patient understands how well these systems can work but also when they will run into some limitations, then they will be able to better focus on the situations where they do work appropriately.

The first expectation issue often stems from what we consider "noise". What an audiologist uses as noise in clinical testing is certainly not what noise is from the patient's perspective out in the real world. When we talk about speech understanding in noise, we are referring to word or sentence testing under headphones or in a controlled soundfield with a very stable background noise. One stable noise we use a lot, clinical babble, is not a true representation of multiple people talking. It is a representation of speech designed to be very stable, which is not what talking in the presence of other talkers typically sounds like. Even further removed from reality is speech-shaped noise, which is white noise spectrally shaped to resemble speech. In either case, when we test speech understanding in noise clinically, we are not testing under conditions that replicate the real world. Right from the start, our notion of how well a patient handles noise is based on their signal-to-noise ratio requirement, or on how they perform on the HINT or QuickSIN. Signal-to-noise ratio loss is useful for describing the distortional aspect of the patient's hearing loss, but it does not necessarily predict how well the patient will do in realistic complex environments. This reality check has to be the starting point of this discussion.

Complex Environments

I prefer to talk about speech understanding in complex environments instead of speech understanding in noise. I want to make sure that the conversation starts with a much more realistic description of the challenge that the patient actually faces. What do complex environments mean? Complex environments can include multiple talkers; movement; stable, non-speech sources; unstable, non-speech sources; distractions; shifting focus; or a little bit of everything.

Typically, patients will talk about environments with multiple talkers. The patient is trying to have a conversation with one or more people while other conversations are going on in the same space, as at a party or in a restaurant where the competition is itself speech. The fact that the competition is speech-based is very important, because it strongly affects how noise reduction systems react.

To make an environment even more complex, sound sources often move: either what you're trying to listen to or the competition. Directional systems depend heavily on a spatial separation between what you're listening to and what you're trying to ignore, and they can be compromised if what you're trying to ignore moves. Imagine being at the mall, trying to have a conversation with someone while other sound sources move past you. Movement like this is very relevant to how noise reduction and directionality systems will function.

Sometimes the competition is a stable, non-speech noise source, such as an air conditioner blowing or a fan running. Sounds that are stable in both location and spectrum can be handled in a certain way by noise reduction technology. Often, however, noise is not stable. Traffic noise is a very good example of an unstable, non-speech source whose spectrum, intensity, and location may change all the time. Stable and unstable non-speech sources present two different challenges.

In a realistic environment, there might be distractions such as sounds coming on and off, visual distractions, and/or situational distractions. A good example of this is having a conversation when you're driving in a car. Depending on the level of traffic, you sometimes have to pay more attention to the conversation or more attention to the act of driving. That distraction and having to shift focus can alter the person's ability to understand the conversation.

Another complex situation is when you're not listening to any one person in particular. A good example is the airport. Let's say you are watching CNN on a TV monitor at the airport when a boarding announcement comes over the loudspeaker. In this case, the signal of interest, what is important to you, changes from moment to moment.

When you talk about the challenge of noise abatement technology, which is a term I use to describe both noise reduction and automatic directionality together, you need to keep in mind the fact that what you want to listen to may change. The reality is that in truly complex situations in which people find themselves all the time, the noise could be a little bit of everything. There can be both non-speech and speech competition going on with possible distractions, movement, and a shift in focus. All of these things could be going on at once, and it is a very complex situation for the hearing aids.

To better illustrate how challenging the situation can be, I want to walk you through a few situational photographs.

Situational Photograph - kitchen scene

In this first example, let's imagine the older gentleman is wearing hearing aids with noise abatement technologies and is having a conversation with his grandchildren at the kitchen island. Perhaps he is trying to listen to the children going through the photo album and suppress the conversation going on in the background between his wife and his son-in-law. To make things more complex, his daughter is also trying to have a conversation with him. Or maybe he wants to hear the conversation between his wife and son-in-law, and he's trying to ignore the chopping sounds his daughter is making on the cutting board in front of him and the chatter of his two grandchildren as they go through the album. Here is a case where there can be two or three major noise sources in the room. What happens if the dishwasher or the kitchen faucet is running at the same time? Any of these sound sources can be active at a given moment, and his job will be to pay attention to whichever part of the speech signal matters at that point in time.

The idea that one fixed technology can handle any kind of competition is a bit mistaken. Let's imagine for a moment that the person who wears amplification is the younger woman on the right-hand side of the photograph. She's trying to have a conversation with someone sitting at the kitchen table, and her conversation competes with the activity in the kitchen. This is an extremely challenging situation for a person with hearing loss and an extremely challenging situation for a noise abatement technology to handle, but it is also a very realistic situation.

Situational Photograph - restaurant scene

The second photograph is a busy restaurant scene with small pedestal tables placed closely together. A person with normal hearing could probably have a conversation in this situation without too much trouble. A person with sensorineural hearing loss is going to be at an extreme disadvantage in a situation like this. However, they see people with normal hearing handling the situation, and they want to function as well as a normal-hearing person would. This is the goal, but it is very challenging for technology to meet.

Situational Photograph - classroom

In the third example, imagine the teacher is wearing amplification. She is trying to monitor several different conversations or inputs from the children in the classroom at any given time. It is important for her to be aware of many different directions. This is another situation where the listening environment can be very complex.

How Does the Brain Get the Job Done?

Before discussing hearing aid technology, I want to review how the brain handles complex listening environments. The way the human cognitive system works is different from how a noise abatement system works. When a person with normal hearing is in a complex environment, nothing gets subtracted away. The person takes in all of the information, and the brain sorts it into different sound sources. It then decides which sound source to pay attention to and ignores the rest. In other words, the cognitive system does noise abatement by taking in all the information, organizing it, and then selecting the part that the listener wants to pay attention to.

This is a very different approach from the technologies we currently use in hearing aids. Hearing aids are subtractive. If you have directionality or noise reduction in your hearing aid, those systems work by trying to subtract the signals you don't want to listen to. They try to move functional performance as close to normal as possible, but they do it in a way that operates very differently from how the brain normally operates.

It is important to recognize the functional difference in the way these systems work. It is also important to recognize that for people with hearing loss, their gold standard for hearing better is normal hearing. They can see the way normal hearing individuals operate. Perhaps they remember how they used to be able to operate when their hearing was better. They know they have a hearing loss now, but they still want technology that allows them to operate as effectively as individuals with normal hearing. As audiologists, we know hearing aids are not going to close the gap entirely, but it is also important to recognize how the normal system works in comparison.

Noise Reduction

Although specific manufacturers have different names and terms for their technology, most noise reduction strategies work in very similar ways, at least in very broad strokes. In this discussion, I'm going to be talking in very broad strokes.

The first goal of noise reduction is to remove the noise and leave the speech signal. That's the goal; however, that's not what happens with noise reduction as currently applied in hearing aids. That's a simple reality that professionals may have come to terms with, but most patients don't understand it. Most patients' family members don't understand it either, because they don't appreciate just how disruptive noise can be for a person with sensorineural hearing loss.

The real advantage of current noise reduction technology in hearing aids is in improving acceptability and reducing annoyance when noise is present. This distinction matters because the benefit itself is real and important. There's no doubt that noise reduction in the hearing aid is a very good thing in situations with a lot of background noise. This is especially true for very stable background noise, which can be less overwhelming, loud, disruptive, or annoying when the noise reduction technology is operating.

Again, noise reduction is not doing the basic job of removing the noise and leaving the speech. Any amount of reduction it does to the signal to reduce the noise is also going to take some speech information along with it. This doesn't mean that noise reduction isn't a good thing in hearing aids; it just means that it works in a different way than patients may expect it to work. It is the audiologist's job to make sure that this message gets across. This has been true for as long as we have had noise reduction in hearing aids, but every patient who is new to the hearing aid process typically walks in with this misconception. So, it is important for the professional to try to clean up this misconception.

We are not the only industry that wants to see better noise reduction in technology. There are a lot of industries, including the military, cell phone industry, etc. with a lot of money and smart engineers who would like to have circuitry that can remove the noise and leave the speech. They cannot solve the problem either, so we are not alone in tackling this problem. The ability to take a mixed signal, find the speech, and get rid of the noise is fundamentally very complex. As mentioned before, the normal cognitive system handles noise not by getting rid of anything, but organizing it and deciding what part to pay attention to. Creating a computer-based machine to do that is a very challenging problem that no industry has yet been able to accomplish in an effective way.

One of the reasons for consumers' misconceptions about noise reduction is advertising from Bose or other companies. There is a Bose ad for noise reduction headphones that states its system, "actively monitors and counteracts unwanted noise and reduces it before it reaches your ears." This statement is a perfectly accurate description of the way noise reduction headsets work. However, patients and family members want to know why hearing aids can't work that well. They might even come to you and ask why Bose can solve the problem and hearing aids can't. We can't solve the problem for a very fundamental reason, and we should be able to explain this to a patient.

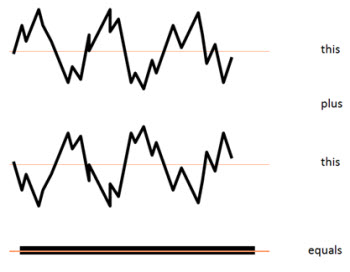

Noise reduction headsets work by using basic phase cancellation, meaning they invert the noise waveform. If you have a picture of the exact ongoing waveform of the noise, and if that picture is completely independent of the signal you're trying to listen to, then you can subtract out the noise by inverting the noise waveform. For this to happen, you need an independent and complete ongoing image of the noise; it is not enough to know just the spectral region of the noise. If you do have an independent and complete waveform, you can take that signal and flip it 180° out of phase, so you have exactly the same signal, just flipped. When the flipped signal is added back to the original signal, you end up with nothing: the signal cancels.
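The flip-and-add idea can be sketched in a few lines of Python. This is a minimal illustration, assuming a complete sampled copy of the noise is available; a 500 Hz tone at an assumed 16 kHz sample rate stands in for the noise, and the function names are hypothetical.

```python
import math

def invert(signal):
    """Flip a waveform 180 degrees out of phase by negating every sample."""
    return [-s for s in signal]

def add(a, b):
    """Sum two equally long waveforms sample by sample."""
    return [x + y for x, y in zip(a, b)]

FS = 16000  # assumed sample rate in Hz
# 10 ms of a 500 Hz tone standing in for the noise waveform.
noise = [math.sin(2 * math.pi * 500 * n / FS) for n in range(160)]

residual = add(noise, invert(noise))
print(max(abs(s) for s in residual))  # 0.0: the noise cancels completely
```

The cancellation here is exact only because the code starts from a complete, separate copy of the noise waveform.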

An Example of Phase Cancellation

In headphones, the signal that you want to hear, the clean signal, comes through the wire plugged into your mp3 player, computer, airplane armrest, etc. There is a clean image of the desired signal going directly to the listener's ear canal. In addition, unwanted noise can leak around the ear cup of the headphones to the listener's ear. To counter this, noise reduction headphones have a monitoring microphone on the outside of the ear cup that takes an exact measure of the noise in the environment. The headset then takes that noise waveform, flips it 180° out of phase, and adds it to the signal in the ear cup to cancel out the noise.

With this system, wearers have three signals in their ear canal. They have the original signal they want to listen to, coming in on the wire; they have the noise leaking around the ear cup; and they have another copy of that leaking noise that is 180° out of phase. When you add the two noise signals, the original noise plus its 180° inverted copy, the noise is cancelled and you are left with the clean signal. This works because the signal is totally independent of the noise leaking around the ear cup.

Phase cancellation is a very effective technology. We just cannot use it in hearing aids for noise reduction because the hearing aid never gets to see the noise and speech independently. By the time the signals get to the hearing aid they are already mixed. Unfortunately, patients can be confused, because they want hearing aids to work the same way as noise reduction headphones.
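The contrast between the headset and the hearing aid can be sketched with two hypothetical stand-in tones: a 1 kHz tone plays the wanted signal and a 300 Hz tone plays the noise, at an assumed 16 kHz sample rate. This is an illustration of the principle only, not a model of any real headset or hearing aid.

```python
import math

FS = 16000  # assumed sample rate in Hz
N = 320     # 20 ms of signal

# Hypothetical stand-ins: a 1 kHz tone for the wanted signal, a 300 Hz tone for the noise.
speech = [math.sin(2 * math.pi * 1000 * n / FS) for n in range(N)]
noise = [0.8 * math.sin(2 * math.pi * 300 * n / FS) for n in range(N)]

def rms(x):
    """Root-mean-square amplitude of a waveform."""
    return math.sqrt(sum(s * s for s in x) / len(x))

# Headphones: the clean signal arrives on the wire, noise leaks around the ear cup,
# and the outside microphone supplies an independent copy of that noise to invert.
reference = list(noise)
ear_canal = [s + v - r for s, v, r in zip(speech, noise, reference)]
headphone_error = rms([e - s for e, s in zip(ear_canal, speech)])

# Hearing aid: the microphone only ever sees speech and noise already mixed, so the
# only waveform available to invert is the mixture itself, which removes everything.
mixture = [s + v for s, v in zip(speech, noise)]
hearing_aid_out = [m - m for m in mixture]

print(headphone_error < 1e-12, rms(hearing_aid_out))  # True 0.0
```

With an independent reference, the speech survives untouched; with only the mixture to work from, inverting it cancels the speech along with the noise.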

In contrast to headphones, hearing aids use single channel noise reduction. Here, "channel" does not mean a frequency channel in the hearing aid sense. Single channel noise reduction is a term used in the signal processing literature for noise reduction technologies that work from a single mixed signal of noise and speech and try to remove the noise. This is the difficult problem that no industry can solve effectively.

The trick to single channel noise reduction is that you have to know when and where to subtract signals. Theoretically, in a certain frequency region you drop gain or you drop the signal level. What you're trying to do is capture that moment in that frequency region where it is just noise and not speech. The problem with this approach is that speech is a broadband signal. The frequency spectrum of a speech signal jumps around all the time. Every phoneme has a different pattern of energy that covers different parts of the frequency spectrum, so every 10, 20, 30, or 40 milliseconds you have a different spectral pattern of the speech signal. This makes the subtractive approach in hearing aids very challenging. The signal you are trying to protect is jumping around all the time so it is hard to know exactly when and where to subtract out the signal. The speech signal could happen at any time, anywhere across the spectrum of the hearing aid bandwidth, and it is very difficult to predict.

Subtractive approaches work much better if the noise has a limited bandwidth, for example, if you're listening to speech and you have an ongoing noise that is relatively narrowband but at a high level. If it is a half octave or even an octave wide, you can drop gain in that region to drop the noise signal out of the spectrum. You will also take out whatever speech happens to fall in that region, but there's enough other speech across the spectrum that cognitively you don't miss it that much.
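The narrowband case can be illustrated with a toy table of band levels. All numbers below are hypothetical, chosen only to show the trade-off: an 8-channel aid, speech spread across all bands, and a loud, narrowband noise near 2 kHz. The helper `drop_gain` is likewise a hypothetical name.

```python
# Hypothetical long-term band levels in dB for an 8-channel hearing aid (illustrative only).
bands_hz = [250, 500, 1000, 1500, 2000, 3000, 4000, 6000]
speech_db = [60, 62, 58, 55, 52, 50, 48, 45]
noise_db = [30, 30, 30, 30, 75, 30, 30, 30]  # loud, narrowband noise near 2 kHz

def drop_gain(levels, band, cut_db):
    """Return a copy of the band levels with the gain lowered in one band."""
    out = list(levels)
    out[band] -= cut_db
    return out

# Pick the band where the noise exceeds the speech by the largest margin.
noisy = max(range(len(bands_hz)), key=lambda i: noise_db[i] - speech_db[i])
speech_after = drop_gain(speech_db, noisy, 20)
noise_after = drop_gain(noise_db, noisy, 20)

untouched_speech_bands = sum(a == b for a, b in zip(speech_db, speech_after))
print(bands_hz[noisy], noise_after[noisy], speech_after[noisy], untouched_speech_bands)
# 2000 55 32 7
```

The speech in the 2 kHz band drops by the same 20 dB as the noise, which is exactly the cost described above, but the other seven bands still carry the speech.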

Noise reduction for very band-limited, stable, non-speech noises works well. The problem is that noise is often other speech signals, which are broadband and unpredictable. With competing speech, you don't know where in the spectrum the speech you want to hear is relative to the speech you are trying to ignore. Therefore, using a subtractive approach to noise reduction when the competition is speech will provide little if any ongoing benefit to the patient.

One misinterpretation people have about noise reduction is the difference between identifying or classifying signals and separating them. In the case of a mixed speech-plus-noise signal, a hearing aid can identify, region by region across frequency, when a particular channel is dominated by a speech signal or by a stable non-speech signal. However, just because the hearing aid can identify that doesn't mean it can effectively separate the two signals. Noise reduction systems can be relatively good at finding non-speech noise signals, but that doesn't mean they can separate those signals from the ongoing speech; these are two different functions. I may know that there's peanut butter in my cookie, but that doesn't mean I can take the peanuts out before I eat the cookie if I don't like peanuts. Identifying the presence of something is not the same thing as removing it.

Most hearing aid noise reduction systems are based in some way, shape, or form on modulation detection. Modulation detection is an evaluation of the intensity changes of a signal over time. Amplitude variations in speech change many times per second. The syllable structure of speech is such that we produce three to five syllables per second. Each syllable has a relatively high level stressed vowel or unstressed vowel with lower level consonant sounds, pauses, or gaps in the signal. Speech has an up and down pattern of energy that changes over time.
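A crude way to see the difference in modulation is to measure short-term levels over 10 ms frames and look at how far they swing. This sketch assumes a simplified "speech" made of a 1 kHz carrier whose envelope rises and falls four times per second (roughly four syllables), and a "fan" made of the same carrier with a steady envelope. Real modulation detectors are more elaborate; the function names are hypothetical.

```python
import math

FS = 16000     # assumed sample rate in Hz
FRAME = 160    # 10 ms analysis frames

def frame_levels_db(x, frame=FRAME):
    """Short-term RMS level of each frame, in dB re 1."""
    levels = []
    for start in range(0, len(x) - frame + 1, frame):
        chunk = x[start:start + frame]
        rms = math.sqrt(sum(s * s for s in chunk) / frame)
        levels.append(20 * math.log10(rms))
    return levels

def modulation_range_db(x):
    """Spread between the loudest and quietest frame: a crude modulation measure."""
    levels = frame_levels_db(x)
    return max(levels) - min(levels)

t = [n / FS for n in range(FS)]  # one second
carrier = [math.sin(2 * math.pi * 1000 * ti) for ti in t]
# Speech-like: the envelope swings between 0.1 and 1.0 four times per second.
speech_like = [c * (0.55 + 0.45 * math.sin(2 * math.pi * 4 * ti)) for c, ti in zip(carrier, t)]
# Fan-like: the same carrier with a steady envelope.
steady = list(carrier)

print(round(modulation_range_db(speech_like), 1), round(modulation_range_db(steady), 1))
```

The speech-like signal swings by close to 20 dB from frame to frame, while the steady signal barely moves, which is the kind of contrast modulation detection relies on.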

In contrast, environmental noise, like the blow of an air conditioner, can be very stable.

Its intensity pattern may change over time, but in a very different way from speech. The noise may be a stable, white-noise-like signal with some peak-to-peak variation, but without the up-and-down movement of a clean speech signal.

Most noise reduction systems in hearing aids employ some variation of modulation detection that divides the signal into different channels and looks at the amplitude pattern within that channel. If the amplitude pattern has a lot of variation, then the system assumes that the channel is dominated by a speech signal. If the amplitude pattern looks more stable, the assumption is that the signal is predominantly non-speech, and it will reduce gain in that particular frequency region.
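The per-channel decision can be sketched as a simple rule. The threshold, the maximum cut, and the linear mapping below are all hypothetical values chosen for illustration, not any manufacturer's parameters.

```python
def noise_reduction_gains(modulation_db, threshold_db=10.0, max_cut_db=12.0):
    """
    Hypothetical rule: channels whose envelope swings less than threshold_db are
    treated as noise-dominated and get a gain cut that grows as modulation shrinks.
    Returns one gain change in dB per channel.
    """
    gains = []
    for depth in modulation_db:
        if depth >= threshold_db:
            gains.append(0.0)  # strongly modulated: assume speech, leave gain alone
        else:
            cut = max_cut_db * (threshold_db - depth) / threshold_db
            gains.append(-cut)  # weakly modulated: assume steady noise, reduce gain
    return gains

# Illustrative per-channel modulation depths (dB): a steady fan dominates the two low channels.
print(noise_reduction_gains([2.0, 4.0, 18.0, 22.0, 20.0]))
# [-9.6, -7.2, 0.0, 0.0, 0.0]
```

Scaling the cut with how stable the channel looks, rather than switching it fully on or off, is one common-sense design choice; it keeps channels that are only partly noise-dominated from being cut as hard.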

It is clear how this works well for stable non-speech signals, particularly stable non-speech signals that are band limited. For example, if you are listening to speech and a tea kettle whistles, it will be band limited and stable. Therefore, if you have a noise reduction system that finds that particular frequency region where the stable tea kettle whistle is going on, then you can drop gain in that region and not affect most of the speech signal. The patient will be less bothered by the whistle and not really miss much of the speech signal. With broadband noise, however, all the frequency regions are affected and so a system that drops gain in all frequency regions will take the speech signal along with it.

With broad-spectrum background noise, like the noise of a crowded café, the noise can be as broad in spectrum as the speech signal you want to hear. Noise reduction will then try to reduce gain across a broader band within the hearing aid. For example, in a café it might reduce the gain in the low and mid frequencies, where the noise spectrum is higher than the speech. However, in the high-frequency region, where speech is the more dominant signal, there is no reduction at all. The problem is that you're not improving the signal-to-noise ratio anywhere. You're not separating the two signals; you're just changing the spectral balance. That is why it is difficult to separate a speech signal from a broad background of other sounds with a noise reduction system. This is especially true for sounds whose modulation pattern is close to that of speech; you are not necessarily going to get much relief from the noise in those situations.
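The point that a band gain cannot change that band's signal-to-noise ratio is simple arithmetic, shown here with hypothetical café band levels (illustrative numbers, not measurements). Any gain applied to a band is applied to the speech and the noise in that band alike.

```python
# Hypothetical café band levels in dB for bands at 250 Hz, 500 Hz, 1 kHz, 2 kHz, 4 kHz.
speech_db = [55, 58, 56, 50, 46]
noise_db = [65, 64, 60, 48, 40]
gain_db = [-10, -8, -4, 0, 0]  # cuts where the noise is stronger than the speech

# The same gain reaches both the speech and the noise in each band.
out_speech = [s + g for s, g in zip(speech_db, gain_db)]
out_noise = [n + g for n, g in zip(noise_db, gain_db)]

snr_before = [s - n for s, n in zip(speech_db, noise_db)]
snr_after = [s - n for s, n in zip(out_speech, out_noise)]
print(snr_before)               # [-10, -6, -4, 2, 6]
print(snr_after == snr_before)  # True: the spectrum is rebalanced, but no band's SNR improves
```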

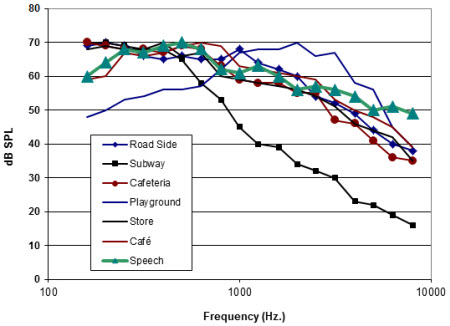

Long Term Spectrum of Different Noises

If you look at the long-term spectrum of a variety of noise samples in comparison to speech (green triangles, as shown above), you can see that many situations have a very broad spectrum. Some situations, like a subway, have a spectrum that differs from speech by being more low-frequency dominated. In this case, although the subway has a spectrum similar to speech in the low frequencies, in the mid and high frequencies the speech signal would be dominant over the subway sound. This can be contrasted with a recording made on a playground, with the sounds of children running around and screaming. This type of playground scene would have a spectrum tilted toward the high frequencies.

No matter how the spectrum tilts, typical environments are going to have relatively broadband spectra. Therefore, the challenge for noise reduction in most situations is to find a frequency region where there is just noise and not speech.

Directionality

Directionality is another noise abatement technology that is used in hearing aids, and there are some limitations to it as well. If the environment is right, directionality works well at reducing background noise. The misinterpretation around directionality is not so much about the technology, but where it works well and where it doesn't work well.

Automatic versus Adaptive Directional systems

It is important to differentiate between automatic and adaptive directionality. Mid-level and high-end technology levels are usually both automatic and adaptive, but these are two different functions. Adaptive directionality refers to continuously changing polar plots depending on where the competition is coming from in the environment. Automatic refers to automatic switching from a directional mode to an omni-directional mode and vice versa. Most automatic directional systems allow you to override the automatic function so that the patient can switch the directionality on or off, but by default most companies set them to automatic switching.

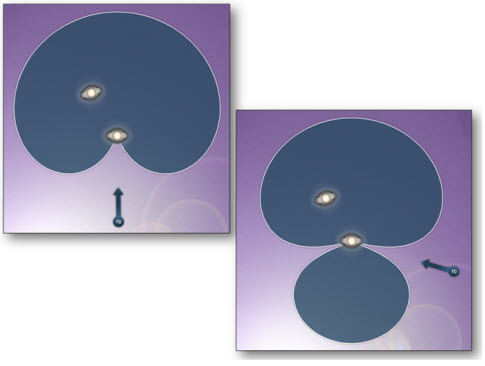

Examples of Polar Plots

Polar plots

Polar plots are a representation of the microphone sensitivity to sounds around a person wearing hearing aids. There are two polar plots shown above. The one on the left shows a microphone that is maintaining sensitivity to sounds from the front while reducing sensitivity from behind. This would be beneficial for a person wearing hearing aids when listening to a person in front of them, with noise coming from behind. The polar plot on the right shows a microphone with less sensitivity for sounds coming from the back and to the side. Adaptive directional systems change the shape of the polar plot in order to reduce sensitivity in the direction the noise is coming from.
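Patterns like these are often described with the textbook first-order directional model, sensitivity = α + (1 − α)·cos θ, where θ is the angle from straight ahead. The sketch below assumes that model (it is not taken from the talk or from any specific hearing aid): α = 0.5 gives a cardioid with its null straight behind, and α = 0.25 gives a hypercardioid with nulls toward the back sides, similar in spirit to the two plots above.

```python
import math

def sensitivity_db(theta_deg, alpha):
    """First-order pattern alpha + (1 - alpha) * cos(theta), in dB re straight ahead."""
    r = abs(alpha + (1 - alpha) * math.cos(math.radians(theta_deg)))
    return 20 * math.log10(r) if r > 1e-12 else float("-inf")

def null_angle_deg(alpha):
    """Angle where the pattern reaches zero (valid for 0 <= alpha <= 0.5)."""
    return math.degrees(math.acos(-alpha / (1 - alpha)))

print(round(null_angle_deg(0.5), 1))   # 180.0  (cardioid: null straight behind)
print(round(null_angle_deg(0.25), 1))  # 109.5  (hypercardioid: nulls toward the back sides)
```

In this model an adaptive system changing the shape of the polar plot amounts to changing α, which moves the null toward wherever the noise is coming from.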

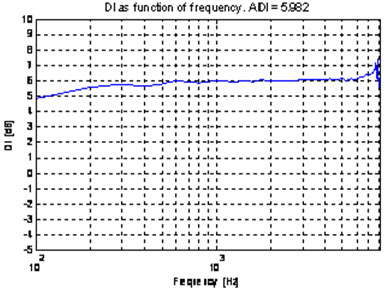

Directivity index

DI is directivity index. This is a measure of how effective the directionality is in a soundfield of noise. AIDI is the articulation index weighted directivity index, which is designed to give a single number that represents the overall effectiveness of the directionality to handle the noise in a diffuse background situation.

Example of AIDI

Here are some data. This is the directivity index across frequency for one particular hearing aid, showing somewhere between 5 and 6 dB of reduction in a diffuse noise environment when the directionality is active. In other words, the DI gives you a range for how much reduction you would expect to see with this particular directional system. The AIDI, which is a frequency-weighted average across frequencies based on the articulation index, is 5.982 for this hearing aid. This means there is approximately 6 dB of improvement on a speech-intelligibility-weighted measure of the directivity index. The AIDI gives you an estimate of the maximum effectiveness you might expect from this directional system in a realistic noise environment, which in this case is about a 6 dB improvement in SNR. When you test these systems on patients in terms of speech understanding in noise, you generally do not see the full AIDI; on average you will measure approximately a 3 to 5 dB improvement in SNR.
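The weighting step behind the AIDI can be sketched as an importance-weighted average of per-band DI values. The real AIDI uses band-importance weights from the articulation index procedure; the DI values and weights below are placeholders invented for illustration, not those weights and not the hearing aid described above.

```python
def weighted_directivity_index(di_db, importance):
    """Importance-weighted average of per-band DI values (weights are normalized here)."""
    total = sum(importance)
    return sum(d * w for d, w in zip(di_db, importance)) / total

# Placeholder numbers only: five bands, with more weight on the mid frequencies.
di_db = [4.5, 5.5, 6.0, 6.5, 6.0]
importance = [0.1, 0.2, 0.3, 0.25, 0.15]

aidi = weighted_directivity_index(di_db, importance)
print(round(aidi, 3))
```

Because the weights emphasize the bands that matter most for intelligibility, a system with strong directivity only in unimportant bands would score lower than its best per-band DI suggests.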

Multi-band directionality

The last important term regarding directionality is multiband directionality. Many systems today are designed so that directionality works independently in different frequency regions. In multiband directional systems, if noise sources come from different directions with different frequency content, you can have one polar plot in one frequency region to reduce a noise source from one direction, and a different polar plot in another frequency region to reduce noise from a different direction. As long as the environment is not too reverberant, such a system should work pretty much as designed.
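Under the same textbook first-order model, α + (1 − α)·cos θ (an assumption, not a description of any product), steering a null toward a noise at angle θn means solving α + (1 − α)·cos θn = 0, which gives α = −cos θn / (1 − cos θn). A multiband system can then pick a different α in each band. The scene and function names below are hypothetical.

```python
import math

def alpha_for_null(noise_deg):
    """Weight alpha that puts a null of alpha + (1 - alpha) * cos(theta) at noise_deg (90 to 180 degrees)."""
    c = math.cos(math.radians(noise_deg))
    return -c / (1 - c)

def pattern(theta_deg, alpha):
    """Relative sensitivity of the first-order pattern at theta_deg (1.0 = straight ahead)."""
    return alpha + (1 - alpha) * math.cos(math.radians(theta_deg))

# Hypothetical scene: low-frequency noise from directly behind, high-frequency noise from 120 degrees.
noise_direction = {"low band": 180.0, "high band": 120.0}
alphas = {band: alpha_for_null(angle) for band, angle in noise_direction.items()}

for band, angle in noise_direction.items():
    a = alphas[band]
    # alpha used, residual sensitivity toward that band's noise, sensitivity straight ahead
    print(band, round(a, 3), round(abs(pattern(angle, a)), 6), round(pattern(0.0, a), 6))
```

Each band keeps full sensitivity straight ahead while placing its own null on its own noise source, which is the behavior described above.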

These automatic systems are very sophisticated. They decide whether you should be in omni-directional or directional mode based on criteria such as the overall level of the environment, the spectral characteristics of the environment, or, in Oticon aids for instance, a modulation test of the signal using the output in the person's ear.

With the modulation test, we know that a clean speech signal has certain modulation characteristics, and many noise environments have different ones. We then assess the signals in the room to estimate the signal-to-noise ratio of the combined input. Based on this information, the system decides whether the signal-to-noise ratio needs to be improved and whether the hearing aid should be omni-directional or directional.

The key to using directionality successfully is to communicate the situations in which directionality is expected to work well. Users will tell you they prefer the sound quality of the hearing aid when it is omni-directional. Because nothing is being attenuated and the sound is perceived as louder, patients tend to like the sound quality of an omni-directional system. They want directionality to engage if it is going to help, but if it is not going to improve the signal-to-noise ratio and overall performance, they would rather the hearing aid stay omni-directional because it sounds better. That's a realistic criterion, and that's the way the automatic systems are designed to work.
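The switching logic described above can be sketched as a simple rule. This is a hypothetical illustration, not Oticon's or any other manufacturer's algorithm: it assumes the aid already has estimates of the SNR in each mode and of the overall level, and the two thresholds are made-up values.

```python
def choose_mode(snr_omni_db, snr_directional_db, level_db_spl,
                min_benefit_db=2.0, min_level_db_spl=55.0):
    """
    Hypothetical decision rule: go directional only when the environment is loud
    enough and directionality is estimated to improve the SNR by a meaningful
    amount; otherwise stay omni-directional for its preferred sound quality.
    """
    if level_db_spl < min_level_db_spl:
        return "omni"
    if snr_directional_db - snr_omni_db >= min_benefit_db:
        return "directional"
    return "omni"

print(choose_mode(0.0, 4.5, 70.0))    # directional: talker in front, noise behind
print(choose_mode(0.0, 0.5, 70.0))    # omni: talker and noise both in front
print(choose_mode(10.0, 14.0, 45.0))  # omni: quiet room
```

Requiring a minimum benefit before switching reflects the preference described above: if directionality would not pay for itself in SNR, omni's sound quality wins.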

When does directionality work?

When the hearing aid is in directional mode, when does it work best? Directionality is going to work well if the talker you're listening to is in front of you, if the noise in the environment is coming from behind you or from the sides, and if the reverberation is not excessive. In these cases, directionality should work well and the patient will prefer to have it active. If these conditions are not met, the patient is going to prefer that the hearing aid stay in omni.

It is important that the patient appreciates that the acoustics of the environment matter: again, the location of the talker, the location of the competition, and the amount of reverberation. For instance, if you're talking to somebody sitting across the table from you and the noise is behind you, directionality should work well. If you're talking to somebody across the table and the noise is behind them, so that both the noise and the signal come from in front of you, then directionality will probably not help much. Likewise, in a very reverberant environment, the benefit of directionality will also be compromised.

As far as reverberation goes, our patients are not walking around with sound level meters and spectral analysis systems to measure the reverberation time of an environment, but most people know a reverberant room from a nonreverberant room. A room with tile and hard surfaces will be a very reverberant environment, whereas a room with predominantly soft surfaces will be a nonreverberant environment. Describing reverberation is a little bit tricky but it is an important component of how well directionality will work.

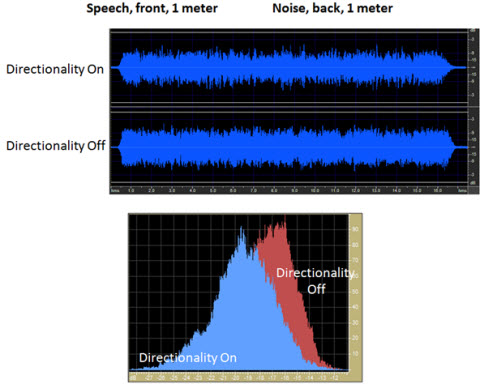

Example 1. Directionality measurements in nonreverberant room, speech at 1 meter.

Directionality at Work

Example 1 is a recording I made with KEMAR wearing a hearing aid in a nonreverberant room: a bedroom in a home with a lot of soft surfaces, including carpet and a comforter on the bed. The waveform in the top panel is with directionality on; the bottom panel is with directionality off. This recording was made with speech coming from 1 meter in front and noise from behind. To measure directionality, you can build a level histogram: count how often different levels occur in the output signal of the hearing aid to get an idea of whether or not directionality reduced the noise in the environment. The histogram shows the peak of the level distribution shifting between directionality on and directionality off. The distribution of levels dropped about 4 or 5 dB, which means a 4 to 5 dB improvement in the signal-to-noise ratio. This is exactly what you would expect from a directional system in a nonreverberant room with speech in front and not too far away, and noise coming from behind.
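The level-histogram idea can be sketched in a few lines. This is a minimal illustration under stated assumptions: the frame length (10 ms at an assumed 48 kHz sample rate), the 1-dB bin width, and the toy signals are all choices made here, not the settings used for the KEMAR recordings. The "directional" signal is simply the omni noise lowered by 4.5 dB, so the sketch only shows how the histogram peak reveals a level drop.

```python
import math
import random


def frame_levels_db(signal, frame_len):
    """Short-term RMS level (dB re 1.0) of each non-overlapping frame of `frame_len` samples."""
    levels = []
    for start in range(0, len(signal) - frame_len + 1, frame_len):
        frame = signal[start:start + frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / frame_len)
        levels.append(20.0 * math.log10(max(rms, 1e-12)))  # floor avoids log10(0) on silent frames
    return levels


def level_histogram(levels, bin_db=1.0):
    """Count how many frames fall into each `bin_db`-wide level bin (keyed by the bin's lower edge)."""
    counts = {}
    for level in levels:
        lower_edge = math.floor(level / bin_db) * bin_db
        counts[lower_edge] = counts.get(lower_edge, 0) + 1
    return counts


def histogram_peak_db(levels, bin_db=1.0):
    """Centre of the most-populated bin, i.e. the peak of the level distribution."""
    counts = level_histogram(levels, bin_db)
    lower_edge = max(counts, key=counts.get)
    return lower_edge + bin_db / 2.0


# Toy signals, not the KEMAR recordings: Gaussian noise stands in for the omni output, and the
# "directional" output is the same noise 4.5 dB lower (an assumption made for illustration).
random.seed(1)
OMNI_OUTPUT = [random.gauss(0.0, 0.1) for _ in range(48000)]
DIRECTIONAL_OUTPUT = [x * 10 ** (-4.5 / 20) for x in OMNI_OUTPUT]

if __name__ == "__main__":
    frame = 480  # 10 ms frames, assuming a 48 kHz sample rate
    shift = (histogram_peak_db(frame_levels_db(OMNI_OUTPUT, frame))
             - histogram_peak_db(frame_levels_db(DIRECTIONAL_OUTPUT, frame)))
    print(f"histogram peak moved down by about {shift:.0f} dB")
```

With 1-dB bins the peak shift is quantized to whole decibels, so a true 4.5 dB drop shows up as 4 or 5 dB, much like the "about 4 or 5 dB" read off the histogram in the text.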

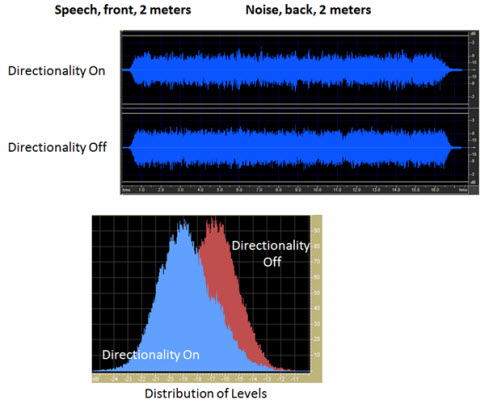

Example 2. Directionality measurements to show the effect of distance.

Effect of Distance

Now, I want to show you the effect of distance. The recording shown in Example 2 was made in the same nonreverberant bedroom, but this time speech was 2 meters in front and noise was 2 meters behind. When you compare directionality off and directionality on, you still see a directional effect. It is smaller than in the last example, where the talker was only 1 meter away, but it is still a pretty good benefit, and that's because the environment was not very reverberant: it is still the soft bedroom without a lot of noise in the background. The directional effect decreases substantially when the talker is farther than 2 meters away, so patients will not notice much benefit from directionality if they are trying to listen to someone across the room.
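One standard room-acoustics way to see why distance matters is the critical distance: the distance at which direct sound from the talker and diffuse reverberant sound are equally loud. Beyond it, reverberation dominates, and reverberant sound arrives from all directions. The sketch below uses the common Sabine-based approximation r_c ≈ 0.057 √(Q V / RT60); the bedroom volume, RT60, and the omnidirectional source (Q = 1) are hypothetical assumptions, not values measured in these recordings.

```python
import math


def critical_distance_m(volume_m3, rt60_s, source_q=1.0):
    """Diffuse-field (Sabine-based) estimate: r_c ~= 0.057 * sqrt(Q * V / RT60), in metres."""
    return 0.057 * math.sqrt(source_q * volume_m3 / rt60_s)


def direct_to_reverberant_db(distance_m, critical_m):
    """Direct sound drops 6 dB per doubling of distance while the diffuse reverberant level stays
    roughly constant, so the two are equal (0 dB) at the critical distance."""
    return 20.0 * math.log10(critical_m / distance_m)


if __name__ == "__main__":
    # Hypothetical bedroom: 40 m^3 with RT60 = 0.4 s, talker treated as an omnidirectional source.
    rc = critical_distance_m(40.0, 0.4)
    for d in (1.0, 2.0, 4.0):
        print(f"r_c = {rc:.2f} m, direct-to-reverberant ratio at {d:.0f} m = "
              f"{direct_to_reverberant_db(d, rc):+.1f} dB")
```

Each doubling of talker distance costs about 6 dB of direct-to-reverberant ratio, and a longer RT60 shrinks the critical distance, so distance and reverberation erode the same thing: the share of speech arriving from straight ahead.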

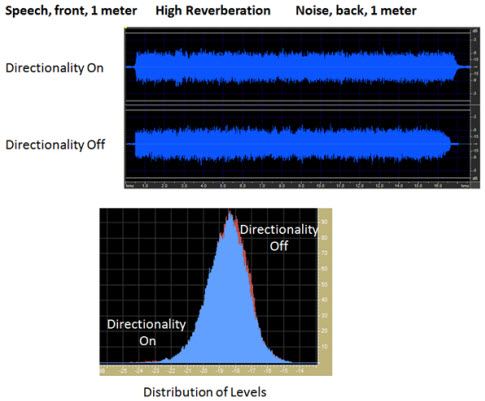

Example 3. Directionality measurements in reverberant room with speech at 1 meter in front, noise at 1 meter from behind.

The Effects of Reverberation

To show the effect of reverberation, I took KEMAR and all the recording equipment into a bathroom with a lot of hard surfaces: an all-glass shower enclosure, a tile floor, a hard tile countertop, and a bathtub. I also removed the towels and everything else soft that I could. It was a very reverberant room. The speech was 1 meter in front of KEMAR, with noise 1 meter behind. Even though the speech and noise were exactly the same as before, with this much reverberation there is absolutely no effect of directionality: the signal levels were the same whether directionality was on or off. The directional system was essentially defeated because the noise that should have been coming from behind was bouncing off the hard surfaces and reaching KEMAR's ear from many different directions at once. That is one of the signal conditions you need to discuss with patients when you fit them with directionality.
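A simplified model shows why reverberation can wipe out the benefit. Each source is split into a direct part, arriving from its own direction, and a diffuse reverberant part, arriving from everywhere. An idealized first-order directional pattern, (a + (1 − a) cos θ)², passes the frontal direct speech at full level but attenuates all diffuse sound, speech included, by the same 1/Q. So once the speech reaching the ear is mostly reverberant, speech and noise are reduced alike and the SNR barely changes. The hypercardioid setting (a = 0.25), the source directions, and the direct-to-reverberant ratios (DRR) are assumptions. Real on-head hearing aid directivity is lower and frequency dependent.

```python
import math


def first_order_power_gain(theta_rad, a=0.25):
    """Power response of an idealised first-order directional mic; a = 0.25 is a hypercardioid."""
    return (a + (1 - a) * math.cos(theta_rad)) ** 2


def diffuse_power_gain(a=0.25):
    """Average power response over all directions (1/Q) for the same pattern: a^2 + (1 - a)^2 / 3."""
    return a ** 2 + (1 - a) ** 2 / 3


def snr_benefit_db(speech_drr_db, noise_drr_db, a=0.25):
    """SNR change (dB) from switching omni -> directional, talker at 0 degrees, noise source at 180 degrees.

    Each source's reverberant power is normalised to 1 and its direct power is 10**(DRR/10).
    """
    diffuse = diffuse_power_gain(a)
    s_direct = 10 ** (speech_drr_db / 10)
    n_direct = 10 ** (noise_drr_db / 10)
    speech_gain = (s_direct * first_order_power_gain(0.0, a) + diffuse) / (s_direct + 1)
    noise_gain = (n_direct * first_order_power_gain(math.pi, a) + diffuse) / (n_direct + 1)
    return 10 * math.log10(speech_gain / noise_gain)


if __name__ == "__main__":
    # Speech DRR falls as the room gets more reverberant or the talker moves farther away.
    for speech_drr in (10.0, 0.0, -10.0, -20.0):
        print(f"speech DRR {speech_drr:+5.1f} dB -> SNR benefit {snr_benefit_db(speech_drr, -10.0):.1f} dB")
```

In this idealized model the benefit approaches the pattern's directivity index (about 6 dB) when speech is mostly direct, and falls toward 0 dB when speech is mostly reverberant, as in the tiled bathroom.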

Summary

Patients expect their hearing aids to work well in every situation, and the simple reality is that hearing aids don't work well in every noisy environment. As we've discussed, how well directional systems work depends on where the speech is coming from, where the noise is coming from, and the amount of reverberation in the environment. Noise reduction systems also work well, but only within certain conditions. This is part of the reality check that we need to do with patients.

The onus is on the audiologist to counsel effectively so that patients and their families understand these situational limitations of noise abatement technology. These systems perform well and offer great benefit. It is the responsibility of the professional to communicate how and when they will and will not work best in order to ensure that patients are successful when using them.

I hope that this talk gave you a few insights that you didn't have before. If you have any questions or further comments, please email me at DJS@oticonusa.com.

Questions and Answers

Question from participant: When reverberation undermines directional microphones because signals arrive from many different directions, do you make a transition to assistive technologies such as FM? How do you see that coming into the conversation?

Dr. Schum: The willingness of adult hearing aid users to use assistive technology alongside their hearing aids has historically been a problem, especially with personal FM systems. However, with the new connectivity options available in hearing aid technology, including personal microphones that work through those connectivity options, there is a new opportunity to engage the patient in that discussion. Some audiologists are a little hesitant to raise it because they are already orienting a new user to fairly advanced technology. It can be a tricky conversation, but as the connectivity options from the various manufacturers get better and better, it may become an easier one. As long as the professional points out both the benefits and the limitations of the advanced technologies in an open and honest way, it is easier to make the case to the patient for some of the extra assistive technology.

Situational Performance of Noise Reduction and Directionality

May 16, 2011
