Editor’s Note: This text course is an edited transcript of a live webinar.

James Martin: Today we are going to talk about Widex Dream: True to Life Sound. The agenda is broken up into three sections.

Widex Dream is our new hearing aid platform. Any time Widex introduces new technology, we go back to our mission statement. It says, “Through originality, perseverance, and reliability, our goal is to develop high quality hearing instruments that give people with hearing loss the same opportunity for communication as those with normal hearing.” We believe in making technology the best we can. This has been our mission statement back to our original Senso and Quattro technology. Although our patients do not all hear the same, we do want to give them opportunities to hear the best they can in different life environments. Today, we are going to talk about the three reasons why we are excited about Dream and all that it has to offer our patients. We will also talk about what Dream offers you as a professional when fitting a patient.

The Three Reasons

The first of the three reasons is more sound. We are going to spend some time talking about how we achieve that in the Dream technology. We also are going to talk about more words. Words are the tools we use to communicate with each other. We want to give our patients the ability to communicate in varying environments, whether they are in a one-on-one conversation, a birthday party or a sporting event. We want to give them the ability to take in and capture these words. Finally, we want the hearing aids to be more personal. We have listened to what you, as clinicians, have said that you would like to see, and we have tried to incorporate that into our strategy for improving the patient’s ability to communicate within this platform.

More Sound

Let’s start off talking about what more sound means. From our perspective, more sound means having a hearing aid that is able to capture the full spectrum and depth of sound in our environment and give a rich, quality output. From a hearing aid standpoint, the output of a hearing aid can never be better than the sound going in to a hearing aid. As the old adage says, “Garbage in, garbage out.” Our goal is to capture sound as naturally, cleanly, and clearly as possible so that the sound processed within the device is preserved and represented in the same way. Sound quality is an important focus for us.

I want to share a few real-world examples to illustrate this point. For some of you who are at least my age, you remember what a cassette tape looks like. Cassettes preceded compact discs (CDs). Cassettes were small and easy to use, but they were limited in a few ways. One was that the recorded signal on the metal tape was very much like an analog signal. If you ever listen to a cassette now compared to a digital recording, it sounds very scratchy. There was a lot of “hissing” that could be heard in the background on a cassette.

From the standpoint of hearing aid systems, think of this as analog software that we would use. In order to use this “software,” we had to have some type of device to play it. That device was a cassette player. In order to be able to appreciate the analog sound on that cassette, we had to put it into a cassette player, which is a hardware component. We take the cassette, which is our software, and we take the hardware, which is our cassette deck, and we merge the two together to get sound.

Because cassettes were analog technology, the sound quality was not as clean as what we have today. If you have ever tried to find a song on a cassette, you would have to hold the fast forward or rewind button and then stop to listen; you could not skip from one song to another. Occasionally, I would hold the button halfway down so I could listen for the song I wanted, and it still sounded like chipmunks in the background. Occasionally, you would be listening to your favorite song, and all of a sudden the tape would sound like it was being eaten. You would eject the cassette and find the magnetic tape caught inside the player. Then you would have to use your finger and twist all the tape back into the cassette by hand. You could attempt to play it, but often the thin tape became wrinkled or even broken. We had a lot of issues with this technology at times when we were trying to enjoy our music.

Technology improves over time, as we know. Currently, most of our album music is played on CDs, or compact discs. CDs are a new form of software. Cassettes were analog technology; CDs are digital technology. The benefit of a digital format is a cleaner sound. Now we have this new media that we can use, but we had to create new devices to play it on – CD players. Again, you have the hardware and software components pulled in together. The benefit now is you get a cleaner sound because it is digital. We have turned the analog signal into digital 0’s and 1’s. It is a richer, cleaner sound quality, and it is much easier now to find our favorite song than on a cassette tape. There are many benefits to having this new form of media, but it took developing a new type of hardware to play that media.

Even now, we have Blu-ray discs that allow us to immerse ourselves in the sights and sounds of movies. It did, of course, mean developing new Blu-ray technology. The point is that there are a few components to get more sound. One is the hardware. We need sufficient hardware to play our software. Software is something that most manufacturers spend a lot of time talking about. Clinically, it is our job to manipulate software so that we can make our patients happy by changing the settings or adjusting programs. As we have illustrated already, to get the best sound quality, you need good software and good hardware. That synergy between hardware and software is what allows us to capture all of those nuances. That is what we have done in the new Dream.

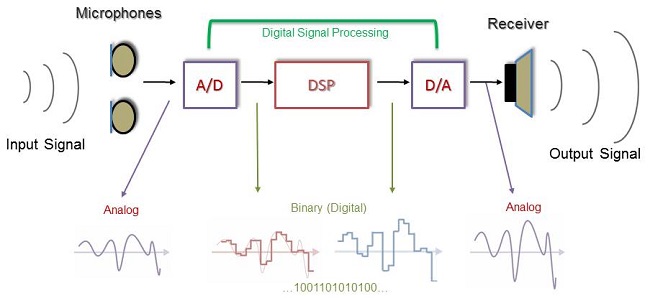

Let’s look at a simple block diagram of a digital hearing aid (Figure 1). The input signal comes into the hearing aid and reaches the microphones. The microphones capture the sound and send it into an analog-to-digital (A/D) converter. At that point, the analog signal is converted to a digital bit code of 0’s and 1’s that we can manipulate, change, and fine tune for our patient. Clinically, the digital signal processing (DSP) component is where we spend most of our time. We have to take this digital bit code and turn it back into an analog signal output by way of the digital-to-analog (D/A) converter, because our ears do not hear 0’s and 1’s; they hear analog sound. The component that gives us the best sound quality is the A/D converter. The chain from the A/D converter to the DSP to the D/A converter is what we consider digital signal processing. The gatekeeper that lets sound into the hearing aid is the A/D converter. Remember we said earlier that the output of a hearing aid can never be better than the input to the hearing aid. The ability to get a good sound starts at the very beginning of the process. If we can capture and replicate the sound as accurately as possible, it allows the digital signal processing system to use all the nuances to preserve all those sounds and minimize errors within the system.

Figure 1. Block diagram of an analog-to-digital converter in a digital hearing aid.

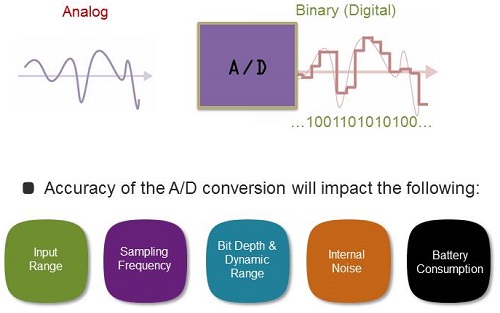

Widex built a new A/D converter in the Dream hearing aid. It took us five years to build, but we knew precise sound quality was important. The accuracy of the A/D conversion will impact several things. The first thing is the input range or the system limit. The A/D converter works within constraints of the system’s upper and lower input limits. You can think of it almost like a room in your house - you have a ceiling and a floor. Anything you put in that room will have to fit within the constraints of the physical limits of that room. We have raised the input ceiling up to 113 dB in this device. That is all part of the A/D conversion. Our sampling frequency is 33.1 kHz, meaning the signal is sampled 33.1 thousand times per second. The bit depth determines how accurately we can replicate the signal from an analog waveform as a digital signal. The greater the bit depth, the more closely you can replicate the analog signal. It means you are following the contours of that waveform more accurately, and with that, it allows us to have a dynamic range of 96 dB. Our system is a 16-bit system with a 96 dB dynamic range.
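
The link between a 16-bit system and a 96 dB dynamic range follows from the standard rule for an ideal linear quantizer, roughly 6 dB per bit. The sketch below is a minimal illustration of that arithmetic; the function names are hypothetical and the rounding to whole decibels is an assumption made to match the figures quoted here.

```python
import math

def dynamic_range_db(bits):
    """Theoretical dynamic range of an ideal linear quantizer with `bits` bits."""
    # Ratio of the full scale to the smallest step, expressed in decibels.
    return 20 * math.log10(2 ** bits)

def input_window(ceiling_db_spl, bits=16):
    """Floor and ceiling in dB SPL, with the range rounded to whole dB (assumption)."""
    span = round(dynamic_range_db(bits))
    return ceiling_db_spl - span, ceiling_db_spl

print(round(dynamic_range_db(16), 1))  # 96.3
print(input_window(113))               # (17, 113)
```

With a fixed 96 dB span, choosing the ceiling also fixes the floor, which is why a 113 dB SPL ceiling implies a floor near 17 dB SPL.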

If you look closely at Figure 2 after the A/D converter, you will see where the square waves do not quite match up to the original analog waveform superimposed in the background. The small white spaces between the digital and analog waveforms are noise. The fewer of those spaces and the closer we can follow the contour of that original waveform, the less internal noise we have in the system. Having a good digital replication of the sounds makes for a cleaner system, which is, again, the job of the A/D converter. All hearing aids have an A/D converter, and they all follow system limits that are set by the manufacturer.
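
The gaps between the stepped digital signal and the analog waveform can be measured directly. The following is a rough sketch, assuming an idealized uniform quantizer and a test sine wave (neither is taken from the Dream design), that shows the error shrinking as bit depth grows.

```python
import math

def quantize(x, bits):
    """Round x to the nearest step of a uniform grid spanning [-1, 1] at `bits` bits."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step

def rms_error(bits, n=1000):
    """RMS gap between one cycle of a sine wave and its quantized version."""
    total = 0.0
    for i in range(n):
        x = 0.9 * math.sin(2 * math.pi * i / n)
        total += (quantize(x, bits) - x) ** 2
    return math.sqrt(total / n)

# More bits means smaller steps, so the digital signal hugs the waveform more closely.
for bits in (4, 8, 16):
    print(bits, rms_error(bits))
```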

Figure 2. Analog waveform converted to a binary, digital representation.

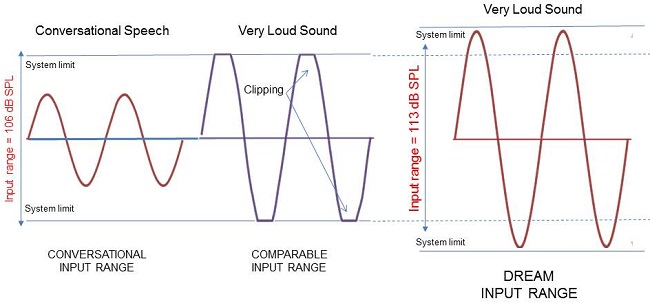

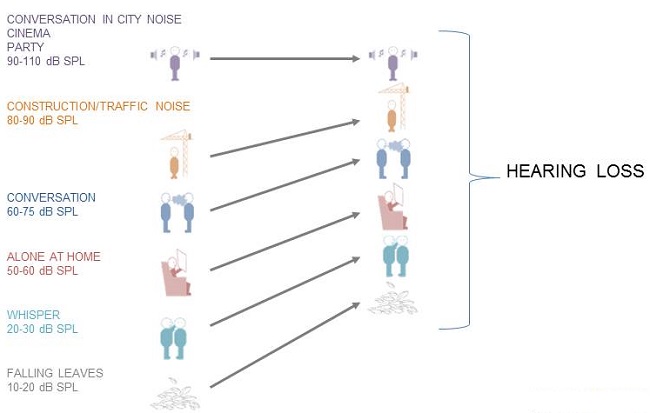

Our ears encounter soft to loud sounds on an everyday basis. Falling leaves are somewhere around 10-20 dB SPL. Conversational speech is somewhere around 65-75 dB SPL, and construction sites and traffic noise are in the 80-90 dB SPL range. There are environments that are significantly louder than what the ear experiences on a general basis, like music concerts, sporting events and parties with loud music. Most hearing aids have an input system limit of around 92 dB SPL. There are one or two with a ceiling of 106 dB SPL, but 80% to 85% of the manufacturers have a ceiling of 92 dB.

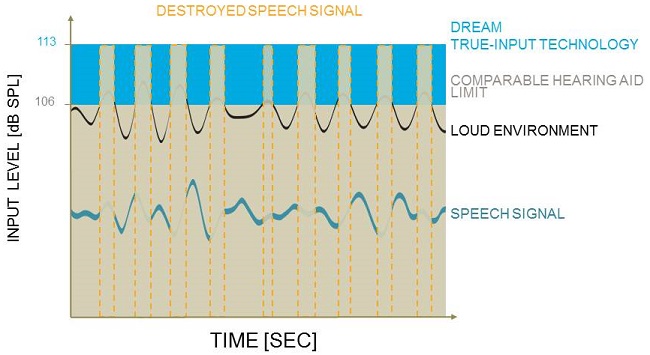

With that said, there are sounds that we experience that are louder than 92 dB SPL. Even walking down a city street can be anywhere from 90 to 110 dB SPL. If the ceiling of the hearing aid is lower than some “normal” environmental sounds, how does a hearing aid manage it? In most cases, there are two applications. The first is input clipping. When a sound exceeds 92 dB, the system will not process the sound, but, rather, will peak clip anything above that. The other way of handling sound in excess of the ceiling is input compression. This means that as the input level gets louder and closer to the ceiling, the system internally turns the volume down. It brings the level lower so that the input does not cross that limit. There are inherent problems with both of those strategies. Peak clipping adds distortion and chops off the amplitude and shape of the sound. Input compression brings everything down, which results in a loss of the intensity contrasts that you normally experience in conversation. In order to minimize that effect, Widex raised the input system limit to 113 dB in the Dream products (Figure 3). Now, louder sounds can come transparently into the processor so that we can manipulate and use all of that spectral information. We are not chopping off the input or causing an error because of the system limit.
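
The two strategies can be compared on a handful of input levels. This is a simplified sketch working on levels in dB SPL rather than on waveforms; the 80 dB knee point and the compression slope are illustrative assumptions, not values from any specific product.

```python
def input_clip(level_db, ceiling_db=92.0):
    """Peak clipping: anything above the ceiling is flattened to the ceiling."""
    return min(level_db, ceiling_db)

def input_compress(level_db, ceiling_db=92.0, knee_db=80.0, max_input_db=113.0):
    """Input compression: above the knee, squeeze levels so max_input_db lands on the ceiling."""
    if level_db <= knee_db:
        return level_db
    ratio = (max_input_db - knee_db) / (ceiling_db - knee_db)  # assumed slope
    return min(knee_db + (level_db - knee_db) / ratio, ceiling_db)

for level in (65, 85, 95, 105):
    print(level, input_clip(level), round(input_compress(level), 1))
```

In this sketch, clipping maps both 95 and 105 dB SPL to the same 92 dB value, while compression keeps them distinct but shrinks the contrast between 85 and 105 dB SPL to well under the original 20 dB.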

Figure 3. Comparison of traditional hearing aid with 106 dB SPL input range and Widex Dream hearing aid with 113 dB SPL input range; the loud sound introduced to the traditional hearing aid experiences peak clipping (left), while the same input is fully captured in the Dream hearing aid with no clipping.

With analog technology, when the sound became too loud, we would turn the entire hearing aid down. Because it was analog, it functioned more linearly, and we could dial down the sound almost linearly as well. However, when you peak clip a digital signal, the hearing aid does not know how to categorize that sound. Is it a 0 or a 1? Because it does not know how to categorize, an error or inaccuracy in the signal occurs. It does not only affect loud inputs; it can affect the soft inputs as well. If you look at Figure 3, you can see that anything that has been clipped at either end of the system limit is now an error in the system. In order to minimize that, we raised our limit to 113 dB so that we can accurately capture those sounds and minimize distortion within the system.

Our previous A/D converter was a 16-bit system with a 96 dB dynamic range (7 dB SPL to 103 dB SPL). When we moved to the new Dream A/D converter, we shifted everything up, so we have a range of 17 dB SPL to 113 dB SPL. Because we raised the input floor to 17 dB, we have less microphone noise. Our goal with the new Dream platform and chip design is to capture more sounds that give you better sound quality within the hearing aid. More sound matters because we want to make sure, regardless of the environment, that we give users the ability to hear and enjoy those moments, whether it be walking down the street, riding in the car, having a conversation with a spouse, going to the movies or going to a sporting event. We know that patients will often take their hearing aids out when they get into uncomfortable environments where they are struggling. Providing an adequate dynamic range where they can hear both soft and loud sounds will give them better audibility and sound quality. A normal-hearing person has a range of hearing from falling leaves all the way up to cinemas or parties, but a person with hearing loss has a decreased range (Figure 4). We have to bring the soft sounds into a range that the patient can use, as cleanly as possible.

Figure 4. Dynamic range comparison between normal hearing and hearing loss.

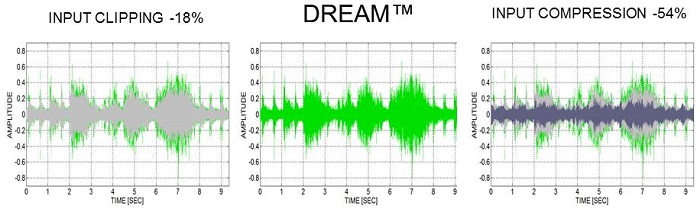

We wanted to test this in the real world to see how it worked. We set up KEMAR with Dream 440 Micros fitted to a flat loss. We completed a feedback test, and we set it to a master program for this test. Then we compared the Dream to two other high-end products from different manufacturers; one used peak clipping and the other used input compression. We took them to different environments. The first was a popular visitor’s square in Denmark called Tivoli Square. There is an amusement park on one side of the square and a subway on the other side. It is very loud with a lot of equipment, and there are a lot of people with noise and activity. We took the three devices, put them on our KEMARs and tested them to see how they performed in this environment. We also set up KEMAR in a car and drove around to experience what the hearing aids would do in a typical car environment. This was not a luxury brand. We also took KEMAR to the movies and a handball game. Handball is a very popular game in Denmark. It is very loud with a lot of people. They bring in little strips of plastic and slap them together. We put the KEMARs in the stands with shirts on them so they would blend in to the crowd a little better. We took measurements in these environments with all three of the different technologies. Let me discuss what we came up with.

Figure 5 is from the sporting event comparison. We captured the input waveforms with each technology. You can see the peaks and valleys within the waveform. The left graph shows peak clipping or input clipping, which is in gray. The Dream capture is in green behind that. When you compare the gray to the green, there is still more green sticking out. With the input clipping, you have lost 18% of the original waveform. The peaks and troughs are not as unique. If you look at the lower amplitudes (Figure 5), you can still see green, which represents softer inputs. Dream preserves even the softer inputs, when compared to input clipping.

Figure 5. Comparison of inputs from three different technologies on KEMAR at a sporting event: left=input clipping; middle=Dream; right=input compression.

The blue waveform on the right graph (Figure 5) shows input compression, which starts turning down sound as it reaches the system limit. Behind that, you will see the input clipping in gray, and then behind that you can see some of the Dream waveform in green. By using input compression, you lose 54% of your waveform in this graph. You have lost the peaks and troughs that are unique to speech. We need those peaks and troughs; they are the temporal and spectral cues of speech. The signal becomes distorted and the dynamics of speech are reduced. It may seem that everything is softer, which can sacrifice audibility in a particular environment. There is spectral and temporal smearing as a result of the input compression.

We also compared this with patients wearing the Dream and other high-end hearing aids. We performed a paired comparison looking at different levels for speech and music. We found that 80% of those patients preferred the Dream. It sounded clearer and more natural than peak clipping or input compression.

More Words

With this new platform, our goal is to provide more words for our patients to give them greater speech intelligibility in different environments. Figure 6 represents the waveform of a loud environment. Normally, speech is also entered into that loud environment, but for the sake of illustration, I am going to take it out. The gray box represents the system limit that this particular aid uses. We have the lower limit and we have the upper limit of 106 dB. In a case like this, once the sound crests above the system limit of 106, you inherently get distortion of the speech signal in that environment. All of the columns circled in yellow dashes represent errors where the signal has been distorted or an error was created because of the system limit. It affects both soft and loud inputs. This error is going to be carried through all of the processing. By raising the ceiling in the Dream to 113 dB, we can capture the fullness of sounds, minimize errors, and minimize destroying speech cues that these patients would get in that environment.

Figure 6. Example of a waveform from a loud environment with a 106 dB input ceiling. The yellow dotted columns indicate a destroyed speech signal due to ceiling limit. The blue region shows the extended input ceiling of Dream technology.

How does this affect directionality in the system? In a quiet environment, we know that hearing aids tend to be in an omnidirectional configuration. It is quiet, and we want patients to hear what is happening in front of them and in back of them. As the input level increases, there may be a change in signal-to-noise ratio, and the system will adapt. It will change the directionality to try to preserve the speech and minimize the noise. Once you increase to a higher intensity, the system gets more aggressive and changes to a cardioid or hypercardioid polar pattern to preserve the speech coming from the front, just like our pinnae do on the side of our head. Once the intensity goes above the input limit, however, the system does not know how to set itself and errors are introduced. It does not know how to compute and calculate errors. It defaults back to an omnidirectional configuration. If this is a loud environment, how many of you would recommend your patient to go into an omnidirectional configuration? None of you, because then they would hear the noise, not only from the front, but now equally in the back. That is what is happening when the sound level goes above the system limit. It defaults back to the omnidirectional configuration.
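
The polar patterns named above can be illustrated with the standard first-order microphone pattern formula, gain(θ) = a + (1 − a)·cos θ. The sketch below is a textbook idealization, not the adaptive algorithm used in the Dream; the function name and pattern table are illustrative.

```python
import math

# Mixing coefficient `a` for common first-order patterns (textbook values).
PATTERNS = {"omnidirectional": 1.0, "cardioid": 0.5, "hypercardioid": 0.25}

def polar_gain(pattern, angle_deg):
    """Linear sensitivity at angle_deg (0 = front, 180 = directly behind)."""
    a = PATTERNS[pattern]
    return a + (1.0 - a) * math.cos(math.radians(angle_deg))

for name in PATTERNS:
    front = polar_gain(name, 0)
    rear = abs(polar_gain(name, 180))
    print(f"{name}: front={front:.2f}, rear={rear:.2f}")
```

In this idealized model the omnidirectional pattern is equally sensitive to the front and the rear, while the cardioid has its null directly behind the listener, which is why falling back to omnidirectional in loud noise is undesirable.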

Because we have raised our ceiling to 113 dB, the system is now capable of setting itself to an appropriate directionality based on the information that it has. Digital hearing aids deal with adaptive polar patterns. The digital hearing aid takes 0’s and 1’s and uses that information to set itself. If it does not know if the signal is a 0 or a 1, it does not know how to set itself. In this case, it affects directionality.

When noise management is activated, the system will change based on the environment, and it may turn the volume down by a certain level in a certain environment. The noise management system uses the soft sounds and pauses in the environment and compares them to louder inputs. It determines the signal-to-noise ratio, how it needs to be set, and if it needs to turn the system up or down. Most classic noise reduction algorithms turn down. In a soft environment, the system knows how to set itself to preserve the sounds and manage the noise management system appropriately, even at moderate and loud levels. Once the input limit is exceeded, the system does not know how to categorize itself or preserve the signal-to-noise ratio in a challenging environment, because it now has errors and distortion.

By raising the system limit, we give the DSP part of the system the capability of using information to make the right calculations to set the hearing aid output appropriately for that patient in any given environment. We preserve cues for speech, whether it be in a quiet or loud environment. That is the goal. We want to give them more words in all environments.

The average level of background noise when you are walking down the street can be around 80 dB SPL, with peaks occurring from 90 to 100 dB SPL. Remember that most systems have an input ceiling set at 92 dB. Even in this moderate average background level, errors could be created for patients having a conversation while walking down the street with that technology.

If the background noise is an average of 90 dB with peaks up to 110 dB, which happens in a movie, there will be more errors. Thirdly, a loud environment where the noise reaches levels of 100 to 120 dB SPL, such as a professional basketball game, will cause the patient significant difficulty, because the hearing aid is processing so many errors above the input ceiling. Patients will take out the device because they feel like they hear better without it. With these three environments, which are environments that we encounter fairly regularly, patients will experience distortion and errors due to a low input ceiling.
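
To make the three environments concrete, the sketch below compares the peak levels quoted above with the input ceilings mentioned in this talk. It is simple arithmetic on those figures; the dictionary labels and function name are illustrative.

```python
# Peak levels (dB SPL) quoted in the text for three everyday environments.
PEAKS_DB_SPL = {"city street": 100, "movie": 110, "basketball game": 120}

# Input ceilings (dB SPL) mentioned in the text.
CEILINGS_DB_SPL = {"most hearing aids": 92, "a few hearing aids": 106, "Dream": 113}

def overshoot_db(peak_db, ceiling_db):
    """How far a peak rises above the input ceiling (0 if it fits)."""
    return max(0, peak_db - ceiling_db)

for place, peak in PEAKS_DB_SPL.items():
    row = {name: overshoot_db(peak, c) for name, c in CEILINGS_DB_SPL.items()}
    print(place, row)
```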

One of the things that I recommend is to take advantage of the apps and programs available on smartphones and tablets now. One app lets you use your smartphone as a sound level meter. I encourage clinicians to take the time to examine their everyday environments and get a feel for average noise levels. It is a great way to understand how levels in common venues can affect the processing of hearing aids for patients. Widex also has a free app for the iPad that you can use to demo sounds for your patient, such as driving, being in a cinema, or going to the stadium. You can hear the effects of those sounds on the processing.

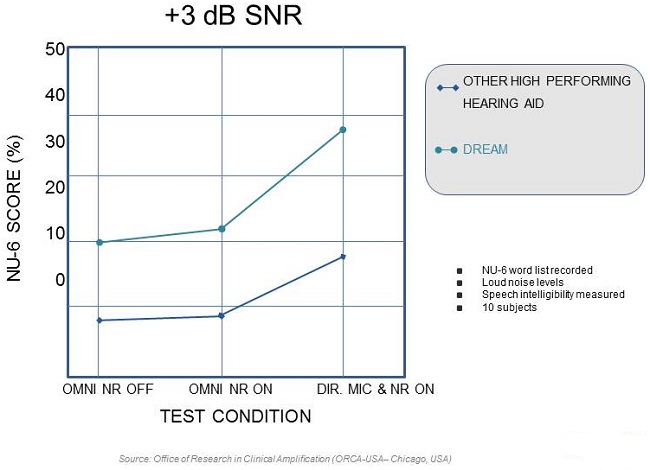

We also tested speech in noise to see how well our Dream hearing aids performed against other high-end products using the NU-6 test in three different conditions (Figure 7). We put the hearing aids in the omnidirectional condition with noise reduction off, omnidirectional with noise reduction on, and directional mode with the microphones on and noise reduction on. There were three conditions of varying signal-to-noise ratios. The speech was constant at 100 dB SPL, with added noise at 0 dB SNR, -3 dB SNR and -6 dB SNR, which would be the most challenging condition.
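
The noise levels implied by these test conditions follow from the definition of signal-to-noise ratio (speech level minus noise level, in dB). The sketch below works through that arithmetic and also estimates the combined level, assuming speech and noise are uncorrelated and add on a power basis; the function names are illustrative.

```python
import math

def noise_level_db(speech_db, snr_db):
    """Noise level implied by a speech level and a signal-to-noise ratio."""
    return speech_db - snr_db

def combined_level_db(*levels_db):
    """Overall level of uncorrelated sources, summed on a power basis."""
    return 10 * math.log10(sum(10 ** (level / 10) for level in levels_db))

speech = 100  # dB SPL, as stated in the text
for snr in (0, -3, -6):
    noise = noise_level_db(speech, snr)
    print(snr, noise, round(combined_level_db(speech, noise), 1))
```

At every one of these conditions the noise alone is at or above 100 dB SPL, so the input sits above a 92 dB SPL ceiling throughout the test.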

Figure 7. NU-6 test results in varying conditions of noise reduction off, noise reduction on, and directional microphones with noise reduction on for a +3 dB SNR. (light blue=Dream; dark blue=other high-end hearing aids)

We compared the Dream against other high-end technologies using this test. Because we had a higher ceiling of 113 dB, we were able to capture all the sound without clipping or compression and maximize performance in a noisy environment. The performance of the Dream hearing aid exceeded that of other high-end hearing aids in all three conditions.

More Personal

The third reason the Dream is exceptional is that it makes listening more personal. We listened to you, as clinicians, and incorporated some of the things you recommended to make this more personal for your patients. You can customize this technology for your patient. One of the things that we did was create a Widex website (my.widex.com) that allows us to counsel our patients and help them learn more about their hearing loss and their hearing aids, get more educated, and help them to remember things. We know that 40% to 80% of what a patient is told is forgotten once they leave the office. We wanted to provide a resource for redundancy, because the best way to learn is to repeat over and over; redundancy helps retain information.

My.widex.com gives patients detailed information about the function of their hearing aid, as well as advice and answers to questions they might have. There is information about their personal audiogram. There are videos on how to use the hearing aid, such as putting it in, taking it out, maintenance suggestions and ideas. There is a hearing loss simulator for family members also. You can simulate what it sounds like for Uncle John to hear through his hearing loss, whether he is listening to speech or music. This is a great educational tool for the family. There is a hearing aid questionnaire that is paired within the new Compass software that allows you to get feedback about the patient and what they are experiencing before they come to see you in your office. You will already have a game plan on how to help them based on this questionnaire. They fill it out at home, submit it, and then you can see it within NOAH and Compass. There are also wonderful stories and successes of users and what they have experienced; I think it is useful for them to realize that they are not alone.

There are also some more personal features that we have added within our Compass software, such as personal acclimatization. This allows you to set the software to adjust the gain in small increments over time. Your patient can start at the softest level, then the system can be programmed to reach an end point automatically in one week, two weeks or three weeks. It gradually gives them time to adjust to their new technology without sacrificing audibility or lingering in a less-than-optimal fitting.
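
One way to picture gradual acclimatization is as a gain offset that shrinks to zero over the chosen period. The sketch below is purely illustrative: the linear ramp, the -6 dB starting offset, and the function name are assumptions, not a description of how Compass implements the feature.

```python
def acclimatization_offset_db(day, duration_days=14, start_offset_db=-6.0):
    """Gain offset relative to the target fitting on a given day (0 = first day)."""
    if day >= duration_days:
        return 0.0  # end point reached: full target gain
    remaining = 1.0 - day / duration_days
    return start_offset_db * remaining  # assumed linear ramp

for day in (0, 7, 14):
    print(day, acclimatization_offset_db(day))
```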

We also made our audibility extender more personal because it is now available as a global program. It can be assigned and adjusted to any program that the patient has, such as TV, quiet, or music. It lets them benefit from our linear frequency transposition in any program.

We also have a new feature within the Dream 440 called Zen shaped noise, which can be used with our Widex Zen Therapy Protocol for managing tinnitus patients. This particular feature allows the clinician to customize the bandwidth of the noise and filter it depending upon the needs of that patient.

Integrated Signal Processing

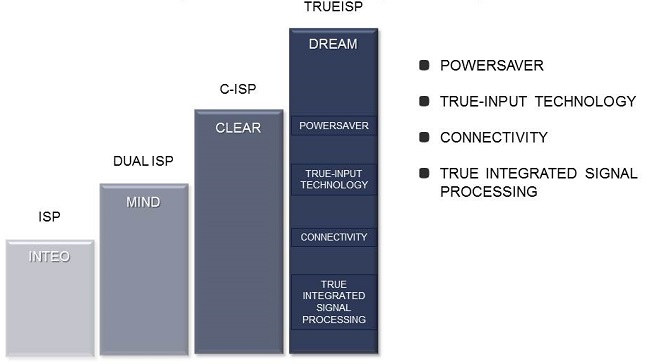

Lastly, I want to share some information about our True-ISP (integrated signal processing), with patent-pending True-Input technology (Figure 8). ISP was a technology that we introduced in the Inteo product to help make sure that no one feature in the hearing aid worked in isolation; all the features should be integrated, taking into consideration the person’s hearing loss, so that it works as a unit. With True-ISP, we are taking that integrated signal processing to another level. When we introduced ISP in the Inteo product, we introduced our dynamic integrator, which is the brains of the system ensuring that all of the features work together. We also introduced speech enhancer, which is our noise management system. Not only does it reduce noise across different frequency ranges, but it looks at the person’s audiogram and can turn up any of the channels as much as 6 dB. The goal is to preserve audibility. Peer-reviewed research shows a 2 to 3 dB signal-to-noise ratio improvement using this particular feature. We also introduced the audibility extender in the Inteo product.

Figure 8. ISP platforms.

We then had Dual ISP in the Mind product where we made a stronger, more sophisticated chip that worked on two levels. We called one the listening level and one the awareness level. The awareness level was where we introduced Zen, which is now used as part of the Widex Zen Therapy Protocol for tinnitus. We included smart speech, which continues today and allows the system to talk to the patient in 22 different languages. We also introduced the multidirectional feedback cancellation, which is unique to Widex. We use two different feedback paths to minimize the occurrence of feedback dynamically.

We introduced the C-ISP in the Clear product, and added Widex Link, which is our proprietary wireless system specifically for hearing aids. This system allows two hearing aids to work in tandem to accomplish more sophisticated processing, calculations, and adjustments. It allows inter-ear communication in eight different features, whether it is compression, the speech enhancer, Zen, volume controls, or programming adjustments; they are all shared. It gave us some unique features like Free Focus and Reverse Focus, as well as TruSound Softener which automatically minimizes the transient noises that can occur in the environment. This could be someone typing on their keyboard or putting a cup in a sink; these are very sharp, quick sounds that can be annoying to our patients.

The fourth level is True-ISP (Figure 8). This is the featured platform in the Dream, including all the processing features mentioned in the beginning of this talk. Because Dream is a more sophisticated system with a smarter algorithm, we are able to reduce battery drain by up to 20% in our hearing aids using the power saver system.

We also have the Interear technology with WidexLink, which was built specifically for hearing aids to give us wireless communication in short-range/long-range configurations. The hearing aids share information, but you can also connect to external devices, like your cellphone or TV system. Compass GPS is our software platform that integrates with WidexLink and my.widex.com.

We also look at preserving the fine speech details with the low compression threshold that we are famously known for. We took it to a new level with the input range of 113 dB to make sure that the system sets itself appropriately in different environments, whether it be in directionality, noise reduction or noise management. Having all those features allows us to pull the integrated signal processing components together, minimizing artifact within a 2 to 5 msec processing delay, which is the fastest in the industry. Taking all of that into consideration, it allows us to give our patients a true-to-life sound experience that they desire.

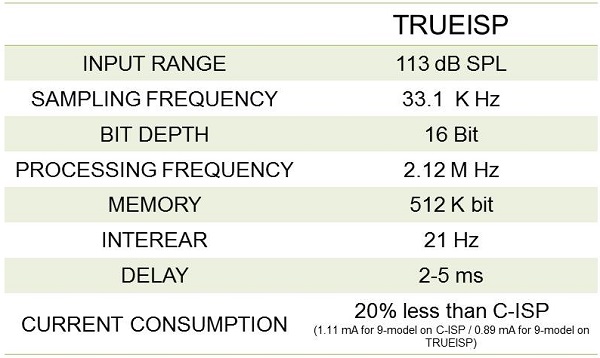

A summary of the technical details of the Dream platform can be found in Figure 9. The input range is 113 dB SPL, the highest in the industry. Our sampling frequency goes up to 33.1 kHz, which is one of the highest in the industry. Our bit depth is 16-bit, giving us a 96 dB dynamic range. Our Interear feature allows us to share information 21 times a second, which is the fastest in the industry, yet has minimal delay of only 2 to 5 msec. With all that, it still minimizes current consumption and uses up to 20% less power than what patients were seeing before. We have more in the Dream than we did in our C-ISP Clear platform.

Figure 9. Summary of Dream platform technical details.

Summary

The take home is to remember that rich sound in gives us true sound out. Preserving the input gives more for the processor to work with and, ultimately, gives us the best output, in terms of clarity. More sound in, more sound out. For more words, we want to remember that correct estimation of speech and noise in loud environments is more accurate with the Dream because we are not adding digital errors or distortion. We are capturing the full dynamic range of that sound. It is also more personal. We want to customize these features for individual needs so that we make it more personal. We make it more a part of their life so that they use it and adapt faster.

Questions and Answers

Understanding that the 96 dB input dynamic range with 16-bit depth is the same as in the previous A/D converter, is there truly any difference in the A/D converter other than raising the floor and the ceiling by 10 dB?

Yes. When you change the A/D converter, you have to adjust all of your algorithms and processing that happens in the DSP. By raising that, we did have to think about all the features that are in our hearing aid, how they are performing and adjusting within that system. There is a significant difference in just the processing that you get from having that ceiling raised to 113 dB SPL.

How does the hearing aid deal with people with tolerance or recruitment problems?

That is a question that has been asked before. Anytime you start talking about high input levels, people start thinking of the tolerance problems and loud environments. The WDRC is still in place, and because of the transparency, we are not turning up loud sounds. We are just opening up the window to capture more of them. We are just capturing that sound more accurately at the front end so that we can process it. If they have tolerance issues, we are still going to take that into consideration, but we are capturing the sound more accurately so that we can process it and use it with minimal errors and distortion.

Cite this content as:

Martin, J.W. (2014, February). Widex Dream: 3 reasons why. AudiologyOnline, Article 12399. Retrieved from: https://www.audiologyonline.com