Learning Outcomes

As a result of this course, participants will be able to:

- Explain why it is important to reduce listening effort for hearing aid wearers.

- List the primax features which have been clinically proven to reduce listening effort.

- Describe the dedicated music programs in the primax platform that have been designed to improve the sound quality of music.

Introduction

The primary goal of amplification is to improve speech understanding, and this goal becomes even more important as listening situations grow noisier or more challenging. Beyond speech intelligibility, however, another indicator of the effectiveness of a hearing aid fitting has sometimes been overlooked: the cognitive demand, or listening effort, required for speech understanding to take place. This is why it is important not only to improve speech understanding but also to make it easy. This is the underlying goal of the new Signia primax™ platform: to improve speech intelligibility and reduce listening effort for the wearer.

Improving Speech Intelligibility

Many of the sophisticated features of the primax platform have been carried over from its predecessor, binax. This includes its clinically proven benefits, especially in the area of improving speech intelligibility. Therefore, before we examine the new primax platform, it is relevant to review some of the key features of binax and the clinical research conducted with that instrument platform.

With the introduction of binaural beamforming and binaural noise-reduction technology, much headway has been made in reducing background noise and improving sound quality in order to promote better speech understanding. binax hearing aids include a binaural beamforming feature called Narrow Directionality, which was made possible by the real-time transfer of audio data between hearing aids (Kamkar-Parsi, Fischer, & Aubreville, 2014). This was the first hearing aid technology clinically proven to allow wearers to understand speech better in noisy environments than even those with normal hearing (Powers & Froehlich, 2014). As a part of the high-resolution automatic adaptive directional microphone system, Narrow Directionality fades in automatically from the standard directional mode when listening situations become very noisy, such as in crowded restaurants or during loud parties when speech understanding is the first priority. This intelligent, automatic steering ensures that the wearer benefits from the features exactly when necessary. Clinical research has shown that automatic binaural beamforming has even enabled single-microphone CIC wearers to enjoy directional speech recognition benefits for the first time (Froehlich & Powers, 2015).

These hearing aids can also automatically steer directionality toward the back or either side without compromising spatial cues (Littmann, Junius, & Branda, 2015). This so-called Spatial SpeechFocus feature is ideal for situations in which the wearer cannot turn to face the speaker, such as when driving a car. The binax hearing aids also introduced more efficient binaural noise reduction features. eWindScreen binaural transfers strategic portions of the audio signal from the side with less wind noise to the other side, improving the sound quality of the signal without compromising speech intelligibility or spatial perception for the wearer (Freels, Pischel, Wilson, & Ramirez, 2015).
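The idea of borrowing signal from the less windy side can be illustrated with a toy per-band model. This is a minimal sketch, assuming each hearing aid reports a wind-noise level per frequency band and that only bands below a hypothetical 1500 Hz cutoff are eligible for transfer (wind noise is mostly a low-frequency problem). The `Band` type, the `binaural_wind_select` function, and the cutoff are illustrative assumptions, not the eWindScreen binaural implementation; in particular, the real feature is described as preserving spatial perception, which this simple copy does not attempt.

```python
from dataclasses import dataclass


@dataclass
class Band:
    """One frequency band as seen by both hearing aids (illustrative model)."""
    center_hz: float
    left: float           # band signal value on the left aid
    right: float          # band signal value on the right aid
    wind_left_db: float   # estimated wind-noise level, left aid
    wind_right_db: float  # estimated wind-noise level, right aid


def binaural_wind_select(bands, cutoff_hz=1500.0):
    """Return a (left, right) pair per band.

    For bands below cutoff_hz (a hypothetical value), the windier side
    receives the band from the less windy side. Bands at or above the
    cutoff, and bands with equal wind levels, pass through unchanged.
    """
    result = []
    for b in bands:
        left, right = b.left, b.right
        if b.center_hz < cutoff_hz:
            if b.wind_left_db > b.wind_right_db:
                left = b.right
            elif b.wind_right_db > b.wind_left_db:
                right = b.left
        result.append((left, right))
    return result


# Strong wind on the left at 250 Hz; the 4 kHz band is above the cutoff.
bands = [Band(250.0, 1.0, 0.2, 80.0, 50.0), Band(4000.0, 0.5, 0.6, 70.0, 40.0)]
print(binaural_wind_select(bands))
# → [(0.2, 0.2), (0.5, 0.6)]
```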

The Importance of Listening Effort

Beyond speech understanding, it is also important to consider the listening effort the wearer expends for understanding to take place, a consideration that is integral to the new primax platform. Depending on the acoustic situation and the context, listening can require more or less attentional and cognitive processing. While listening, our brains process information both sequentially and concurrently with the arriving acoustic input, discarding, storing, retrieving, and comparing information. This dynamic brain activity, which accompanies and enables listening, is ongoing and cumulative, and it happens regardless of how much of the speech is actually understood.

Even people with normal hearing experience situations that require increased listening effort ― we have to “work harder” to hear what we want to hear. Often this involves situations with excessive background noise, but it also occurs with very soft speech, poor mobile phone connections, trying to understand a speaker with a pronounced accent, and in many other difficult listening situations. Generally speaking, speech understanding relies on the quality of the incoming speech signal and on the cognitive processes involved in the internal representation and interpretation of that signal.

Hearing loss degrades our ability to perceive the speech signal, thereby increasing the demands on the cognitive resources involved in selectively attending to and interpreting it. This increase in cognitive activity is needed to compensate for missing speech details in difficult listening situations. The result is effortful listening, which eventually leads to listening fatigue. We know that even mild hearing loss causes increased listening effort and fatigue. Listening fatigue indirectly reduces speech understanding, as the patient will not have the mental energy to stay “tuned in.” This can have significant consequences for the patient’s energy level, which influences how much they engage in speech communication or, in some cases, in any activity that requires mental energy. Often enough, the patient simply “gives up” in the end, resulting in social isolation.

The nature of the auditory signal is such that it is ongoing over time. Any additional cognitive activity takes processing resources and slows down comprehension. In difficult listening situations this means the hearing impaired person would constantly lag behind during conversation, missing out on parts of speech because their brain is already busy processing the previous parts, unable to direct attention to the new input. This feeling of urgency and lagging behind also increases the likelihood of mental fatigue.

These compounding factors mean that, at the end of the day, the hearing impaired individual may be so tired simply from straining to hear and understand that they choose to stay home rather than go out to dinner with friends and family. Instead of enjoying life to the fullest, they might choose to withdraw. Therefore, it is critical to keep listening effort at a minimum throughout the day in order to reduce the likelihood of fatigue.

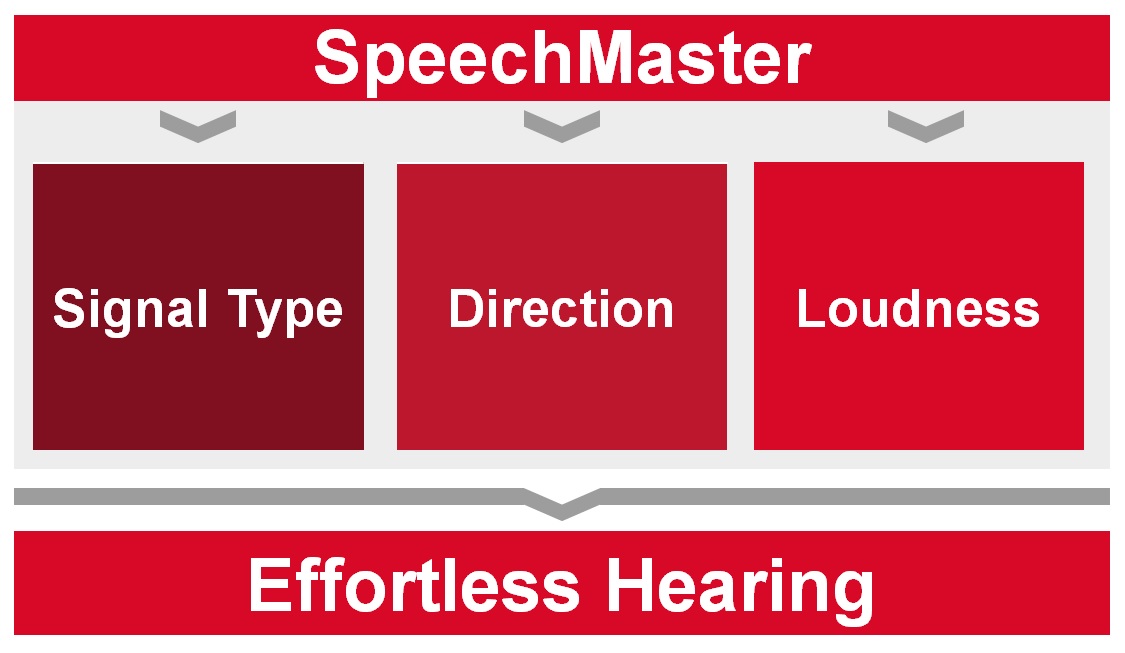

Reducing Listening Effort by Suppressing Noise and Highlighting Speech

As discussed earlier, one of the main factors that increase listening effort is the presence of other sounds and noises competing with the target speech. The best way to reduce listening effort, therefore, is to minimize noise while highlighting the target speech (Mueller, Ricketts, & Bentler, 2014). In primax hearing aids, this is accomplished via SpeechMaster. SpeechMaster is responsible for the automatic detection of acoustic situations and the subsequent adjustments of the automatic Universal program, and its main function is to reduce listening effort for the wearer throughout the day. More than a single feature, SpeechMaster is a combination of existing technology, such as the binaural features mentioned earlier, and new primax innovations. All of these components work automatically and in concert to highlight the desired speech source while reducing the intensity of all other competing sounds in a wide variety of situations. They can be grouped into three strategies that SpeechMaster uses to improve the signal, based on signal type, direction, and loudness (Figure 1).

Figure 1. SpeechMaster uses three strategies to improve speech intelligibility and sound quality, thereby promoting effortless hearing.

Improving the signal based on signal type. SpeechMaster can accurately identify and categorize the type of signals being picked up by the microphone. While speech signals are left untouched, all other signals categorized as noise are instantaneously reduced via digital noise reduction. Some of the features that fall under this strategy include Speech and Noise Management, SoundSmoothing, and eWindScreen binaural. Together, they reduce a variety of transient, steady-state, and fluctuating noises. These noise reduction features enhance sound quality and perceived signal clarity, thereby reducing listening effort.

Improving the signal based on direction. In general, we face our conversation partner, especially in the presence of competing noise. This is the operating premise of directional microphones, which primarily reduce sounds from behind the wearer. The primax high-resolution, automatic, adaptive, binaural directional microphone system reduces competing sound sources originating from the back and sides and, if necessary, narrows the directional focus so that everything not originating from directly in front of the wearer is attenuated (i.e., the Narrow Directionality discussed earlier). The situation-dependent, automatic nature of the directional microphone system ensures that its behavior meets wearers’ listening needs in a wide spectrum of acoustic environments, including very difficult and noisy, party-like situations. It maximizes spatial awareness in quieter situations and gradually narrows the directional focus as the signal-to-noise ratio (SNR) decreases and wearer demands shift toward speech understanding. It also includes the Spatial SpeechFocus feature mentioned earlier. Overall, the primax directional microphone system ensures optimal speech intelligibility in a variety of noisy situations, reducing listening effort for the wearer.
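Why a directional microphone mainly reduces sounds from behind, and what "narrowing the focus" trades off, can be seen in the textbook first-order polar patterns. This is a minimal sketch, assuming ideal first-order patterns of the form r(θ) = α + (1 − α)·cos θ; these generic shapes are not the primax high-resolution binaural beamformer, whose patterns the article does not specify. The `response_db` function and the −40 dB floor used to report a perfect null are illustrative choices.

```python
import math

# Ideal first-order patterns: r(theta) = alpha + (1 - alpha) * cos(theta),
# where theta is the source angle relative to straight ahead.
PATTERNS = {
    "omni": 1.0,
    "cardioid": 0.5,
    "supercardioid": 0.366,
    "hypercardioid": 0.25,
}


def response_db(alpha, theta_deg, floor_db=-40.0):
    """Sensitivity relative to the front (0 degrees), in dB.

    A perfect null (r == 0) is reported as floor_db instead of -infinity.
    """
    r = abs(alpha + (1.0 - alpha) * math.cos(math.radians(theta_deg)))
    if r < 1e-12:
        return floor_db
    return 20.0 * math.log10(r)


for name, alpha in PATTERNS.items():
    row = ", ".join(f"{deg:>3} deg: {response_db(alpha, deg):6.1f} dB"
                    for deg in (0, 90, 180))
    print(f"{name:<14}{row}")
```

The cardioid places its null directly behind the listener, while the hypercardioid accepts some sound from the rear (about −6 dB) in exchange for stronger attenuation from the sides (about −12 dB), which is one way to picture a narrower frontal focus.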

Improving the signal based on loudness. The loudness component of SpeechMaster is a completely new signal processing strategy that separates the dominant speech source from any other interfering sound source. For example, the hearing aid can detect a 75 dB SPL dominant speech source in 70 dB SPL background noise. With this new signal-level-dependent technology, SpeechMaster can further separate the useful 75 dB SPL speech from all other interfering noises and reduce the latter. The effect is that the processing can “pull” the talker closer to the listener and “push” the surrounding noises farther away. This new, third component of SpeechMaster behaves like another layer of noise reduction that reduces any sound other than the dominant speech. The difference is that, unlike digital noise reduction, which cleans up the signal based on signal type (speech vs. non-speech), this strategy operates on the detected loudness of different environmental sounds. It is level-dependent and channel-specific, and its transitions are smooth. SpeechMaster thus avoids the over-amplification of background noise that, in conventional systems, fills the short gaps between speech segments and interferes with speech understanding.
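A level-dependent, channel-specific attenuation with smooth transitions can be sketched as a per-channel gain rule. This sketch assumes the dominant speech level is already known (75 dB SPL, as in the example above) and uses hypothetical parameters: channels more than `margin_db` below that level are attenuated by up to `max_atten_db`, with a linear ramp over `ramp_db` so the gain never switches abruptly. The function names and values are illustrative; the article does not describe the internals of the SpeechMaster loudness strategy.

```python
def loudness_gain_db(channel_level_db, speech_level_db,
                     margin_db=3.0, ramp_db=10.0, max_atten_db=6.0):
    """Extra gain (0 dB or negative) for one frequency channel.

    Channels within margin_db of the dominant speech level are left alone.
    Below that, attenuation grows linearly over ramp_db and is capped at
    max_atten_db, so the gain changes smoothly instead of switching on/off.
    All three parameters are hypothetical illustration values.
    """
    shortfall = speech_level_db - channel_level_db - margin_db
    if shortfall <= 0.0:
        return 0.0
    return -max_atten_db * min(shortfall / ramp_db, 1.0)


def apply_loudness_rule(channel_levels_db, speech_level_db):
    """Output level per channel after the level-dependent attenuation."""
    return [level + loudness_gain_db(level, speech_level_db)
            for level in channel_levels_db]


# Dominant talker at 75 dB SPL; other channels carry softer competing sound.
print(apply_loudness_rule([75.0, 70.0, 60.0], speech_level_db=75.0))
```

With these made-up settings, the 70 dB SPL channel is lowered by about 1.2 dB and the 60 dB SPL channel by the full 6 dB, so the gap between the talker and the competing sounds widens while the talker's channel is untouched. A real system would also need a detector that decides which source is the dominant talker; that step is outside this sketch.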

These three strategies, which improve the signal quality based on signal type, direction, and loudness, all work in concert and automatically as parts of SpeechMaster to highlight speech above background noise.

While emphasis will always be placed on ensuring that target speech is effortlessly audible, the intelligent steering component of SpeechMaster also ensures that other important aspects of hearing, such as sound quality and spatial awareness, are not overlooked. For example, it is well established that the narrower the directional focus of the microphones, the more spatial cues are compromised (Best et al., 2015; Brimijoin, Whitmer, McShefferty, & Akeroyd, 2014). Therefore, SpeechMaster fades among TruEar (a microphone response for BTE and RIC instruments designed to mimic the natural directivity of the outer ear), standard directional, and Narrow Directionality as needed to balance the wearer’s shifting listening priorities between spatial awareness and speech intelligibility. In quieter environments where speech is easily understood, TruEar mode ensures that localization cues are preserved. As more noise is introduced and speech intelligibility is increasingly compromised, standard directional microphones can improve SNR for the wearer while still maintaining significant spatial cues. At the other end of the spectrum, in very noisy situations, people naturally converse in significantly smaller groups huddled close together, in which case all conversation participants are within the wearer’s visual range. In such situations, speech understanding takes decisive priority over localization, and SpeechMaster fades in Narrow Directionality (Powers & Froehlich, 2014). As reported by Picou and colleagues (2014), when visual cues are present, localization is maintained even with narrow beamforming. In fact, because signals are processed differently for the left and right hearing aids in generating Narrow Directionality, the spatial information of non-target sound sources is actually maintained. In this way, the user obtains the unparalleled benefit of an enhanced SNR for the target speaker without having to accept an acoustic “tunnel effect.” This automation and intelligent steering mean that the wearer can take advantage of SpeechMaster throughout the day without making any manual adjustments.
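The gradual fading among TruEar, standard directional, and Narrow Directionality as SNR drops can be pictured as a crossfade between the three modes. This is a minimal sketch, assuming the blend depends only on SNR, with hypothetical breakpoints at 10 dB and 0 dB and straight-line transitions; the article does not publish SpeechMaster's thresholds, and the real steering also weighs other aspects of the acoustic situation. The `mode_weights` function is an illustrative name.

```python
def mode_weights(snr_db, hi_db=10.0, lo_db=0.0):
    """Blend weights for (TruEar, standard directional, Narrow Directionality).

    hi_db and lo_db are hypothetical breakpoints. At or above hi_db: pure
    TruEar. From hi_db down to the midpoint: TruEar fades into standard
    directional. From the midpoint down to lo_db: standard directional fades
    into Narrow Directionality. At or below lo_db: pure Narrow Directionality.
    The three weights always sum to 1.
    """
    mid_db = (hi_db + lo_db) / 2.0
    if snr_db >= hi_db:
        return (1.0, 0.0, 0.0)
    if snr_db <= lo_db:
        return (0.0, 0.0, 1.0)
    if snr_db >= mid_db:
        t = (hi_db - snr_db) / (hi_db - mid_db)  # 0 at hi_db, 1 at mid_db
        return (1.0 - t, t, 0.0)
    t = (mid_db - snr_db) / (mid_db - lo_db)     # 0 at mid_db, 1 at lo_db
    return (0.0, 1.0 - t, t)


for snr in (15.0, 7.5, 5.0, 2.5, -5.0):
    print(f"SNR {snr:5.1f} dB -> TruEar/standard/narrow = {mode_weights(snr)}")
```

Blending rather than switching is what keeps the transitions inaudible in this sketch: a small change in SNR only moves the weights slightly.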

The benefit of SpeechMaster was assessed in a recent clinical study that objectively measured the participants’ listening effort by analyzing ongoing EEG recordings while they performed a difficult speech recognition task (Littmann, Froehlich, Beilin, Branda, & Schaefer, 2016). Results showed that when SpeechMaster was activated, the objective brain behavior measures revealed a significant reduction in listening effort (p<.05).

Reducing Listening Effort by Minimizing Reverberation

The clarity of the target speech is often compromised by other sounds in the environment. But in certain reflective spaces, such as large rooms or auditoriums, reverberation can also compromise the sound quality of the target sound and therefore increase listening effort for the hearing aid wearer. Research has shown that hearing-impaired listeners report as many speech understanding problems in reverberation as they do in background noise (Cox, 1997; Johnson, Cox, & Alexander, 2010).

Reverberation is the persistence of sound caused by sound waves reflected from acoustically ‘hard’ surfaces. Rooms with carpet, drapes, or furniture that absorb more sound waves reflect less sound and cause less reverberation than rooms with more hard surfaces like bare walls or hard-surfaced floors. While public spaces (places of worship, train stations, hotel lobbies, etc.) are commonly known for being reverberant, significant reverberation can also occur at home. For example, larger tiled foyers and hard-floored living rooms can also cause noticeable reverberation, which can reduce speech understanding.
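A rough way to see why absorbent furnishings matter is Sabine's classic estimate of reverberation time, RT60 ≈ 0.161·V/A, where V is the room volume in cubic meters and A is the total absorption (each surface area multiplied by its absorption coefficient, summed). The sketch compares a hard-surfaced and a furnished version of the same 6 × 5 × 2.5 m living room. The absorption coefficients are rough, frequency-independent assumptions chosen for illustration, not measured values, and the helper names are hypothetical.

```python
def sabine_rt60(volume_m3, surfaces):
    """Reverberation time (s) from Sabine's formula: 0.161 * V / A.

    surfaces: list of (area_m2, absorption_coefficient) pairs; A is the
    sum of area * coefficient over all surfaces.
    """
    absorption = sum(area * alpha for area, alpha in surfaces)
    return 0.161 * volume_m3 / absorption


def shoebox_room(length, width, height, floor_alpha, ceiling_alpha, wall_alpha):
    """Volume and surface list for a rectangular room (dimensions in m)."""
    floor_area = length * width
    wall_area = 2 * (length + width) * height
    surfaces = [(floor_area, floor_alpha),
                (floor_area, ceiling_alpha),
                (wall_area, wall_alpha)]
    return length * width * height, surfaces


# Same room; coefficients are rough illustrative guesses, not measurements.
hard = shoebox_room(6, 5, 2.5, floor_alpha=0.03, ceiling_alpha=0.05, wall_alpha=0.05)
furnished = shoebox_room(6, 5, 2.5, floor_alpha=0.30, ceiling_alpha=0.05, wall_alpha=0.15)
print(f"hard surfaces:     {sabine_rt60(*hard):.2f} s")
print(f"carpet and drapes: {sabine_rt60(*furnished):.2f} s")
```

Because A sits in the denominator, adding carpet to the floor and drapes to the walls multiplies the absorption several times over and cuts the estimated reverberation time by roughly the same factor.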

Some reverberation is desirable, as it makes our world sound more natural and even enhances the quality of certain sounds, such as music. However, for speech understanding, excessive reverberation behaves like background noise ― degrading clarity and compromising intelligibility (Figure 2).

Figure 2. Speech from the talker arrives at the listener’s ears first via the direct path. But reflected sounds which arrive at the listener’s ears with a noticeable delay can degrade the clarity of the direct sound, making listening more effortful.

The new EchoShield feature in primax hearing aids is designed to reduce the effects of reverberation so that sounds are clear and crisp again, reducing listening effort for the wearer. Beyond the large spaces commonly associated with reverberation, this feature is also useful in the many places where reverberation is less apparent but still problematic, including hallways, lecture halls, and many rooms in the typical home.

EchoShield operates on the level difference between the direct sound and the reflected sounds, which are softer. Traditional wide dynamic range compression (WDRC) provides more gain for soft sounds than for loud sounds. As a negative side effect, compression therefore amplifies these softer reflections more than the target speech itself, making the situation even worse for hearing aid wearers. The primax EchoShield feature ensures that these softer reflections are not over-amplified.
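The compression side effect described above can be worked through numerically. This sketch assumes a single-channel, static WDRC curve with a hypothetical 50 dB SPL compression threshold, a 2:1 ratio, and 30 dB of gain at and below threshold, and it treats the reflection as 15 dB softer than the direct sound. Real fittings differ per channel and per patient, and the static curve ignores attack and release times; it is not a model of EchoShield itself.

```python
def wdrc_gain_db(input_db, threshold_db=50.0, ratio=2.0, gain_below_db=30.0):
    """Static WDRC gain curve (all parameter values are hypothetical).

    Below the compression threshold the gain is constant (linear region).
    Above it, each extra dB of input adds only 1/ratio dB of output, so the
    gain drops by (1 - 1/ratio) dB for every dB of input.
    """
    if input_db <= threshold_db:
        return gain_below_db
    return gain_below_db - (input_db - threshold_db) * (1.0 - 1.0 / ratio)


def output_db(input_db):
    return input_db + wdrc_gain_db(input_db)


direct_in, reflection_in = 65.0, 50.0   # reflection assumed 15 dB softer
diff_in = direct_in - reflection_in
diff_out = output_db(direct_in) - output_db(reflection_in)
print(f"direct-to-reflection difference: {diff_in} dB in, {diff_out} dB out")
# → direct-to-reflection difference: 15.0 dB in, 7.5 dB out
```

The direct sound receives 22.5 dB of gain while the softer reflection receives the full 30 dB, so the 15 dB advantage of the direct sound shrinks to 7.5 dB at the eardrum in this example.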

Reverberation is typically only noticeable in quieter situations that would be classified by the hearing aid as Speech in Quiet, because if a reverberant room is not quiet, the reverberation would be masked by noise. Therefore, directional microphones are typically not activated in situations where reverberation is noticeable. However, EchoShield activates a directional microphone pattern to pick up the target speech coming from the front while reducing the reverberations coming from other azimuths.

In the same clinical study mentioned earlier that evaluated the effectiveness of SpeechMaster for reducing listening effort, EchoShield was also assessed using a similar methodology. Results showed that when EchoShield was activated, the objective brain behavior measures revealed a significant (p<.05) reduction in listening effort in reverberant listening situations (Littmann et al., 2016).

Improving the Sound Quality of Music

The act of listening can require effort and cause mental fatigue, but depending on what we are listening to, it can also relax and reduce stress. Enjoying our favorite music is a prime example of how listening can recharge our mental energy. Hearing aids should not only reduce listening effort when we want to listen to speech, but also promote an optimal listening experience by improving the sound quality of music. But these two goals can be conflicting.

While hearing aids should provide an excellent listening experience for all situations, their priority is to maximize speech intelligibility. This means reducing background noise and highlighting speech signals. It is no coincidence that this is the purpose of SpeechMaster, which is a central part of the automatic Universal program designed to be used by the wearer throughout the majority of the day. The acoustic situation detector in the automatic Universal program is able to identify situations without speech sounds, such as nature sounds or music, and adjust the hearing aid settings to optimize the listening experience. However, as soon as speech is detected, priority is immediately given to highlighting speech again.

This solution is ideal for most hearing aid wearers, who value speech understanding over music appreciation in most situations. In unique cases where music enjoyment takes priority over conversation (e.g., at a concert), dedicated music processing is necessary to adjust hearing aid settings specifically for music input. This is important because, compared to speech, music has larger variations in signal frequency and level characteristics. In addition, the entire spectrum of music is important for a better sound impression, whereas for speech intelligibility only a limited frequency and dynamic range is relevant.

As a major improvement upon the industry benchmark binax music program (Várallyay et al., 2016), primax offers three different music programs. These dedicated music programs are designed to enrich the sound quality of music, whether the wearer is listening to recorded music, attending a live concert, or performing themselves. Designed to satisfy the serious music lover, these programs will also be appreciated by more casual listeners of music.

The Recorded Music program is meant for use at home or in other indoor spaces where loudspeakers play recorded music. In this program, TruEar is engaged to offer a natural, surround-sound effect. Frequency shaping optimized for music enjoyment (less emphasis on the speech range and less compression) is used to capture the wider frequency range of music. Noise reduction algorithms are disabled to avoid artifacts and to preserve sound quality. In this program, as in all three music programs, the fidelity of the music is also promoted by the hearing aids’ extended frequency range of up to 12,000 Hz.

The Live Music program should be activated when attending a concert – whether it’s Coldplay, a Mozart symphony, or anything in between. Because there are typically wider fluctuations in the loudness level at a live concert, including occasionally very loud sounds, this program offers an extended dynamic input range. Since the wearer would be in the audience facing the stage, directional microphones are activated to pick up the performance at the front rather than the crowd from behind. In addition, the Live Music program offers a music-optimized frequency shaping and deactivation of noise reduction.

Finally, the Musician program is dedicated to musicians who are playing a musical instrument or singing. Beyond professionals performing onstage, this program will also be appreciated by members of amateur bands or choirs. The Musician program shares some of the same characteristics as the Live Music program. It also has an extended dynamic input range to preserve the fidelity of very high input levels, as well as music-optimized frequency shaping and deactivated noise reduction. This program also focuses on preserving the natural dynamics of the music so that performing musicians can better gauge the loudness of their own instrument or voice in relation to the other musicians performing with them. TruEar mode is applied here as well, since the musician could be positioned anywhere on stage and needs a balanced sound impression from all directions.
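The three music programs differ along only a handful of settings, which is easier to see side by side. This sketch collects them into a small data structure; the `MusicProgram` type and its field names are hypothetical, and the values restate only what the descriptions above say. Where the article does not mention a setting for a program (the extended dynamic input range for Recorded Music), the field is left as `None` rather than guessed.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass(frozen=True)
class MusicProgram:
    name: str
    microphone_mode: str                  # "TruEar" or "directional"
    noise_reduction: bool                 # disabled in all three programs
    music_frequency_shaping: bool
    extended_input_range: Optional[bool]  # None = not stated in the article
    bandwidth_hz: int = 12_000            # extended range shared by all three


PROGRAMS = [
    MusicProgram("Recorded Music", "TruEar", False, True, None),
    MusicProgram("Live Music", "directional", False, True, True),
    MusicProgram("Musician", "TruEar", False, True, True),
]


def differences(a, b):
    """Names of settings that differ between two programs (name excluded)."""
    return [f.name for f in fields(MusicProgram)
            if f.name != "name" and getattr(a, f.name) != getattr(b, f.name)]


live, musician = PROGRAMS[1], PROGRAMS[2]
print(differences(live, musician))
# → ['microphone_mode']
```

Seen this way, Live Music and Musician share their stated settings except the microphone mode: directional for an audience member facing the stage, TruEar for a performer who needs sound from all directions.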

Summary

While improving speech understanding for wearers will always remain a priority for hearing aid technology, it is also important for hearing aids to reduce the amount of listening effort necessary for understanding to take place. The primax platform from Signia introduces several new features to reduce listening effort for the wearer throughout the day. SpeechMaster, part of the automatic Universal program, is a collection of automated features orchestrated to continuously highlight the target speech for the wearer in order to make listening as effortless as possible while maintaining spatial information. EchoShield is dedicated to reducing reverberation to preserve the clarity of speech. Both SpeechMaster and EchoShield have been clinically proven in objective studies of brain activity to reduce listening effort (Littmann et al., 2016). Finally, because enjoying music helps us relax and recharge our mental energy, the new HD Music programs are designed to optimize the sound quality of music in three distinct use cases. By keeping listening effort as low as possible and the sound quality of music as rich and full as possible, primax hearing aids help minimize listening fatigue, which leads to more hearing aid use, benefit, and satisfaction.

References

Best, V., Mejia, J., Freeston, K., van Hoesel, R., & Dillon, H. (2015). An evaluation of the performance of two binaural beamformers in complex and dynamic multitalker environments. International Journal of Audiology, 54(10), 727-35.

Brimijoin, W., Whitmer, W., McShefferty, D., & Akeroyd, M. (2014). The effect of hearing aid microphone mode on performance in an auditory orienting task. Ear & Hearing, 35(5), 201-12.

Cox, R. (1997). Administration and application of the APHAB. The Hearing Journal, 50(4), 32-35.

Freels, K., Pischel, C., Wilson, C., & Ramirez, P. (2015). New wireless, binaural processing reduces problems associated with wind noise in hearing aids. AudiologyOnline, Article 15453. Retrieved from https://www.audiologyonline.com.

Froehlich, M., & Powers, T. (2015). Improving speech recognition in noise using binaural beamforming in ITC and CIC hearing aids. Hearing Review, 22(12), 22.

Johnson, J., Cox, R., & Alexander, G. (2010). Development of APHAB norms for WDRC hearing aids and comparisons with original norms. Ear and Hearing, 31(1), 47-55.

Kamkar-Parsi, H., Fischer, E., & Aubreville, M. (2014). New binaural strategies for enhanced hearing. Hearing Review, 21(10), 42-45.

Littmann, V., Froehlich, M., Beilin, J., Branda, E., & Schaefer, P. (2016). Clinical studies show advanced hearing aid technology reduces listening effort. Hearing Review, 23(4), 36.

Littmann, V., Junius, D., & Branda, E. (2015). SpeechFocus: 360° in 10 questions. Hearing Review, 22(11), 38.

Mueller, H.G., Ricketts, T., & Bentler, R. (2014). Modern hearing aids: Pre-fitting testing and selection considerations. San Diego: Plural.

Picou, E., Aspell, E., & Ricketts, T. (2014). Potential benefits and limitations of three types of directional processing in hearing aids. Ear & Hearing, 35(3), 339-52.

Powers, T., & Froehlich, M. (2014). Clinical results with a new wireless binaural directional hearing system. Hearing Review, 21(11), 32-34.

Várallyay, G., Legarth, S.V., & Ramirez, T. (2016). Music lovers and hearing aids. AudiologyOnline, Article 16478. Retrieved from https://www.audiologyonline.com.

Citation

Herbig, R., & Froehlich, M. (2016, May). Reducing listening effort via primax hearing technology. AudiologyOnline, Article 17275. Retrieved from www.audiologyonline.com