From the Desk of Gus Mueller

It’s Monday morning, and time for another week of interesting hearing aid fittings. Your 8:00 patient is a 40-year-old male with a common bilateral, symmetrical, downward-sloping hearing loss, going from 25-30 dB in the lows and dropping to 65-75 dB in the 3000 to 6000 Hz range. Tympanometry and acoustic reflexes (500-2000 Hz) are normal. LDLs are 95 dB at 500 Hz and 105 dB at 3000 Hz. His QuickSIN scores are ~2-3 dB SNR-Loss bilaterally. Should be a pretty straightforward fitting.

Coincidentally, your 10:00 patient, an 80-year-old male, has very similar pure-tone thresholds, LDLs, and immittance findings. Audiometrically, the only difference that stands out is that his QuickSIN scores are ~7-9 dB SNR-Loss. So, do you program this fellow’s hearing aids to the same prescriptive gain as that of the 40-year-old? Same maximum output settings? What about directional processing? DNR? Same compression kneepoints, ratios, and time constants? All seem like pretty important things to think about. Fortunately, our 20Q guest author this month is here to help. Along with a couple of his colleagues, he recently reviewed over 200 articles on this very topic, in a review published in Frontiers in Neurology.
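For readers less familiar with the QuickSIN, here is a small Python sketch of how, as we understand the published scoring procedure, an SNR-Loss value like those above is derived and roughly interpreted. Each list has six sentences with five key words each, presented at SNRs from 25 to 0 dB in 5 dB steps, and the SNR loss for a list is 25.5 minus the total key words repeated correctly. The category ranges follow commonly cited interpretation guidelines (the handling of exact boundary values is our assumption), and the word counts in the example are hypothetical, chosen only to land near the two patients' scores.

```python
def quicksin_snr_loss(words_correct_per_list):
    """Mean QuickSIN SNR loss in dB across lists.

    Each list has 6 sentences x 5 key words (0-30 correct); the per-list
    SNR loss is 25.5 minus the number of key words repeated correctly."""
    losses = []
    for correct in words_correct_per_list:
        if not 0 <= correct <= 30:
            raise ValueError("a QuickSIN list has 30 key words")
        losses.append(25.5 - correct)
    return sum(losses) / len(losses)


def snr_loss_category(snr_loss_db):
    """Approximate interpretation ranges (boundary handling is an assumption)."""
    if snr_loss_db <= 3:
        return "normal/near-normal"
    if snr_loss_db <= 7:
        return "mild SNR loss"
    if snr_loss_db <= 15:
        return "moderate SNR loss"
    return "severe SNR loss"


# Hypothetical word counts for two lists per patient.
younger = quicksin_snr_loss([23, 23])   # 2.5 dB
older = quicksin_snr_loss([17, 18])     # 8.0 dB
print(f"40-year-old: {younger} dB ({snr_loss_category(younger)})")
print(f"80-year-old: {older} dB ({snr_loss_category(older)})")
```

On these assumptions, two patients with near-identical audiograms fall into different SNR-loss categories, which is the contrast the rest of this 20Q sets out to explain.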

Richard Windle, PhD, is a registered Clinical Scientist in Audiology, currently completing training to become a Consultant Clinical Scientist in the English National Health Service (NHS), for which this work supports a Doctorate in Clinical Science. He is based at Kingston Hospital NHS Foundation Trust, London, UK, where he is Audiology Team Leader, heading the vestibular assessment and rehabilitation service. He is active in direct patient care, working with adult hearing assessment and rehabilitation, and pediatric hearing assessment.

Dr. Windle previously was Senior Clinical Scientist at the Royal Berkshire NHS Foundation Trust, Reading, UK, where much of the work he will be discussing with us was conducted. He presently serves as Chair of the Professional Guidance Group (PGG) of the British Society of Audiology (BSA), the group responsible for the overall portfolio of BSA guidance documents.

Going back to our Monday morning patients . . . there really are two issues to consider here. First, from a physiologic standpoint, when we compare a hard-of-hearing 40-year-old male with an 80-year-old male who has very similar pure-tone thresholds, what differences in auditory processing would we expect, and what about differences in the processing of speech due to probable cognitive decline? If we conclude that yes, there are differences, then the next question is: do we program hearing aids differently for these two individuals?

There is a lot to cover in one 20Q, so Richard will primarily address the auditory processing issue in this installment, and has agreed to return next month to deal more specifically with some suggestions for the hearing aid programming portion of this interesting topic.

Gus Mueller, PhD

Contributing Editor

Browse the complete collection of 20Q with Gus Mueller CEU articles at www.audiologyonline.com/20Q

20Q: Changes to Auditory Processing and Cognition During Normal Aging – Should it Affect Hearing Aid Programming? Part 1 – Changes Associated with Normal Aging

Learning Outcomes

After reading this article, professionals will be able to:

- Describe the processes of normal aging and how they affect auditory processing.

- Identify other elements of cognition that support speech perception and how these change during normal aging.

- Describe the overall effect of changes to cognition and auditory processing on speech perception in adults as they age.

1. Auditory processing and cognition sounds like something that might be complicated. Can you first give me a brief overview of how this might affect hearing aid fitting?

The combined effects of a decline in peripheral hearing, auditory processing, and some other elements of cognition with age can seem complicated, but there are some straightforward things we can do to modify our approach to fitting hearing aids. If you were to search the peer-reviewed literature using the terms “aging” and “hearing,” you would end up with thousands of papers that cover diverse subjects around peripheral hearing and central processing. The aim of the review paper that we recently published on this subject (Windle et al., 2023) was to pull all of this together and to determine which aspects of it are relevant to the hearing aid fitting process. I won’t toss out too many references during our conversation, but the original paper is open-access, so you can find more detail there (https://doi.org/10.3389/fneur.2023.1122420). Before I go on, I’d like to acknowledge Harvey Dillon and Antje Henrich as co-authors of the original paper, and to thank Harvey for his comments on some of your questions.

There are a lot of things to talk about, and we may not get to all of them in 20 Questions, so we might need to have another meeting. For starters, let’s look at what happens as we age, and how this affects auditory processing and other elements of cognition that support listening. We might have to save the details of what implications this has for hearing aid fitting for another day.

Briefly stated, the relationship to hearing aid fitting goes like this. In general, older adults with reduced processing speed and capacity will find it harder to make use of detailed auditory information and to cope with any distortion introduced by hearing aids. For some older adults, fast-acting compression with high compression ratios will create too much distortion and may undermine listening ability, so we should consider slow-acting compression as a default. Likewise, other hearing aid features (e.g., noise reduction, frequency compression) can also add distortion, so we need to consider each of these. We can’t simply predict which patients will need these adjustments, so it’s important to understand that hearing aid verification (with real ear measurements) is not sufficient to determine all of the hearing aid parameters we need to set. Proper validation is always required to assess an individual’s preferences and outcomes.
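To make the compression point more concrete, here is a minimal Python sketch of a single-channel wide dynamic range compressor (WDRC) applied to a speech-like level envelope rather than to audio samples. All parameters (30 dB gain, a 50 dB SPL kneepoint, a 3:1 ratio, the attack and release times, and the 60/80 dB test envelope) are hypothetical values chosen for illustration, and the one-pole level detector is a textbook simplification, not any manufacturer's implementation. It shows one way fast-acting compression alters speech: with short time constants the gain follows each "syllable", shrinking a 20 dB envelope swing toward 20/3 dB, whereas a long release holds the gain nearly constant and largely preserves the swing.

```python
import math

# Hypothetical fitting parameters, chosen only for illustration.
GAIN_DB = 30.0       # gain applied at and below the kneepoint
KNEEPOINT_DB = 50.0  # input level (dB SPL) where compression starts
FS_HZ = 1000.0       # sample rate of the level envelope, not of the audio


def static_output_db(input_db, ratio=3.0):
    """Static input/output rule of a single-channel WDRC compressor.

    Below the kneepoint the gain is constant (linear); above it, every
    `ratio` dB of input change produces only 1 dB of output change."""
    if input_db <= KNEEPOINT_DB:
        return input_db + GAIN_DB
    return KNEEPOINT_DB + GAIN_DB + (input_db - KNEEPOINT_DB) / ratio


def compress(levels_db, attack_ms, release_ms, ratio=3.0):
    """Apply compression to a level envelope (one value per sample, in dB).

    A one-pole level detector follows rising levels with the attack time
    constant and falling levels with the release time constant; the gain
    for the detected level is then applied to the instantaneous level."""
    a_att = math.exp(-1.0 / (attack_ms * 1e-3 * FS_HZ))
    a_rel = math.exp(-1.0 / (release_ms * 1e-3 * FS_HZ))
    detected = levels_db[0]
    out = []
    for level in levels_db:
        a = a_att if level > detected else a_rel
        detected = a * detected + (1.0 - a) * level
        gain = static_output_db(detected, ratio) - detected
        out.append(level + gain)
    return out


# Input: a 5 Hz "syllabic" envelope alternating between 60 and 80 dB SPL
# every 100 ms for 2 s, i.e., a 20 dB envelope swing.
envelope = [80.0 if (i // 100) % 2 else 60.0 for i in range(2000)]


def output_depth_db(attack_ms, release_ms, ratio=3.0):
    """Output level difference between the ends of the last loud and soft segments."""
    out = compress(envelope, attack_ms, release_ms, ratio)
    return out[1999] - out[1899]


fast = output_depth_db(attack_ms=5, release_ms=20)    # fast-acting (syllabic)
slow = output_depth_db(attack_ms=5, release_ms=2000)  # slow-acting
print(f"input swing 20.0 dB | fast-acting: {fast:.1f} dB | slow-acting: {slow:.1f} dB")
```

In this toy case the fast setting leaves roughly a third of the original 20 dB swing, close to what the static 3:1 rule predicts, while the slow setting keeps nearly all of it; raising the ratio would flatten the fast-acting result further.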

2. I’ll look forward to hearing more details on this. But I have to ask, why did you do this research?

If you work with older adults, it quickly becomes apparent that many people struggle with hearing aids for multiple reasons. A public health system provides good access to hearing aids, but far too many of them are under-used or not used at all (Dillon et al., 2020). The problems described by older adults also seem to be more closely associated with age (or, more likely, with underlying changes in auditory processing and cognition) than with the degree of hearing loss (Windle, 2022). I came to believe that we could be doing much more to help older adults, both with hearing aids and through other modifications to the environment, lifestyle, or understanding. Sitting in follow-up clinics for complex patients, I also realized that the way we program hearing aids can sometimes be unhelpful. For example, adhering to gain prescriptions can result in a high WDRC ratio, and it is sometimes surprising to observe the positive effect that simply reducing the compression ratio, or changing the compression speed, can have on an individual. Ultimately, I believed that this was an important subject because the vast majority of patients seen for audiologic appointments are older adults; nearly 9 in 10 adults we fit with hearing aids are over 55 (Windle, 2022). The high prevalence of hearing loss in older adults often leads to the assumption that they are, somehow, “standard” patients. However, the combination of a decline in peripheral hearing, auditory processing, and cognition can make some older adults the most complex patients we see. As I mentioned earlier, the literature in this area can be overwhelming and, sometimes, inconsistent. I therefore set out to make sense of it all and to boil it down into some practical guidance that would be helpful to audiologists in the clinic and, above all, of benefit to the majority of our patients.

3. So, when you talk about cognitive change with age, aren’t you simply referring to dementia?

No, definitely not! This is a common misapprehension, and in a survey of audiologists, we found that the term “cognitive change” seemed to be inextricably linked to ideas about “cognitive decline” and “dementia.” While these are obviously important subjects, the majority of patients we see in audiology clinics are “normally-aging” older adults. By this, I mean older adults without any form of diagnosable mild cognitive impairment or dementia.

During normal aging, some aspects of cognitive ability decline throughout adult life, and some improve. Our auditory processing abilities also decline. Older adults, even with perfectly normal peripheral hearing, will often still struggle to hear in difficult environments because of the demands these environments place on processing and cognition. Many of us should expect to struggle at some point with noisy places, fast speech, and unfamiliar accents as we get older, even if our ears remain in the same perfect condition as our teenage selves. Having said that, and getting back to your question, everything we discuss later about setting hearing aids will be particularly applicable to those with dementia.

4. What do you mean by “cognition” as opposed to “auditory processing”?

Let’s start with a few definitions.

“Auditory processing” refers to the analysis of sound, determining its nature and location, separating sounds, and starting the process of forming auditory objects, which have different auditory profiles and other associations. For example, two different people will have different voices and also other personal characteristics that are defined in the mind of the listener as separate “objects”.

“Cognition” refers to the processes of acquiring, retaining and using knowledge and has many constituent elements, including reasoning, memory, speed, knowledge, reading, writing, maths, sensory and motor abilities. Auditory processing can be defined as one “narrow” element of overall cognition that is specific to the analysis of sound. Other “narrow” elements of cognition may be specific to other tasks, but some of these are necessary to develop understanding from the sound. Multiple pathways in the brain support a hierarchical process of word recognition, integration into phrases and sentences, syntax (grammar), and semantics (meaning).

In simple terms, you can think of the auditory system as the process of separating and identifying sounds before other cognitive processes develop meaning and understanding. However, these systems are interconnected via “bottom-up” and “top-down” processes. In case you haven’t thought about this complex processing for a while, here is a brief description:

- Bottom-up processing: takes in sound at the periphery (ears), turns it into nerve signals, tidies up the data, separates and identifies sounds, engaging speech recognition as a special system which can then interpret and, finally, understand speech “up” in the brain.

- Top-down processing: is dependent on the allocation of attention. If we decide to focus on one person’s voice, this will feed back “down” to the processing centers of the auditory system to process one sound in preference to another.

5. Can you give me a simple overview of the auditory processing system?

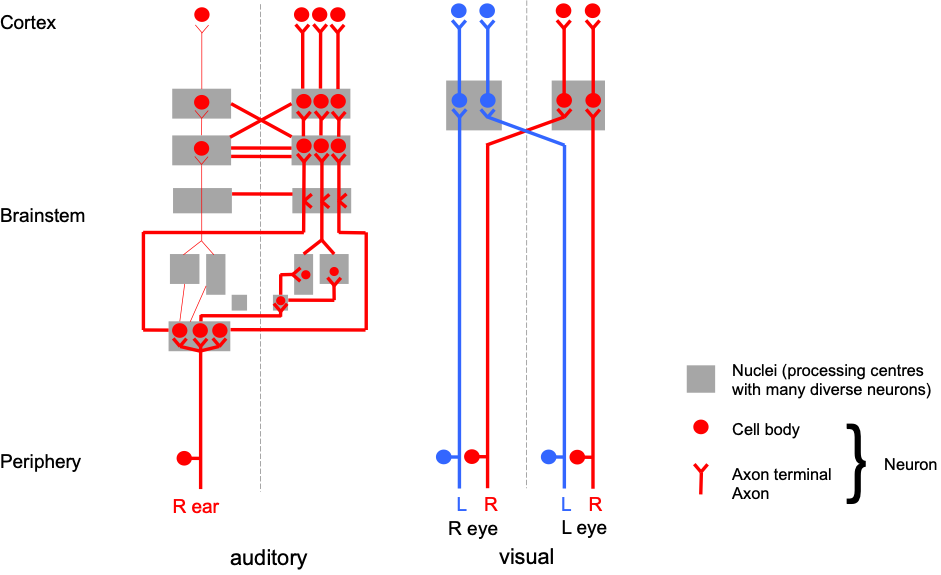

That’s a tough one, largely because we should first recognize the unusual complexity and speed of the auditory system. If we were to compare a “wiring diagram” of hearing and vision, the difference would appear quite stark (see Figure 1). A diagram of the visual system shows a couple of “wires” from the eyes, splitting in the middle to separate right and left visual fields, before entering the visual cortex. The auditory system would appear like a tangled plate of spaghetti by comparison, with multiple processing centers, interconnecting from left to right in several places, as well as interconnections with other sensory systems. There are also many descending auditory connections from the cortex down to all of the auditory nuclei (not shown in Figure 1) that go all the way down to the control of outer hair cells.

The auditory system is also much faster, reflecting the need for accurate timing information. Timing (or temporal) information is a key aspect of the processing of speech. Different parts of the auditory system are “tuned” to different elements of speech. For example, some parts are tuned to the variation in amplitude within frequency bands (or modulation), and others are phase-locked to the finer details of speech. Accurate “neural synchronization” across the system is also important, because it enables binaural processing. We should also recognize that age-related hearing loss introduces a degraded input to the auditory system.

Figure 1. A comparison of the complexity of the ascending auditory system “wiring diagram” (simplified) with that of the visual system, up to the auditory/visual cortex. Only ascending connections from one ear are shown as the diagram of the auditory system would get too complex with both ears and descending connections. Nerves (neurons) connect processing centers (nuclei) prior to presentation of information to the brain.

6. What do you mean by a “degraded input” to the auditory system?

The purpose of our discussion isn’t really to talk about the age-related decline of the inner ear; I assume we’re all familiar with that. However, it’s important to point out that the loss of cochlear hair cells doesn’t just reduce our sensitivity to sound (raising our hearing thresholds) but also reduces the accuracy of tuning to specific frequencies, so that it’s harder to tell closely spaced frequencies apart and to distinguish speech sounds, including vowels. A loss of the connections between hair cells and the auditory nerve (i.e., synapses), often called “hidden hearing loss”, further reduces the fidelity of the neural signals representing the response of the cochlea to different levels of input. While hearing aids may address the loss of sensitivity, they do not directly address these other aspects of peripheral hearing loss. Likewise, we should recognize that a pure-tone audiogram only tells us about hearing sensitivity; it tells us nothing about these other processes, nor does it give any relevant information about central auditory processing or cognition.

7. That all makes sense. So, what happens to the auditory processing system as we age?

It should be no surprise that a complex system like auditory processing requires a great deal of metabolic energy and is prone to decline with age. Changes to the underlying neural infrastructure cause progressive “central” auditory deficits. As “lower” and “higher” level processes are interrelated, the effects of peripheral and central decline can be difficult to dissociate. However, in general, there is a reduction in the density of connections in the brainstem and cortical structures involved in auditory processing that will impair synchronization and timing.

8. How do these changes to the auditory system affect our hearing ability?

Now we have to be careful what we mean by “hearing”. When conducting a routine hearing test, a simple sound has to register its presence in our brain and prompt a response. This does not depend on the “quality” of the sound reaching the brain, so it has little relevance to the intelligibility of a complex signal like speech, even though we’ve “heard it”. It’s better to talk in terms of speech intelligibility, understanding, or listening ability, which also depend on the allocation of cognitive resources. A simple example of this is that it’s harder to listen when you’re tired or just not concentrating.

The number of interconnections between neurons in the brainstem, including in all of the auditory processing centers (see Figure 1), reduces with age. This undermines the accurate representation of timing information and has a wide array of effects. For example, gap detection is impaired, which makes it harder to separate words in sentences and also to discriminate between different words. Consider the words “dish” and “ditch”. These can be thought of as largely the same “di” and “sh” sounds separated by a gap; “dish” has a short gap and “ditch” has a longer gap. If you vary the length of the gap, younger people need only a small increase in its length for the word to change from “dish” to “ditch”, whereas older people with comparable hearing need the gap to be longer before their perception changes to “ditch” (Gordon-Salant et al., 2008).

9. Do you have other examples of changes to auditory processing? I’m guessing that there are many.

Yes, you’re right, there are many effects on the auditory system. Normal aging will affect the parts of the auditory system that are employed in what might be characterised as “data enhancement”. For example, the system “sharpens” tuning to specific frequencies and to the overall change in amplitude or pitch (i.e., amplitude and frequency modulation). Decline in these structures reduces our ability to discriminate pitch, or overall “spectral processing”, which further undermines our ability to discriminate between vowels, impacting our ability to understand speech even in quiet. “Top-down” connections are also affected. These enable our auditory system to preferentially process one element of sound (e.g., one person speaking) relative to other sounds (e.g., non-target speakers or background noise). Downward (or “efferent”) connections reach all the way down to control the “gain” of outer hair cells, so their decline effectively reduces the range of amplitudes we are able to distinguish and cope with. Reduced timing accuracy also affects our ability to combine the inputs from both ears; in other words, it impairs our binaural processing. This affects our ability to localize sounds. Location is an important aspect of being able to separate and form auditory objects, so this decline also undermines our ability to pay attention to a target speaker and to process speech in noise. Overall, these changes alter our relative dependence on different cues within speech as we age.
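To give a sense of the timing precision that binaural localization relies on, the Python sketch below uses Woodworth's classic spherical-head approximation for the interaural time difference (ITD), ITD = (r/c)(θ + sin θ), where θ is the source azimuth. This formula is not from the original article, and the head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values. Even a source directly to one side produces an ITD of only about two-thirds of a millisecond, and a few degrees near the midline corresponds to just tens of microseconds, which suggests why small losses of neural timing accuracy could blur these cues.

```python
import math

HEAD_RADIUS_M = 0.0875       # assumed typical adult head radius
SPEED_OF_SOUND_M_S = 343.0   # in air at roughly 20 degrees C


def woodworth_itd_us(azimuth_deg):
    """Interaural time difference (microseconds) for a distant source,
    using Woodworth's spherical-head approximation ITD = (r/c)(theta + sin theta).
    Valid here for azimuths from 0 (straight ahead) to 90 degrees (to one side)."""
    if not 0 <= azimuth_deg <= 90:
        raise ValueError("azimuth must be between 0 and 90 degrees")
    theta = math.radians(azimuth_deg)
    return 1e6 * HEAD_RADIUS_M / SPEED_OF_SOUND_M_S * (theta + math.sin(theta))


for azimuth in (0, 5, 10, 45, 90):
    print(f"{azimuth:>2} deg -> {woodworth_itd_us(azimuth):6.1f} us")
```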

10. It’s been a while since my hearing science course. I think I need a refresher on “speech cues”. What are they, and why are they important?

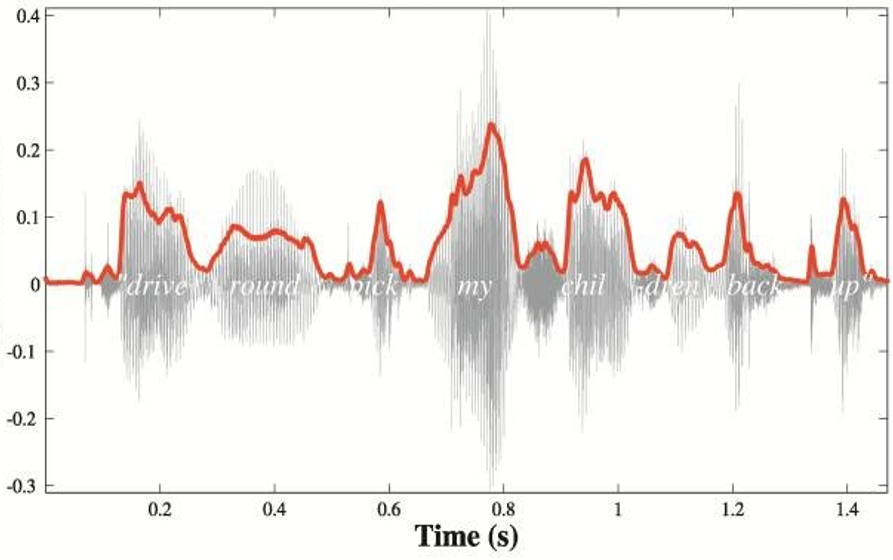

It’s really important for us clinicians to understand the different speech cues in order to be able to consider the potentially detrimental impact that hearing aids can have on speech intelligibility for some people. I will be using this terminology a lot when we discuss hearing aid processing later. A basic framework for describing the timing (or “temporal”) information in speech was developed by Rosen (1992). This separates the temporal information in speech into three main components and helps us to grasp how hearing aids might affect the speech signal in different ways. In reality, of course, auditory processing is more complicated than this, including the use of interactions between the different elements of speech cues. Nevertheless, the three main components of temporal information described by Rosen (1992) are:

- The speech envelope (often given the acronym ENV) or “amplitude envelope”, which is the “shape” of the speech amplitude in a frequency band. ENV is essentially the amplitude modulation of the speech signal within each frequency band, and this information is mostly carried by fluctuation rates below 50 Hz.

- Periodicity, which mainly determines the difference between voiced (periodic) sounds and unvoiced (aperiodic) sounds. For example, vowels (voiced) have a repeating pattern in the time domain, whereas fricatives, such as “sh”, have an irregular pattern. In the frequency domain, periodic sounds contain discrete frequencies (the fundamental frequency plus harmonics), whereas aperiodic sounds have a continuous spectrum, more like a narrow-band or wide-band noise. The fluctuation rates associated with periodicity fall mainly between 50 and 500 Hz.

- Temporal fine structure (TFS), which is the detail in speech “underneath the envelope”, primarily in the frequency band above 500 Hz, providing detailed frequency and phase information, important for the perception of sound quality, or timbre.

ENV and TFS are key cues forming the basis of speech perception, frequency perception, and localization (see Figure 2). ENV is the most important cue for understanding speech in quiet, and we can make sense of speech in these situations with remarkably little frequency detail. This was tested using “vocoder” experiments in which ENV remained the same within a number of frequency bands, but the detail of speech was replaced with band-filtered noise. Speech remained understandable with as few as three frequency bands in quiet situations (Shannon et al., 1995). Our ability to make use of ENV with little TFS is also evidenced by the success of cochlear implants, which provide a grossly reduced representation of frequency detail because they are limited by the number of electrodes on the implant. However, the same is not true when we try to listen to speech in a noisy background. In this case, the fine detail is needed. Adding TFS back to a signal progressively improves speech-in-noise performance. It also supports binaural localization, separation of competing sounds, perception of pitch and music, and perception of motion. For most of us with sufficient hearing, it’s not a simple case of making use of one or the other, ENV or TFS, to understand speech; they provide redundant cues that we can use to enhance intelligibility across different situations. In fact, the two are not entirely independent, and we could reconstruct ENV if we had access to the full details provided by TFS.

Figure 2. The two main speech cues: the speech envelope (ENV) and temporal fine structure (TFS). The diagram shows a speech envelope across all frequencies for illustration. In reality, the speech envelope is processed for each frequency band.
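The ENV/TFS split is commonly computed with the Hilbert transform: the magnitude of the analytic signal gives the envelope, and the cosine of its phase gives the fine structure. The Python sketch below (standard library only, using a deliberately slow textbook DFT for clarity) applies this to a single band, then mimics the core idea of the vocoder experiments by keeping a signal's ENV while substituting the TFS of a band of noise. It is a simplified single-band illustration under assumed parameters (8 kHz sample rate, a 1000 Hz tone amplitude-modulated at 15.625 Hz, noise limited to roughly 750-1250 Hz); real vocoders, such as those used by Shannon et al. (1995), split speech into several filtered bands and typically low-pass filter the envelopes.

```python
import cmath
import math
import random


def dft(x, inverse=False):
    """Textbook O(n^2) discrete Fourier transform (slow, but needs no libraries)."""
    n = len(x)
    sign = 1.0 if inverse else -1.0
    twiddle = [cmath.exp(sign * 2j * math.pi * j / n) for j in range(n)]
    out = [sum(x[m] * twiddle[(k * m) % n] for m in range(n)) for k in range(n)]
    return [v / n for v in out] if inverse else out


def analytic_signal(x):
    """Analytic signal via the frequency-domain Hilbert method: keep DC (and
    Nyquist for even n), double positive frequencies, zero negative ones."""
    n = len(x)
    weights = [0.0] * n
    weights[0] = 1.0
    for k in range(1, (n + 1) // 2):
        weights[k] = 2.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
    spectrum = dft(x)
    return dft([s * w for s, w in zip(spectrum, weights)], inverse=True)


def env_and_tfs(x):
    """Split a single-band signal into its Hilbert envelope (ENV) and
    temporal fine structure (TFS = cosine of the instantaneous phase)."""
    z = analytic_signal(x)
    return [abs(v) for v in z], [math.cos(cmath.phase(v)) for v in z]


def noise_band(n, lo_bin, hi_bin, seed=0):
    """Gaussian noise limited to DFT bins lo_bin..hi_bin (a crude band-pass filter)."""
    rng = random.Random(seed)
    spectrum = dft([rng.gauss(0.0, 1.0) for _ in range(n)])
    kept = [s if (lo_bin <= k <= hi_bin or n - hi_bin <= k <= n - lo_bin) else 0
            for k, s in enumerate(spectrum)]
    return [v.real for v in dft(kept, inverse=True)]


def vocode_band(x, lo_bin, hi_bin):
    """Keep the ENV of x but replace its TFS with the TFS of a noise band."""
    env, _ = env_and_tfs(x)
    _, noise_tfs = env_and_tfs(noise_band(len(x), lo_bin, hi_bin))
    return [e * f for e, f in zip(env, noise_tfs)]


FS = 8000                             # assumed sample rate (Hz)
N = 512                               # 64 ms; one DFT bin = FS / N = 15.625 Hz
carrier_hz, mod_hz = 1000.0, 15.625   # exactly 64 and 1 cycles per window
t = [i / FS for i in range(N)]
x = [(1 + 0.5 * math.cos(2 * math.pi * mod_hz * ti))
     * math.cos(2 * math.pi * carrier_hz * ti) for ti in t]

env, tfs = env_and_tfs(x)             # ENV follows the 15.625 Hz modulation
vocoded = vocode_band(x, 48, 80)      # noise band of roughly 750-1250 Hz
```

Because the test tone's modulation rate is well below 50 Hz, the vocoded output keeps its ENV, while the regular 1000 Hz fine structure is replaced by irregular noise; in a speech signal, that discarded TFS is the detail the text describes as needed for listening in noise.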

11. When do the changes to the auditory system that you’ve been talking about start to happen? Is it only apparent in the very elderly?

This is not something that suddenly occurs in old age, but a gradual decline throughout our adult years (Moore, 2021). If we were to take a group of younger and older people, with matched normal hearing, intelligence, and education, we’d find that speech perception declines with age. If we assessed the whole population, we’d also find that the decline in speech perception is greater than can be predicted solely by the degree of hearing loss (Füllgrabe et al., 2014).

However, looking at age-related changes to auditory processing in greater detail reveals a more complex picture. Let’s first consider the simpler situation of processing sound from one ear, i.e., monaural listening. In this case, our sensitivity to TFS declines steadily from early adulthood and throughout adult life, but processing of ENV is less affected. Processing sound from both ears together, i.e., binaural processing, declines with age from early to late adult life for both ENV and TFS. Introducing hearing loss into the equation further complicates things. Declining TFS sensitivity has some association with the degree of hearing loss, so those with greater hearing impairment also struggle to make use of TFS. Conversely, the association between ENV processing and the degree of hearing loss is weak.

We’ll also find a similar answer when we start to consider changes to cognition with age, i.e., some other elements of cognition also decline steadily throughout our adult lives.

12. If we understand what happens to auditory processing, why do we also need to think about cognition?

Peripheral hearing and auditory processing are only part of the system that develops understanding from speech sounds. As I described earlier, we can think of the auditory system as processing sound, separating different sources of speech and noise, for presentation from the auditory cortex to the rest of the brain, to then develop meaning. This might be an oversimplification, but it suffices for our later discussion to translate the effects of aging into practical considerations for hearing aid fitting.

At some point, the presence of speech has to be recognized then separated from other sounds, and the relevant sounds have to be turned into phonemes and words. Words have to be matched to meaning, set within a phrase or sentence with a defined structure, and within the context of a discussion. This all has to happen fast because of the continuous stream of speech directed at us, and then we might also have to switch attention to another speaker who might use the same words but pronounce them differently or even change subject. In short, once we’ve processed sounds in the auditory system, there’s a whole lot more to do before we can consciously understand speech, and this uses different cognitive processes.

13. What are some of the different cognitive processes that are needed to understand speech?

Let’s start with an obvious example. We wouldn’t be able to understand speech at all if we had no memory. By the time you got to the end of listening to this sentence, you wouldn’t be able to make meaning of it if you couldn’t remember the words, or their meaning, at the start of the sentence. When listening, you also have to have some form of template of words to match the sounds to meaning (or “semantics”) and a stored expectation of which order the words should come in (i.e., grammar or “syntax”). Different people will pronounce words in different ways, so it takes more effort to process a word in an unfamiliar accent and to make sense of a phrase if the words are in an unexpected order. We also have to resolve any gaps or conflicts in speech, e.g., if a word is misspoken or missed. As words are presented to us in a continuous stream, this all has to happen in parallel and then be combined to generate understanding, and it has to happen very quickly because we cannot stop to think about it as the speaker continues talking. The faster somebody is speaking, the harder this will be. We also need to continuously decide where we allocate our attention; for example, to switch between different speakers in a conversation or to ignore other sources of speech or noise. The allocation of attention then needs to feed back and control the auditory processing centers so that they can preferentially process the sounds we want to focus on. If these processes can’t keep up, we will fail to understand and may simply give up.

14. Can you be more specific about the elements of cognition that support speech processing?

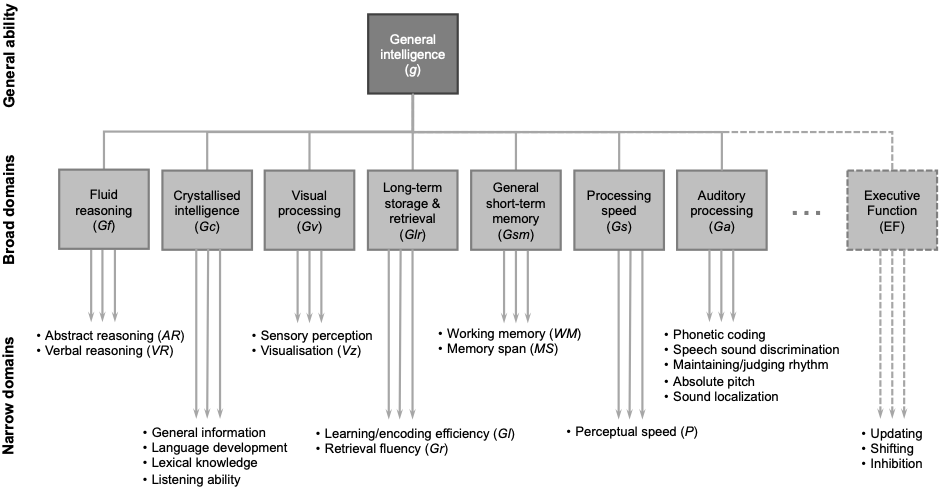

Yes, although this is where things get a bit more technical. We should first define different elements of cognitive ability. We can do this using the well-established Cattell-Horn-Carroll (CHC) model (Figure 3), which describes a three-level hierarchy:

- General intelligence, which is not much use as a measure because different abilities decline or improve as we age;

- Broad domains, basic abilities that manage a range of behaviors;

- Narrow domains, which are highly specialized and may be specific to certain tasks.

We are interested in the narrow domains because these may or may not be related to understanding speech, and they may be specifically tested in different situations and related to speech intelligibility tasks. Central auditory processing can be considered as one broad domain of cognition with many specific narrow domains, but these are entirely focused on the processing of sound. Some of the other (not specifically auditory) narrow domains of cognition are also important for understanding speech, as I mentioned earlier.

There are many more broad and narrow domains of cognitive ability than are shown in Figure 3, and not all of the domains are agreed upon. In particular, executive function is not usually included in the CHC model, and its definition is not universally agreed upon. I include it here because it includes some elements that are often associated with speech understanding.

Figure 3. A partial view of the Cattell-Horn-Carroll (CHC) model, describing the domains of cognitive abilities, reproduced from Windle et al. (2023).

It is not possible to define every narrow domain of cognitive ability that contributes to speech perception. Associations between different narrow domains and tests of speech intelligibility also vary in research because different cognitive tests are used, as well as different listening situations and test stimuli. On the whole, a stronger relationship is usually found with more difficult listening tasks, likely because it is the more difficult tasks that require a greater degree of effort and use of narrow cognitive domains other than auditory processing. Nevertheless, a meta-analysis (Dryden et al., 2017) found that processing speed (Gs in Figure 3), inhibition (part of executive function, EF), working memory (WM), and episodic memory (part of learning/encoding efficiency, Gl) were the domains most commonly correlated with speech-in-noise tasks.

As I said, these are not the only domains associated with listening. Crystallized intelligence (Gc) includes important elements, such as the repositories of lexical information and syntax. Our auditory system is also connected to other systems, such as visual processing (Gv). As audiologists, we all know that lip-reading is often important to those with a hearing impairment. This is something “hard-wired” into all of us, as is demonstrated by the McGurk effect. This is when visual information “overrides” auditory information, so that we think we hear the speech sound suggested by the speaker’s mouth movements, even when a different sound is presented. This is sometimes described as a “mistake” in the auditory system. However, I’d rather think of it as using multiple sensory inputs to best determine speech, which we all make use of in difficult situations such as speech in noise.

15. Can you describe a little more how each of these narrow cognitive domains relate to speech perception?

Sure, let’s start with memory. It should be clear that “memory” is a very broad word that covers lots of different narrow domains and doesn’t have much use as a general term. Different types of memory change in different ways with age. Working memory (WM) is one of the most researched areas in speech processing. It relates to the storage and processing of information used solely for a current task, most obviously many of the elements of speech processing we’ve already discussed. We can use the analogy of random access memory (RAM) in a computer, which holds the software that you’re currently using. If you open up too many pieces of software, RAM fills up, and your computer dramatically slows down or crashes. Likewise, our WM is limited in capacity and can become cluttered. Sadly, unlike a computer, we can’t buy more. Episodic memory is part of long-term memory and relates to the recollection of personal experiences, including the context of an event, its details, and associated feelings. This includes, for example, stored representations of words in a specific individual’s voice. This enables us to process words more efficiently and is why it is part of the CHC definition of learning and encoding efficiency (Gl).

Inhibition is part of executive function (EF). Definitions of EF can vary but, in general terms, it can be thought of as the management of cognitive functions, planning, and determining where we focus our attention. Inhibition is an important element of EF because it determines our ability to suppress information that may be distracting or not relevant to our primary task. Most obviously, this relates to our ability to attend to a stream of speech while ignoring other sounds. Finally, I hope that the need for processing speed (Gs) is more obvious. If things don’t happen fast enough, or we can’t suppress competing information, understanding tasks will fail, WM will fill up, and we will cease to be able to process speech.

16. I assume that all the domains of cognitive function get worse as we age?

That would seem logical, but it is not quite the way it works. Some elements of cognition decline with age, but others remain stable or improve. Unfortunately, the domains that decline with age include many of the narrow domains of cognitive ability used for speech: working memory capacity and processing decline; inhibition becomes less effective; processing speed slows; and episodic memory declines more with age than semantic memory (recalling facts) and procedural memory (unconscious performance of tasks). The underlying cause is much the same as that for auditory processing: a generalized, gradual reduction in the number of interconnections between neurons.

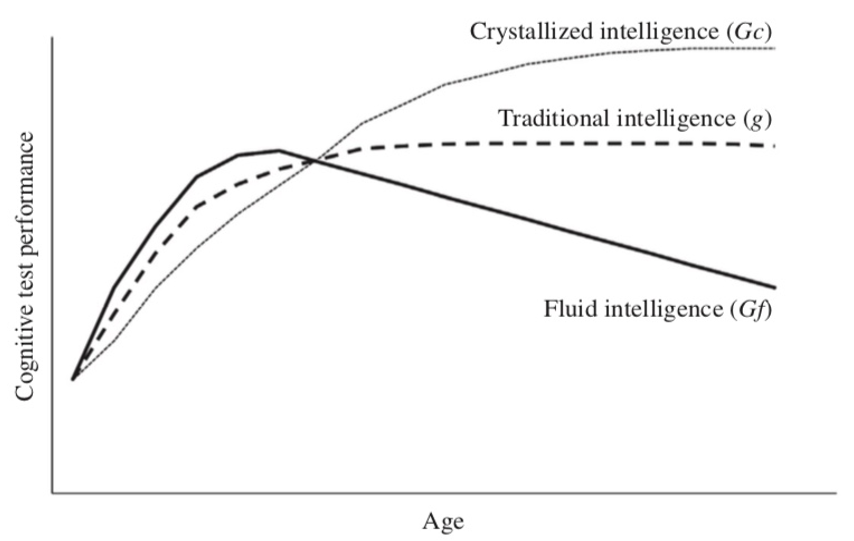

To look at the effects of aging, we can simplify the CHC model (Figure 3) because age-related changes apply to groups of narrow domains in different, but fairly consistent, ways. We can group all of the narrow domains under two basic concepts:

- “fluid intelligence”, the speed and ability to resolve problems in novel situations, including WM, processing speed, inhibition, and episodic memory;

- “crystallized intelligence”, an accumulation and use of skills, knowledge and experience, including storage of lexical information and syntax.

Fluid intelligence generally peaks at about 20 years of age and declines at a consistent rate throughout adult life (Figure 4), which is a similar pattern to the one I described for auditory processing. Crystallized intelligence, by contrast, improves with age. The two types of intelligence tend to “cancel out,” so an individual’s overall performance in psychometric tests of intelligence (“traditional intelligence”, g) remains largely stable for most of adult life. Put another way, if I were able to compete against my younger self, I might end up with the same test score, but my younger self would have achieved it using raw speed and power, whereas my older self can call on conscious knowledge and unconscious “tips and tricks”. It is important to note, however, that the rate of change varies between individuals, so the range of abilities within a group gets larger with increasing age. This means that age alone is not a very good indicator of any specific domain of cognition. Nevertheless, the narrow cognitive domains that relate to speech perception in challenging circumstances will all follow the same pattern of decline as overall fluid intelligence. This also highlights that an overall score for general cognitive ability is of no use in determining the likely impact on speech perception.

Figure 4. The general pattern of change in cognitive abilities throughout adult life. All domains improve during childhood, up to about 20 years of age, after which crystallized intelligence (Gc) continues to increase but fluid intelligence (Gf) declines, resulting in stable traditional intelligence. Reproduced with permission from Fisher et al. (2019).

17. How do changes to cognition affect our listening ability as we age?

Changes to different domains of cognitive ability will have different effects on speech processing in different situations, but they will be especially apparent in difficult listening situations. It isn’t yet possible to map all of the narrow cognitive domains to a full list of situations. However, we can say that these changes cause problems with fast speech, unfamiliar accents, the ability to integrate multisensory inputs like vision and hearing (although combining them is still helpful), and, in particular, multiple speakers and speech in noise. The more complex and speech-like the background noise is, the harder it will be to disentangle the target speaker’s speech from it. For example, people typically perform better when the background noise is a steady sound, like white noise, than when it is multi-talker babble.

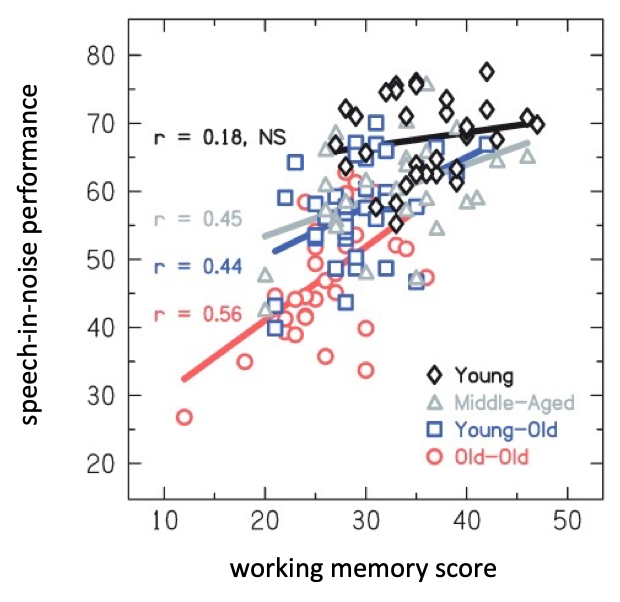

A good example of this is the comparison of working memory with speech-in-noise (SIN) performance undertaken by Fullgrabe and Rosen (2016) (Figure 5). Overall, there appears to be a clear relationship between SIN performance and working memory. Notably, the relationship becomes stronger with the increasing age of participants; so, as fluid cognition declines, working memory becomes more predictive of the variation in SIN ability.

Figure 5. The relationship between working memory and speech in noise performance for four age groups, reproduced with permission from Fullgrabe and Rosen (2016); r = correlation coefficient, NS = not significant.

18. I’ve heard quite a bit about “listening effort”. I’ve even seen ads that some hearing aids “reduce listening effort.” How does that fit into this model?

The Framework for Understanding Effortful Listening (FUEL) (Pichora-Fuller et al., 2016) is a useful model that defines “listening effort” as the allocation of cognitive resources to overcome obstacles to listening, so it has obvious relevance to our consideration of cognition and speech perception. The resources available for listening effort are finite, and we expend them at different rates depending on the difficulty of a task. All of the age-related changes to peripheral hearing, auditory processing, and cognition described above will increase the amount of listening effort required. Older adults may therefore encounter more situations in which their capacity for listening effort is exhausted, and they may simply give up or withdraw. This becomes more important when we consider hearing aids because, whatever else we do, we should also aim to minimize (or at least not increase) listening effort. You mentioned hearing aids, and we should be aware that hearing aids can potentially make the situation worse, which may affect the motivation of individuals to comply with treatment or engage socially. This should prompt us to think more widely about how we support older adults, i.e., beyond the simple provision of hearing aids. The increase in listening effort due to age-related changes suggests that we should educate patients and their communication partners, for example, to consider modifications to the environment (e.g., less noise) and to the behavior of others (e.g., clear speech).

19. How do we put all of this together and assess the overall effects of a decline in hearing, auditory processing and cognition?

The relative importance of the hearing thresholds, auditory processing, and cognitive function for predicting individual speech perception will depend on the specific situations encountered. These will, of course, vary enormously in real life and between individuals. The results of research studies also vary because of the different types of auditory, cognitive, and listening tests used.

As we age, there is a decline in monaural TFS sensitivity, in binaural processing, and in the cognitive domains that support speech understanding. However, these factors do not seem to be directly related to each other once the effect of age is removed (Ellis & Ronnberg, 2022), so they might be seen as separate factors that each undermine listening ability. For individuals with normal hearing, TFS sensitivity seems to be the most important factor in predicting speech intelligibility in quiet, relative to ENV sensitivity and fluid cognitive abilities, but cognitive factors become stronger predictors of performance in noise (Fullgrabe et al., 2014). Of course, introducing peripheral hearing loss into the equation further complicates things. The ability to make use of TFS declines with both increasing age and increasing degree of hearing loss, whereas binaural processing of ENV declines with age but has only a weak association with the degree of hearing loss (Moore, 2021). Overall, the combination of age-related decline in auditory processing and cognition with hearing loss makes us increasingly dependent on ENV cues.

20. This might all be interesting stuff, but I see I just used up my 20th question, and we haven’t even talked about how it actually affects what we do with hearing aids?

Yeah, there’s a lot to talk about. How about we meet again next month and focus our discussion on how hearing aids, and the programming of hearing aids, fit into all of this?

Before we finish up, however, I will say that it’s important to understand that hearing aids can resolve only some of the issues caused by a reduction in peripheral hearing. In particular, they address the loss in hearing sensitivity but cannot restore the loss of frequency tuning. Directionality can enhance the signal-to-noise ratio, and noise reduction can reduce the amount of listening effort. Beyond that, hearing aids may do little to directly improve the auditory processing or cognitive processes that support speech perception, yet they have considerable potential to introduce distortion that could significantly undermine speech perception and increase listening effort in some older adults.

For now, we should all be aware that a combined decline in hearing, auditory processing, and cognition related to normal aging can make older adults some of the most complex patients we see in an audiology clinic and that we need to think carefully about all of the hearing aid parameters we set in these cases.

See you next month!

References

Dillon, H., Day, J., Bant, S., & Munro, K. J. (2020). Adoption, use and non-use of hearing aids: a robust estimate based on Welsh national survey statistics. Int J Audiol, 59(8), 567-573. doi:10.1080/14992027.2020.1773550.

Dryden, A., Allen, H. A., Henshaw, H., & Heinrich, A. (2017). The Association Between Cognitive Performance and Speech-in-Noise Perception for Adult Listeners: A Systematic Literature Review and Meta-Analysis. Trends Hear, 21, 23-31. doi:10.1177/2331216517744675.

Ellis, R. J., & Ronnberg, J. (2022). Temporal fine structure: associations with cognition and speech-in-noise recognition in adults with normal hearing or hearing impairment. Int J Audiol, 61(9), 778-786. doi:10.1080/14992027.2021.1948119.

Fisher, G. G., Chacon, M., & Chaffee, D. S. (2019). Chapter 2 - Theories of Cognitive Aging and Work. In B. B. Baltes, C. W. Rudolph, & H. Zacher (Eds.), Work Across the Lifespan (pp. 17-45). Academic Press.

Fullgrabe, C., Moore, B. C. J., & Stone, M. A. (2014). Age-group differences in speech identification despite matched audiometrically normal hearing: contributions from auditory temporal processing and cognition. Front Aging Neurosci, 6, 347. doi:10.3389/fnagi.2014.00347.

Fullgrabe, C., & Rosen, S. (2016). Investigating the Role of Working Memory in Speech-in-noise Identification for Listeners with Normal Hearing. Adv Exp Med Biol, 894, 29-36. doi:10.1007/978-3-319-25474-6_4.

Gordon-Salant, S., Yeni-Komshian, G., & Fitzgibbons, P. (2008). The role of temporal cues in word identification by younger and older adults: effects of sentence context. J Acoust Soc Am, 124(5), 3249-3260. doi:10.1121/1.2982409.

Moore, B. C. J. (2021). Effects of hearing loss and age on the binaural processing of temporal envelope and temporal fine structure information. Hear Res, 402, 107991. doi:10.1016/j.heares.2020.107991.

Pichora-Fuller, M. K., Kramer, S. E., Eckert, M. A., Edwards, B., Hornsby, B. W., Humes, L. E., ... Wingfield, A. (2016). Hearing Impairment and Cognitive Energy: The Framework for Understanding Effortful Listening (FUEL). Ear Hear, 37 Suppl 1, 5S-27S. doi:10.1097/AUD.0000000000000312.

Rosen, S. (1992). Temporal information in speech: acoustic, auditory and linguistic aspects. Philos Trans R Soc Lond B Biol Sci, 336(1278), 367-373. doi:10.1098/rstb.1992.0070.

Shannon, R. V., Zeng, F. G., Kamath, V., Wygonski, J., & Ekelid, M. (1995). Speech recognition with primarily temporal cues. Science, 270(5234), 303-304. doi:10.1126/science.270.5234.303.

Windle, R. (2022). Trends in COSI responses associated with age and degree of hearing loss. Int J Audiol, 61(5), 416-427. doi:10.1080/14992027.2021.1937347.

Windle, R., Dillon, H., & Heinrich, A. (2023). A review of auditory processing and cognitive change during normal aging, and the implications for setting hearing aids for older adults. Frontiers in Neurology, 14. doi:10.3389/fneur.2023.1122420.

Citation

Windle, R. (2024). 20Q: Changes to auditory processing and cognition during normal aging – should it affect hearing aid programming? part 1 – changes associated with normal aging. AudiologyOnline, Article 28791. Available at www.audiologyonline.com