Editor’s Note: This text course is an edited transcript of a live webinar.

Learning Objectives

After this course, learners will be able to:

- Describe basic directionality techniques including improving sound quality and automatic switching.

- Describe the benefits of ReSound's Natural Directionality II (asymmetric directional) in terms of directional benefit and environmental awareness.

- Describe the benefits of ReSound's Binaural Directionality II with Spatial Sense and list the listening environments where it is beneficial.

Directional Foundations

Dr. John Nelson: In today's course, I will discuss how ReSound implements directional processing in our hearing aids. We know that directional hearing aids provide a significant benefit in laboratory settings. When conducting studies, we typically place a listener in a sound booth, in a controlled listening situation. An example of a controlled listening situation is one where a speech signal comes from the front of the listener and noise comes from the back. This setup does not represent most real-world situations. However, in laboratory studies we must control the situation so that we know what we are looking at and can measure and evaluate the data. Once we have our results, we can go back and analyze what did or did not produce a directional benefit. Additionally, in a laboratory, the speech and noise may be spatially separated (i.e., they are not coming from the same direction). Spatial separation allows directional hearing aids to work better, because they can focus on sounds from one direction and reduce sounds from others, providing better audibility and better understanding.

Individuals need a good signal-to-noise ratio (SNR) to be able to hear in their listening environments. People with hearing loss require an even better SNR to achieve the same understanding as people with normal hearing. When people with hearing loss say, "I can hear what's going on, but I have more difficulty understanding in background noise than I used to," the problem is not just a loss of the ability to hear certain frequencies. Those sounds are also more distorted, and background noise makes them even more difficult to understand.

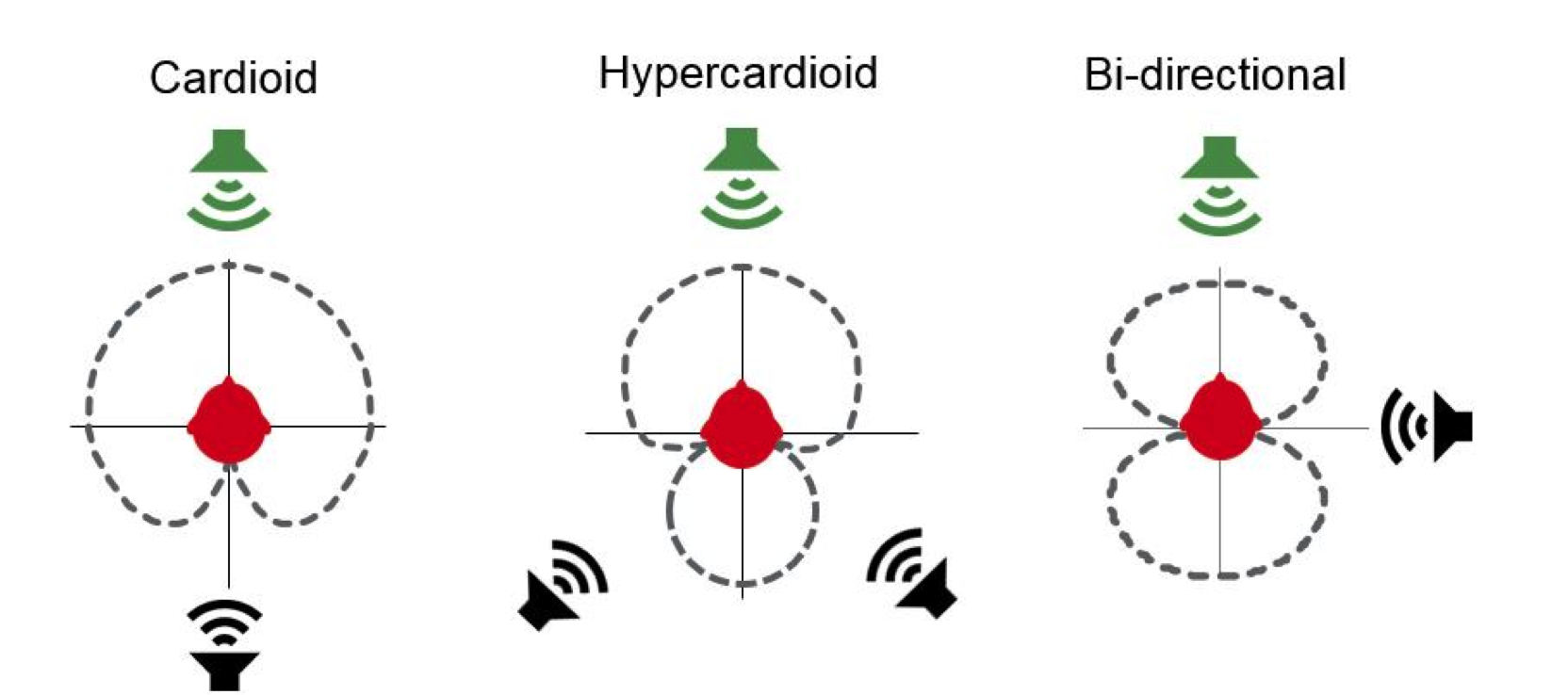

The polar characteristic of a directional hearing aid depends on the spacing between its microphones and on the phase (time delay) relationship between them. With an analog hearing aid, the polar characteristic was fixed by the physical setup of the device. With digital signal processing, we can adjust the phase relationship between the microphones in the processor, and thereby change the polar characteristic. To illustrate, in Figure 1, the image on the left is the polar characteristic of a cardioid microphone. A cardioid pattern provides maximum attenuation of sounds coming from directly behind the listener. The middle graph shows a hypercardioid characteristic, which most strongly attenuates sounds coming from the listener’s back right and back left. A bi-directional characteristic most strongly attenuates sounds coming from immediately to the right or immediately to the left of the listener. Years ago, we could program different polar characteristics into the hearing aid, and the individual would change programs depending on the listening situation. For example, if the listener wanted to hear the person in front of them and cut out a bothersome voice or sound behind them, they would choose cardioid. If the bothersome sound was coming from the sides and the back, they would choose hypercardioid. Or, if they were trying to understand the person across the table at a dinner party and ignore the person to their right, they would choose bi-directional.

Figure 1. Examples of polar characteristics.
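The three patterns in Figure 1 can be approximated with the textbook first-order directional microphone model, in which sensitivity at angle θ is α + (1 − α)·cos θ. The Python sketch below is an idealized free-field illustration, not ReSound's implementation: it ignores head and pinna effects, and the α values, the 12 mm spacing, and the function names are assumptions chosen for illustration. It shows where each pattern places its null and the internal delay a delay-and-subtract processor would apply to produce it.

```python
import math

# Assumed alpha values for the three first-order patterns named in Figure 1.
PATTERN_ALPHA = {
    "cardioid": 0.5,
    "hypercardioid": 0.25,
    "bidirectional": 0.0,
}


def sensitivity(alpha: float, theta_deg: float) -> float:
    """Relative sensitivity of a first-order directional microphone.

    theta_deg = 0 is straight ahead; 180 is directly behind.
    """
    return alpha + (1.0 - alpha) * math.cos(math.radians(theta_deg))


def null_angle_deg(alpha: float) -> float:
    """Angle (0 to 180 degrees from the front) where sensitivity is zero.

    The pattern is symmetric, so the same null also appears on the other side.
    Valid for 0 <= alpha <= 0.5; larger alpha values have no null.
    """
    if not 0.0 <= alpha <= 0.5:
        raise ValueError("pattern has no null for this alpha")
    return math.degrees(math.acos(-alpha / (1.0 - alpha)))


def internal_delay_s(alpha: float, spacing_m: float, c: float = 343.0) -> float:
    """Electronic delay that yields this alpha for a delay-and-subtract
    microphone pair with port spacing spacing_m (alpha = tau / (tau + d/c))."""
    external_delay = spacing_m / c
    return alpha / (1.0 - alpha) * external_delay


for name, alpha in PATTERN_ALPHA.items():
    print(f"{name:14s} null at {null_angle_deg(alpha):6.1f} deg, "
          f"internal delay {internal_delay_s(alpha, 0.012) * 1e6:5.1f} us")
```

In this model, changing only the internal delay moves the null from directly behind (cardioid) to the back diagonals (hypercardioid) to the sides (bi-directional), which is why digital processing can switch between these patterns without changing the microphones.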

Think about how difficult and unrealistic it was for an end user to make some of these decisions. First of all, an end user would need to decide, "Should I be in omnidirectional or should I be in directional?" Next, they’d have to decide, "Should I be in hypercardioid, cardioid, or bi-directional?" Most end users want to put on the hearing aids and simply have them work properly, so that they can participate in the listening situation, without worrying about what type of program setting to select.

Sound Quality and Directionality

Directional Mode Low-Frequency Roll Off

There is one other factor that we need to consider in order to make hearing aids in directional mode acceptable. People often complained that they couldn't hear as well in directional mode, or that they had more background noise in directional mode. When you go from omnidirectional mode to directional mode, you get a low-frequency roll-off in the amplification. This roll-off occurs because low-frequency sounds have wavelengths much longer than the distance between the two hearing aid microphones, so both microphones pick up nearly the same signal, and the directional processing, which subtracts one microphone signal from the other, cancels much of the low-frequency energy. Therefore, in the high frequencies you would have improved understanding of sounds from the front compared to the back, but in the low frequencies you would have reduced audibility because of this physical limitation. Some people liked the fact that sounds weren’t as loud in directional mode because of the low-frequency roll-off. Conversely, some sounds were no longer audible.
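To make the roll-off concrete, here is a minimal Python sketch of an idealized cardioid delay-and-subtract microphone pair, measured on axis and compared with a single omnidirectional microphone. The spacings (6 mm and 12 mm) are illustrative assumptions, not dimensions of any particular hearing aid, and the model ignores the head, the ear, and microphone mismatch.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s at room temperature


def directional_rolloff_db(freq_hz: float, spacing_m: float, alpha: float = 0.5) -> float:
    """On-axis output of a delay-and-subtract microphone pair, in dB relative
    to a single omnidirectional microphone (idealized free-field model).

    alpha = 0.5 gives a cardioid: the internal delay equals the travel time
    between the two microphone ports.
    """
    external_delay = spacing_m / SPEED_OF_SOUND
    internal_delay = alpha / (1.0 - alpha) * external_delay
    total_delay = internal_delay + external_delay
    # |1 - exp(-j * 2*pi*f * T)| = 2 * |sin(pi * f * T)| for a total delay T
    magnitude = 2.0 * abs(math.sin(math.pi * freq_hz * total_delay))
    return 20.0 * math.log10(magnitude)


for spacing_mm in (6, 12):
    row = ", ".join(
        f"{f} Hz: {directional_rolloff_db(f, spacing_mm / 1000):6.1f} dB"
        for f in (250, 500, 1000, 2000)
    )
    print(f"{spacing_mm} mm spacing -> {row}")
```

In this model the output drops by about 6 dB for every halving of frequency, and halving the microphone spacing lowers the low-frequency output by roughly another 6 dB, which is consistent with the observation that devices with wider microphone spacing suffer less low-frequency roll-off.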

Directionality: Low-frequency Equalization

To compensate for this low-frequency roll-off, hearing aid manufacturers increased the gain in the low frequencies to restore the audibility of low-frequency sounds. This is especially important with hearing aids that automatically switch between omnidirectional and directional modes. With automatic switching, people would hear a change in loudness when the hearing aid moved between the two modes, because the omnidirectional mode was louder than the directional mode. Manufacturers therefore included a low-frequency bass boost in their automatic switching mechanisms to account for the roll-off. The bass boost provided more low-frequency gain in directional mode so that the two modes (omni and directional) had equal loudness. The drawback is that when you increase gain for audibility, you also increase the gain for unwanted sounds such as background noise. With the extra low-frequency gain from the bass boost, circuit noise would be audible in directional mode in quiet situations, and wind noise would be even more disturbing. More low-frequency gain would also create more occlusion, especially for individuals who have really good low-frequency hearing. They would complain that their voice sounded boomy. For professionals, fine-tuning these hearing aids became difficult, because it was hard to determine what was causing a given complaint.
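The cost of the bass boost can be estimated with a short calculation. The Python sketch below assumes an idealized cardioid microphone pair with an illustrative 12 mm spacing, and assumes each microphone has independent self-noise of equal level; both are assumptions, not ReSound data. Equalizing the directional output back to omni loudness requires a gain equal to the roll-off, and that gain is applied to the microphone noise as well, which is already about 3 dB higher after subtracting two uncorrelated noise signals.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s at room temperature
SPACING_M = 0.012       # illustrative microphone spacing (assumption)


def cardioid_rolloff_db(freq_hz: float, spacing_m: float = SPACING_M) -> float:
    """On-axis output of an idealized cardioid delay-and-subtract pair,
    in dB relative to one omnidirectional microphone."""
    total_delay = 2.0 * spacing_m / SPEED_OF_SOUND  # internal delay = external delay
    return 20.0 * math.log10(2.0 * abs(math.sin(math.pi * freq_hz * total_delay)))


def bass_boost_db(freq_hz: float) -> float:
    """Gain needed in directional mode to match omni loudness at freq_hz."""
    return -cardioid_rolloff_db(freq_hz)


def mic_noise_increase_db(freq_hz: float) -> float:
    """Microphone self-noise in equalized directional mode relative to omni mode.

    Subtracting two independent, equal-level noise signals doubles the noise
    power (+3 dB); the bass boost then amplifies that noise as well.
    """
    return 10.0 * math.log10(2.0) + bass_boost_db(freq_hz)


for f in (250, 500, 1000, 2000):
    print(f"{f:5d} Hz: boost {bass_boost_db(f):5.1f} dB, "
          f"mic noise +{mic_noise_increase_db(f):5.1f} dB vs omni")
```

Under these assumptions, at 250 Hz the microphone noise in the equalized directional mode ends up more than 20 dB above its level in omni mode, which helps explain why circuit noise became audible in quiet situations.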

Head-Related Directional Characteristics

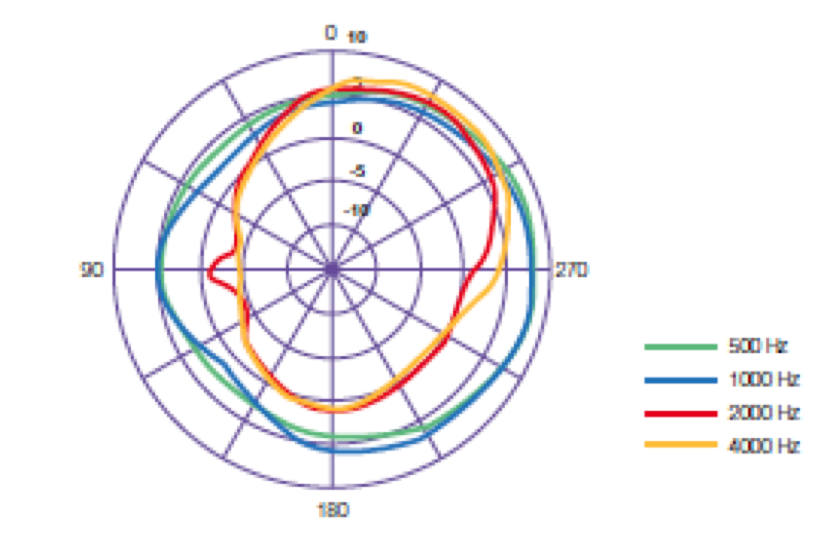

ReSound stepped back to analyze how sound travels around the head, to find a way to avoid the problems associated with low-frequency bass boost. In Figure 2, imagine that a person’s head is in the center of the graph, facing forward. We moved a loudspeaker around the person's head and measured the intensity of the sound at the eardrum of the right ear. As we moved a low-frequency sound around the head (blue and green lines), there wasn't much change in energy, no matter where the loudspeaker was placed. That's because low-frequency sounds travel fairly well around objects without losing intensity. But the high-frequency sounds (red and orange lines) had more energy when delivered from the side being recorded and less energy when delivered from the opposite side of the head. Therefore, because of the head shadow effect, the natural open ear has a directional characteristic in the high frequencies, even without a hearing aid.

Figure 2. Head-related directional characteristics.
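A rough way to see why the head shadow depends on frequency is to compare the wavelength of a sound with the size of the head. The Python sketch below uses a 17.5 cm head diameter, a common spherical-head approximation used here as an assumption. As a rule of thumb, sounds whose wavelength is much longer than the head bend around it, while sounds whose wavelength is comparable to or shorter than the head are shadowed.

```python
SPEED_OF_SOUND = 343.0   # m/s at room temperature
HEAD_DIAMETER_M = 0.175  # assumed spherical-head approximation


def wavelength_m(freq_hz: float) -> float:
    """Wavelength of a sound wave in air."""
    return SPEED_OF_SOUND / freq_hz


for freq in (250, 500, 1000, 2000, 4000, 8000):
    ratio = wavelength_m(freq) / HEAD_DIAMETER_M
    print(f"{freq:5d} Hz: wavelength {wavelength_m(freq):5.2f} m, "
          f"{ratio:4.1f} x head diameter")
```

At 250 Hz the wavelength is several times the head diameter, while at 4000 Hz it is about half of it, which matches the pattern in Figure 2: little change with loudspeaker position in the low frequencies, and a strong head shadow in the high frequencies.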

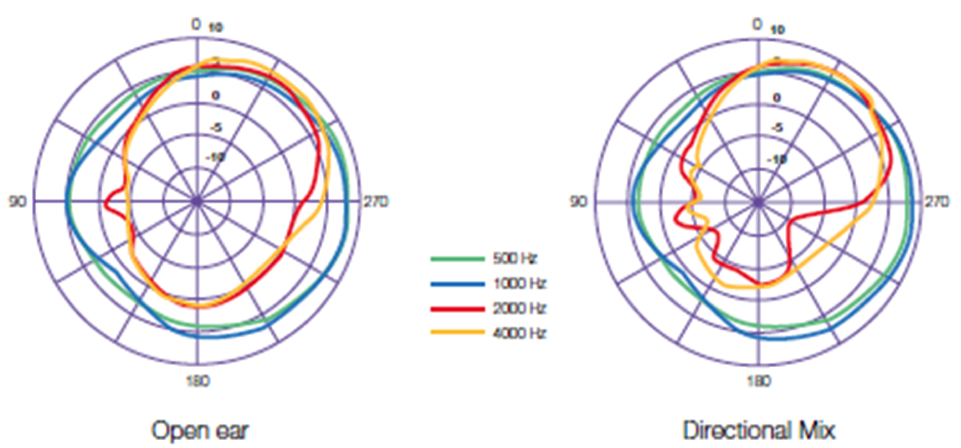

Directional Mix Processing

We wanted to eliminate the problems of low-frequency bass boost in directional hearing aids. We thought we could get better sound quality if we implemented in the hearing aid signal processing something that already occurs in the natural ear. Figure 3 compares the results of the previous open-ear test with a test of a person wearing a ReSound hearing instrument with Directional Mix Processing. The result is that the hearing aid delivers omnidirectional characteristics in the low frequencies and directional characteristics in the high frequencies. The directional characteristics in the high frequencies improve the ability to hear in background noise, as long as the speech is from the front and the noise is from the sides and the back. The low-frequency sounds are delivered in an omnidirectional pattern. In this way, we have audibility in the low-frequency regions without needing the low-frequency bass boost, and without the artifacts and sound quality problems that go along with it.

Figure 3. Directional Mix Processing.

A number of hearing aid manufacturers do this in their directional processing and call it different things (e.g., pinnae restoration or split-band directionality). ReSound added the ability to change the cut-off frequency between omnidirectional and directional processing. In the Aventa fitting software, a Directional Mix setting of “Very Low” means there is a small amount of directional processing relative to omnidirectional processing: the omnidirectional processing covers the low frequencies and some of the mid frequencies. If we change the Directional Mix setting to “High”, there is a lot of directional processing and less omnidirectional processing. With this setting, only the very low frequencies are processed in omni for sound quality benefits, while much more of the spectrum receives directional processing for improved SNR in difficult listening situations.
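The band-split idea can be sketched as a per-band choice between omnidirectional and directional processing. In the Python sketch below, the band centers and the crossover frequency assigned to each Directional Mix setting are hypothetical values invented for illustration; the course does not state the actual cut-off frequencies Aventa uses, and only the two settings named above are modeled.

```python
# Hypothetical crossover frequencies (Hz) for two Directional Mix settings.
# These numbers are invented for illustration; they are NOT Aventa's values.
HYPOTHETICAL_CROSSOVER_HZ = {
    "Very Low": 2000.0,  # omni covers the lows and some of the mids
    "High": 500.0,       # omni only in the very low frequencies
}

# Illustrative filter-bank band centers (Hz), also an assumption.
BAND_CENTERS_HZ = (250, 500, 1000, 2000, 4000, 6000)


def band_modes(setting: str) -> dict:
    """Map each band center to 'omni' (below the crossover) or 'directional'."""
    crossover = HYPOTHETICAL_CROSSOVER_HZ[setting]
    return {f: ("omni" if f < crossover else "directional") for f in BAND_CENTERS_HZ}


def directional_share(setting: str) -> float:
    """Fraction of bands that receive directional processing."""
    modes = band_modes(setting)
    return sum(mode == "directional" for mode in modes.values()) / len(modes)


for setting in HYPOTHETICAL_CROSSOVER_HZ:
    print(setting, band_modes(setting), f"{directional_share(setting):.0%} directional")
```

A real device would blend the two signals with crossover filters rather than switching band by band, but the hard split keeps the relationship visible: raising the Directional Mix lowers the crossover and hands more of the spectrum to directional processing.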

There are some basic rules regarding setting the Directional Mix that are integrated into the Aventa fitting software. The fitting software will prescribe an individual setting for each patient based on two things: the hearing aid selected and the audiogram.

The hearing aid selected tells Aventa how far apart the microphones are on the device. On a Behind-the-Ear (BTE) hearing instrument, the microphones are physically further apart than on an In-the-Ear (ITE) hearing instrument. Wider microphone spacing produces less low-frequency roll-off. If the hearing aid selected is a BTE model, Aventa will prescribe a higher Directional Mix and still provide good sound quality, because there is less low-frequency roll-off.

The audiogram indicates whether or not there is good low-frequency hearing. If the person has good low-frequency hearing, they may experience more problems with occlusion, so for sound quality we don't want to use as much low-frequency bass boost. Typically, if the low-frequency hearing is good, we will use a larger vent in the hearing aid, or an open dome. This allows more low-frequency sound to escape from the ear, and also allows unprocessed sound from the environment to reach the ear through the vent. To ensure good sound quality when low-frequency sound is coming in through the vent, we want the hearing aid output to be similar to that environmental sound.

Remember, in a natural situation, low-frequency sounds are omnidirectional. If we process the hearing aid output in the low frequencies as omnidirectional and combine it with the sound coming in through the vent, the result will have better sound quality when it reaches the eardrum. The Directional Mix setting is selected automatically in Aventa and prescribed for each individual. You only need to adjust it if there is a specific situation for which the person might want a different setting. If you use one of the top-tier hearing aids in our portfolio and someone desires more directionality in a specific situation, you could set up a separate program with a Directional Mix of “High”.

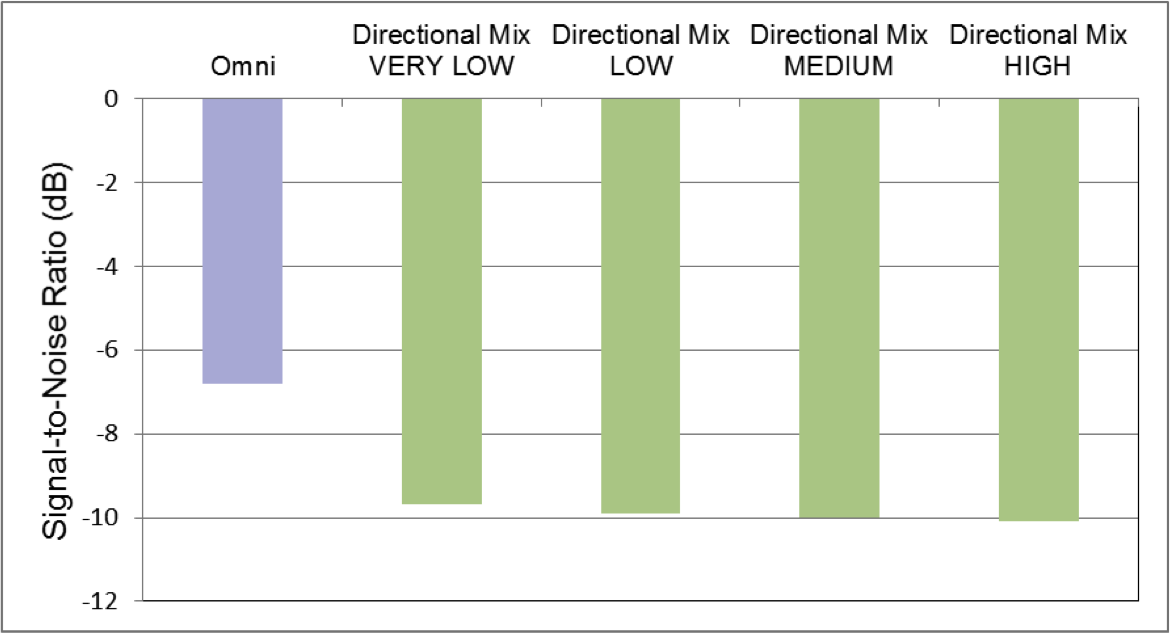

We conducted research to show how Directional Mix Processing works and to demonstrate its benefit. In one study, we compared the ability to hear in background noise. We performed this study as an SNR test in different settings (Figure 4). The first purple bar represents the SNR required for 50% performance when the hearing aids are in omnidirectional mode across all frequencies. We specifically used a test group of people with open hearing aid fittings with very large vents. The benefit at different Directional Mix settings is very similar, whether the Directional Mix is very low or high. With an open-fitting device, the improvement does not change with the Directional Mix setting.

Figure 4. Directional Mix Processing benefit for open fittings.

To reiterate what I stated earlier, individuals with very good low-frequency hearing and a very open vent will have better sound quality when the hearing aid's low-frequency output is similar to the environmental sound coming in through the vent. For this group, Aventa will automatically set a very low Directional Mix to maintain good sound quality, and this will not reduce the benefit of the directional setting.

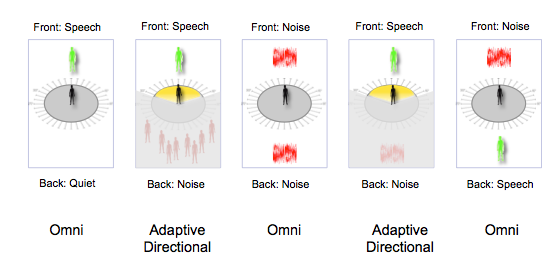

Figure 5 shows data obtained from individuals with traditional venting. These are people with moderate to severe hearing loss (above 40 dB HL) who require smaller vents. For this population, notice that with an omnidirectional setting, the SNR required for 50% performance is about 4.8 dB. As you go from a very low Directional Mix setting to a high Directional Mix setting (more directionality in the mid frequencies), you see an improvement in understanding.

Figure 5. Directional Mix benefit for traditional venting.

For people with traditional venting and poorer audiograms, we want a Directional Mix setting of “High” to get the best understanding in background noise. Because these individuals don't complain about occlusion, and because their smaller vents let little environmental sound in, a Directional Mix setting of “High” gives them the best understanding in background noise without negatively affecting sound quality.

This adjustable Directional Mix is unique to ReSound hearing aids, and we apply it to all of our directional settings. Every directional setting in ReSound hearing aids uses band-split Directional Mix signal processing, to provide the best understanding in background noise along with the best sound quality for all individuals.
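The two groups described above can be summarized as a toy decision rule. This Python sketch is not Aventa's prescription logic, which the course does not spell out: the function name is hypothetical, the 25 dB HL cut-off for "very good" low-frequency hearing is an assumed conventional value, and the 40 dB HL figure is borrowed from the description of the traditional-venting study group. Cases outside those two groups return None, meaning the fitting software's own prescription should be used.

```python
from typing import Optional

# Assumed cut-off for "very good" low-frequency hearing (a conventional
# normal-hearing limit; not a value stated in the course).
GOOD_LF_HEARING_MAX_DB_HL = 25
# Hearing loss level quoted for the traditional-venting study group.
TRADITIONAL_VENT_LOSS_MIN_DB_HL = 40


def suggest_directional_mix(low_freq_threshold_db_hl: float, vent: str) -> Optional[str]:
    """Hypothetical summary of the two cases described in the text.

    vent is "open" (large vent or open dome) or "traditional" (smaller vent).
    Returns None when neither case applies, i.e. defer to the fitting software.
    """
    if vent == "open" and low_freq_threshold_db_hl <= GOOD_LF_HEARING_MAX_DB_HL:
        # Benefit is similar across settings, so favor sound quality.
        return "Very Low"
    if vent == "traditional" and low_freq_threshold_db_hl > TRADITIONAL_VENT_LOSS_MIN_DB_HL:
        # Little vent sound to blend with and no occlusion complaints: favor SNR.
        return "High"
    return None


print(suggest_directional_mix(15, "open"))         # open fit, good low-frequency hearing
print(suggest_directional_mix(55, "traditional"))  # traditional vent, poorer hearing
```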

Automatic Directional Switching

Manufacturers began implementing automatic switching directional hearing aids in response to users who experienced difficulty manually changing the hearing aid settings.

In a test booth, we see a large difference between omnidirectional and directional performance in background noise; in the real world, we see a smaller difference. Part of the reason for the smaller real-world difference is that individuals don't always switch their hearing aids to the correct mode. We want to choose hearing aid settings that give these individuals the best benefit in different situations.

If directional hearing aids are in the wrong mode, the result can be a directional deficit. The research I mentioned earlier showed a directional benefit and improved signal-to-noise ratio, as long as the speech is from the front and the noise is from the back. What if the person actually wants to hear what's going on behind them, rather than what's in front of them? For example, a student in a lecture hall may want to hear the conversation among classmates behind them, rather than the sound from the front, which might be the teacher. In that situation, directional processing gives them a poorer signal-to-noise ratio and a deficit in understanding what they want to hear. In fact, they might not even be able to hear what's going on behind them well enough to decide whether to turn their head and listen.
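The directional deficit can be quantified with the textbook first-order directional microphone model, in which sensitivity at angle θ is α + (1 − α)·cos θ. This Python sketch assumes a hypercardioid pattern (α = 0.25), a single talker and a single noise source in a free field, and no head effects; the function names are illustrative. It computes how much a directional microphone changes the SNR relative to an omnidirectional one.

```python
import math


def sensitivity(alpha: float, theta_deg: float) -> float:
    """First-order directional sensitivity; 0 deg is front, 180 deg is behind."""
    return alpha + (1.0 - alpha) * math.cos(math.radians(theta_deg))


def snr_change_db(alpha: float, target_deg: float, noise_deg: float) -> float:
    """SNR change relative to an omnidirectional microphone (alpha = 1.0)
    for one target source and one noise source in an idealized free field."""
    target_gain = abs(sensitivity(alpha, target_deg))
    noise_gain = abs(sensitivity(alpha, noise_deg))
    return 20.0 * math.log10(target_gain / noise_gain)


HYPERCARDIOID = 0.25  # assumed pattern for this example

print(f"Talker front, noise behind: {snr_change_db(HYPERCARDIOID, 0, 180):+.1f} dB")
print(f"Talker behind, noise front: {snr_change_db(HYPERCARDIOID, 180, 0):+.1f} dB")
```

With the talker in front and the noise behind, the model gives about +6 dB; swap the positions, as in the lecture-hall example, and the same setting costs about 6 dB. A cardioid would place an exact null on a talker directly behind, so the example uses a hypercardioid to keep the logarithm finite.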

Basic Directional Philosophies

Different directional philosophies focus on different things. For instance, they might focus on improving the SNR (typically front-to-back listening improvement). They might focus on automatic switching, assuming that you want to hear sounds from the front and not the back. They might switch modes based on the location of the loudest speech signal. However, the loudest speech signal might be the one you are trying to ignore or suppress, because you are having a quiet conversation with the person in front of you. We can't always assume that the person wants to listen to what is in front of them, or to the loudest speech signal. Hearing aids aren't perfect, but we need to understand how they work to make sure that the person is in the right mode for the right listening situation.

Hearing aids analyze the acoustic environment, decide which mode is best, and choose some features based on the user’s situation. They look at whether there is sound from the front or from the back, whether the loudest speech signal is from the front or from the right, and so on. For example, if someone is watching television and the loudest speech signal is on the right, some hearing aids will turn down the gain in the left hearing aid in an attempt to reduce the background noise on that side. Then the person can't hear the television on the left, which may have been what they actually wanted to hear.

ReSound SoftSwitching

The automatic switching system that ReSound developed a number of years ago, now found in our budget price point product, ReSound Enya, is called ReSound SoftSwitching. ReSound SoftSwitching took into account more than whether there were sounds from the front or from the back. This technology allowed us to analyze the type of sound coming from the front compared with the type of sound coming from the back.

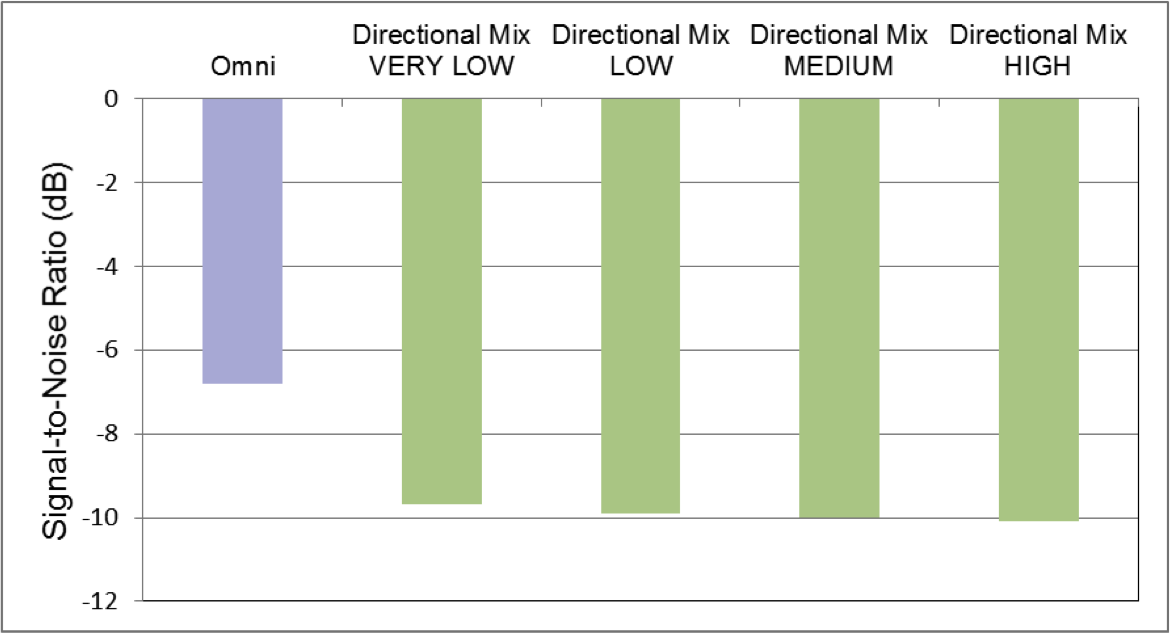

Let's look at these five different listening situations (Figure 6).

Figure 6. SoftSwitching: Different listening situations.

If you have speech in the front and quiet in the back, the hearing aid switches to omnidirectional. There's no need to cut out sounds from the sides and the back if there's no noise from behind to interfere with hearing what's going on in front. In this condition, the person has full awareness of sound from all directions.

If there's noise going on behind the individual and speech coming from the front, the hearing aid should go into adaptive directional mode to be able to improve the SNR for speech coming from the front. Now, this makes the assumption that the person is looking at what they want to hear. For an automatic switching mechanism, sometimes these assumptions have to be made to determine the correct mode.

If there's noise from the front and from the back, and if the hearing aid can't detect speech in the front, the ReSound hearing aid with SoftSwitching assumes there's no reason to cut out sounds from the sides and the back. Also, there's no need to focus on sounds in the front, because there's no speech signal there. That's what directional microphones are supposed to do -- improve understanding of speech from a certain direction. An example of this would be if you are walking down a busy street, where typically you would not have someone talking to you from the front. You would want to have omnidirectional in this situation because it would allow you to hear all around you. For example, if a car honks its horn or an emergency vehicle drives by with its siren on, you would want your attention to be drawn to those noises.

The next listening situation would be speech from the front with some sort of noise in the back that is not speech-like. In that case, the hearing aid SoftSwitching would go into an adaptive directional mode, so that you could hear better in the direction that you are looking.

The fifth listening situation is unique. If there's noise from the front, no specific talker can be detected in the front, and there is a speech signal from the back, the hearing aids remain in omnidirectional mode. There's no reason to improve the signal-to-noise ratio from behind, because the listener is not looking at that talker. The hearing aid stays in omni mode so the listener can hear in all directions and decide whether or not to look at the person speaking. For example, the listener might be at an orchestra concert while someone behind them is talking. The listener doesn’t necessarily want to hear the person behind them, so the hearing aid shouldn't switch to directional mode. Omnidirectional mode lets them hear what's going on in a full 360-degree range, similar to someone who is not using hearing aids.
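The five situations can be summarized as a small decision rule. The Python sketch below is a simplification for illustration, not ReSound's classifier: the enum and function names are hypothetical, and treating the rear noise in the second situation as speech-like (in contrast with the non-speech noise in the fourth) is an assumption, since Figure 6 is not reproduced here.

```python
from enum import Enum


class RearSound(Enum):
    QUIET = "quiet"
    SPEECH_LIKE = "speech-like"      # e.g., talkers or babble behind the listener
    NON_SPEECH = "non-speech noise"  # e.g., fans, traffic


def softswitch_mode(speech_in_front: bool, rear: RearSound) -> str:
    """Simplified rule: go directional only when speech is detected in front
    AND something other than quiet is arriving from behind."""
    if speech_in_front and rear is not RearSound.QUIET:
        return "adaptive directional"
    return "omnidirectional"


# The five situations described above, as interpreted in this sketch.
situations = [
    ("speech front, quiet rear", True, RearSound.QUIET),
    ("speech front, noise rear", True, RearSound.SPEECH_LIKE),
    ("noise front, noise rear", False, RearSound.NON_SPEECH),
    ("speech front, non-speech noise rear", True, RearSound.NON_SPEECH),
    ("noise front, speech rear", False, RearSound.SPEECH_LIKE),
]
for label, front, rear in situations:
    print(f"{label:38s} -> {softswitch_mode(front, rear)}")
```

Written this way, the key assumption is easy to see: directionality is triggered only by speech in front, so a talker behind the listener never pulls the hearing aids into directional mode.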

ReSound SoftSwitching was unique when it was introduced. We have since learned some things that have caused us to go in a different direction. SoftSwitching, and any automatic switching mechanism, makes an assumption about what the person wants to hear. When we make assumptions, there are going to be times that the assumptions are not correct. We do not want any ReSound end users to be taken away from their listening environment, or to be forced to listen to a specific signal or from a given direction. Individuals with hearing loss who wear ReSound hearing aids should be able to decide for themselves what's important to listen to, and not have the hearing aids determine it for them.

ReSound’s Directional Philosophy

Natural Directionality

A number of years ago, we developed a signal processing strategy called Natural Directionality. It is now in its second generation, Natural Directionality II, which includes our band-split directional processing. Natural Directionality was built on our directional philosophy, which focuses on providing high sound quality and natural sound perception. We also recognize that the hearing instrument, no matter how smart it is, cannot tap into the person's brain and find out what the person thinks is important to hear. We want to apply technology that takes advantage of the brain’s ability to integrate and segregate sound sources for a natural listening experience. We look at the individual as a unique person with the intelligence and cognitive abilities to switch between and make use of different inputs.

With Natural Directionality, we built on an asymmetric listening strategy, in which one hearing aid is always in omnidirectional mode and the other is always in directional mode. A frequently asked question is, "How do you implement this in a monaural fitting?" You cannot implement it with a monaural fitting. It requires a hearing aid on both the right and the left ears. By definition, one hearing aid is omnidirectional, monitoring the listening environment, and the other is directional, helping the listener better understand what they are looking at.

You could program the hearing aids with the right ear directional and the left ear omnidirectional, or vice versa. Based on the audiometric information, Aventa recommends that, in many situations, the ear with better speech understanding or better auditory thresholds be the ear with the directional hearing aid. The other ear is then the omnidirectional monitor ear. Most of the time, that means the right ear will be directional, because the opposite side of the brain, the left hemisphere, is our language processing center. We program the right hearing aid as directional to provide the best understanding from the front, while the opposite ear still monitors the listening situation. In this way, the person can attend to whatever they decide is important. Maybe they want to listen to conversation from the side or the back with their left ear, or maybe they hear their name from the side or the back with their left ear and turn their head. Then they can look at what they want to hear, with a better signal-to-noise ratio.
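The ear-selection guideline can be written as a short function. In this Python sketch, the data class, the field names, and the order of the tie-breakers (word recognition first, then pure-tone average, then a default to the right ear) are assumptions for illustration; the course says only that Aventa favors the ear with better speech understanding or better thresholds and that this is usually the right ear. The function also rejects a monaural fitting, because the asymmetric strategy needs two hearing aids.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EarData:
    word_recognition_pct: float  # speech understanding score; higher is better
    pta_db_hl: float             # pure-tone average; lower is better


def choose_directional_ear(right: Optional[EarData], left: Optional[EarData]) -> str:
    """Return 'right' or 'left' for the directional ear (hypothetical rule)."""
    if right is None or left is None:
        raise ValueError("Asymmetric directionality requires a bilateral fitting")
    if right.word_recognition_pct != left.word_recognition_pct:
        return "right" if right.word_recognition_pct > left.word_recognition_pct else "left"
    if right.pta_db_hl != left.pta_db_hl:
        return "right" if right.pta_db_hl < left.pta_db_hl else "left"
    # Default: right ear, which feeds the left-hemisphere language areas.
    return "right"


print(choose_directional_ear(EarData(92, 45), EarData(84, 40)))  # better speech score on the right
```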

A number of studies have been conducted over the past several years, including one at Walter Reed (Cord, Walden, Surr, & Dittberner, 2007). These studies showed that if both hearing aids are programmed in directional mode, you achieve a significant increase in understanding (compared with both ears omni), as long as the speech is from the front and the noise is from behind. They took it one step further and asked, "What happens if we make the right ear omni and the left ear directional, or the right ear directional and the left ear omni?" They found a slight decrease in understanding in background noise compared to both ears directional, but that decrease was not statistically significant. You see a large, statistically significant improvement in understanding in background noise when you go from both ears omni to at least one ear, if not two ears, in directional. If you compare both ears directional to one ear directional, there is no statistically significant difference in the ability to hear the speech signal. Therefore, we could take the ear that was not providing a significant additional benefit and switch it to omnidirectional mode, giving a better ability to hear from all directions and allowing the listener to choose which sound sources are important to hear.

This concept has also been used in the field of vision for a number of years. People who need correction for both distance and near vision are sometimes fitted with glasses or contacts so that one eye focuses on distance and the other eye focuses up close. It's not a new idea in terms of sensory perception, but it is a novel one in hearing aid signal processing.

ReSound Binaural Directionality

We recognize that there are some situations where an asymmetric directional setting is not ideal, although it never takes the person away from their listening situation. Our next generation of hearing aid signal processing, ReSound Binaural Directionality, uses wireless device-to-device communication. With ReSound Binaural Directionality, the right and left hearing aid processors communicate with each other and make decisions together to change the hearing aid signal processing.

We stepped back and asked, "How can we use this new technology in our directional switching mechanism?" Again, we recognized that users cannot control real-world listening situations, and hearing aids cannot always make the right assumptions about what the person wants to hear. Environments are dynamic and constantly changing, and hearing aids can't keep up, but the brain can if given the right information. After reviewing the existing literature, we found that bilateral omnidirectional is most often preferred and has the most natural sound quality, especially in quiet and single-speaker situations. Bilateral directional is preferred as long as the person the listener wants to hear is in front and the sounds they don't want to hear are behind. However, that situation does not occur very often, because people do not want to be cut out of their listening environment; they want to interact and hear everything around them from all directions.

Bilateral directional is not the correct choice if the person wants to hear sounds from the sides and the back. We needed to devise a switching mechanism that improves on our Natural Directionality philosophy by using device-to-device communication. We looked at different listening scenarios, such as a couple dining in a restaurant. A woman with hearing aids and her male companion are sitting across the table from each other. The server approaches their table from the side to tell them the specials and take their order. If both hearing aids were in omnidirectional mode, she would have no improved understanding from any direction, with all the background noise going on in the restaurant. If both were in directional mode, she would have improved understanding as long as she was looking at the man across the table, but she wouldn't be able to hear the server, because the hearing aids would be reducing sounds from her side.

Asymmetric directional mode, or Natural Directionality, would provide a significant benefit for her, but only if the ear on the server's side, in this example the right ear, were omnidirectional. If the right ear were directional, the server's voice would need to travel around her head (i.e., the head shadow effect) before it arrived at the left ear. What if we could switch the asymmetric pattern depending on the listening situation? Binaural Directionality lets the hearing aids communicate with each other. They can assess the listening environment and make appropriate changes to the processing on both sides.

In a quiet listening situation, or when a single person is speaking, we leave both hearing aids in an omnidirectional program. The omnidirectional program gives the listener full awareness of their surroundings and the most natural listening when improved understanding from the front relative to the back is not needed. As soon as background noise is detected, the hearing aids switch to an asymmetric setting, where one ear is directional and one ear is omnidirectional. The hearing aids compare their data and determine on which side of the head the loudest speech signal from the sides or the back is located. If it is on the left side of the head, the left ear is set to omnidirectional and the right ear to directional. If it is on the right side of the head, the setting flips automatically so that the right side is omnidirectional and the left is directional.

Some situations are so loud that even people with normal hearing have difficulty. In these extremely loud listening situations, where no speech can be detected from the sides and the back, both hearing aids will switch to directional for improved understanding from the front.
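The switching rules above can be sketched as a function that maps what the two hearing aids detect to a mode for each ear. This Python sketch is illustrative only: the function and parameter names are hypothetical, a tie between sides arbitrarily keeps the left ear omnidirectional, and the "extremely loud" condition is simplified to "background noise with no speech detected from the sides or back", which is how the course treats its Speech Front, Noise Rear condition.

```python
from typing import Optional, Tuple


def binaural_modes(
    background_noise: bool,
    side_rear_speech_left_db: Optional[float],
    side_rear_speech_right_db: Optional[float],
) -> Tuple[str, str]:
    """Return (left_mode, right_mode) following the rules described above.

    side_rear_speech_*_db is the level of speech detected from the sides or
    back on that side of the head, or None if no such speech is detected.
    """
    if not background_noise:
        # Quiet or a single talker: full awareness in both ears.
        return ("omni", "omni")
    left, right = side_rear_speech_left_db, side_rear_speech_right_db
    if left is None and right is None:
        # Noise with no speech from the sides or back: both ears directional.
        return ("directional", "directional")
    if right is None or (left is not None and left >= right):
        # Loudest side/rear speech is on the left: keep the left ear omni.
        return ("omni", "directional")
    # Loudest side/rear speech is on the right: keep the right ear omni.
    return ("directional", "omni")


print(binaural_modes(False, None, None))  # quiet table
print(binaural_modes(True, None, 62.0))   # server speaking on the right in a noisy restaurant
print(binaural_modes(True, None, None))   # very loud, no speech from the sides or back
```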

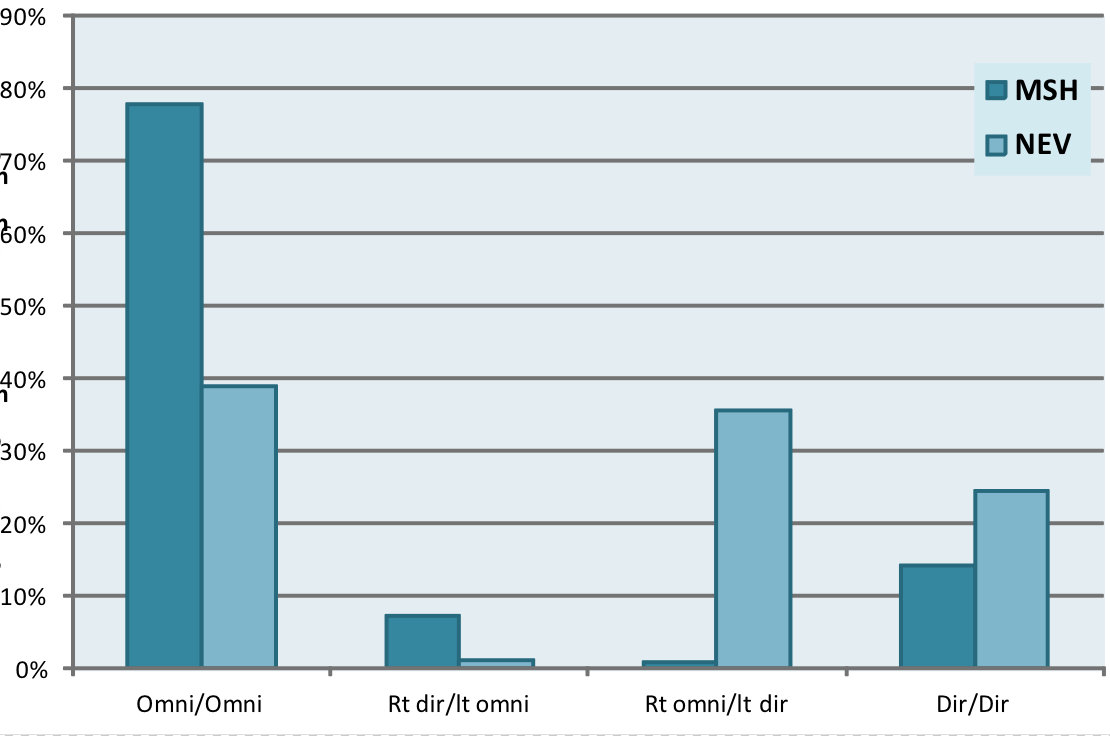

Binaural Directionality – Switching. Our data showed that about 78% of the time the users were in omnidirectional mode in both ears, 14% of the time they were in some form of asymmetric directionality, and 8% of the time they were in bilateral directional mode. Those numbers were similar to a study conducted at Walter Reed (Cord et al., 2002), in which people preferred omnidirectional about 77% of the time and directional about 22% of the time.
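A quick tally shows why these figures line up with the Walter Reed data: the two modes in which at least one ear is directional add up to the same share of time that listeners in that study preferred directional. The Python sketch below simply encodes the percentages quoted above.

```python
# Percentages of time in each microphone mode, as quoted in the text.
binaural_usage_pct = {
    "omnidirectional, both ears": 78,
    "asymmetric": 14,
    "directional, both ears": 8,
}

assert sum(binaural_usage_pct.values()) == 100

at_least_one_directional = (
    binaural_usage_pct["asymmetric"] + binaural_usage_pct["directional, both ears"]
)
print(f"At least one ear directional: {at_least_one_directional}% of the time")
```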

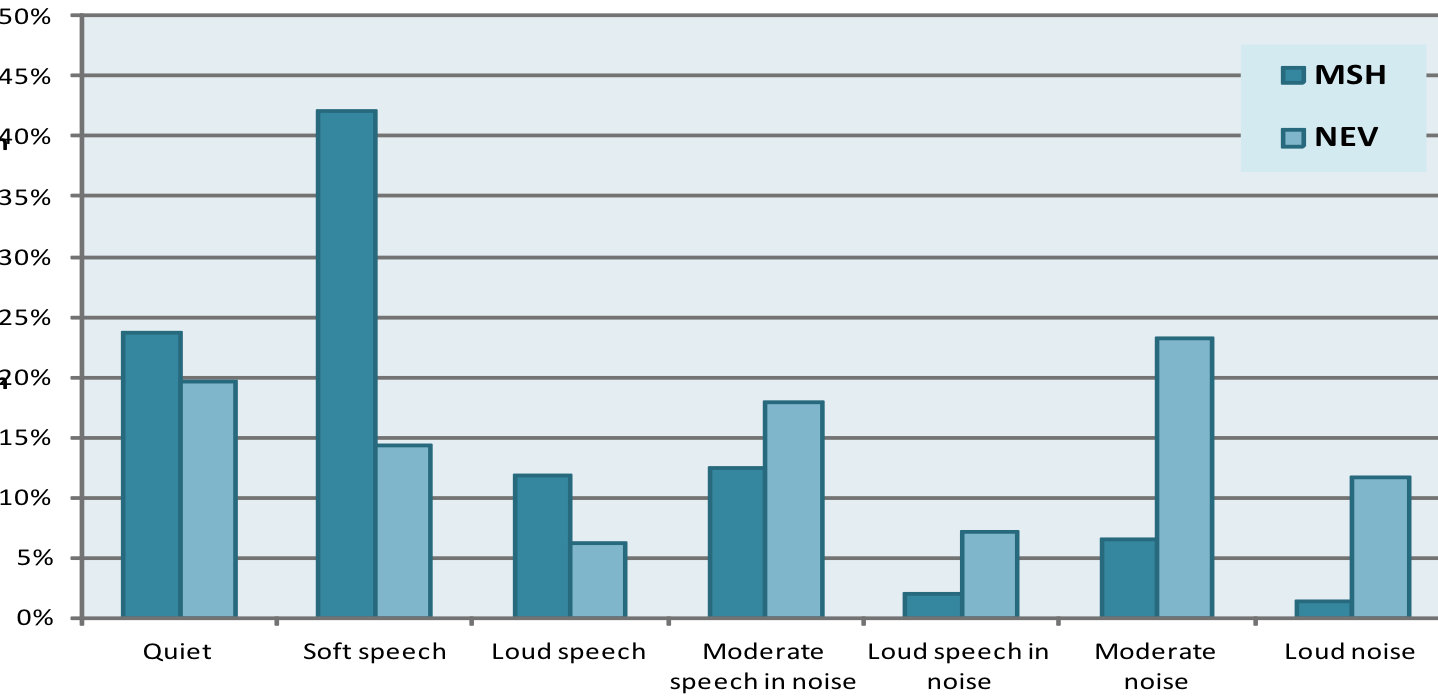

Cases. We know that not all patients fall into the average range, so I asked our colleagues at Global Audiology to produce some case studies from our trial. In Figure 7 you will see data from two individuals that we’ll refer to as Mr. Dark Blue and Mr. Light Blue.

Figure 7. Two case studies (light blue and dark blue): top graph is percentage time in different listening situations; bottom graph is percentage time in different microphone modes.

Mr. Dark Blue spends a fair amount of time in quiet and soft speech situations, and very little time in loud speech and noise-only situations. In contrast, Mr. Light Blue experiences a variety of listening situations every day. We compared the two to look at the automatic Binaural Directionality switching. We found that Mr. Dark Blue, who spent most of his time in quiet and speech-only situations, had both ears in omnidirectional mode the majority of the time. There was no need to cut out sounds from the sides and the back, because there wasn't much background noise in his listening situations. In comparison, Mr. Light Blue's hearing aids used a variety of microphone modes, because he needed directionality for improved understanding in his listening situations. I found it interesting that, much of the time, Mr. Light Blue's hearing aids had the right ear in omni and the left ear in directional. We then discovered that he is a truck driver. He sits on the left-hand side of the truck, and his passenger is always on the right. For eight hours a day, the hearing aids keep the right ear in omnidirectional, to pick up the person in the passenger seat, and the left ear in directional, to reduce the background noise.

Challenging Acoustic Conditions. We did more research into the benefits of Binaural Directionality by analyzing two challenging acoustic listening situations. In the first scenario, speech was coming from the front and there was a lot of noise from the back (Speech Front, Noise Rear). In the second scenario, speech was coming from the right, with a lot of noise from the left (Speech Right, Noise Left).

Let’s first look at the Speech Front, Noise Rear condition. Natural Directionality II would provide a better signal-to-noise ratio at the right ear (directional) while monitoring the surroundings with the left ear (omni), but in this condition there isn't any speech coming from the back to monitor. Binaural Directionality would switch both ears to directional, because there's no speech to understand from the sides or the back, and both ears would benefit from a directional input. We found that individuals did perform better with Binaural Directionality in this situation, with both ears directional. This supports moving individuals from Natural Directionality to Binaural Directionality. Of course, a user in omnidirectional mode would do poorly in this situation, because the directional benefit here is substantial.

Now let’s look at the second condition, Speech Right, Noise Left. This situation might occur at a dinner party where you are trying to understand the person sitting on your right. Natural Directionality is usually set up so that the right ear is directional and the left ear is omni. With speech on the right and noise on the left, the directional right ear attenuates the speech arriving from the side, so the speech must travel around the person's head to reach the omnidirectional left ear. In Binaural Directionality mode, the hearing aids detect that the speech signal is coming from the right rather than from the front, and they automatically switch the left ear to directional and the right ear to omnidirectional. With the right ear in omnidirectional mode, the speech reaches it directly, without being reduced by the head shadow effect. Because there is so much background noise in this situation, most other hearing aids without this processing would likely switch both ears to directional, and with both ears directional, the person would barely detect the speech signal from the right. We found an advantage for Binaural Directionality over Natural Directionality when speech comes from the right, because the ear facing the talker is in omnidirectional mode.
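The switching behavior described for these two conditions, together with the quiet listening of Mr. Dark Blue, can be summarized as a small rule table. The sketch below is a hypothetical simplification for illustration only: the function names, the `noisy` flag, and the direction labels are assumptions, and it is not ReSound's actual classifier, which must first detect the environment and the speech direction from the microphone signals.

```python
from enum import Enum
from typing import Optional, Tuple


class Mode(Enum):
    OMNI = "omni"
    DIRECTIONAL = "directional"


def natural_directionality_modes() -> Tuple[Mode, Mode]:
    """Typical fixed asymmetric fitting: left ear omni, right ear directional."""
    return Mode.OMNI, Mode.DIRECTIONAL


def binaural_modes(noisy: bool, speech_direction: Optional[str]) -> Tuple[Mode, Mode]:
    """Return (left, right) microphone modes for a simplified binaural rule set.

    noisy: whether significant background noise is present (hypothetical flag).
    speech_direction: "front", "left", "right", or None if no speech is detected.
    """
    if not noisy:
        # Quiet or speech in quiet: no need to suppress the sides and back.
        return Mode.OMNI, Mode.OMNI
    if speech_direction == "front":
        # Speech front, noise elsewhere: both ears benefit from directionality.
        return Mode.DIRECTIONAL, Mode.DIRECTIONAL
    if speech_direction == "right":
        # The ear facing the talker stays omni, avoiding the head shadow;
        # the opposite ear goes directional to reduce the noise.
        return Mode.DIRECTIONAL, Mode.OMNI
    if speech_direction == "left":
        return Mode.OMNI, Mode.DIRECTIONAL
    # Assumption (not covered in the text): with no speech to focus on,
    # stay omnidirectional in both ears to preserve awareness.
    return Mode.OMNI, Mode.OMNI


# Speech Right, Noise Left (the dinner party, or the truck driver's passenger).
left, right = binaural_modes(noisy=True, speech_direction="right")
print(f"Binaural Directionality: left={left.value}, right={right.value}")
left, right = natural_directionality_modes()
print(f"Natural Directionality:  left={left.value}, right={right.value}")
```

For Speech Right, Noise Left, the binaural rule puts the left ear in directional and the right ear in omni, which is the mirror image of the fixed asymmetric fitting.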

Binaural Directionality II with Spatial Sense

Binaural Directionality II with Spatial Sense takes spatial awareness cues in the listening environment into account. Some natural cues are diminished once we put hearing aids on the ears. People wearing hearing aids often report that sounds seem to come from inside the head rather than from around it, or that they hear well but have no idea where sounds are coming from. That's because some natural directional cues are lost due to the hearing aid's signal processing.

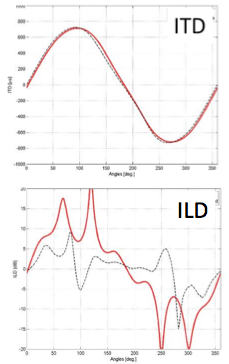

Two types of cues we use for localization are interaural timing differences and interaural level differences.

Interaural timing differences (ITDs) are carried mainly by low-frequency sound. If a sound originates on the right side of the head, it reaches the right ear before the left ear. This low-frequency cue mainly helps us tell whether sounds come from the right or the left, and it is less useful for telling whether they come from the front or the back.
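To put numbers on the timing cue, the sketch below uses Woodworth's classic spherical-head approximation, ITD ≈ (r/c)(θ + sin θ), where θ is the lateral angle of the source. The head radius (8.75 cm) and speed of sound (343 m/s) are assumed typical values, and a spherical head is a simplification of a real one. The sketch also illustrates the point above: a source 30° in front and one 150° behind (mirror images front-to-back) produce the same ITD, so timing alone separates left from right but not front from back.

```python
import math

HEAD_RADIUS_M = 0.0875      # assumed average adult head radius (8.75 cm)
SPEED_OF_SOUND_M_S = 343.0  # speed of sound in air at roughly 20 degrees C


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth spherical-head estimate of the interaural time difference.

    azimuth_deg: 0 = straight ahead, +90 = directly to the right,
    180 = directly behind, -90 = directly to the left.
    A positive result means the sound reaches the right ear first.
    """
    az = math.radians(azimuth_deg)
    # Fold the azimuth onto the lateral angle (-90..+90 degrees). Sources that
    # mirror each other front-to-back share a lateral angle, hence the same ITD.
    lateral = math.asin(math.sin(az))
    return (HEAD_RADIUS_M / SPEED_OF_SOUND_M_S) * (lateral + math.sin(lateral))


for az in (0, 30, 90, 150, 180, -90):
    print(f"{az:>4} deg: {itd_seconds(az) * 1e6:7.1f} microseconds")
```

Under these assumptions the largest ITD, for a source directly to one side, is roughly 0.66 ms, which is why the cue is most informative for low frequencies whose periods are longer than that delay.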

If a talker on the right produces a high-frequency sound, that sound will be louder at the right ear than at the left ear, because the head shadows the far ear. This interaural level difference (ILD) allows you to determine whether sounds are coming from the front or the back.

When we put a hearing aid on the ear, especially a BTE or a Receiver-In-the-Ear (RIE) instrument, the high-frequency cues created by the directional characteristics of the pinna and concha are lost. Placing the hearing aid microphones at the top of the pinna, instead of having sound enter the auditory system at the eardrum, removes much of the high-frequency interaural level difference, and this is what we hope to regain with Spatial Sense.

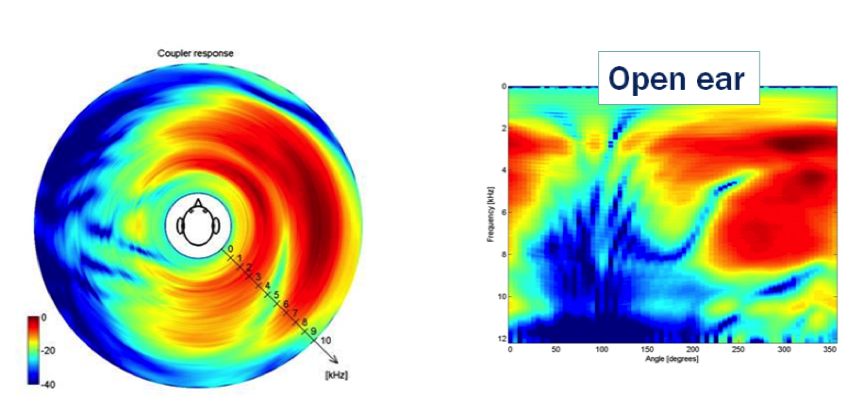

In Figure 8, we are looking at the natural ear: the person's head is in the middle of a sound booth, and sounds come from all directions around the head. In the graph on the left, low frequencies are toward the center and higher frequencies toward the outer edge of the circle. Azimuth is the angle around the head, measured counter-clockwise, and amplitude is shown by color. Measuring the intensity at the right ear, we see an increase in high-frequency sound energy when the sound originates from the right and a decrease when it originates from the left. You can also see this in the rectangular graph on the right, where the vertical axis is frequency (low frequencies at the top) and the horizontal axis is azimuth (counter-clockwise). Notice the blue characteristics: these are the cues that carry the interaural level differences needed for better localization, or spatialization, of where sounds are coming from.

Figure 8. Spectral characteristics: Natural ear.

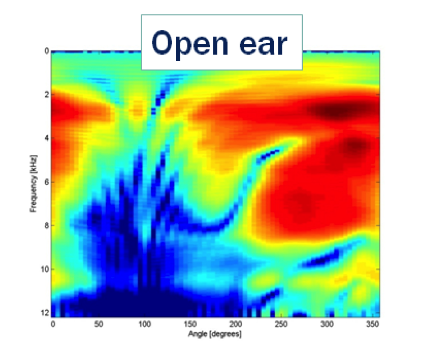

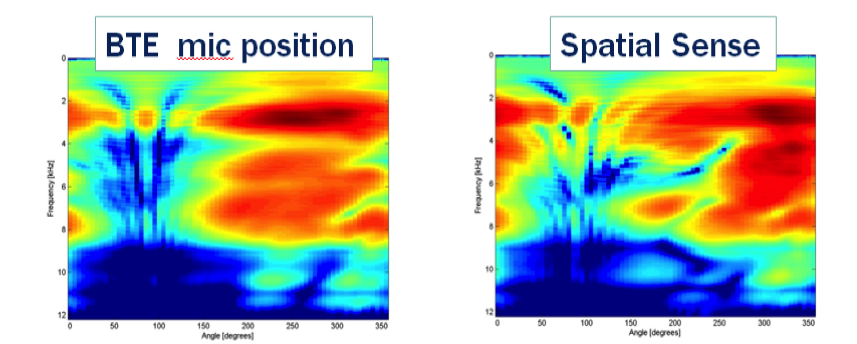

Now we can compare this open-ear characteristic with that of a BTE or RIE instrument, where the microphone sits above the ear. In Figure 9, compare the “open ear” graph (left) with the “BTE mic position” graph (center). Notice how dramatically the blue spatial characteristics change. With a BTE/RIE, spectral cues are distorted as the signal travels to the top of the pinna, where the device microphone is located, and the pinna, concha, and ear canal resonances and shadows are eliminated. The graph on the far right shows the Spatial Sense processing correction: spectral cues lost due to BTE/RIE microphone placement are applied digitally, so the spectral cues more closely resemble those of the open ear.

Figure 9. Spectral characteristics of the open ear (left) are lost due to BTE/RIE microphone placement (center), and digitally applied with Spatial Sense (right).

Figure 10 shows how well ITDs are preserved compared to ILDs, and you can see that our ITDs are maintained. The desired open-ear response is the red line, and ReSound e2e is the black line. With Directional Mix processing, the dotted black line overlaps the red line. Because both ears are omnidirectional in the low frequencies, the timing cues are preserved between the two devices. As a result, low-frequency localization cues are available regardless of which directional mode ReSound signal processing selects.

The interaural level difference lines, by contrast, do not overlap well with Binaural Directionality. Binaural Directionality II starts providing those cues again: in BTE and RIE hearing instruments, pinna restoration cues are applied to the signal processing in both ears, restoring interaural level differences to the hearing aid output.

Figure 10. ITD preserved: Directional Mix processing.

There's a second step we need to perform, related to compression in the two instruments. When each instrument compresses independently, compression substantially reduces the interaural level difference. Correcting this requires wireless communication between the two hearing aids so that they can apply a coordinated gain correction.

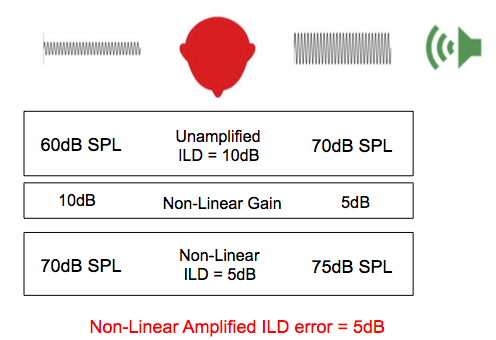

Let’s work through this scenario numerically (Figure 11). The sound source is on the person's left side, so it is louder at the left ear than at the right: here, 70 dB at the left ear and 60 dB at the right, an ILD of 10 dB, which is common for a high-frequency sound. With nonlinear gain at a 2:1 compression ratio, the 60 dB side receives more gain than the 70 dB side, so the listener with hearing loss receives an ILD of only 5 dB instead of 10 dB, which causes localization problems.

Figure 11. Non-Linear amplification and interaural level difference (ILD).
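The arithmetic in the Figure 11 example can be reproduced with a simple static compressor model. The kneepoint (45 dB) and the gain below it (20 dB) are hypothetical values chosen only for illustration; as long as both inputs sit above the kneepoint, a 2:1 ratio halves the 10 dB input ILD regardless of those choices.

```python
def compressed_output_db(input_db: float, gain_below_knee_db: float = 20.0,
                         kneepoint_db: float = 45.0, ratio: float = 2.0) -> float:
    """Output level of a simple static compressor (hypothetical settings).

    Below the kneepoint the gain is constant (linear amplification); above it,
    each `ratio` dB of input change produces 1 dB of output change.
    """
    if input_db <= kneepoint_db:
        return input_db + gain_below_knee_db
    return kneepoint_db + gain_below_knee_db + (input_db - kneepoint_db) / ratio


# Source on the listener's left: 70 dB at the left microphone, 60 dB at the right.
left_in, right_in = 70.0, 60.0
left_out = compressed_output_db(left_in)
right_out = compressed_output_db(right_in)

print(f"gain left  = {left_out - left_in:.1f} dB")    # less gain on the louder side
print(f"gain right = {right_out - right_in:.1f} dB")  # more gain on the softer side
print(f"input ILD  = {left_in - right_in:.1f} dB")
print(f"output ILD = {left_out - right_out:.1f} dB")
```

With these settings the left ear receives 7.5 dB of gain and the right ear 12.5 dB, so the 10 dB input ILD becomes 5 dB at the output, matching the example.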

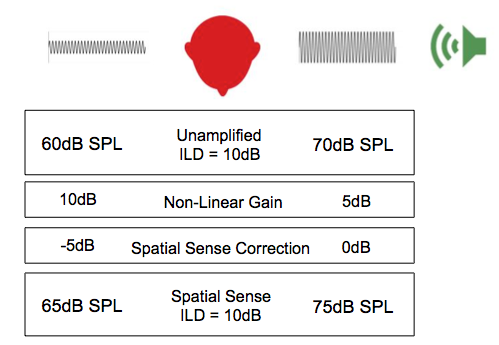

With Spatial Sense, we have a compression compensation that mimics the inhibitory function of the auditory system (Figure 12). Instead of applying nonlinear gain independently at each ear, the hearing aids now recognize that the difference between the two ears at the hearing aid microphones is 10 dB. They therefore correct the high-frequency signal so that the 10 dB interaural level difference is maintained while audibility is still provided. This is possible because the two hearing aids make level measurements and compare them wirelessly to determine what gain to provide.

Figure 12. Spatial Sense mimics inhibitory function.
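One generic way to keep the ILD intact is linked compression: the two devices share their input levels, derive one gain from a common level, and apply that same gain to both ears. The sketch below is a hypothetical illustration of that general idea, not ReSound's Spatial Sense algorithm; basing the shared gain on the average of the two input levels is an assumption, and the kneepoint and gain values are illustrative placeholders.

```python
from typing import Tuple


def compressor_gain_db(level_db: float, gain_below_knee_db: float = 20.0,
                       kneepoint_db: float = 45.0, ratio: float = 2.0) -> float:
    """Gain of a simple static compressor (hypothetical settings)."""
    if level_db <= kneepoint_db:
        return gain_below_knee_db
    # Above the kneepoint, gain falls by (1 - 1/ratio) dB per dB of input.
    return gain_below_knee_db - (level_db - kneepoint_db) * (1.0 - 1.0 / ratio)


def independent_outputs_db(left_in: float, right_in: float) -> Tuple[float, float]:
    # Each hearing aid computes its own gain from its own input level.
    return left_in + compressor_gain_db(left_in), right_in + compressor_gain_db(right_in)


def linked_outputs_db(left_in: float, right_in: float) -> Tuple[float, float]:
    # Assumed wireless exchange: both devices agree on one shared level
    # (here the mean of the two inputs) and apply the same gain to both ears.
    shared_gain = compressor_gain_db((left_in + right_in) / 2.0)
    return left_in + shared_gain, right_in + shared_gain


left_in, right_in = 70.0, 60.0  # source on the listener's left, 10 dB ILD
for name, fn in (("independent", independent_outputs_db), ("linked", linked_outputs_db)):
    left_out, right_out = fn(left_in, right_in)
    print(f"{name:>11}: L={left_out:.1f} dB, R={right_out:.1f} dB, "
          f"ILD={left_out - right_out:.1f} dB")
```

Independent compression yields 77.5 dB and 72.5 dB (a 5 dB ILD), while the linked version yields 80 dB and 70 dB, preserving the 10 dB ILD. Averaging is one compromise: basing the shared gain on the louder ear would favor comfort at the cost of audibility at the far ear, and basing it on the softer ear would do the reverse.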

So, compression introduces ILD errors, and with Spatial Sense we see fewer of them. With spatial cues restored, users make fewer errors in judging whether sounds are coming from the front or the back; remember that these cues mainly help with front versus back, not so much left versus right. Without the Spatial Sense cues, as in the original version of Binaural Directionality, users make front/back errors about 33% of the time. With Spatial Sense, those errors drop to about 20%.
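Put another way, using the approximate figures quoted above, going from about 33% to about 20% front/back errors is an absolute drop of about 13 percentage points, or a relative reduction of roughly 40%:

$$
\frac{33\% - 20\%}{33\%} = \frac{13}{33} \approx 0.39
$$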

With Spatial Sense, sound quality showed a statistically significant improvement. This is especially true for music and female voices, because people experience surround awareness: they hear sounds coming from where they naturally would in the environment, instead of simply being delivered by the hearing aids.

ReSound Directional Focus

In summary, ReSound’s directional focus is to provide audibility of desired signals; to provide audibility of important signals; not to remove the listener from the acoustic environment; and to provide high sound quality.

Today, I’ve provided an overview of the different signal processing that ReSound uses for directionality, including: SoftSwitching, which was our original automatic switching mechanism; Natural Directionality II, the asymmetric setting; Binaural Directionality, which uses device-to-device communication to change the directional mode depending on the situation; and Binaural Directionality II with Spatial Sense, which brings back spatial awareness cues so that people have improved sound quality and a three-dimensional listening space with their hearing aids. Thank you for attending this course. For more information, please visit www.resound.com or contact your ReSound representative.

References

Cord, M.T., Walden, B.E., Surr, R.K., & Dittberner, A.B. (2007). Field evaluation of an asymmetric directional microphone fitting. Journal of the American Academy of Audiology, 18, 245-256.

Further references can be found in the handout.

Citation

Nelson, J. (2016, April). ReSound's directional philosophy. AudiologyOnline, Article 16798. Retrieved from www.audiologyonline.com